# -*- coding: utf-8 -*-

import datetime
from urllib import request, parse

import xlwt
from bs4 import BeautifulSoup

# Record the start time so the total run time can be reported at the end.
starttime = datetime.datetime.now()

# Boss直聘 search URL; the city defaults to Hangzhou.
url = r'https://www.zhipin.com/job_detail/?scity=101210100'

def read_page(url, page_num, keyword):
    """Fetch one page of search results while imitating a browser."""
    page_headers = {
        'Host': 'www.zhipin.com',
        # The two adjacent string literals are concatenated into one value.
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36 '
                      'Chrome/45.0.2454.85 Safari/537.36 115Browser/6.0.3',
        'Connection': 'keep-alive'
    }
    # Parameters the browser sends with the request.
    page_data = parse.urlencode([
        ('ka', 'page-' + str(page_num)),
        ('page', page_num),
        ('query', keyword)
    ])
    req = request.Request(url, headers=page_headers)
    # Supplying data makes urllib send the parameters as a POST body.
    page = request.urlopen(req, data=page_data.encode('utf-8')).read()
    page = page.decode('utf-8')
    return page

if __name__ == '__main__':
    print('**********************************Starting the crawl**********************************')
    keyword = input('Enter the job title to search for: ')
    workbook = xlwt.Workbook()
    sheet = workbook.add_sheet('sheet1')
    i = 0  # next free row in the sheet
    for j in range(1, 5):  # result pages 1 to 4
        # Name the parser explicitly so bs4 does not have to guess one.
        soup = BeautifulSoup(read_page(url, j, keyword), 'html.parser')
        for link in soup.select('.company-text'):
            sheet.write(i, 0, link.get_text())
            i = i + 1
    workbook.save("D:\\resultsLatest.xls")
    endtime = datetime.datetime.now()
    elapsed = (endtime - starttime).seconds
    print('Total time: %s s' % elapsed)
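The script passes its encoded parameters to `urlopen` as `data`, which makes urllib send them as a POST body rather than as part of the URL. The sketch below only builds the request objects, without touching the network, to show the difference. The helper `build_search_request` and its `as_post` switch are hypothetical names introduced for illustration, and whether the site prefers GET or POST is not something the original states.

```python
from urllib import parse, request

# Same base URL as the script above.
BASE_URL = 'https://www.zhipin.com/job_detail/?scity=101210100'


def build_search_request(url, page_num, keyword, as_post=True):
    """Build (but do not send) the request for one result page.

    Hypothetical helper: as_post exists only to contrast the two styles.
    """
    params = parse.urlencode([
        ('ka', 'page-' + str(page_num)),
        ('page', page_num),
        ('query', keyword),
    ])
    headers = {'User-Agent': 'Mozilla/5.0'}
    if as_post:
        # With data present, urllib issues a POST and puts params in the body.
        return request.Request(url, data=params.encode('utf-8'), headers=headers)
    # Without data, append the params to the existing query string (GET).
    separator = '&' if '?' in url else '?'
    return request.Request(url + separator + params, headers=headers)


post_req = build_search_request(BASE_URL, 2, 'python')
get_req = build_search_request(BASE_URL, 2, 'python', as_post=False)
print(post_req.get_method(), post_req.data)
print(get_req.get_method(), get_req.full_url)
```

If a page only responds to query-string parameters, the GET form is the one to try. Otherwise the POST form matches what the script already does.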

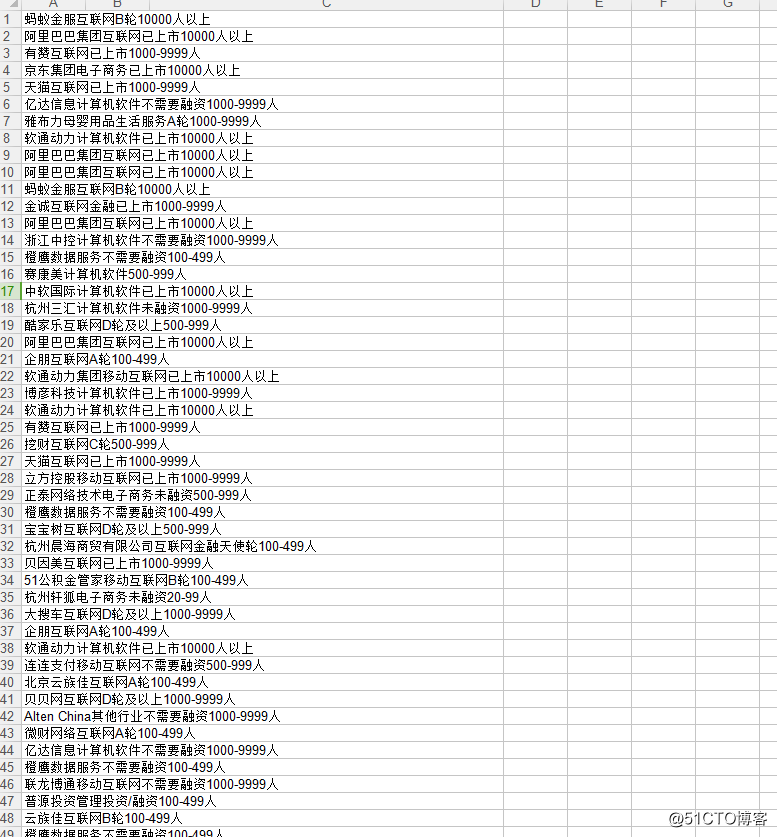

2. Crawl results

Original article: http://blog.51cto.com/12831900/2119687