
I. Introduction

For the volume types Kubernetes supports, see: https://kubernetes.io/docs/concepts/storage/volumes/

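Kubernetes manages storage through two API resources: PersistentVolume and PersistentVolumeClaim.

PersistentVolume (PV): a piece of storage in the cluster, provisioned by an administrator. Just as a node is a cluster resource, a PV is a cluster resource too. It captures the storage type, size, and access modes. Its lifecycle is independent of any Pod: deleting a Pod that uses the PV does not affect the PV.

PersistentVolumeClaim (PVC): a user's request for storage. It is analogous to a Pod: Pods consume node resources, and PVCs consume PV resources. A Pod can request specific amounts of resources (CPU and memory); a PVC can request a PV of a specific size and access mode.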

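PVs can be provisioned in two ways: statically or dynamically.

Static PVs: the cluster administrator creates a number of PVs, each carrying the details of real storage that is available to cluster users.

Dynamic PVs: when none of the static PVs created by the administrator matches a user's PersistentVolumeClaim, the cluster may provision a volume dynamically for that PVC. This is driven by StorageClasses: the PVC must request a storage class, and the administrator must have created and configured that class for dynamic provisioning to happen.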
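The static-then-dynamic order described above can be sketched as a toy Python model. This is not the Kubernetes PV controller. The PV/PVC classes and bind_or_provision are hypothetical names, and capacities are plain GiB integers. Only the main matching rules are checked: same storage class, the PV offers every requested access mode, enough capacity, and the smallest fitting PV wins. For readability, provisioned PVs are named after the claim here; real ones are named pvc-<claim UID>.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class PV:
    name: str
    capacity_gi: int
    access_modes: Set[str]
    storage_class: str
    claim: Optional[str] = None  # name of the bound PVC, if any


@dataclass
class PVC:
    name: str
    request_gi: int
    access_modes: Set[str]
    storage_class: str


def bind_or_provision(pvc, pvs, provisionable_classes):
    """Return the PV the claim ends up with, or None if it stays Pending."""
    # Static path: unbound PVs of the same class that offer every requested
    # access mode and at least the requested capacity.
    candidates = [
        pv for pv in pvs
        if pv.claim is None
        and pv.storage_class == pvc.storage_class
        and pvc.access_modes <= pv.access_modes
        and pv.capacity_gi >= pvc.request_gi
    ]
    if candidates:
        best = min(candidates, key=lambda pv: pv.capacity_gi)  # smallest fit
        best.claim = pvc.name
        return best
    # Dynamic path: only if the class has a provisioner behind it.
    if pvc.storage_class in provisionable_classes:
        new_pv = PV(f"pvc-{pvc.name}", pvc.request_gi, set(pvc.access_modes),
                    pvc.storage_class, claim=pvc.name)
        pvs.append(new_pv)
        return new_pv
    return None


pvs = [PV("ceph-pv", 1, {"ReadWriteOnce"}, "rbd")]
print(bind_or_provision(PVC("ceph-claim", 1, {"ReadWriteOnce"}, "rbd"), pvs, {"ceph-rbd"}).name)       # ceph-pv
print(bind_or_provision(PVC("ceph-pvc", 1, {"ReadWriteOnce"}, "ceph-rbd"), pvs, {"ceph-rbd"}).name)   # pvc-ceph-pvc
```

The first claim binds the static PV. The second names a class with no matching static PV, so the model falls through to provisioning.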

More on PersistentVolumes in Kubernetes: https://kubernetes.io/docs/concepts/storage/persistent-volumes/

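II. PersistentVolume access modes

ReadWriteOnce - the volume can be mounted read-write by a single node

ReadOnlyMany - the volume can be mounted read-only by many nodes

ReadWriteMany - the volume can be mounted read-write by many nodes

On the CLI, the access modes are abbreviated as:

RWO - ReadWriteOnce

ROX - ReadOnlyMany

RWX - ReadWriteMany

Important: a volume can only be mounted using one access mode at a time, even if it supports several.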
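Below is a small sketch of the abbreviations and of the rule that a PV must offer every mode a claim requests. The helper names are hypothetical. The RBD mode list (RWO and ROX, no RWX) follows the Kubernetes access-mode table.

```python
# Abbreviations kubectl shows in the ACCESS MODES column.
ABBREVIATIONS = {"ReadWriteOnce": "RWO", "ReadOnlyMany": "ROX", "ReadWriteMany": "RWX"}


def abbreviate(modes):
    """Render a list of access modes the way kubectl prints them, e.g. 'RWO,ROX'."""
    return ",".join(ABBREVIATIONS[m] for m in modes)


def pv_offers(pv_modes, requested_modes):
    """A PV can satisfy a claim only if it offers every mode the claim requests."""
    return set(requested_modes) <= set(pv_modes)


# An RBD volume supports RWO and ROX, but not RWX.
rbd_modes = ["ReadWriteOnce", "ReadOnlyMany"]
print(abbreviate(rbd_modes))                    # RWO,ROX
print(pv_offers(rbd_modes, ["ReadWriteOnce"]))  # True
print(pv_offers(rbd_modes, ["ReadWriteMany"]))  # False
```

Offering several modes only widens which claims can match. Per the note above, any given mount still uses just one of them.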

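III. Reclaim policies

Retain - manual reclamation

Recycle - basic scrub (rm -rf /thevolume/*)

Delete - the associated storage asset (such as an AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume) is deleted

Currently, only NFS and HostPath support Recycle. AWS EBS, GCE PD, Azure Disk, and Cinder volumes support Delete.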
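Writing the support matrix above as a lookup makes it easy to see why an RBD-backed PV should not use Recycle. This is an illustrative sketch. The volume-type keys mirror PV spec field names, and the table only covers the types mentioned here. Adding rbd to the Delete set is an assumption, based on the in-tree RBD provisioner (used for dynamic PVs later in this article) deleting the images it creates.

```python
RECYCLE = {"nfs", "hostPath"}
# "rbd" is an assumption: the in-tree RBD provisioner deletes images it created.
DELETE = {"awsElasticBlockStore", "gcePersistentDisk", "azureDisk", "cinder", "rbd"}


def supports(volume_type, policy):
    """Return True if the volume type can honor the given reclaim policy."""
    if policy == "Retain":
        return True  # nothing happens automatically, so every type accepts it
    if policy == "Recycle":
        return volume_type in RECYCLE
    if policy == "Delete":
        return volume_type in DELETE
    raise ValueError(f"unknown reclaim policy: {policy!r}")


for policy in ("Retain", "Recycle", "Delete"):
    print(policy, supports("rbd", policy))
```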

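IV. PersistentVolume (PV) phases

Available - a free resource that is not yet bound to any claim

Bound - the PV has been bound to a PVC

Released - the PVC has been deleted, but the PV has not yet been reclaimed

Failed - automatic reclamation according to the PV's reclaim policy failed

V. PersistentVolumeClaim (PVC) phases

Pending - waiting to be bound to a PV

Bound - the PVC has been bound to a PV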
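The PV phases and the reclaim policy fit together as a small state machine. The sketch below is a simplified model, not controller code. The event names are hypothetical, and "Deleted" is not a real phase: it stands for the PV object being removed. A PVC has only the two phases listed. It is Pending until the claim_bound transition happens and Bound afterwards.

```python
def next_phase(phase, event, policy="Retain", reclaim_ok=True):
    """Toy PV phase transitions; 'Deleted' marks the PV object being gone."""
    if phase == "Available" and event == "claim_bound":
        return "Bound"
    if phase == "Bound" and event == "claim_deleted":
        return "Released"
    if phase == "Released" and event == "reclaim":
        if not reclaim_ok:
            return "Failed"
        return {"Retain": "Released",    # waits for an administrator
                "Recycle": "Available",  # scrubbed and offered to new claims
                "Delete": "Deleted"}[policy]
    raise ValueError(f"no transition from {phase!r} on {event!r}")


phase = "Available"
for event in ("claim_bound", "claim_deleted", "reclaim"):
    phase = next_phase(phase, event, policy="Recycle")
    print(phase)
```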

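VI. Install ceph-common on all Kubernetes nodes

The in-tree RBD volume plugin runs the rbd command on the node to map images. Every node that may run a Pod with an RBD volume therefore needs the ceph-common package, which provides that command.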

```
# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
# vim /etc/yum.repos.d/ceph.repo
[Ceph]
name=Ceph packages for $basearch
baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/$basearch
enabled=1
gpgcheck=1
priority=1
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[Ceph-noarch]
name=Ceph noarch packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch
enabled=1
gpgcheck=1
priority=1
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS
enabled=1
gpgcheck=1
priority=1
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
# yum makecache
# yum -y install ceph-common
```
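All three repo sections must point at the same Ceph release, so it can help to generate the file rather than edit it by hand. Below is a minimal sketch using Python's configparser. The ceph_repo function and its release parameter are hypothetical conveniences; the mirror URLs are the ones used above.

```python
import configparser
import io


def ceph_repo(release="mimic", mirror="https://mirrors.aliyun.com/ceph"):
    """Render a ceph.repo with the three sections used above, all pinned to one release."""
    cfg = configparser.ConfigParser(interpolation=None)  # write values verbatim
    common = {"enabled": "1", "gpgcheck": "1", "priority": "1",
              "gpgkey": f"{mirror}/keys/release.asc"}
    sections = {
        "Ceph": ("Ceph packages for $basearch", "$basearch"),
        "Ceph-noarch": ("Ceph noarch packages", "noarch"),
        "ceph-source": ("Ceph source packages", "SRPMS"),
    }
    for section, (name, subdir) in sections.items():
        cfg[section] = {"name": name, "baseurl": f"{mirror}/rpm-{release}/el7/{subdir}", **common}
    buf = io.StringIO()
    cfg.write(buf, space_around_delimiters=False)  # key=value, as yum files usually look
    return buf.getvalue()


print(ceph_repo())
```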

VII. Configure a static PV

1. Create a 1 GiB image in the rbd pool, the default pool of the rbd CLI (on the Ceph cluster)

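```
# ceph osd pool create rbd 128
pool 'rbd' created
# ceph osd lspools
# rbd create ceph-image -s 1G --image-feature layering      ## create a 1 GiB image with only the layering feature
# rbd ls
ceph-image
# ceph osd pool application enable rbd rbd                  ## tag the pool for use by RBD
enabled application 'rbd' on pool 'rbd'
# rbd info ceph-image
rbd image 'ceph-image':
	size 1 GiB in 256 objects
	order 22 (4 MiB objects)
	id: 13032ae8944a
	block_name_prefix: rbd_data.13032ae8944a
	format: 2
	features: layering
	op_features:
	flags:
	create_timestamp: Sun Jul 29 13:00:36 2018
```

Only the layering feature is requested because older kernel RBD clients, which map the image on the node, refuse images that use features such as exclusive-lock, object-map, fast-diff, or deep-flatten. The application name given to ceph osd pool application enable is the client type (rbd), not the image name.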
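The rbd info output can be checked by hand. With order 22, each object is 2^22 bytes (4 MiB), so a 1 GiB image spans 2^30 / 2^22 = 256 objects. Here is the same arithmetic as a short Python helper (rbd_object_count is a hypothetical name):

```python
def rbd_object_count(size_bytes, order):
    """An RBD image is striped over objects of 2**order bytes; count them, rounding up."""
    object_size = 1 << order
    return -(-size_bytes // object_size)  # ceiling division


GiB = 1 << 30
print(rbd_object_count(1 * GiB, 22))  # 256, matching "size 1 GiB in 256 objects"
print((1 << 22) // (1 << 20))         # 4, i.e. "4 MiB objects"
```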

2. Configure the Ceph secret (Ceph + Kubernetes)

```
# ceph auth get-key client.admin | base64      ## get the client.admin key, base64-encoded (on the Ceph cluster)
QVFDOUgxdGJIalc4SWhBQTlCOXRNUCs5RUV3N3hiTlE4NTdLVlE9PQ==
# vim ceph-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
type: kubernetes.io/rbd
data:
  key: QVFDOUgxdGJIalc4SWhBQTlCOXRNUCs5RUV3N3hiTlE4NTdLVlE9PQ==
# kubectl create -f ceph-secret.yaml
secret/ceph-secret created
# kubectl get secret ceph-secret
NAME          TYPE                DATA   AGE
ceph-secret   kubernetes.io/rbd   1      3s
```
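The data.key field of a kubernetes.io/rbd Secret holds the base64 encoding of the raw Ceph key, which is why the key is piped through base64 above. The minimal sketch below builds such a Secret as a Python dict. rbd_secret_manifest is a hypothetical helper, and the sample key is the client.kube key shown later in this article. The last lines show the double-encoding mistake to avoid.

```python
import base64


def rbd_secret_manifest(name, raw_ceph_key, namespace=None):
    """Build a kubernetes.io/rbd Secret as a dict; data values are base64 of the raw key."""
    metadata = {"name": name}
    if namespace:
        metadata["namespace"] = namespace
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": metadata,
        "type": "kubernetes.io/rbd",
        "data": {"key": base64.b64encode(raw_ceph_key.encode()).decode()},
    }


# Raw key of client.kube as printed by `ceph auth get client.kube`.
kube_key = "AQB2cFxbYZtRBhAAi6xcvhEW7SYx3PlBY/0O0Q=="
secret = rbd_secret_manifest("ceph-kube-secret", kube_key, namespace="ceph")
print(secret["data"]["key"])

# What the consumer sees after Kubernetes decodes data.key: the raw key again.
assert base64.b64decode(secret["data"]["key"]).decode() == kube_key
# Pitfall: encoding an already encoded value leaves base64 text, not the key.
double = base64.b64encode(secret["data"]["key"].encode()).decode()
assert base64.b64decode(double).decode() != kube_key
```

The printed value matches what ceph auth get-key client.kube | base64 produces, since get-key prints the key without a trailing newline.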

3. Create the PV (Kubernetes)

```
# vim ceph-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ceph-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: "rbd"
  rbd:
    monitors:
      - 192.168.100.116:6789
      - 192.168.100.117:6789
      - 192.168.100.118:6789
    pool: rbd
    image: ceph-image
    user: admin
    secretRef:
      name: ceph-secret
    fsType: xfs
    readOnly: false
  persistentVolumeReclaimPolicy: Retain
# kubectl create -f ceph-pv.yaml
persistentvolume/ceph-pv created
# kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
ceph-pv   1Gi        RWO            Retain           Available           rbd                     1m
```

The reclaim policy is Retain rather than Recycle. As noted in the reclaim policy section, only NFS and HostPath support Recycle, so an RBD PV set to Recycle would end up Failed once its claim is released. Here, storageClassName: "rbd" only pairs this PV with a claim that names the same class. No StorageClass object called rbd needs to exist for static binding.

4. Create the PVC (Kubernetes)

```
# vim ceph-claim.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-claim
spec:
  storageClassName: "rbd"
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
# kubectl create -f ceph-claim.yaml
persistentvolumeclaim/ceph-claim created
# kubectl get pvc ceph-claim
NAME         STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
ceph-claim   Bound    ceph-pv   1Gi        RWO            rbd            20s
# kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS   REASON   AGE
ceph-pv   1Gi        RWO            Retain           Bound    default/ceph-claim   rbd                     1m
```

5. Create a Pod (Kubernetes)

```
# vim ceph-pod1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: ceph-pod1
spec:
  containers:
  - name: ceph-busybox
    image: busybox
    command: ["sleep", "60000"]
    volumeMounts:
    - name: ceph-vol1
      mountPath: /usr/share/busybox
      readOnly: false
  volumes:
  - name: ceph-vol1
    persistentVolumeClaim:
      claimName: ceph-claim
# kubectl create -f ceph-pod1.yaml
pod/ceph-pod1 created
# kubectl get pod ceph-pod1
NAME        READY   STATUS    RESTARTS   AGE
ceph-pod1   1/1     Running   0          2m
# kubectl get pod ceph-pod1 -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP            NODE
ceph-pod1   1/1     Running   0          2m    10.244.88.2   node3
```

6. Test

Exec into the Pod and write some data to /usr/share/busybox. Then delete the Pod, create a new one, and check whether the data is still there.

```
# kubectl exec -it ceph-pod1 -- /bin/sh
/ # ls
bin   dev   etc   home  proc  root  sys   tmp   usr   var
/ # cd /usr/share/busybox/
/usr/share/busybox # ls
/usr/share/busybox # echo 'Hello from Kubernetes storage' > k8s.txt
/usr/share/busybox # cat k8s.txt
Hello from Kubernetes storage
/usr/share/busybox # exit
# kubectl delete pod ceph-pod1
pod "ceph-pod1" deleted
# kubectl apply -f ceph-pod1.yaml
pod "ceph-pod1" created
# kubectl get pod -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP            NODE
ceph-pod1   1/1     Running   0          15s   10.244.91.3   node02
# kubectl exec ceph-pod1 -- cat /usr/share/busybox/k8s.txt
Hello from Kubernetes storage
```

The recreated Pod was scheduled on a different node (node02), yet the file is still there. The data lives in the RBD image, not on the node.

VIII. Configure a dynamic PV

1. Create an RBD pool (Ceph)

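```
# ceph osd pool create kube 128
pool 'kube' created
# ceph osd pool application enable kube rbd      ## tag the pool for use by RBD
enabled application 'rbd' on pool 'kube'
```

Since Luminous, Ceph reports a health warning for pools that have no application enabled, so the new pool is tagged for RBD.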
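The 128 passed to ceph osd pool create is the placement-group count. A commonly used rule of thumb from the Ceph documentation, from before the PG autoscaler existed, is (OSDs × 100) / replica count, rounded up to a power of two. That budget covers all pools together. The article does not state the OSD count, so the 3 OSDs with 3 replicas below are an assumption, and suggested_pg_num is a hypothetical helper.

```python
def suggested_pg_num(num_osds, pool_size, target_pgs_per_osd=100):
    """Rule of thumb: (OSDs * target) / replicas, rounded up to a power of two."""
    raw = num_osds * target_pgs_per_osd / pool_size
    pg = 1
    while pg < raw:
        pg *= 2
    return pg


print(suggested_pg_num(3, 3))  # 128 (100 rounded up to a power of two)
```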

2. Authorize the kube user (Ceph)

```
# ceph auth get-or-create client.kube mon 'allow r' osd 'allow class-read, allow rwx pool=kube' -o ceph.client.kube.keyring
# ceph auth get client.kube
exported keyring for client.kube
[client.kube]
	key = AQB2cFxbYZtRBhAAi6xcvhEW7SYx3PlBY/0O0Q==
	caps mon = "allow r"
	caps osd = "allow class-read, allow rwx pool=kube"
```

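Notes:

Ceph uses the term "capabilities" (caps) to describe authorizing an authenticated user to exercise the functionality of the monitors, OSDs, and metadata servers. Capabilities can also restrict access to data within a pool, a namespace within a pool, or a set of pools based on their application tags. A Ceph administrative user sets a user's capabilities when creating or updating the user.

Mon caps: r, w, x.

OSD caps: r, w, x, class-read, class-write. OSD caps can also be restricted to specific pools and namespaces.

For more details, see: http://docs.ceph.com/docs/master/rados/operations/user-management/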
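To see how the pool restriction in client.kube's OSD caps plays out, here is a toy parser that only understands "allow <perms> [pool=<name>]" clauses. It is a hypothetical helper, not Ceph's cap grammar, which also covers profiles, namespaces, object prefixes, and more.

```python
def parse_osd_caps(caps):
    """Split simple 'allow <perms> [pool=<name>]' clauses; real Ceph cap syntax is richer."""
    grants = []
    for clause in caps.split(","):
        words = clause.split()
        if len(words) < 2 or words[0] != "allow":
            raise ValueError(f"unsupported clause: {clause!r}")
        pool = None  # no pool= means the grant applies to all pools
        for word in words[2:]:
            if word.startswith("pool="):
                pool = word[len("pool="):]
        grants.append((words[1], pool))
    return grants


def can_write(caps, pool):
    """True if an rwx-style grant includes 'w' and covers the pool."""
    for perms, granted_pool in parse_osd_caps(caps):
        if set(perms) <= set("rwx") and "w" in perms and granted_pool in (None, pool):
            return True
    return False


kube_caps = "allow class-read, allow rwx pool=kube"
print(parse_osd_caps(kube_caps))     # [('class-read', None), ('rwx', 'kube')]
print(can_write(kube_caps, "kube"))  # True
print(can_write(kube_caps, "rbd"))   # False
```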

3. Create the Ceph secrets (Ceph + Kubernetes)

```
# ceph auth get-key client.admin | base64      ## get the client.admin key, base64-encoded
QVFDOUgxdGJIalc4SWhBQTlCOXRNUCs5RUV3N3hiTlE4NTdLVlE9PQ==
# ceph auth get-key client.kube | base64       ## get the client.kube key, base64-encoded
QVFCMmNGeGJZWnRSQmhBQWk2eGN2aEVXN1NZeDNQbEJZLzBPMFE9PQ==
# vim ceph-kube-secret.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ceph
---
apiVersion: v1
kind: Secret
metadata:
  name: ceph-admin-secret
  namespace: ceph
type: kubernetes.io/rbd
data:
  key: QVFDOUgxdGJIalc4SWhBQTlCOXRNUCs5RUV3N3hiTlE4NTdLVlE9PQ==
---
apiVersion: v1
kind: Secret
metadata:
  name: ceph-kube-secret
  namespace: ceph
type: kubernetes.io/rbd
data:
  key: QVFCMmNGeGJZWnRSQmhBQWk2eGN2aEVXN1NZeDNQbEJZLzBPMFE9PQ==
# kubectl create -f ceph-kube-secret.yaml
namespace/ceph created
secret/ceph-admin-secret created
secret/ceph-kube-secret created
# kubectl get secret -n ceph
NAME                  TYPE                                  DATA   AGE
ceph-admin-secret     kubernetes.io/rbd                     1      13s
ceph-kube-secret      kubernetes.io/rbd                     1      13s
default-token-tq2rp   kubernetes.io/service-account-token   3      13s
```

4. Create a dynamic RBD StorageClass (Kubernetes)

```
# vim ceph-storageclass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-rbd
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/rbd
parameters:
  monitors: 192.168.100.116:6789,192.168.100.117:6789,192.168.100.118:6789
  adminId: admin
  adminSecretName: ceph-admin-secret
  adminSecretNamespace: ceph
  pool: kube
  userId: kube
  userSecretName: ceph-kube-secret
  fsType: xfs
  imageFormat: "2"
  imageFeatures: "layering"
# kubectl create -f ceph-storageclass.yaml
storageclass.storage.k8s.io/ceph-rbd created
# kubectl get sc
NAME                 PROVISIONER         AGE
ceph-rbd (default)   kubernetes.io/rbd   10s
```

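Notes:

storageclass.kubernetes.io/is-default-class: setting this annotation to "true" marks the StorageClass as the default. Any other value, or a missing annotation, is interpreted as false.

monitors: Ceph monitors, comma-separated. Required.

adminId: Ceph client ID that is capable of creating images in the pool. Default is "admin".

adminSecretNamespace: the namespace of adminSecretName. Default is "default".

adminSecretName: the Secret name for adminId. Required. The provided Secret must have type "kubernetes.io/rbd".

pool: Ceph RBD pool. Default is "rbd".

userId: Ceph client ID used to map the RBD image. Default is the same as adminId.

userSecretName: the name of the Ceph Secret that userId uses to map the RBD image. It must exist in the same namespace as the PVC. Required. The provided Secret must have type "kubernetes.io/rbd", for example created this way: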

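```
kubectl create secret generic ceph-secret --type="kubernetes.io/rbd" \
  --from-literal=key="$(ceph auth get-key client.kube)" \
  --namespace=kube-system
```

With --from-literal, kubectl base64-encodes the value itself. Pass the raw key printed by ceph auth get-key, not an already encoded string. This form assumes the command runs on a host with access to the Ceph cluster.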

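fsType: an fsType supported by Kubernetes. Default: "ext4".

imageFormat: Ceph RBD image format, "1" or "2". Default is "1".

imageFeatures: optional; can only be used when imageFormat is set to "2". Currently the only supported feature is layering. Default is "", with no features enabled.

In the kubectl get sc output, the default StorageClass is marked (default).

See: https://kubernetes.io/docs/concepts/storage/storage-classes/#ceph-rbd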
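The parameter rules above can be summarized as a validation function. This sketch rests on a few assumptions: the defaults are the ones listed in these notes and may differ between Kubernetes versions, the function names are hypothetical, and only the checks described here are applied.

```python
DEFAULTS = {"adminId": "admin", "adminSecretNamespace": "default", "pool": "rbd",
            "fsType": "ext4", "imageFormat": "1", "imageFeatures": ""}
REQUIRED = ("monitors", "adminSecretName", "userSecretName")
DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def resolve_rbd_params(params):
    """Apply the documented defaults and checks to a kubernetes.io/rbd parameters map."""
    missing = [key for key in REQUIRED if not params.get(key)]
    if missing:
        raise ValueError(f"missing required parameters: {missing}")
    resolved = {**DEFAULTS, **params}
    resolved.setdefault("userId", resolved["adminId"])  # userId defaults to adminId
    features = [f for f in resolved["imageFeatures"].split(",") if f]
    if features and resolved["imageFormat"] != "2":
        raise ValueError('imageFeatures can only be used with imageFormat "2"')
    if set(features) - {"layering"}:
        raise ValueError(f"unsupported imageFeatures: {features}")
    resolved["monitors"] = resolved["monitors"].split(",")
    return resolved


def is_default_class(annotations):
    """Only the exact string "true" marks a default StorageClass."""
    return annotations.get(DEFAULT_ANNOTATION) == "true"


article_params = {
    "monitors": "192.168.100.116:6789,192.168.100.117:6789,192.168.100.118:6789",
    "adminId": "admin", "adminSecretName": "ceph-admin-secret",
    "adminSecretNamespace": "ceph", "pool": "kube", "userId": "kube",
    "userSecretName": "ceph-kube-secret", "fsType": "xfs",
    "imageFormat": "2", "imageFeatures": "layering",
}
resolved = resolve_rbd_params(article_params)
print(resolved["pool"], resolved["userId"], len(resolved["monitors"]))  # kube kube 3
print(is_default_class({DEFAULT_ANNOTATION: "true"}))                   # True
```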

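5. Create a PersistentVolumeClaim (Kubernetes)

Dynamic volume provisioning is built on the StorageClass API object from the storage.k8s.io API group.

A cluster administrator can define as many StorageClass objects as needed. Each one specifies a volume plugin (also called a provisioner) that provisions volumes, plus the set of parameters passed to that provisioner at provisioning time. An administrator can thus define and expose multiple flavors of storage within a cluster, from the same or different storage systems, each with its own parameters. This design spares end users the complexity and nuances of how storage is provisioned while still letting them choose among several storage options.

Users request dynamically provisioned storage by naming a storage class in their PersistentVolumeClaim.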
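What a provisioner hands back can be sketched as follows. The dict layout is a simplification and provision_pv is a hypothetical name, but two details match real behavior. First, a dynamically provisioned PV is named pvc-<claim UID> (compare the VOLUME column below). Second, its reclaim policy comes from the StorageClass's reclaimPolicy, which defaults to Delete. The claim UID used here is simply read off that PV name.

```python
def provision_pv(claim, storage_class):
    """Sketch of the PV a dynamic provisioner creates for a claim (fields simplified)."""
    return {
        "name": f"pvc-{claim['uid']}",  # provisioned PVs are named after the claim's UID
        "capacity": claim["request"],
        "accessModes": list(claim["accessModes"]),
        "storageClassName": storage_class["name"],
        # StorageClass.reclaimPolicy defaults to Delete when it is not set.
        "persistentVolumeReclaimPolicy": storage_class.get("reclaimPolicy", "Delete"),
        "claimRef": f"{claim['namespace']}/{claim['name']}",
    }


claim = {"uid": "e55fdebe-9487-11e8-b987-000c29e75f2a", "name": "ceph-pvc",
         "namespace": "ceph", "request": "1Gi", "accessModes": ["ReadWriteOnce"]}
pv = provision_pv(claim, {"name": "ceph-rbd"})
print(pv["name"])                           # pvc-e55fdebe-9487-11e8-b987-000c29e75f2a
print(pv["persistentVolumeReclaimPolicy"])  # Delete
```

With Delete, removing the PVC also removes the RBD image in the kube pool. Data in a dynamically provisioned volume therefore does not outlive its claim unless the StorageClass sets reclaimPolicy: Retain.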

```
# vim ceph-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-pvc
  namespace: ceph
spec:
  storageClassName: ceph-rbd
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
# kubectl create -f ceph-pvc.yaml
persistentvolumeclaim/ceph-pvc created
# kubectl get pvc -n ceph
NAME       STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
ceph-pvc   Bound    pvc-e55fdebe-9487-11e8-b987-000c29e75f2a   1Gi        RWO            ceph-rbd       5s
```

The claim requests ReadWriteOnce because the Pod below writes to the volume. RBD also supports ReadOnlyMany, but that mode is meant for read-only consumers.

6. Create a Pod and test

```
# vim ceph-pod2.yaml
apiVersion: v1
kind: Pod
metadata:
  name: ceph-pod2
  namespace: ceph
spec:
  containers:
  - name: ceph-busybox
    image: busybox
    command: ["sleep", "60000"]
    volumeMounts:
    - name: ceph-vol1
      mountPath: /usr/share/busybox
      readOnly: false
  volumes:
  - name: ceph-vol1
    persistentVolumeClaim:
      claimName: ceph-pvc
# kubectl create -f ceph-pod2.yaml
pod/ceph-pod2 created
# kubectl -n ceph get pod ceph-pod2 -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP            NODE
ceph-pod2   1/1     Running   0          3m    10.244.88.2   node03
# kubectl -n ceph exec -it ceph-pod2 -- /bin/sh
/ # echo 'Ceph from Kubernetes storage' > /usr/share/busybox/ceph.txt
/ # exit
# kubectl -n ceph delete pod ceph-pod2
pod "ceph-pod2" deleted
# kubectl apply -f ceph-pod2.yaml
pod/ceph-pod2 created
# kubectl -n ceph get pod ceph-pod2 -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP            NODE
ceph-pod2   1/1     Running   0          2m    10.244.88.2   node03
# kubectl -n ceph exec ceph-pod2 -- cat /usr/share/busybox/ceph.txt
Ceph from Kubernetes storage
```

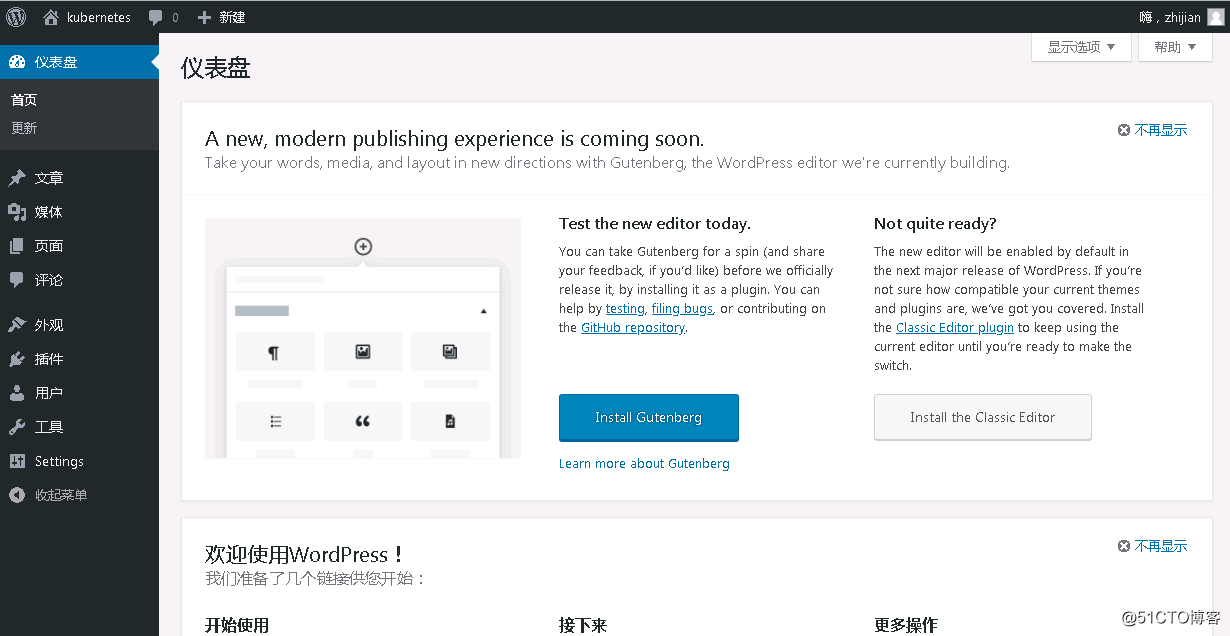

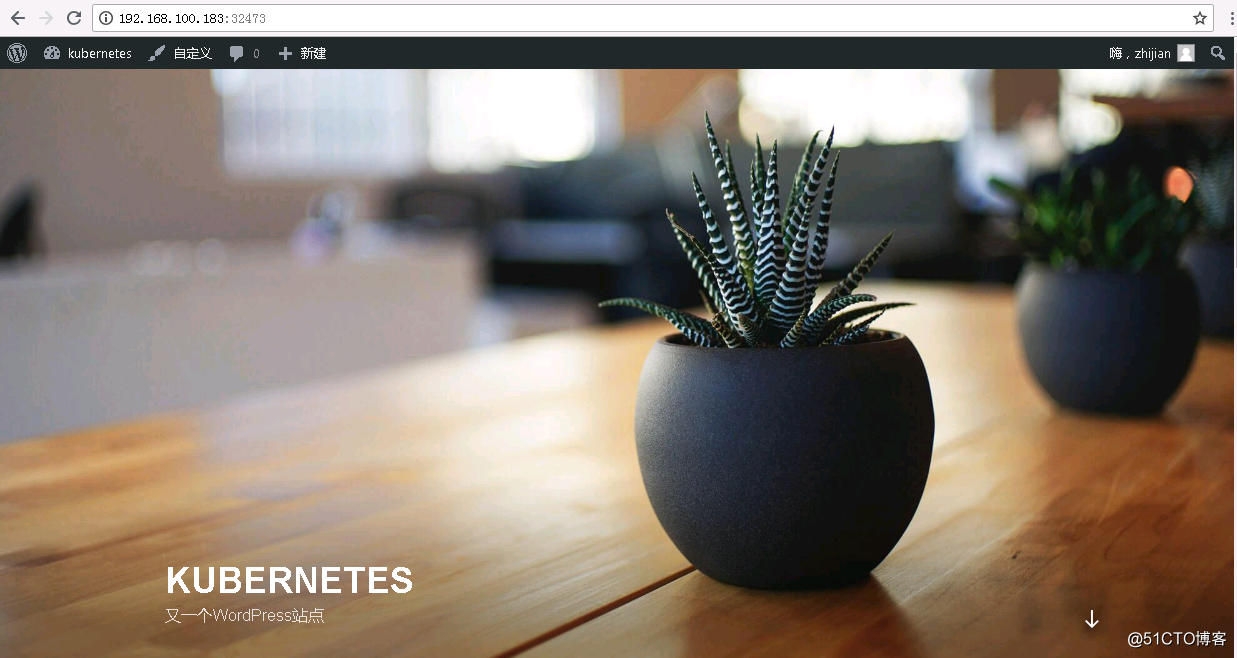

IX. Deploy WordPress and MariaDB with persistent volumes

This section is based on: https://kubernetes.io/docs/tutorials/stateful-application/mysql-wordpress-persistent-volume/

1. Create a Secret for the MariaDB password

```
# kubectl -n ceph create secret generic mariadb-pass --from-literal=password=zhijian
secret/mariadb-pass created
# kubectl -n ceph get secret mariadb-pass
NAME           TYPE     DATA   AGE
mariadb-pass   Opaque   1      37s
```
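kubectl create secret generic stores each --from-literal value base64-encoded, and a secretKeyRef hands the decoded value to the container. Below is a toy model of that round trip. Both helpers are hypothetical and only mimic the observable behavior, not how kubectl or the kubelet are implemented.

```python
import base64


def create_generic_secret(literals):
    """Mimic `kubectl create secret generic --from-literal=k=v`: data holds base64(v)."""
    return {"type": "Opaque",
            "data": {k: base64.b64encode(v.encode()).decode() for k, v in literals.items()}}


def resolve_env(env_spec, secrets):
    """Resolve a container env list; secretKeyRef values arrive decoded in the container."""
    env = {}
    for var in env_spec:
        if "value" in var:
            env[var["name"]] = var["value"]
        else:
            ref = var["valueFrom"]["secretKeyRef"]
            encoded = secrets[ref["name"]]["data"][ref["key"]]
            env[var["name"]] = base64.b64decode(encoded).decode()
    return env


secrets = {"mariadb-pass": create_generic_secret({"password": "zhijian"})}
print(secrets["mariadb-pass"]["data"]["password"])  # emhpamlhbg==
env = resolve_env([{"name": "MYSQL_ROOT_PASSWORD",
                    "valueFrom": {"secretKeyRef": {"name": "mariadb-pass", "key": "password"}}}],
                  secrets)
print(env["MYSQL_ROOT_PASSWORD"])  # zhijian
```

Base64 is an encoding, not encryption: anyone who can read the Secret object can recover the password.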

2. Deploy MariaDB

The MariaDB container mounts the PersistentVolume at /var/lib/mysql.

The MYSQL_ROOT_PASSWORD environment variable reads the database password from the Secret.

```
# vim mariadb.yaml
apiVersion: v1
kind: Service
metadata:
  name: wordpress-mariadb
  namespace: ceph
  labels:
    app: wordpress
spec:
  ports:
    - port: 3306
  selector:
    app: wordpress
    tier: mariadb
  clusterIP: None
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mariadb-pv-claim
  namespace: ceph
  labels:
    app: wordpress
spec:
  storageClassName: ceph-rbd
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-mariadb
  namespace: ceph
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: mariadb
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mariadb
    spec:
      containers:
      - image: 192.168.100.100/library/mariadb:5.5
        name: mariadb
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mariadb-pass
              key: password
        ports:
        - containerPort: 3306
          name: mariadb
        volumeMounts:
        - name: mariadb-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mariadb-persistent-storage
        persistentVolumeClaim:
          claimName: mariadb-pv-claim
# kubectl create -f mariadb.yaml
service/wordpress-mariadb created
persistentvolumeclaim/mariadb-pv-claim created
deployment.apps/wordpress-mariadb created
# kubectl -n ceph get pvc        ## check the PVC
NAME               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mariadb-pv-claim   Bound    pvc-33dd4ae7-9bb0-11e8-b987-000c29e75f2a   2Gi        RWO            ceph-rbd       2m
# kubectl -n ceph get pod        ## check the Pod
NAME                                READY   STATUS    RESTARTS   AGE
wordpress-mariadb-f4d44db9c-fqchx   1/1     Running   0          1m
```

strategy: Recreate matters with a ReadWriteOnce RBD volume. A rolling update would start the new Pod while the old one still has the image mapped, and the new Pod could then fail to mount it.

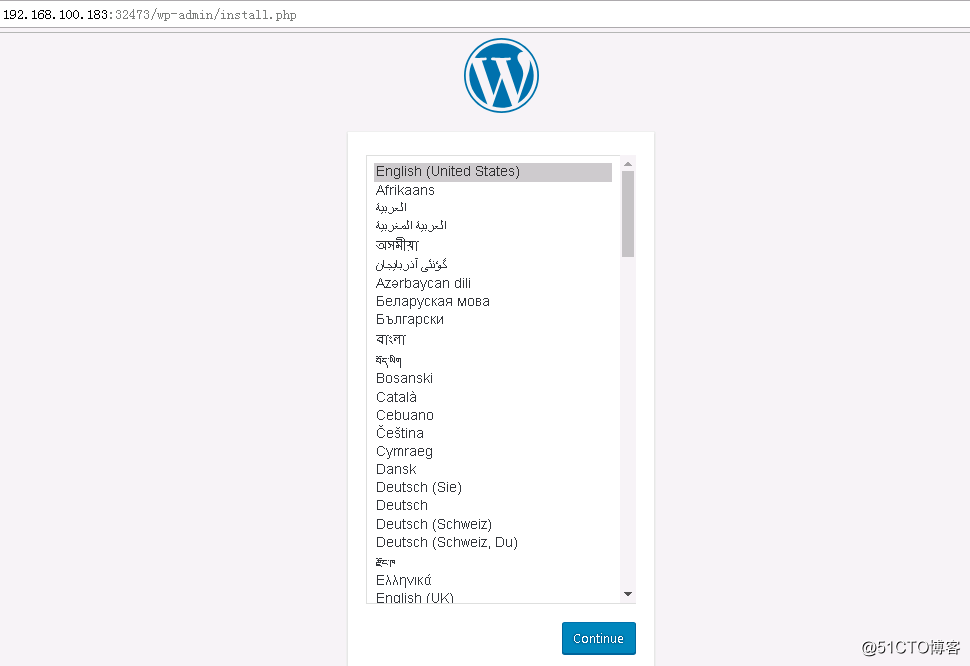

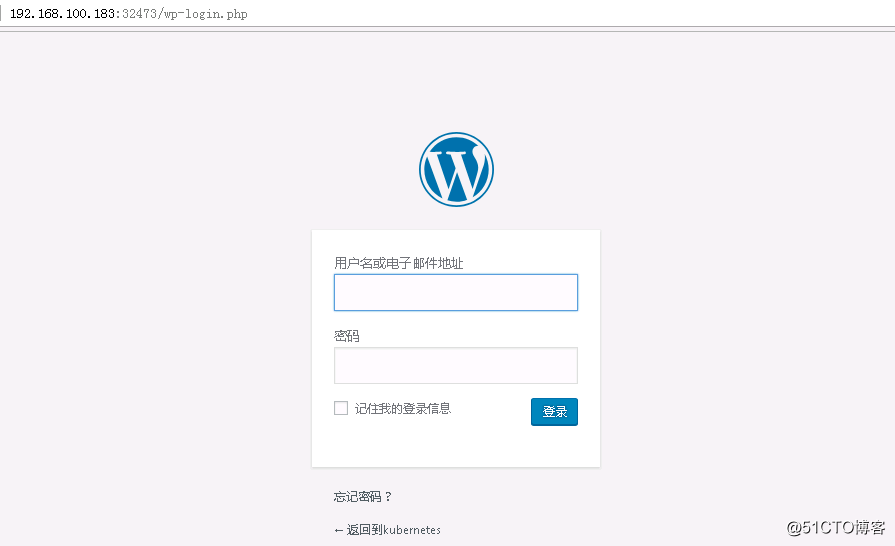

3. Deploy WordPress

```
# vim wordpress.yaml
apiVersion: v1
kind: Service
metadata:
  name: wordpress
  namespace: ceph
  labels:
    app: wordpress
spec:
  ports:
    - port: 80
  selector:
    app: wordpress
    tier: frontend
  type: LoadBalancer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  namespace: ceph
  labels:
    app: wordpress
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  namespace: ceph
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: frontend
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      containers:
      - image: 192.168.100.100/library/wordpress:4.9.8-apache
        name: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: wordpress-mariadb
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mariadb-pass
              key: password
        ports:
        - containerPort: 80
          name: wordpress
        volumeMounts:
        - name: wordpress-persistent-storage
          mountPath: /var/www/html
      volumes:
      - name: wordpress-persistent-storage
        persistentVolumeClaim:
          claimName: wp-pv-claim
# kubectl create -f wordpress.yaml
service/wordpress created
persistentvolumeclaim/wp-pv-claim created
deployment.apps/wordpress created
# kubectl -n ceph get pvc wp-pv-claim          ## check the PVC
NAME          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
wp-pv-claim   Bound    pvc-334b3bd9-9bde-11e8-b987-000c29e75f2a   2Gi        RWO            ceph-rbd       46s
# kubectl -n ceph get pod                      ## check the Pods
NAME                                READY   STATUS    RESTARTS   AGE
wordpress-5c4ffdcb85-6ftfx          1/1     Running   0          1m
wordpress-mariadb-f4d44db9c-fqchx   1/1     Running   0          5m
# kubectl -n ceph get services wordpress       ## check the Service
NAME        TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
wordpress   LoadBalancer   10.244.235.175   <pending>     80:32473/TCP   4m
```

EXTERNAL-IP stays <pending> because the cluster has no cloud load balancer. The Service is still reachable on every node at the NodePort shown in PORT(S), 32473 here.
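Unlike mariadb-pv-claim, wp-pv-claim sets no storageClassName, yet the output shows it bound with ceph-rbd. The DefaultStorageClass admission plugin fills in the class marked as default. Below is a sketch of that decision, assuming the plugin is enabled. effective_storage_class is a hypothetical name, and the handling of several default classes is simplified.

```python
DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def effective_storage_class(pvc_spec, storage_classes):
    """Class a new PVC ends up with, assuming the DefaultStorageClass admission plugin is on."""
    if "storageClassName" in pvc_spec:
        # An explicit "" opts out of classes: only PVs without a class can bind.
        return pvc_spec["storageClassName"] or None
    defaults = [sc["name"] for sc in storage_classes
                if sc.get("annotations", {}).get(DEFAULT_ANNOTATION) == "true"]
    if len(defaults) > 1:
        # Behavior here differs across Kubernetes versions; this sketch simply refuses.
        raise ValueError(f"more than one default StorageClass: {defaults}")
    return defaults[0] if defaults else None


classes = [{"name": "ceph-rbd", "annotations": {DEFAULT_ANNOTATION: "true"}}]
wp_claim = {"accessModes": ["ReadWriteOnce"], "resources": {"requests": {"storage": "2Gi"}}}
print(effective_storage_class(wp_claim, classes))  # ceph-rbd
```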

Note: this article draws on the following article, with thanks:


Original article: http://blog.51cto.com/wangzhijian/2157349