Tags: val ade firewalld cluster nta inux one extract swap

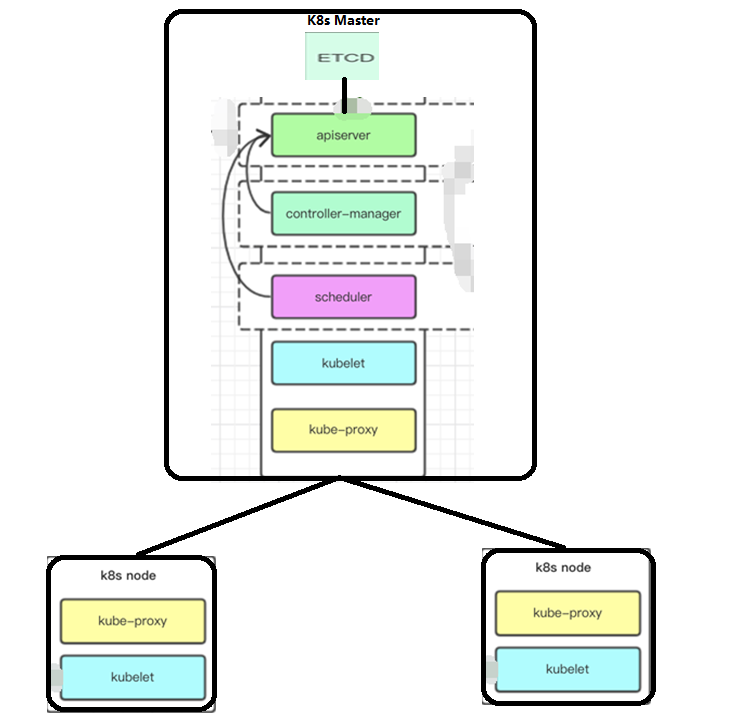

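I use three machines here: one master and two nodes. We start small, and once we are comfortable building it step by step, we will build a highly available Kubernetes architecture. Docker must be installed on both the Master and the Nodes.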

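Lab environment:

CentOS 7.2 minimal, 64-bit

Server IPs:

Master: 192.168.1.89

Node: 192.168.1.90

Node1: 192.168.1.91

Hostnames:

Master: master

Node: node

Node1: node1

Software versions:

k8s components: v1.11.3

docker: ce-18.03.1

etcd: v3.3.9

flannel: 0.7.1-4

Components to install on the Master node:

1. docker
2. etcd
3. kube-apiserver
4. kubectl
5. kube-scheduler
6. kube-controller-manager
7. kubelet
8. kube-proxy

Components to install on the Node nodes:

1. Calico/Flannel/Open vSwitch/Romana/Weave, etc. (a network solution; Kubernetes supports several of them)

   Official documentation: https://kubernetes.io/docs/setup/scratch/#network

2. docker
3. kubelet
4. kube-proxy

Some articles online say the swap partition must be turned off, otherwise errors occur. My hosts have no swap partition, so I can't say whether leaving swap on causes errors, and I have not included the steps for disabling swap here.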
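If your hosts do have swap, note that since Kubernetes 1.8 the kubelet refuses to start while swap is enabled unless it is given `--fail-swap-on=false`. A minimal sketch for turning swap off persistently, assuming a standard fstab layout where the third field is the filesystem type; `comment_out_swap` is a hypothetical helper, not part of any package:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out swap entries in an fstab-style file so swap
# stays off after a reboot. The path is a parameter so it can be tried on a copy
# first; on a real host the file is /etc/fstab.
comment_out_swap() {
  local fstab="$1"
  # Prefix '#' to each uncommented line whose 3rd field (filesystem type) is
  # "swap"; every other line is printed unchanged.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab" > "$fstab.tmp" && mv "$fstab.tmp" "$fstab"
}

# Typical use, as root on each machine:
#   swapoff -a                   # turn swap off for the running system
#   comment_out_swap /etc/fstab  # keep it off after a reboot
```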

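Environment preparation must be done on both the master and the nodes.

On master:

```bash
hostnamectl set-hostname master
bash   # open a new shell so the prompt shows the new hostname
```

On node:

```bash
hostnamectl set-hostname node
bash
```

On node1:

```bash
hostnamectl set-hostname node1
bash
```

Same on master and nodes:

```bash
echo "192.168.1.89 master" >> /etc/hosts
echo "192.168.1.90 node" >> /etc/hosts
echo "192.168.1.91 node1" >> /etc/hosts
```

Same on master and nodes: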

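```bash
yum -y install ntp
systemctl enable ntpd
systemctl start ntpd
ntpdate -u cn.pool.ntp.org
hwclock
timedatectl set-timezone Asia/Shanghai
```

Kubernetes offers two main security models. Official description: https://kubernetes.io/docs/setup/scratch/#security-models

Accessing the apiserver over HTTP:

1) use a firewall for security;

2) this is easier to set up.

Accessing the apiserver over HTTPS:

1) use https with certificates and user credentials;

2) this is the recommended approach;

3) configuring certificates can be tricky.

To keep a production cluster trustworthy and its data secure, the nodes communicate with each other using TLS certificates. If you use the HTTPS approach, you need to prepare certificates and user credentials.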

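There are several ways to generate certificates:

1. easyrsa
2. openssl
3. cfssl

Official description: https://kubernetes.io/docs/concepts/cluster-administration/certificates/

Components that use certificates:

1. etcd: uses ca.pem, etcd-key.pem, etcd.pem
2. kube-apiserver: uses ca.pem, apiserver-key.pem, apiserver.pem
3. kubelet: uses ca.pem
4. kube-proxy: uses ca.pem, proxy-key.pem, proxy.pem
5. kubectl: uses ca.pem, admin-key.pem, admin.pem
6. kube-controller-manager: uses ca.pem, ca-key.pem

The official documentation is linked above, and you can choose another tool; here I use cfssl.

Note:

All certificates are generated on the master and only need to be created once. When you later add a new node to the cluster, just copy the certificates under /etc/kubernetes/ to the new node.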
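The apiserver, etcd, admin and proxy CSR files written below share the same `key` block and most of the `names` fields; only `CN`, `O` and the `hosts` list change. A small sketch that writes such a file, assuming the same GuangXi/NanNing/K8S-Security values used in this article; `write_csr` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper that writes a cfssl CSR file shaped like the apiserver,
# etcd, admin and proxy CSRs in this article. Only CN, O and the hosts list vary;
# the key settings and the other "names" fields are the article's values.
# Usage: write_csr FILE CN O [HOST...]
write_csr() {
  local file="$1" cn="$2" org="$3" hosts="" h
  shift 3
  # Join the remaining arguments into a JSON array body: "h1", "h2", ...
  for h in "$@"; do
    hosts+="${hosts:+, }\"$h\""
  done
  cat > "$file" <<EOF
{
  "CN": "$cn",
  "hosts": [$hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{
    "C": "CN", "ST": "GuangXi", "L": "NanNing",
    "O": "$org", "OU": "K8S-Security"
  }]
}
EOF
}

# Example, same content as etcd-csr.json in this article:
#   HOSTS=(127.0.0.1 localhost 192.168.1.89 kubernetes kubernetes.default
#          kubernetes.default.svc kubernetes.default.svc.cluster
#          kubernetes.default.svc.cluster.local)
#   write_csr etcd-csr.json etcd K8S "${HOSTS[@]}"
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#     -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
```

The admin CSR would be `write_csr admin-csr.json kubectl system:masters "${HOSTS[@]}"`, because the `O` field there is the group that kubectl's admin user belongs to.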

Download and prepare the command-line tools:

```bash
curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o cfssljson
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o cfssl-certinfo
chmod +x cfssl
chmod +x cfssljson
chmod +x cfssl-certinfo
mv cfssl* /usr/bin/
```

```bash
mkdir -p /root/ssl
cd /root/ssl
cfssl print-defaults config > config.json
cfssl print-defaults csr > csr.json
```

```text
[root@master ssl]# ll
total 8
-rw-r--r--. 1 root root 567 Sep 17 09:58 config.json
-rw-r--r--. 1 root root 287 Sep 17 09:58 csr.json
```

(I set the validity period to 87600h, i.e. 10 years.)

```bash
vim ca-config.json
```

```json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
```

Parameter notes:

1) ca-config.json: can define multiple profiles, each with its own expiry, usages and other parameters; a specific profile is then selected when signing a certificate.

2) signing: the certificate can be used to sign other certificates.

3) server auth: a client can use this CA to verify the certificate presented by a server.

4) client auth: a server can use this CA to verify the certificate presented by a client.

```bash
vim ca-csr.json
```

```json
{
  "CN": "ca",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "GuangXi",
    "L": "NanNing",
    "O": "K8S",
    "OU": "K8S-Security"
  }]
}
```

```bash
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
```

```text
[root@master ssl]# ll ca*
-rw-r--r--. 1 root root 285 Sep 17 10:41 ca-config.json
-rw-r--r--. 1 root root 1009 Sep 17 10:41 ca.csr
-rw-r--r--. 1 root root 194 Sep 17 10:41 ca-csr.json
-rw-------. 1 root root 1679 Sep 17 10:41 ca-key.pem
-rw-r--r--. 1 root root 1375 Sep 17 10:41 ca.pem
```

```bash
cd /root/ssl/
vim apiserver-csr.json
```

```json
{
  "CN": "apiserver",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.1.89",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "GuangXi",
    "L": "NanNing",
    "O": "K8S",
    "OU": "K8S-Security"
  }]
}
```

Notes on the above:

192.168.1.89 is the master's IP (MASTER_IP in the official certificate guide). 127.0.0.1 and localhost cover access from the master itself, and the kubernetes.* entries are the in-cluster DNS names of the apiserver's `kubernetes` Service.

The official guide also lists MASTER_CLUSTER_IP, which is the first address of the range passed to --service-cluster-ip-range (10.0.0.0/24 later in this article, so 10.0.0.1). That address is missing from the list above. As a result, clients that reach the apiserver through the kubernetes Service IP may fail certificate verification. Consider adding "10.0.0.1" to hosts.

If there are several masters, list all of their IPs.

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver
```

```text
[root@master ssl]# ll apiserver*
-rw-r--r--. 1 root root 1257 Sep 17 11:32 apiserver.csr
-rw-r--r--. 1 root root 422 Sep 17 11:28 apiserver-csr.json
-rw-------. 1 root root 1675 Sep 17 11:32 apiserver-key.pem
-rw-r--r--. 1 root root 1635 Sep 17 11:32 apiserver.pem
```

```bash
cd /root/ssl/
vim etcd-csr.json
```

```json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.1.89",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "GuangXi",
    "L": "NanNing",
    "O": "K8S",
    "OU": "K8S-Security"
  }]
}
```

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
```

```text
[root@master ssl]# ll etcd*
-rw-r--r--. 1 root root 1257 Sep 17 10:55 etcd.csr
-rw-r--r--. 1 root root 421 Sep 17 10:55 etcd-csr.json
-rw-------. 1 root root 1679 Sep 17 10:55 etcd-key.pem
-rw-r--r--. 1 root root 1635 Sep 17 10:55 etcd.pem
```

```bash
cd /root/ssl/
vim admin-csr.json
```

```json
{
  "CN": "kubectl",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.1.89",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "GuangXi",
    "L": "NanNing",
    "O": "system:masters",
    "OU": "K8S-Security"
  }]
}
```

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
```

```text
[root@master ssl]# ll admin*
-rw-r--r--. 1 root root 1017 Sep 17 11:41 admin.csr
-rw-r--r--. 1 root root 217 Sep 17 11:40 admin-csr.json
-rw-------. 1 root root 1675 Sep 17 11:41 admin-key.pem
-rw-r--r--. 1 root root 1419 Sep 17 11:41 admin.pem
```

```bash
cd /root/ssl/
vim proxy-csr.json
```

```json
{
  "CN": "proxy",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "192.168.1.89",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "GuangXi",
    "L": "NanNing",
    "O": "K8S",
    "OU": "K8S-Security"
  }]
}
```

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes proxy-csr.json | cfssljson -bare proxy
```

```text
[root@master ssl]# ll proxy*
-rw-r--r--. 1 root root 1005 Sep 17 11:46 proxy.csr
-rw-r--r--. 1 root root 206 Sep 17 11:45 proxy-csr.json
-rw-------. 1 root root 1679 Sep 17 11:46 proxy-key.pem
-rw-r--r--. 1 root root 1403 Sep 17 11:46 proxy.pem
```

Copy the generated certificate and key files (the files ending in .pem) into /etc/kubernetes/ssl for later use:

```bash
mkdir -p /etc/kubernetes/ssl
cp /root/ssl/*.pem /etc/kubernetes/ssl/
```

Official installation guide:

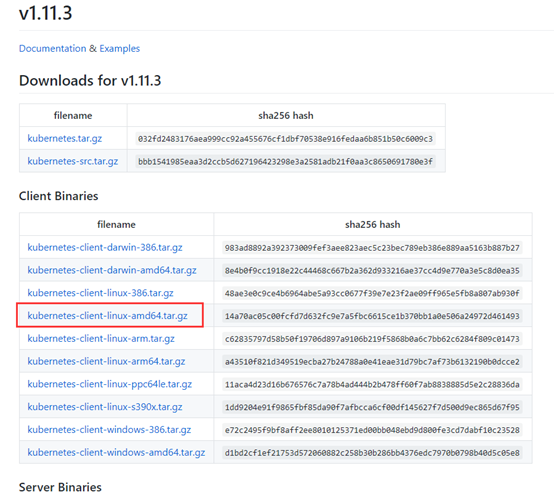

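https://kubernetes.io/docs/tasks/tools/install-kubectl/#install-kubectl-binary-using-curl

You can follow the official installation method. Here I download the binary directly instead; both approaches end up with the same binary.

Download page:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.11.md#v1113

Download from the command line:

```bash
wget https://dl.k8s.io/v1.11.3/kubernetes-client-linux-amd64.tar.gz
tar -zxvf kubernetes-client-linux-amd64.tar.gz
cp kubernetes/client/bin/kubectl /usr/bin/
```

For kubectl to find and access a Kubernetes cluster, it needs a kubeconfig file. A kubeconfig file organizes information about clusters, users, namespaces and authentication mechanisms. kubectl reads this file to decide which cluster to use and how to talk to that cluster's API server.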

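Official configuration guide:

https://k8smeetup.github.io/docs/tasks/access-application-cluster/configure-access-multiple-clusters/

The official article creates a separate directory and writes the cluster, user and context entries by hand. Below I generate them with commands instead, and the resulting configuration is saved to ~/.kube/config by default. Both methods give the same result, so use whichever you prefer.

(--server takes the address and port of kube-apiserver, even though we have not deployed the apiserver yet.)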

```bash
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.1.89:6443
kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --embed-certs=true --client-key=/etc/kubernetes/ssl/admin-key.pem
kubectl config set-context kubernetes --cluster=kubernetes --user=admin
kubectl config use-context kubernetes
```

```text
[root@master ~]# kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: REDACTED
    server: https://192.168.1.89:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: admin
  name: kubernetes
current-context: kubernetes
kind: Config
preferences: {}
users:
- name: admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED
```

Official documentation:

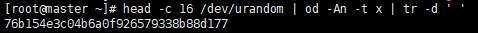

https://kubernetes.io/cn/docs/admin/kubelet-tls-bootstrapping/

```bash
cd /root/
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
vim token.csv
```

```text
76b154e3c04b6a0f926579338b88d177,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
```

```bash
cp token.csv /etc/kubernetes/
```

If you regenerate the token later, you need to:

1. Update token.csv and distribute it to /etc/kubernetes/ on all machines (master and nodes); distributing it to the nodes is optional.
2. Regenerate the bootstrap.kubeconfig file and distribute it to /etc/kubernetes/ on all node machines.
3. Restart the kube-apiserver and kubelet processes.
4. Approve the kubelet CSR requests again.

Generate bootstrap.kubeconfig:

```bash
cd /etc/kubernetes/
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.1.89:6443 --kubeconfig=bootstrap.kubeconfig
kubectl config set-credentials kubelet-bootstrap --token=76b154e3c04b6a0f926579338b88d177 --kubeconfig=bootstrap.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
```

Notes:

1. --embed-certs=true writes the certificate-authority certificate into the generated bootstrap.kubeconfig file.
2. The client credentials do not specify a key or certificate. The kubelet's client certificate is issued later through the certificate signing request (CSR) API once its CSR is approved. The signing is done by kube-controller-manager using its --cluster-signing-cert-file/--cluster-signing-key-file settings.

Generate kube-proxy.kubeconfig:

```bash
cd /etc/kubernetes/
kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.1.89:6443 --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy --client-certificate=/etc/kubernetes/ssl/proxy.pem --client-key=/etc/kubernetes/ssl/proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

Note:

--embed-certs is true for both the cluster parameters and the client credentials. This writes the contents of the certificate files referenced by certificate-authority, client-certificate and client-key into the generated kube-proxy.kubeconfig file.
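The token-regeneration steps listed above (new token, new token.csv, new bootstrap.kubeconfig) can be scripted. This sketch makes the following assumptions:

- it reuses the article's token pipeline and kubectl commands;
- `gen_token` and `regen_bootstrap` are hypothetical names;
- setting `KUBECTL=echo` prints the kubectl commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch of the token-regeneration steps: a new token, a new token.csv and a new
# bootstrap.kubeconfig. gen_token/regen_bootstrap are hypothetical names.
# Set KUBECTL=echo to print the kubectl commands instead of running them.
KUBECTL="${KUBECTL:-kubectl}"

# 16 random bytes as 32 hex characters (the article's pipeline, which also
# strips the trailing newline here).
gen_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Usage: regen_bootstrap DIR APISERVER_URL CA_FILE
# Writes DIR/token.csv and DIR/bootstrap.kubeconfig using one fresh token.
regen_bootstrap() {
  local dir="$1" server="$2" ca="$3" token kc
  token="$(gen_token)"
  kc="$dir/bootstrap.kubeconfig"
  echo "${token},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\"" > "$dir/token.csv"
  $KUBECTL config set-cluster kubernetes --certificate-authority="$ca" \
    --embed-certs=true --server="$server" --kubeconfig="$kc"
  $KUBECTL config set-credentials kubelet-bootstrap --token="$token" --kubeconfig="$kc"
  $KUBECTL config set-context default --cluster=kubernetes \
    --user=kubelet-bootstrap --kubeconfig="$kc"
  $KUBECTL config use-context default --kubeconfig="$kc"
}

# Example on the master:
#   regen_bootstrap /etc/kubernetes https://192.168.1.89:6443 /etc/kubernetes/ssl/ca.pem
```

The other steps in that list still have to be done by hand: copying the files, restarting kube-apiserver and kubelet, and approving CSRs.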

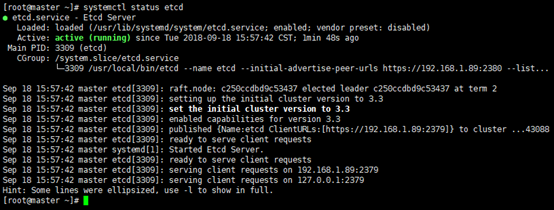

yum或者二进制包安装都可以,这里我选择二进制包安装的方式,yum安装的话直接yum -y install etcd即可,也可以源码安装,具体可以参考这篇文章

https://blog.csdn.net/varyall/article/details/79128181ETCD官网下载地址:

https://github.com/etcd-io/etcd/releasestar -zxvf etcd-v3.3.9-linux-amd64.tar.gzmv etcd-v3.3.9-linux-amd64 /usr/local/etcdln -s /usr/local/etcd/etcd* /usr/local/bin/chmod +x /usr/local/bin/etcd*[root@master ~]# etcd --versionetcd Version: 3.3.9

Git SHA: fca8add78

Go Version: go1.10.3

Go OS/Arch: linux/amd64vim /usr/lib/systemd/system/etcd.service[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/local/bin/etcd --name ${ETCD_NAME} --initial-advertise-peer-urls ${ETCD_INITIAL_ADVERTISE_PEER_URLS} --listen-peer-urls ${ETCD_LISTEN_PEER_URLS} --listen-client-urls ${ETCD_LISTEN_CLIENT_URLS} --advertise-client-urls ${ETCD_ADVERTISE_CLIENT_URLS} --initial-cluster-token ${ETCD_INITIAL_CLUSTER_TOKEN} --initial-cluster ${ETCD_INITIAL_CLUSTER} --initial-cluster-state new --data-dir=${ETCD_DATA_DIR}

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.targetmkdir -p /etc/etcd

vim /etc/etcd/etcd.conf# [member]

ETCD_NAME=etcd

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_WAL_DIR="/var/lib/etcd/wal"

ETCD_SNAPSHOT_COUNT="100"

ETCD_HEARTBEAT_INTERVAL="100"

ETCD_ELECTION_TIMEOUT="1000"

ETCD_LISTEN_PEER_URLS="https://192.168.1.89:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.89:2379,https://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_MAX_WALS="5"

#ETCD_CORS=""

# [cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.89:2380"

# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

ETCD_INITIAL_CLUSTER="etcd=https://192.168.1.89:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.89:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_STRICT_RECONFIG_CHECK="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

# [proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

# [security]

ETCD_CERT_FILE="/etc/kubernetes/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/kubernetes/ssl/etcd-key.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_TRUSTED_CA_FILE="/etc/kubernetes/ssl/ca.pem"

ETCD_AUTO_TLS="true"

ETCD_PEER_CERT_FILE="/etc/kubernetes/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/kubernetes/ssl/etcd-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_TRUSTED_CA_FILE="/etc/kubernetes/ssl/ca.pem"

ETCD_PEER_AUTO_TLS="true"systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

firewall-cmd --add-port=2379/tcp --permanent

firewall-cmd --add-port=2380/tcp --permanent

systemctl restart firewalld[root@master ~]# netstat -luntpActive Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 12869/etcd

tcp 0 0 192.168.1.89:2379 0.0.0.0:* LISTEN 12869/etcd

tcp 0 0 127.0.0.1:2380 0.0.0.0:* LISTEN 12869/etcd

tcp 0 0 192.168.1.89:2380 0.0.0.0:* LISTEN 12869/etcd

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1299/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2456/master

tcp6 0 0 :::22 :::* LISTEN 1299/sshd

tcp6 0 0 ::1:25 :::* LISTEN 2456/master

udp 0 0 192.168.1.89:123 0.0.0.0:* 2668/ntpd

udp 0 0 127.0.0.1:123 0.0.0.0:* 2668/ntpd

udp 0 0 0.0.0.0:123 0.0.0.0:* 2668/ntpd

udp6 0 0 fe80::20c:29ff:fec7:123 :::* 2668/ntpd

udp6 0 0 ::1:123 :::* 2668/ntpd

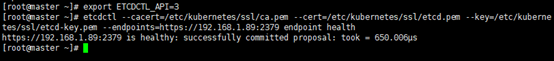

udp6 0 0 :::123 :::* 2668/ntpd [root@master ~]# export ETCDCTL_API=3

[root@master ~]# etcdctl --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem --endpoints=https://192.168.1.89:2379 endpoint healthhttps://192.168.1.89:2379 is healthy: successfully committed proposal: took = 650.006μs
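Every etcdctl call in this article repeats the same TLS flags. The v3 API used above and the v2 API used later for flannel also give those flags different names (`--cacert/--cert/--key` versus `--ca-file/--cert-file/--key-file`). Below is a sketch of two hypothetical wrapper functions. It assumes the endpoint and certificate paths used in this article and can be overridden through `ETCD_ENDPOINTS` and `SSL_DIR`:

```bash
#!/usr/bin/env bash
# Hypothetical wrappers that add this article's TLS flags to etcdctl.
# Each runs in a subshell so the ETCDCTL_API it sets does not leak into the
# calling shell.
ETCD_ENDPOINTS="${ETCD_ENDPOINTS:-https://192.168.1.89:2379}"
SSL_DIR="${SSL_DIR:-/etc/kubernetes/ssl}"

# v3 API, e.g. "etcdctl3 endpoint health"
etcdctl3() {
  (
    export ETCDCTL_API=3
    etcdctl --endpoints="$ETCD_ENDPOINTS" --cacert="$SSL_DIR/ca.pem" \
      --cert="$SSL_DIR/etcd.pem" --key="$SSL_DIR/etcd-key.pem" "$@"
  )
}

# v2 API, e.g. "etcdctl2 get /kube-centos/network/config"
etcdctl2() {
  (
    export ETCDCTL_API=2
    etcdctl --endpoints="$ETCD_ENDPOINTS" --ca-file="$SSL_DIR/ca.pem" \
      --cert-file="$SSL_DIR/etcd.pem" --key-file="$SSL_DIR/etcd-key.pem" "$@"
  )
}
```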

这应该是全文最简单的安装步骤了,之前也有写过docker的安装方法,本来不想再叙述的,想了想,还是写一写吧,要不然你们重新去翻一遍之前的文章也不舒服。这里我使用yum安装。

yum install -y yum-utils device-mapper-persistent-data lvm2yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repoyum list docker-ce --showduplicates | sort -r* updates: mirrors.163.com

Loading mirror speeds from cached hostfile

Loaded plugins: fastestmirror

* extras: mirrors.163.com

* epel: mirror01.idc.hinet.net

docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.3.ce-1.el7 docker-ce-stable

docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

* base: mirrors.163.com

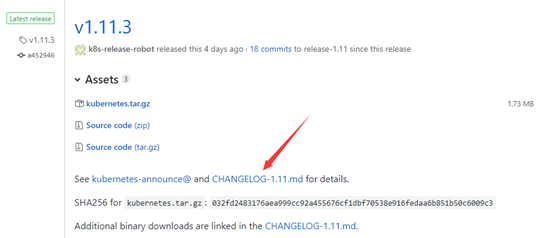

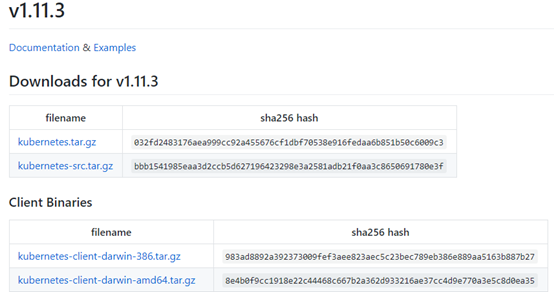

Available Packagesyum -y install docker-ce-18.03.1.ce-1.el7.centosgpasswd -a root dockersystemctl start dockersystemctl enable dockerhttps://github.com/kubernetes/kubernetes/releases/tag/v1.11.3

In the figure above, kubernetes.tar.gz contains the Kubernetes service binaries, documentation and examples, while kubernetes-src.tar.gz contains the full source code. Here I chose the file under Server Binaries below it, which contains all the service binaries Kubernetes needs to run.

You can also follow the official instructions:

https://kubernetes.io/docs/setup/scratch/#downloading-and-extracting-kubernetes-binaries

Download from the command line:

```bash
wget https://dl.k8s.io/v1.11.3/kubernetes-server-linux-amd64.tar.gz
tar -zxvf kubernetes-server-linux-amd64.tar.gz
mv kubernetes /usr/local/
ln -s /usr/local/kubernetes/server/bin/hyperkube /usr/bin/
ln -s /usr/local/kubernetes/server/bin/kube-apiserver /usr/bin/
ln -s /usr/local/kubernetes/server/bin/kube-scheduler /usr/bin/
ln -s /usr/local/kubernetes/server/bin/kubelet /usr/bin/
ln -s /usr/local/kubernetes/server/bin/kube-controller-manager /usr/bin/
ln -s /usr/local/kubernetes/server/bin/kube-proxy /usr/bin/
```

```bash
vim /usr/lib/systemd/system/kube-apiserver.service
```

```ini
[Unit]
Description=Kubernetes API Service
After=network.target
After=etcd.service

[Service]
EnvironmentFile=/etc/kubernetes/config
EnvironmentFile=/etc/kubernetes/apiserver
ExecStart=/usr/bin/kube-apiserver $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_ETCD_SERVERS $KUBE_API_ADDRESS $KUBE_API_PORT $KUBELET_PORT $KUBE_ALLOW_PRIV $KUBE_SERVICE_ADDRESSES $KUBE_ADMISSION_CONTROL $KUBE_API_ARGS
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

```bash
vim /etc/kubernetes/config
```

```bash
###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
# kube-apiserver.service
# kube-controller-manager.service
# kube-scheduler.service
# kubelet.service
# kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver
#KUBE_MASTER="--master=http://sz-pg-oam-docker-test-001.tendcloud.com:8080"
KUBE_MASTER="--master=http://192.168.1.89:8080"
```

Replace the IP address with your own. This configuration file is shared by kube-apiserver, kube-controller-manager, kube-scheduler, kubelet and kube-proxy.

```bash
vim /etc/kubernetes/apiserver
```

```bash
###
# kubernetes system config
#
# The following values are used to configure the kube-apiserver
#
# The address on the local server to listen to.
KUBE_API_ADDRESS="--advertise-address=0.0.0.0 --insecure-bind-address=0.0.0.0 --bind-address=0.0.0.0"

# The port on the local server to listen on.
KUBE_API_PORT="--insecure-port=8080 --secure-port=6443"

# Port minions listen on
# KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster
KUBE_ETCD_SERVERS="--etcd-servers=https://192.168.1.89:2379"

# Address range to use for services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.0.0.0/24"

# default admission control policies
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction"

# Add your own!
KUBE_API_ARGS="--authorization-mode=RBAC,Node --endpoint-reconciler-type=lease --runtime-config=batch/v2alpha1=true --anonymous-auth=false --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/etc/kubernetes/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/etc/kubernetes/ssl/apiserver.pem --tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem --client-ca-file=/etc/kubernetes/ssl/ca.pem --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem --etcd-quorum-read=true --storage-backend=etcd3 --etcd-cafile=/etc/kubernetes/ssl/ca.pem --etcd-certfile=/etc/kubernetes/ssl/etcd.pem --etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem --enable-swagger-ui=true --apiserver-count=3 --audit-log-maxage=30 --audit-log-maxbackup=3 --audit-log-maxsize=100 --audit-log-path=/var/log/kube-audit/audit.log --event-ttl=1h "
```

Replace the IP address and the certificate and key paths with your own.
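Before starting the service, it can help to see the command line systemd will build from these EnvironmentFile values. The sketch below is rough: it assumes simple `VAR="value"` lines like the ones above and sources the files with the shell. That only approximates systemd's own EnvironmentFile parser. `preview_exec` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print roughly the command line ExecStart will run by
# loading the EnvironmentFile(s) in a subshell and expanding the named variables.
# systemd has its own parser, so treat the result as an approximation.
# Usage: preview_exec BINARY VAR... -- ENVFILE...
preview_exec() {
  local bin="$1" vars=() v f out
  shift
  while [ "$#" -gt 0 ] && [ "$1" != "--" ]; do
    vars+=("$1"); shift
  done
  shift   # drop the "--" separator
  (
    for f in "$@"; do . "$f"; done
    out="$bin"
    for v in "${vars[@]}"; do
      # Unset variables (e.g. the commented-out KUBELET_PORT) add nothing.
      if [ -n "${!v}" ]; then out+=" ${!v}"; fi
    done
    echo "$out"
  )
}

# Example for the kube-apiserver unit:
#   preview_exec /usr/bin/kube-apiserver KUBE_LOGTOSTDERR KUBE_LOG_LEVEL \
#     KUBE_ETCD_SERVERS KUBE_API_ADDRESS KUBE_API_PORT KUBELET_PORT KUBE_ALLOW_PRIV \
#     KUBE_SERVICE_ADDRESSES KUBE_ADMISSION_CONTROL KUBE_API_ARGS \
#     -- /etc/kubernetes/config /etc/kubernetes/apiserver
```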

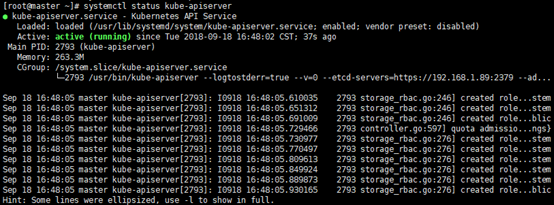

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl start kube-apiserver

systemctl status kube-apiserver

firewall-cmd --add-port=8080/tcp --permanent

firewall-cmd --add-port=6443/tcp --permanent

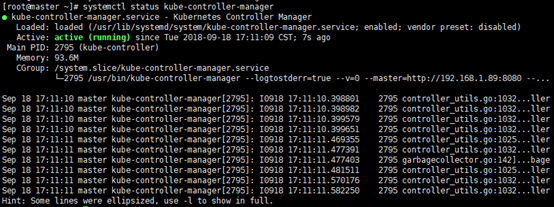

systemctl restart firewalldvim /usr/lib/systemd/system/kube-controller-manager.service[Unit]

Description=Kubernetes Controller Manager

[Service]

EnvironmentFile=/etc/kubernetes/config

EnvironmentFile=/etc/kubernetes/controller-manager

ExecStart=/usr/bin/kube-controller-manager $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_MASTER $KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.targetvim /etc/kubernetes/controller-manager###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--address=0.0.0.0 --service-cluster-ip-range=10.0.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem --root-ca-file=/etc/kubernetes/ssl/ca.pem --leader-elect=true --node-monitor-grace-period=40s --node-monitor-period=5s --pod-eviction-timeout=60s"systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl start kube-controller-manager

systemctl status kube-controller-manager

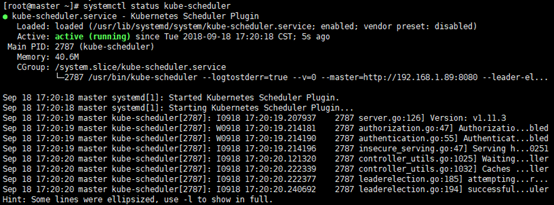

```bash
vim /usr/lib/systemd/system/kube-scheduler.service
```

```ini
[Unit]
Description=Kubernetes Scheduler Plugin

[Service]
EnvironmentFile=/etc/kubernetes/config
EnvironmentFile=/etc/kubernetes/scheduler
ExecStart=/usr/bin/kube-scheduler $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_MASTER $KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

```bash
vim /etc/kubernetes/scheduler
```

```bash
###
# kubernetes scheduler config
# default config should be adequate
# Add your own!
KUBE_SCHEDULER_ARGS="--leader-elect=true --address=0.0.0.0"
```

```bash
systemctl daemon-reload
systemctl enable kube-scheduler
systemctl start kube-scheduler
systemctl status kube-scheduler
```

Tip: kube-apiserver is the core service. If it fails to start, the other services will fail too.
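The other master components depend on kube-apiserver, and kube-apiserver depends on etcd. Checking them in that order makes a failure easier to trace. Below is a hypothetical helper built on `systemctl is-active`; `SYSTEMCTL` can be overridden for a dry run:

```bash
#!/usr/bin/env bash
# Hypothetical helper: report the master services in dependency order and
# return non-zero if any of them is not active.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

check_master_services() {
  local svc failed=0
  for svc in etcd kube-apiserver kube-controller-manager kube-scheduler; do
    if $SYSTEMCTL is-active --quiet "$svc"; then
      echo "$svc: active"
    else
      echo "$svc: NOT active (see: journalctl -u $svc)"
      failed=1
    fi
  done
  return "$failed"
}
```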

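```text
[root@master ~]# kubectl get cs
NAME STATUS MESSAGE ERROR
controller-manager Healthy ok
scheduler Healthy ok
etcd-0 Healthy {"health":"true"}
```

If nothing is reported as unhealthy, the Master node is installed successfully!

That completes the master node: everything is installed and running. Next comes the node installation.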
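Each node needs the files created on the master under /etc/kubernetes/, as the note in the certificate section says. These are the certificates in ssl/, config, bootstrap.kubeconfig, kube-proxy.kubeconfig, and the kubelet.kubeconfig copied in the next step. The sketch below assumes root SSH access from the master to the node host names set in /etc/hosts. `push_k8s_files` is a hypothetical name, and `SCP=echo` prints the commands instead of copying:

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the master's /etc/kubernetes directory to each node.
# Assumes root SSH access from the master; set SCP=echo for a dry run.
SCP="${SCP:-scp}"

push_k8s_files() {
  local node
  for node in "$@"; do
    # scp -r copies the directory itself into /etc/ on the node, i.e. to
    # /etc/kubernetes, overwriting files that already exist there.
    $SCP -r /etc/kubernetes "root@${node}:/etc/" || return 1
  done
}

# Usage on the master (run again after creating kubelet.kubeconfig):
#   push_k8s_files node node1
```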

cp /root/.kube/config /etc/kubernetes/kubelet.kubeconfigyum install -y yum-utils device-mapper-persistent-data lvm2yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repoyum list docker-ce --showduplicates | sort -r* updates: mirrors.163.com

Loading mirror speeds from cached hostfile

Loaded plugins: fastestmirror

* extras: mirrors.163.com

* epel: mirror01.idc.hinet.net

docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.3.ce-1.el7 docker-ce-stable

docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

* base: mirrors.163.com

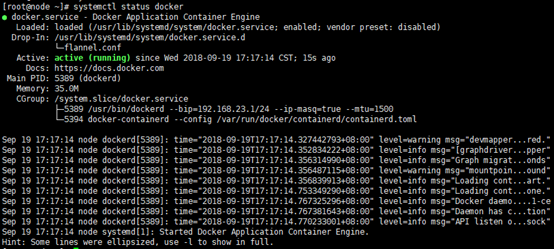

Available Packagesyum -y install docker-ce-18.03.1.ce-1.el7.centosgpasswd -a root dockersystemctl start dockersystemctl enable dockervim /usr/lib/systemd/system/docker.service[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service flanneld.service

Wants=network-online.target

Requires=flanneld.service

[Service]

Type=notify

EnvironmentFile=/run/flannel/docker

EnvironmentFile=/run/flannel/subnet.env

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd $DOCKER_OPT_BIP $DOCKER_OPT_IPMASQ $DOCKER_OPT_MTU $DOCKER_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

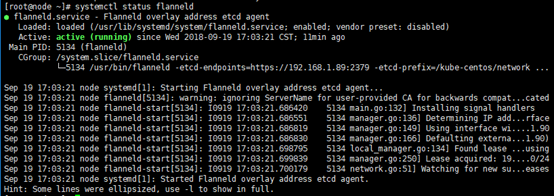

WantedBy=multi-user.target所有的node节点都需要安装网络插件才能让所有的pod加入到同一个局域网中,这里我选择的是flannel网络插件,当然,你们也可以选择别的插件,去官网学习学习就可以
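The docker unit above reads `$DOCKER_OPT_BIP`, `$DOCKER_OPT_IPMASQ` and `$DOCKER_OPT_MTU` from /run/flannel/docker. That file is written by flannel's `mk-docker-opts.sh` from /run/flannel/subnet.env, through the ExecStartPost line of the flanneld unit. Below is a simplified sketch of that mapping. It is not the real script, and `docker_opts_from` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Simplified sketch (not flannel's actual mk-docker-opts.sh) of how the values
# in flannel's subnet.env become the DOCKER_OPT_* variables the docker unit
# reads: docker0 gets this node's flannel subnet and flannel's MTU, and docker
# masquerades only when flannel is not already doing so.
# Usage: docker_opts_from SUBNET_ENV_FILE
docker_opts_from() {
  local FLANNEL_NETWORK="" FLANNEL_SUBNET="" FLANNEL_MTU="" FLANNEL_IPMASQ="" ipmasq=true
  . "$1"
  if [ "$FLANNEL_IPMASQ" = true ]; then ipmasq=false; fi
  echo "DOCKER_OPT_BIP=\"--bip=${FLANNEL_SUBNET}\""
  echo "DOCKER_OPT_IPMASQ=\"--ip-masq=${ipmasq}\""
  echo "DOCKER_OPT_MTU=\"--mtu=${FLANNEL_MTU}\""
}
```

On a running node, compare the sketch's output with `cat /run/flannel/subnet.env` and `cat /run/flannel/docker`.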

I recommend installing flannel with yum; the version installed by default is 0.7.1. If you need a specific version, you can install the binary instead; see this article: http://blog.51cto.com/tryingstuff/2121707

```text
[root@node ~]# yum list flannel | sort -r
 * updates: mirrors.cn99.com
Loading mirror speeds from cached hostfile
Loaded plugins: fastestmirror
flannel.x86_64 0.7.1-4.el7 extras
 * extras: mirrors.aliyun.com
 * epel: mirrors.aliyun.com
 * base: mirrors.shu.edu.cn
Available Packages
```

```bash
yum -y install flannel
vim /usr/lib/systemd/system/flanneld.service
```

```ini
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/sysconfig/flanneld
EnvironmentFile=-/etc/sysconfig/docker-network
ExecStart=/usr/bin/flanneld-start -etcd-endpoints=${FLANNEL_ETCD_ENDPOINTS} -etcd-prefix=${FLANNEL_ETCD_PREFIX} $FLANNEL_OPTIONS
ExecStartPost=/usr/libexec/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
WantedBy=docker.service
```

Parameter notes:

1) The flannel network is only useful if it starts after the host network can reach the outside (the other nodes), hence After=network.target.

2) Only once the flannel network is up can a subnet be allocated that does not conflict with other nodes. Docker's network must match the flannel network for cross-host communication to work. Docker therefore has to start after the flannel network has been created, hence Before=docker.service.

3) The flannel startup parameters go in /etc/sysconfig/flanneld (the EnvironmentFile in the unit above). They include the etcd cluster, so some of them are environment-specific, which is why they are defined in a separate file.

4) After flannel starts, its bundled script /usr/libexec/flannel/mk-docker-opts.sh generates docker's startup parameters, including the subnet for the docker0 interface.

```bash
vim /etc/sysconfig/flanneld
```

```bash
# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs
# etcd cluster address(es); separate multiple addresses with commas
FLANNEL_ETCD_ENDPOINTS="https://192.168.1.89:2379"

# etcd config key. This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_PREFIX="/kube-centos/network"

# Any additional options that you want to pass
FLANNEL_OPTIONS="-etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/kubernetes/ssl/etcd.pem -etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem"
```

```bash
# flannel 0.7.1 talks to etcd through the v2 API, so its keys are created with etcdctl's
# v2 syntax (the default for etcdctl 3.3). Run this where etcdctl is installed (e.g. the
# master); if ETCDCTL_API=3 is still exported from the health check, unset it first.
unset ETCDCTL_API
etcdctl --endpoints=https://192.168.1.89:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem mkdir /kube-centos/network
etcdctl --endpoints=https://192.168.1.89:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem mk /kube-centos/network/config '{"Network":"192.168.0.0/16","SubnetLen":24,"Backend":{"Type":"host-gw"}}'
```

```bash
systemctl daemon-reload
systemctl enable flanneld
systemctl start flanneld
systemctl status flanneld
systemctl restart docker
systemctl status docker
```

```text
[root@master ~]# etcdctl --endpoints=https://192.168.1.89:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem ls /kube-centos/network/subnets
/kube-centos/network/subnets/192.168.23.0-24
[root@master ~]# etcdctl --endpoints=https://192.168.1.89:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem get /kube-centos/network/config
{"Network":"192.168.0.0/16","SubnetLen":24,"Backend":{"Type":"host-gw"}}
[root@master ~]# etcdctl --endpoints=https://192.168.1.89:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem get /kube-centos/network/subnets/192.168.23.0-24
{"PublicIP":"192.168.1.90","BackendType":"host-gw"}
```

Note: flannel must be installed on every node; on the master it is optional.
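The flannel `Network` 192.168.0.0/16 also contains the hosts' own addresses (192.168.1.89 to 192.168.1.91). The config above does not set `SubnetMin`/`SubnetMax` to limit which /24 subnets can be leased, so it is worth checking that no node's pod subnet overlaps the host LAN. A pure-bash sketch for testing whether an address lies in a CIDR block follows; `ip2int` and `in_cidr` are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Pure-bash IPv4 helpers: convert a dotted quad to a 32-bit integer and test
# whether an address lies inside a CIDR block.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Usage: in_cidr IP NETWORK/PREFIX  -> exit status 0 if IP is inside the block.
in_cidr() {
  local ip net prefix mask
  ip=$(ip2int "$1")
  net=$(ip2int "${2%/*}")
  prefix=${2#*/}
  # Netmask with the top PREFIX bits set; prefix 0 matches everything.
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Example: does a leased pod subnet (from "ls /kube-centos/network/subnets",
# where 192.168.23.0-24 means 192.168.23.0/24) contain the master's address?
#   in_cidr 192.168.1.89 192.168.23.0/24 && echo "overlaps the host LAN"
```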

之前我们已经下载过kubernetes-server-linux-amd64.tar.gz这个包,这个包里包含了所有Kubernetes需要运行的组件,我们把这个包上传到服务器,安装我们需要的组件即可

tar -zxvf kubernetes-server-linux-amd64.tar.gz

mv kubernetes /usr/local/

ln -s /usr/local/kubernetes/server/bin/kubelet /usr/bin/vim /usr/lib/systemd/system/kubelet.service[Unit]

Description=Kubernetes Kubelet Server

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBELET_API_SERVER $KUBELET_ADDRESS $KUBELET_PORT $KUBELET_HOSTNAME $KUBE_ALLOW_PRIV $KUBELET_POD_INFRA_CONTAINER $KUBELET_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.targetvim /etc/kubernetes/kubelet###

## kubernetes kubelet (minion) config

#

## The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=0.0.0.0"

#

## The port for the info server to serve on

KUBELET_PORT="--port=10250"

#

## You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=192.168.1.90"

#

## location of the api-server

## COMMENT THIS ON KUBERNETES 1.8+

#KUBELET_API_SERVER=""

#

## Add your own!

KUBELET_ARGS="--cgroup-driver=cgroupfs --experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --cert-dir=/etc/kubernetes/ssl --cluster-domain=cluster.local --hairpin-mode promiscuous-bridge --serialize-image-pulls=false"systemctl daemon-reload

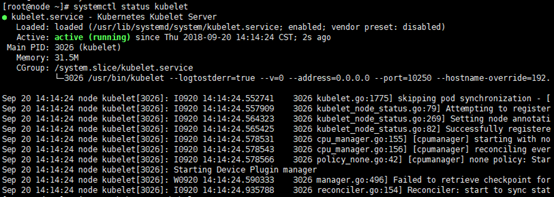

systemctl enable kubelet

systemctl start kubelet

systemctl status kubelet

firewall-cmd --add-port=10250/tcp --permanent

systemctl restart firewalld上一步已经解包安装了所有文件,这里做好软链接即可

ln -s /usr/local/kubernetes/server/bin/kube-proxy /usr/bin/vim /usr/lib/systemd/system/kube-proxy.service[Unit]

Description=Kubernetes Kube-Proxy Server

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/config

EnvironmentFile=/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_MASTER $KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.targetvim /etc/kubernetes/proxy###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS="--bind-address=0.0.0.0 --hostname-override=192.168.1.90 --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig --masquerade-all"systemctl daemon-reload

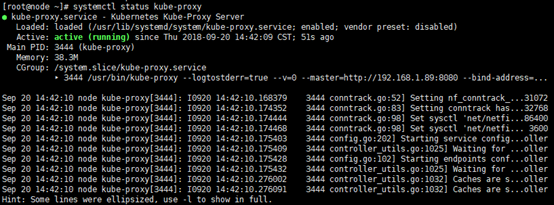

systemctl enable kube-proxy

systemctl start kube-proxy

systemctl status kube-proxy

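The remaining nodes are omitted here: to add more nodes, just repeat the Node deployment steps above.

On the master, verify the two nodes that were installed:

```text
[root@master ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
192.168.1.90 Ready <none> 1h v1.11.3
192.168.1.91 Ready <none> 2m v1.11.3
```

A status of Ready means the node has joined the cluster successfully!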
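Two hypothetical helpers for checks on the master:

- counting the Ready nodes;
- approving pending kubelet CSRs, as mentioned in the token-regeneration steps.

In this setup the kubelet already receives a ready-made kubelet.kubeconfig, so pending CSRs may never appear. The sketch assumes the v1.11 `kubectl get csr` layout, whose last column is CONDITION. `KUBECTL` can be overridden for a dry run:

```bash
#!/usr/bin/env bash
# Hypothetical checks to run on the master.
KUBECTL="${KUBECTL:-kubectl}"

# Print how many nodes have STATUS exactly "Ready" (NotReady is not counted).
count_ready_nodes() {
  $KUBECTL get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Approve every CSR whose last column (CONDITION) is "Pending".
approve_pending_csrs() {
  local name
  for name in $($KUBECTL get csr --no-headers | awk '$NF == "Pending" { print $1 }'); do
    $KUBECTL certificate approve "$name"
  done
}

# Usage:
#   approve_pending_csrs
#   [ "$(count_ready_nodes)" -eq 2 ] && echo "both nodes are Ready"
```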


Original article: https://www.cnblogs.com/93bok/p/9686147.html