
Base environment and software versions

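This post walks through the configuration needed to deploy Prometheus on Kubernetes, using hand-written YAML manifests for Prometheus.

An open-source alternative also exists: prometheus-operator/kube-prometheus.

Node-level metrics are collected with node_exporter, which gathers runtime metrics from each server node. node_exporter currently covers almost all common monitoring points, such as cpu, diskstats, loadavg, meminfo and netstat; see its GitHub repo for the full list of collectors.

The exporter is deployed with a DaemonSet controller so that every node runs one Pod. When nodes are removed from or added to the cluster, the set of Pods is adjusted automatically.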

<!--more-->

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      labels:
        app: node-exporter
      name: node-exporter-9400
      namespace: default
    spec:
      selector:
        matchLabels:
          app: node-exporter
      template:
        metadata:
          labels:
            app: node-exporter
        spec:
          containers:
          - args:
            - --web.listen-address=0.0.0.0:9400
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host/root
            image: harbor.foxchan.com/prom/node-exporter:v1.0.1
            imagePullPolicy: IfNotPresent
            name: node-exporter-9400
            resources: {}
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
            volumeMounts:
            - mountPath: /host/proc
              name: proc
            - mountPath: /host/sys
              name: sys
            - mountPath: /host/root
              mountPropagation: HostToContainer
              name: root
              readOnly: true
          dnsPolicy: Default
          hostNetwork: true
          hostPID: true
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
          - hostPath:
              path: /proc
              type: ""
            name: proc
          - hostPath:
              path: /sys
              type: ""
            name: sys
          - hostPath:
              path: /
              type: ""
            name: root
          tolerations:
          - key: "node-role.kubernetes.io/master"
            operator: "Exists"
            effect: "NoSchedule"
      updateStrategy:
        type: OnDelete

01-prom-rbac.yaml

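Compared with other people's configurations, this one additionally grants permissions on resources such as ingresses, so that third-party add-ons installed later, such as kube-state-metrics, do not fail to collect metrics because of insufficient permissions.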

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - secrets
      - nodes
      - nodes/proxy
      - nodes/metrics
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - extensions
      - networking.k8s.io
      resources:
      - daemonsets
      - deployments
      - replicasets
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - apps
      resources:
      - daemonsets
      - deployments
      - replicasets
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - batch
      resources:
      - cronjobs
      - jobs
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - autoscaling
      resources:
      - horizontalpodautoscalers
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - policy
      resources:
      - poddisruptionbudgets
      verbs:
      - get
      - list
      - watch
    - nonResourceURLs:
      - /metrics
      verbs:
      - get
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: kube-system

02-prom-cm.yaml

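Unlike the official YAML, the metrics addresses of the node and cadvisor jobs are changed so that scrapes no longer go through the apiserver. This avoids putting excessive load on the apiserver with frequent requests.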
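For contrast, a node job that goes through the apiserver proxy typically looks like the sketch below. It illustrates the pattern being avoided and is not a copy of any specific upstream file; the job name is hypothetical. With this pattern every scrape of every node passes through the apiserver, which is the load the modified jobs sidestep by scraping each kubelet address directly.

```yaml
# Illustrative only: node scrape routed through the apiserver proxy.
- job_name: 'kubernetes-nodes-via-apiserver'   # hypothetical job name
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  # Point every target at the apiserver instead of the kubelet.
  - target_label: __address__
    replacement: kubernetes.default.svc:443
  # Rewrite the path so the apiserver proxies the request to the node.
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/${1}/proxy/metrics
```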

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: prometheus
      namespace: kube-system
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          evaluation_interval: 15s
          scrape_timeout: 10s
        rule_files:
        - /prometheus-rules/*.rules.yml
        scrape_configs:
        - job_name: 'kubernetes-apiservers'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        - job_name: 'kubernetes-controller-manager'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: kube-system;kube-controller-manager;https-metrics
        - job_name: 'kubernetes-scheduler'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: kube-system;kube-scheduler;https-metrics
        - job_name: 'kubernetes-nodes'
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
        - job_name: 'kubernetes-cadvisor'
          metrics_path: /metrics/cadvisor
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            insecure_skip_verify: true
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          metric_relabel_configs:
          - source_labels: [instance]
            regex: (.+)
            target_label: node
            replacement: $1
            action: replace
        - job_name: 'kubernetes-service-endpoints'
          scrape_interval: 1m
          scrape_timeout: 10s
          metrics_path: /metrics
          scheme: http
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            regex: "true"
            action: keep
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_metric_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
        - job_name: 'kubernetes-services'
          metrics_path: /probe
          params:
            module: [http_2xx]
          kubernetes_sd_configs:
          - role: service
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_should_be_probed]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.default.svc.cluster.local:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-ingresses'
          metrics_path: /probe
          params:
            module: [http_2xx]
          kubernetes_sd_configs:
          - role: ingress
          relabel_configs:
          - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_should_be_probed]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
            regex: (.+);(.+);(.+)
            replacement: ${1}://${2}${3}
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.default.svc.cluster.local:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_ingress_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_ingress_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-pods'
          kubernetes_sd_configs:
          - role: pod
          relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_should_be_scraped]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_metric_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name

03-prom-deploy.yaml

A node selector and hostNetwork were added.
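The Deployment as listed sets a nodeSelector but does not itself contain a hostNetwork field. If host networking is wanted, the Pod spec would need something like the fragment below. This is a sketch and not the author's manifest. With hostNetwork enabled, `dnsPolicy: ClusterFirst` falls back to the node's resolver, so `ClusterFirstWithHostNet` is assumed here to keep in-cluster names such as the blackbox exporter Service resolvable.

```yaml
# Fragment of spec.template.spec in the Deployment (assumed addition).
spec:
  hostNetwork: true                     # share the node's network namespace
  dnsPolicy: ClusterFirstWithHostNet    # keep cluster DNS working with hostNetwork
  nodeSelector:
    monitor: prometheus-gs              # label taken from the manifest in this post
```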

    apiVersion: v1
    kind: Service
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        app: prometheus
    spec:
      ports:
      - name: web
        port: 9090
        protocol: TCP
        targetPort: web
      selector:
        app: prometheus
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: prometheus
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
      template:
        metadata:
          labels:
            app: prometheus
        spec:
          serviceAccountName: prometheus
          serviceAccount: prometheus
          initContainers:
          - name: "init-chown-data"
            image: "harbor.foxchan.com/sys/busybox:latest"
            imagePullPolicy: "IfNotPresent"
            command: ["chown", "-R", "65534:65534", "/prometheus/data"]
            volumeMounts:
            - name: prometheusdata
              mountPath: /prometheus/data
              subPath: ""
          containers:
          - name: prometheus
            image: harbor.foxchan.com/prom/prometheus:v2.21.0
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9090
              name: web
              protocol: TCP
            args:
            - '--config.file=/etc/prometheus/prometheus.yml'
            - '--web.listen-address=:9090'
            - '--web.enable-admin-api'
            - '--web.enable-lifecycle'
            - '--log.level=info'
            - '--storage.tsdb.path=/prometheus/data'
            - '--storage.tsdb.retention=15d'
            volumeMounts:
            - name: config-volume
              mountPath: /etc/prometheus
            - name: prometheusdata
              mountPath: /prometheus/data
            - mountPath: /prometheus-rules
              name: prometheus-rules-all
          # A single volumes list; the original repeated the key, which is invalid YAML.
          volumes:
          - name: config-volume
            configMap:
              name: prometheus   # must match the ConfigMap name in 02-prom-cm.yaml
          - hostPath:
              path: /data/prometheus
            name: prometheusdata
          - name: prometheus-rules-all
            projected:
              defaultMode: 420
              sources:
              - configMap:
                  name: prometheus-k8s-rules
              - configMap:
                  name: prometheus-nodeexporter-rules
          dnsPolicy: ClusterFirst
          nodeSelector:
            monitor: prometheus-gs

prom-node-rules-cm.yaml

    apiVersion: v1
    data:
      node-exporter.rules.yml: |
        groups:
        - name: node-exporter.rules
          rules:
          - expr: |
              count without (cpu) (
                count without (mode) (
                  node_cpu_seconds_total{job="node-exporter"}
                )
              )
            record: instance:node_num_cpu:sum
          - expr: |
              1 - avg without (cpu, mode) (
                rate(node_cpu_seconds_total{job="node-exporter", mode="idle"}[1m])
              )
            record: instance:node_cpu_utilisation:rate1m
          - expr: |
              (
                node_load1{job="node-exporter"}
              /
                instance:node_num_cpu:sum{job="node-exporter"}
              )
            record: instance:node_load1_per_cpu:ratio
          - expr: |
              1 - (
                node_memory_MemAvailable_bytes{job="node-exporter"}
              /
                node_memory_MemTotal_bytes{job="node-exporter"}
              )
            record: instance:node_memory_utilisation:ratio
          - expr: |
              rate(node_vmstat_pgmajfault{job="node-exporter"}[1m])
            record: instance:node_vmstat_pgmajfault:rate1m
          - expr: |
              rate(node_disk_io_time_seconds_total{job="node-exporter", device=~"nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|dasd.+"}[1m])
            record: instance_device:node_disk_io_time_seconds:rate1m
          - expr: |
              rate(node_disk_io_time_weighted_seconds_total{job="node-exporter", device=~"nvme.+|rbd.+|sd.+|vd.+|xvd.+|dm-.+|dasd.+"}[1m])
            record: instance_device:node_disk_io_time_weighted_seconds:rate1m
          - expr: |
              sum without (device) (
                rate(node_network_receive_bytes_total{job="node-exporter", device!="lo"}[1m])
              )
            record: instance:node_network_receive_bytes_excluding_lo:rate1m
          - expr: |
              sum without (device) (
                rate(node_network_transmit_bytes_total{job="node-exporter", device!="lo"}[1m])
              )
            record: instance:node_network_transmit_bytes_excluding_lo:rate1m
          - expr: |
              sum without (device) (
                rate(node_network_receive_drop_total{job="node-exporter", device!="lo"}[1m])
              )
            record: instance:node_network_receive_drop_excluding_lo:rate1m
          - expr: |
              sum without (device) (
                rate(node_network_transmit_drop_total{job="node-exporter", device!="lo"}[1m])
              )
            record: instance:node_network_transmit_drop_excluding_lo:rate1m
    kind: ConfigMap
    metadata:
      name: prometheus-nodeexporter-rules
      namespace: kube-system

prom-k8s-rules-cm.yaml

The remaining rules are too large to include here and are kept in a git repository.
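The recording rules above select series with `job="node-exporter"`, while the ConfigMap shown earlier defines no scrape job with that name. One possible way to provide it, given that the DaemonSet runs with hostNetwork and listens on port 9400, is a node-discovery job that rewrites the kubelet port. This is an assumption about the missing piece, not the author's configuration.

```yaml
# Sketch: would be appended under scrape_configs in prometheus.yml.
- job_name: 'node-exporter'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  # Node discovery yields <node address>:<kubelet port> (10250 by default);
  # swap in the port node_exporter listens on.
  - source_labels: [__address__]
    regex: '(.*):10250'
    replacement: '${1}:9400'
    target_label: __address__
  # Copy node labels onto the scraped series, as the other node jobs do.
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
```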

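With cadvisor in place, almost every container runtime metric is available, but it cannot help in the following situations:

Those are covered by kube-state-metrics. It is built on client-go, polls the Kubernetes API, and converts the structured information about Kubernetes objects into metrics.

The metrics exposed by kube-state-metrics fall into three categories according to their stage:

Compatibility matrix between kube-state-metrics and Kubernetes versions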

| kube-state-metrics | Kubernetes 1.15 | Kubernetes 1.16 | Kubernetes 1.17 | Kubernetes 1.18 | Kubernetes 1.19 |
|---|---|---|---|---|---|
| v1.8.0 | ✓ | - | - | - | - |
| v1.9.7 | - | ✓ | - | - | - |
| v2.0.0-alpha.1 | - | - | ✓ | ✓ | ✓ |
| master | - | - | ✓ | ✓ | ✓ |

`✓` Fully supported version range.

`-` The Kubernetes cluster has features the client-go library can't use (additional API objects, deprecated APIs, etc).

Create the RBAC resources

01-kube-state-rbac.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.0.0-alpha
      name: kube-state-metrics
      namespace: kube-system
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - apps
      resourceNames:
      - kube-state-metrics
      resources:
      - statefulsets
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.0.0-alpha
      name: kube-state-metrics
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - secrets
      - nodes
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
      verbs:
      - list
      - watch
      - get
    - apiGroups:
      - extensions
      resources:
      - daemonsets
      - deployments
      - replicasets
      - ingresses
      verbs:
      - list
      - watch
    - apiGroups:
      - apps
      resources:
      - statefulsets
      - daemonsets
      - deployments
      - replicasets
      verbs:
      - list
      - watch
      - get
    - apiGroups:
      - batch
      resources:
      - cronjobs
      - jobs
      verbs:
      - list
      - watch
    - apiGroups:
      - autoscaling
      resources:
      - horizontalpodautoscalers
      verbs:
      - list
      - watch
    - apiGroups:
      - authentication.k8s.io
      resources:
      - tokenreviews
      verbs:
      - create
    - apiGroups:
      - authorization.k8s.io
      resources:
      - subjectaccessreviews
      verbs:
      - create
    - apiGroups:
      - policy
      resources:
      - poddisruptionbudgets
      verbs:
      - list
      - watch
    - apiGroups:
      - certificates.k8s.io
      resources:
      - certificatesigningrequests
      verbs:
      - list
      - watch
    - apiGroups:
      - storage.k8s.io
      resources:
      - storageclasses
      - volumeattachments
      verbs:
      - list
      - watch
    - apiGroups:
      - admissionregistration.k8s.io
      resources:
      - mutatingwebhookconfigurations
      - validatingwebhookconfigurations
      verbs:
      - list
      - watch
    - apiGroups:
      - networking.k8s.io
      resources:
      - networkpolicies
      verbs:
      - list
      - watch
    - apiGroups:
      - coordination.k8s.io
      resources:
      - leases
      verbs:
      - list
      - watch
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.0.0-alpha
      name: kube-state-metrics
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.0.0-alpha
      name: kube-state-metrics
      namespace: kube-system   # same namespace as the Role it binds
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kube-state-metrics
    subjects:
    - kind: ServiceAccount
      name: kube-state-metrics
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.0.0-alpha
      name: kube-state-metrics
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
    - kind: ServiceAccount
      name: kube-state-metrics
      namespace: kube-system

Create the StatefulSet

02-kube-state-sts.yaml

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.0.0-alpha
      name: kube-state-metrics
      namespace: kube-system
    spec:
      replicas: 2
      selector:
        matchLabels:
          app.kubernetes.io/name: kube-state-metrics
      serviceName: kube-state-metrics
      template:
        metadata:
          labels:
            app.kubernetes.io/name: kube-state-metrics
            app.kubernetes.io/version: 2.0.0-alpha
        spec:
          containers:
          - args:
            - --pod=$(POD_NAME)
            - --pod-namespace=$(POD_NAMESPACE)
            env:
            - name: POD_NAME
              value: ""
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              value: ""
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            image: harbor.foxchan.com/coreos/kube-state-metrics:v2.0.0-alpha.1
            livenessProbe:
              httpGet:
                path: /healthz
                port: 8080
              initialDelaySeconds: 5
              timeoutSeconds: 5
            name: kube-state-metrics
            ports:
            - containerPort: 8080
              name: http-metrics
            - containerPort: 8081
              name: telemetry
            readinessProbe:
              httpGet:
                path: /
                port: 8081
              initialDelaySeconds: 5
              timeoutSeconds: 5
            securityContext:
              runAsUser: 65534
          nodeSelector:
            kubernetes.io/os: linux
          serviceAccountName: kube-state-metrics
      volumeClaimTemplates: []
    ---
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: "8080"
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.0.0-alpha
      name: kube-state-metrics
      namespace: kube-system
    spec:
      clusterIP: None
      ports:
      - name: http-metrics
        port: 8080
        targetPort: http-metrics
      - name: telemetry
        port: 8081
        targetPort: telemetry
      selector:
        app.kubernetes.io/name: kube-state-metrics

Newer Kubernetes versions serve these metrics over HTTPS by default: kube-controller-manager on port 10257 and kube-scheduler on port 10259.

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-controller-manager
      namespace: kube-system
      labels:
        component: kube-controller-manager # matches the labels on the Pod so that Endpoints are generated automatically
    spec:
      selector:
        component: kube-controller-manager
      type: ClusterIP
      clusterIP: None
      ports:
      - name: https-metrics
        port: 10257
        protocol: TCP
        targetPort: 10257
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-scheduler
      namespace: kube-system
      labels:
        component: kube-scheduler # matches the labels on the Pod so that Endpoints are generated automatically
    spec:
      selector:
        component: kube-scheduler
      type: ClusterIP
      clusterIP: None
      ports:
      - name: https-metrics
        port: 10259
        targetPort: 10259
        protocol: TCP

By default, kube-controller-manager and kube-scheduler bind to 127.0.0.1, so the listen address has to be changed.

Access to the metrics endpoints is subject to certificate verification, which has to be skipped.

Edit kube-controller-manager.yaml and kube-scheduler.yaml and add:

    --bind-address=0.0.0.0
    --authorization-always-allow-paths=/healthz,/metrics

The corresponding Prometheus scrape jobs:

    - job_name: 'kubernetes-controller-manager'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: kube-system;kube-controller-manager;https-metrics
    - job_name: 'kubernetes-scheduler'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: kube-system;kube-scheduler;https-metrics

Blackbox exporter is one of the official exporters. It collects monitoring data by probing targets over HTTP, DNS, TCP and ICMP.

Blackbox_exporter use cases
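One use case follows from the `kubernetes-services` job defined earlier: a Service is probed through the blackbox exporter only when it carries the `prometheus.io/should_be_probed` annotation. A minimal sketch of such an opt-in, where the Service name, selector and ports are hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-web            # hypothetical
  namespace: default
  annotations:
    # Matched by the keep rule on
    # __meta_kubernetes_service_annotation_prometheus_io_should_be_probed
    prometheus.io/should_be_probed: "true"
spec:
  selector:
    app: my-web           # hypothetical
  ports:
  - name: http
    port: 80
    targetPort: 8080
```

Prometheus then passes the Service address as the `target` parameter to the exporter at blackbox-exporter.default.svc.cluster.local:9115, which probes it with the `http_2xx` module.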

yaml

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/scrape: 'true'
      labels:
        app: blackbox-exporter
      name: blackbox-exporter
    spec:
      ports:
      - name: blackbox
        port: 9115
        protocol: TCP
      selector:
        app: blackbox-exporter
      type: ClusterIP
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app: blackbox-exporter
      name: blackbox-exporter
    data:
      blackbox.yml: |-
        modules:
          http_2xx:
            prober: http
            timeout: 30s
            http:
              valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
              valid_status_codes: [200,302,301,401,404]
              method: GET
              preferred_ip_protocol: "ip4"
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: blackbox-exporter
      name: blackbox-exporter
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: blackbox-exporter
      template:
        metadata:
          labels:
            app: blackbox-exporter
        spec:
          containers:
          - image: harbor.foxchan.com/prom/blackbox-exporter:v0.16.0
            imagePullPolicy: IfNotPresent
            name: blackbox-exporter
            ports:
            - name: blackbox-port
              containerPort: 9115
            readinessProbe:
              tcpSocket:
                port: 9115
              initialDelaySeconds: 5
              timeoutSeconds: 5
            volumeMounts:
            - name: config
              mountPath: /etc/blackbox_exporter
            args:
            - --config.file=/etc/blackbox_exporter/blackbox.yml
            - --log.level=debug
            - --web.listen-address=:9115
          volumes:
          - name: config
            configMap:
              name: blackbox-exporter
          nodeSelector:
            monitorexport: "blackbox"
          tolerations:
          - key: "node-role.kubernetes.io/master"
            effect: "NoSchedule"

Scenario: because the volume of collected data is very large, several Prometheus servers are deployed. Collection is sharded by business domain (one k8s cluster each), which reduces the load on any single Prometheus server.

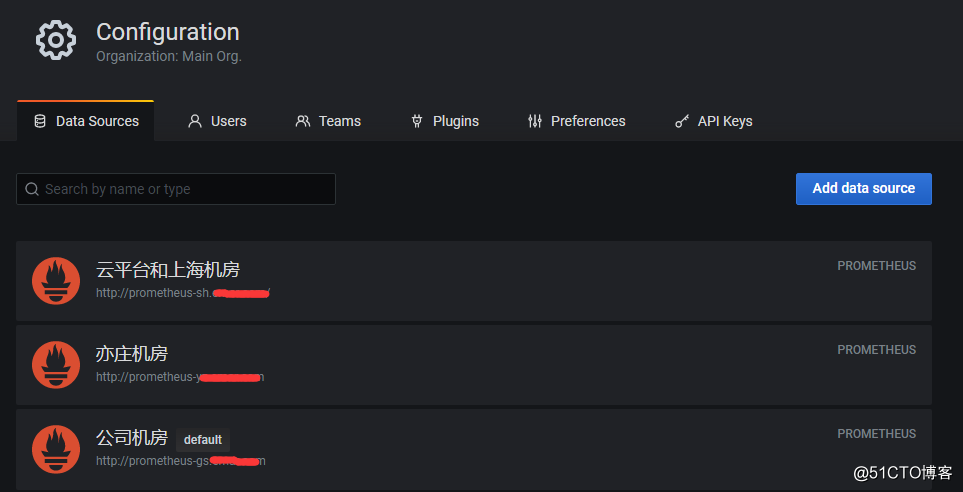

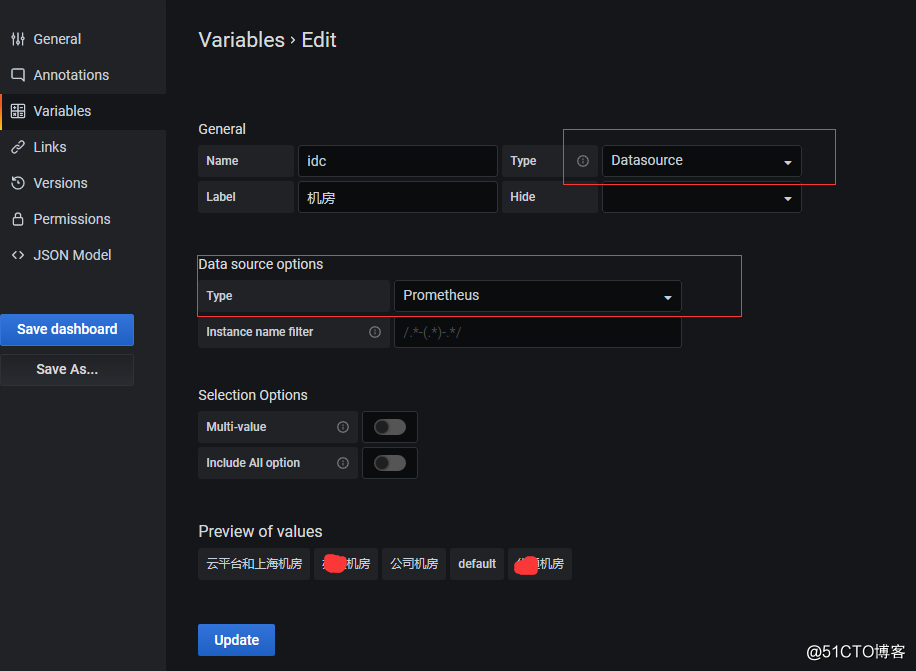

The Prometheus entries in the screenshots are my data sources from different data centers.
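When several Prometheus servers each scrape one cluster, it can help to stamp every server's data with an identifying label so that series from different data centers stay distinguishable once they are combined. A minimal sketch using `external_labels`; the label names and values are hypothetical and would differ on each server:

```yaml
# Fragment of prometheus.yml on one shard.
global:
  scrape_interval: 15s
  evaluation_interval: 15s
  external_labels:
    cluster: idc-a-k8s    # hypothetical: which cluster this server scrapes
    idc: idc-a            # hypothetical: which data center it runs in
```

These labels are attached when data leaves the server (federation, remote write, alerts sent to Alertmanager) and do not appear in local query results.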


Original article: https://blog.51cto.com/foxhound/2543109