Tags: process, bar, params, tensor, dimension, info, type, star, and

import numpy as np
import matplotlib.pyplot as plt
from tensorflow import keras
# Import everything from tensorflow.keras so the standalone keras package is not mixed in
from tensorflow.keras.layers import Input, Dense, Dropout, Activation, Conv2D, MaxPool2D, Flatten
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Model
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.callbacks import TensorBoard

%load_ext tensorboard
%tensorboard --logdir ./log/train

# Once the extension has been loaded, use %reload_ext to load it again
%reload_ext tensorboard
%tensorboard --logdir ./log/train

(x_train,y_train),(x_test,y_test) = mnist.load_data()

# Visualize the data
def image(data, row, col):
    # Show the first row*col images in a row x col grid
    images = data[:col * row]
    fig, axs = plt.subplots(row, col)
    idx = 0
    for i in range(row):
        for j in range(col):
            axs[i, j].imshow(images[idx, :, :], cmap='gray')
            axs[i, j].axis('off')
            idx += 1
    plt.show()

image(x_train, 5, 5)

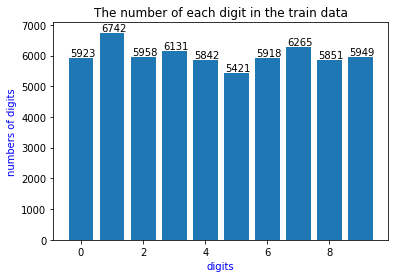

# Count how many samples each digit has in the training set
temp = np.unique(y_train)
y_train_numbers = []
for v in temp:
    y_train_numbers.append(np.sum(y_train == v))

plt.title("The number of each digit in the train data")
plt.bar(temp, y_train_numbers)
plt.xlabel('digits', color='b')
plt.ylabel('numbers of digits', color='b')
# Write the count above each bar
for x, y in zip(range(len(y_train_numbers)), y_train_numbers):
    plt.text(x + 0.05, y + 0.05, '%d' % y, ha='center', va='bottom')
plt.show()
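The per-digit counting loop above can also be written without an explicit loop. The following is a minimal sketch, assuming the labels are small non-negative integers as in MNIST; the `labels` array here is a made-up ten-element sample, not the real `y_train`.

```python
import numpy as np

# Hypothetical sample of digit labels standing in for y_train
labels = np.array([5, 0, 4, 1, 9, 2, 1, 3, 1, 4], dtype=np.uint8)

# np.bincount counts occurrences of each integer value;
# minlength=10 guarantees one slot per digit even if a digit is absent
counts = np.bincount(labels, minlength=10)

for digit, count in enumerate(counts):
    print(digit, count)
```

With the real data, `np.bincount(y_train, minlength=10)` should give the same numbers as `y_train_numbers`.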

# Normalize the data
def normalize(x):
    # Scale pixel values from [0, 255] to [0, 1]
    x = x.astype(np.float32) / 255.0
    return x

# Add a channel dimension: (N, 28, 28) -> (N, 28, 28, 1)
x_train = x_train.reshape(x_train.shape[0], 28, 28, 1).astype('float32')
x_test = x_test.reshape(x_test.shape[0], 28, 28, 1).astype('float32')
x_train = normalize(x_train)
x_test = normalize(x_test)

# Build the model


import numpy as np
import matplotlib.pyplot as plt
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dense, Dropout, Flatten

# Load the TensorBoard notebook extension
%load_ext tensorboard
# Point TensorBoard at the directory it reads from
# (./log is the directory where the TensorBoard files are stored)
%tensorboard --logdir ./log

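def model_1():
    # Build the model: the input is a 28*28*1 image, the output layer has 10 neurons
    model = Sequential()
    # First convolutional layer: 8 filters with a 5*5 window; 'same' padding keeps the 28*28 size
    model.add(Conv2D(filters=8, kernel_size=(5, 5), padding='same',
                     input_shape=(28, 28, 1), activation='relu'))
    # First pooling layer
    model.add(MaxPooling2D(pool_size=(2, 2)))  # feature maps are now 14*14
    # Second convolutional layer: 16 filters (kernels) with a 5*5 window
    # After it there are 16 feature maps, each 14*14
    model.add(Conv2D(filters=16, kernel_size=(5, 5), padding='same',
                     activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))  # feature maps are now 7*7
    # Flatten layer: flattens the second pooling layer's output to 1-D, 16*7*7 = 784
    model.add(Flatten())
    # First fully connected layer
    model.add(Dense(100, activation='relu'))
    # Dropout: randomly zeroes 25% of the activations during training to reduce overfitting
    model.add(Dropout(0.25))
    # Second fully connected layer; it is the output layer, so it uses softmax
    model.add(Dense(10, activation='softmax'))
    return model

model_demo1 = model_1()
# Print the model summary
model_demo1.summary()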

Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_2 (Conv2D)            (None, 28, 28, 8)         208
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 14, 14, 8)         0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 14, 14, 16)        3216
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 7, 7, 16)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 784)               0
_________________________________________________________________
dense_2 (Dense)              (None, 100)               78500
_________________________________________________________________
dropout_1 (Dropout)          (None, 100)               0
_________________________________________________________________
dense_3 (Dense)              (None, 10)                1010
=================================================================
Total params: 82,934
Trainable params: 82,934
Non-trainable params: 0
_________________________________________________________________
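The Param # column in the summary above can be checked by hand. The sketch below uses two small helper functions, `conv2d_params` and `dense_params`, which are illustrative names and not part of Keras. It assumes each layer has one bias per filter or unit, which is the Keras default.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights of every kernel plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(in_units, out_units):
    """One weight per input-output pair plus one bias per output unit."""
    return (in_units + 1) * out_units


# Layer names follow the summary printed above
layer_params = {
    "conv2d_2": conv2d_params(5, 5, 1, 8),     # 1 input channel, 8 filters
    "conv2d_3": conv2d_params(5, 5, 8, 16),    # 8 input channels, 16 filters
    "dense_2": dense_params(7 * 7 * 16, 100),  # 784 flattened inputs
    "dense_3": dense_params(100, 10),
}
total_params = sum(layer_params.values())

for name, count in layer_params.items():
    print(f"{name}: {count}")
print("total:", total_params)
```

Pooling, flatten and dropout layers have no trainable parameters, so they do not appear in the dictionary.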

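batch_size = 200

# Visualize the training process with TensorBoard
# ./log is the directory where the TensorBoard files are stored
model_demo1.compile(loss='sparse_categorical_crossentropy',
                    optimizer='adam', metrics=['acc'])

from tensorflow.keras.callbacks import TensorBoard

# write_grads and batch_size are legacy arguments that TensorFlow 2.x ignores
# with a warning; they are kept here to match the original run
tbCallBack = TensorBoard(log_dir='./log', histogram_freq=1,
                         write_graph=True,
                         write_grads=True,
                         batch_size=batch_size,
                         write_images=True)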

WARNING:tensorflow:`write_grads` will be ignored in TensorFlow 2.0 for the `TensorBoard` Callback.

WARNING:tensorflow:`batch_size` is no longer needed in the `TensorBoard` Callback and will be ignored in TensorFlow 2.0.

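# Start training
# Note: validation_split and validation_data are both passed here. The Keras
# documentation says validation_data overrides validation_split, so passing
# only one of them is clearer.
history = model_demo1.fit(x=x_train, y=y_train, validation_split=0.2,
                          epochs=1, batch_size=200, verbose=1,
                          validation_data=(x_test, y_test),
                          callbacks=[tbCallBack])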

240/240 [==============================] - 32s 132ms/step - loss: 0.4900 - acc: 0.8523 - val_loss: 0.1351 - val_acc: 0.9604
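The `240/240` in the progress bar above is the number of batches per epoch. A minimal sketch of the arithmetic follows, assuming the 20% split is taken out of the 60000 training images before batching. The variable names are illustrative, and the split is written as an integer percentage to avoid floating-point rounding.

```python
num_samples = 60000   # size of the MNIST training set
val_percent = 20      # validation_split=0.2 expressed as a percentage
batch_size = 200

# Samples left for training after the validation split is held out
num_train = num_samples * (100 - val_percent) // 100

# Ceiling division: a final partial batch would still count as one step
steps_per_epoch = -(-num_train // batch_size)

print(num_train, steps_per_epoch)
```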

%load_ext tensorboard
# Point TensorBoard at the directory it reads from
# (./log is the directory where the TensorBoard files are stored)
%tensorboard --logdir ./log

The tensorboard extension is already loaded. To reload it, use:

%reload_ext tensorboard

Reusing TensorBoard on port 6008 (pid 975), started 0:13:42 ago. (Use '!kill 975' to kill it.)

x_train.shape

(60000, 28, 28, 1)

import tensorflow as tf

# A LeNet-style network
def model_LeNet():
    model = tf.keras.Sequential()
    # Pooling layer applied directly to the input: 28*28 -> 14*14
    model.add(tf.keras.layers.MaxPool2D((2, 2), input_shape=(28, 28, 1)))
    # Convolutional layer: 16 kernels of size 5*5 on a 1-channel input,
    # 16*(5*5*1+1) = 416 parameters, output shape (10, 10, 16)
    model.add(tf.keras.layers.Conv2D(16, (5, 5), activation='relu'))
    # Pooling layer, output shape (5, 5, 16)
    model.add(tf.keras.layers.MaxPool2D((2, 2)))
    # Flatten, output shape (400,)
    model.add(tf.keras.layers.Flatten())
    # Fully connected layer, 400*120+120 = 48120 parameters
    model.add(tf.keras.layers.Dense(120, activation='relu'))
    # Fully connected layer, 120*84+84 = 10164 parameters
    model.add(tf.keras.layers.Dense(84, activation='relu'))
    # Output layer, 84*10+10 = 850 parameters
    model.add(tf.keras.layers.Dense(10, activation='softmax'))
    return model

model_demo2 = model_LeNet()
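The shapes in `model_LeNet` can be traced with plain arithmetic. This is a small sketch, assuming the Keras defaults used above: `'valid'` padding with stride 1 for `Conv2D`, and a stride equal to the pool size for `MaxPool2D`. The helper names are illustrative.

```python
def valid_conv_out(size, kernel):
    """Output side length of a stride-1 convolution without padding."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Output side length of non-overlapping pooling (stride == pool size)."""
    return size // pool


side = 28
side = pool_out(side)            # first MaxPool2D: 28 -> 14
side = valid_conv_out(side, 5)   # Conv2D 5*5: 14 -> 10
side = pool_out(side)            # second MaxPool2D: 10 -> 5
flat_units = side * side * 16    # Flatten over 16 channels

# The convolution sees a single input channel, since pooling keeps the channel count
conv_params = (5 * 5 * 1 + 1) * 16

print(side, flat_units, conv_params)
```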

model_demo2.compile(loss='sparse_categorical_crossentropy',
                    optimizer='adam', metrics=['acc'])

from tensorflow.keras.callbacks import TensorBoard

# write_grads and batch_size are legacy arguments that TensorFlow 2.x ignores
# with a warning; they are kept here to match the original run
tbCallBack = TensorBoard(log_dir='./log', histogram_freq=1,
                         write_graph=True,
                         write_grads=True,
                         batch_size=batch_size,
                         write_images=True)

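# Start training
# Note: validation_split and validation_data are both passed here. The Keras
# documentation says validation_data overrides validation_split, so passing
# only one of them is clearer.
history = model_demo2.fit(x=x_train, y=y_train, validation_split=0.2,
                          epochs=1, batch_size=200, verbose=1,
                          validation_data=(x_test, y_test),
                          callbacks=[tbCallBack])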

WARNING:tensorflow:`write_grads` will be ignored in TensorFlow 2.0 for the `TensorBoard` Callback.

WARNING:tensorflow:`batch_size` is no longer needed in the `TensorBoard` Callback and will be ignored in TensorFlow 2.0.

240/240 [==============================] - 6s 24ms/step - loss: 0.4880 - acc: 0.8655 - val_loss: 0.1780 - val_acc: 0.9462
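The `loss` values in the training logs come from `sparse_categorical_crossentropy`, which takes the integer label directly rather than a one-hot vector. Below is a minimal pure-Python sketch of the per-sample formula, the negative log of the probability assigned to the true class. The probability vectors are invented for illustration, and Keras additionally averages over the batch and clips probabilities for numerical stability.

```python
import math


def sparse_categorical_crossentropy(probs, label):
    """Per-sample loss: negative log of the probability of the true class."""
    return -math.log(probs[label])


# An untrained 10-class model that predicts every digit with equal probability
uniform_probs = [0.1] * 10
loss_uniform = sparse_categorical_crossentropy(uniform_probs, label=3)

# A confident, correct prediction for digit 3
confident_probs = [0.01] * 10
confident_probs[3] = 0.91
loss_confident = sparse_categorical_crossentropy(confident_probs, label=3)

print(loss_uniform, loss_confident)
```

The uniform case gives ln(10), roughly 2.30, which is the loss to expect from a 10-class model before it has learned anything.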

%load_ext tensorboard
# Point TensorBoard at the directory it reads from
# (./log is the directory where the TensorBoard files are stored)
%tensorboard --logdir ./log

The tensorboard extension is already loaded. To reload it, use:

%reload_ext tensorboard

Reusing TensorBoard on port 6008 (pid 975), started 0:28:04 ago. (Use '!kill 975' to kill it.)

x_train[0].shape

(28, 28, 1)

def lenet():
    # The original LeNet expects a 32*32 input; here the input is 28*28,
    # so the first convolution uses a 1*1 kernel and keeps the 28*28 size
    model = keras.Sequential()
    model.add(keras.layers.Conv2D(6, (1, 1), activation='relu', input_shape=(28, 28, 1)))
    # Output shape 28*28*6
    # Pooling layer, no parameters, output shape (14, 14, 6)
    model.add(tf.keras.layers.MaxPool2D((2, 2)))
    # Convolutional layer: 16 kernels of size 5*5, 16*(5*5*6+1) = 2416 parameters,
    # output shape (10, 10, 16)
    model.add(tf.keras.layers.Conv2D(16, (5, 5), activation='relu'))
    # Pooling layer, output shape (5, 5, 16)
    model.add(tf.keras.layers.MaxPool2D((2, 2)))
    # Flatten, output shape (400,)
    model.add(tf.keras.layers.Flatten())
    # Fully connected layer, 400*120+120 = 48120 parameters
    model.add(tf.keras.layers.Dense(120, activation='relu'))
    # Fully connected layer, 120*84+84 = 10164 parameters
    model.add(tf.keras.layers.Dense(84, activation='relu'))
    # Output layer, 84*10+10 = 850 parameters
    model.add(tf.keras.layers.Dense(10, activation='softmax'))
    model.summary()
    return model
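The first layer of `lenet()` differs from the classic LeNet-5 design, which applies a 5*5 convolution to a 32*32 input. The sketch below compares the two under the assumption of stride-1 `'valid'` convolutions. Both reach a 28*28*6 feature map, but the 1*1 variant has far fewer parameters and does no spatial filtering. The helper names are illustrative.

```python
def valid_conv_out(size, kernel):
    """Output side length of a stride-1 convolution without padding."""
    return size - kernel + 1


def conv2d_params(kernel, in_channels, filters):
    """Weights of every square kernel plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters


# Classic LeNet-5 first layer: 5*5 kernel on a 32*32 single-channel input
classic_side = valid_conv_out(32, 5)
classic_params = conv2d_params(5, 1, 6)

# Variant used in lenet(): 1*1 kernel on the 28*28 MNIST input
variant_side = valid_conv_out(28, 1)
variant_params = conv2d_params(1, 1, 6)

print(classic_side, classic_params)
print(variant_side, variant_params)
```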


Original article: https://www.cnblogs.com/hufeng2021/p/14932293.html