With Caffe installed, let's run the first example that ships with it: an experiment on MNIST. See http://caffe.berkeleyvision.org/gathered/examples/mnist.html

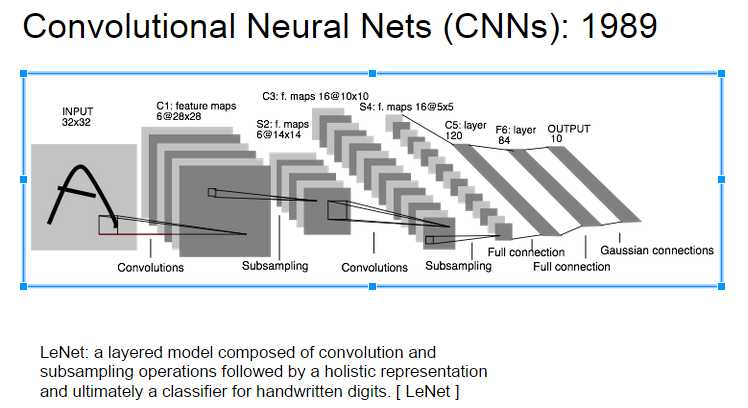

(1) Caffe's bundled MNIST example uses the LeNet network architecture.

1. Introduction:

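The LeNet paper is "Gradient-Based Learning Applied to Document Recognition" by Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner, published in Proceedings of the IEEE in 1998:

http://yann.lecun.com/exdb/publis/pdf/lecun-01a.pdf. It is one of the first classic papers on CNNs.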

MNIST is a database of handwritten digits. LeNet, a CNN, was widely used in the United States to recognize handwriting on bank checks.

2. LeNet network architecture

(2) Running the experiment on MNIST

All commands are run from the Caffe root directory.

The contents of lenet_train_test.prototxt are as follows:

    name: "LeNet"
    layers {
      name: "mnist"
      type: DATA
      top: "data"
      top: "label"
      data_param {
        source: "examples/mnist/mnist_train_lmdb"
        backend: LMDB
        batch_size: 64
      }
      transform_param {
        scale: 0.00390625
      }
      include: { phase: TRAIN }
    }
    layers {
      name: "mnist"
      type: DATA
      top: "data"
      top: "label"
      data_param {
        source: "examples/mnist/mnist_test_lmdb"
        backend: LMDB
        batch_size: 100
      }
      transform_param {
        scale: 0.00390625
      }
      include: { phase: TEST }
    }
    layers {
      name: "conv1"
      type: CONVOLUTION
      bottom: "data"
      top: "conv1"
      blobs_lr: 1
      blobs_lr: 2
      convolution_param {
        num_output: 20
        kernel_size: 5
        stride: 1
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
        }
      }
    }
    layers {
      name: "pool1"
      type: POOLING
      bottom: "conv1"
      top: "pool1"
      pooling_param {
        pool: MAX
        kernel_size: 2
        stride: 2
      }
    }
    layers {
      name: "conv2"
      type: CONVOLUTION
      bottom: "pool1"
      top: "conv2"
      blobs_lr: 1
      blobs_lr: 2
      convolution_param {
        num_output: 50
        kernel_size: 5
        stride: 1
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
        }
      }
    }
    layers {
      name: "pool2"
      type: POOLING
      bottom: "conv2"
      top: "pool2"
      pooling_param {
        pool: MAX
        kernel_size: 2
        stride: 2
      }
    }
    layers {
      name: "ip1"
      type: INNER_PRODUCT
      bottom: "pool2"
      top: "ip1"
      blobs_lr: 1
      blobs_lr: 2
      inner_product_param {
        num_output: 500
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
        }
      }
    }
    layers {
      name: "relu1"
      type: RELU
      bottom: "ip1"
      top: "ip1"
    }
    layers {
      name: "ip2"
      type: INNER_PRODUCT
      bottom: "ip1"
      top: "ip2"
      blobs_lr: 1
      blobs_lr: 2
      inner_product_param {
        num_output: 10
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
        }
      }
    }
    layers {
      name: "accuracy"
      type: ACCURACY
      bottom: "ip2"
      bottom: "label"
      top: "accuracy"
      include: { phase: TEST }
    }
    layers {
      name: "loss"
      type: SOFTMAX_LOSS
      bottom: "ip2"
      bottom: "label"
      top: "loss"
    }
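To get a feel for what this network definition does to the data, here is a rough Python sketch that traces the blob sizes through the convolution and pooling layers. It assumes a single-channel 28 x 28 input (the usual MNIST image size, which the post itself does not state) and no padding; the helper names `out_size` and `lenet_shapes` are made up for illustration and are not part of Caffe. Caffe rounds pooling output sizes up rather than down, but every division here is exact, so the simple formula should give the same numbers.

```python
def out_size(size, kernel, stride):
    """Side length after an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


def lenet_shapes(side=28, channels=1):
    """Return [(layer name, channels, side length)] for the spatial layers.

    side=28 and channels=1 are assumptions about the MNIST input.
    """
    layers = [
        # (name, num_output or None to keep the channel count, kernel_size, stride)
        ("conv1", 20, 5, 1),
        ("pool1", None, 2, 2),
        ("conv2", 50, 5, 1),
        ("pool2", None, 2, 2),
    ]
    shapes = [("data", channels, side)]
    for name, num_output, kernel, stride in layers:
        side = out_size(side, kernel, stride)
        if num_output is not None:
            channels = num_output
        shapes.append((name, channels, side))
    return shapes


shapes = lenet_shapes()
for name, c, s in shapes:
    print(f"{name}: {c} x {s} x {s}")

# pool2 is flattened before it reaches the first inner product layer (ip1).
_, c, s = shapes[-1]
ip1_inputs = c * s * s
print("inputs to ip1:", ip1_inputs)

# The transform_param scale is exactly 1/256, mapping pixel values 0..255 into [0, 1).
print("scale equals 1/256:", 0.00390625 == 1 / 256)
```

Under these assumptions pool2 comes out as 50 x 4 x 4, so ip1 sees 800 inputs per image before mapping them to 500 outputs, and ip2 reduces those to the 10 digit classes.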

The contents of train_lenet.sh are:

    #!/usr/bin/env sh

    ./build/tools/caffe train --solver=examples/mnist/lenet_solver.prototxt

The script simply trains with the solver configuration defined in lenet_solver.prototxt.

The contents of lenet_solver.prototxt are:

    # The train/test net protocol buffer definition
    net: "examples/mnist/lenet_train_test.prototxt"
    # test_iter specifies how many forward passes the test should carry out.
    # In the case of MNIST, we have test batch size 100 and 100 test iterations,
    # covering the full 10,000 testing images.
    test_iter: 100
    # Carry out testing every 500 training iterations.
    test_interval: 500
    # The base learning rate, momentum and the weight decay of the network.
    base_lr: 0.01
    momentum: 0.9
    weight_decay: 0.0005
    # The learning rate policy
    lr_policy: "inv"
    gamma: 0.0001
    power: 0.75
    # Display every 100 iterations
    display: 100
    # The maximum number of iterations
    max_iter: 10000
    # snapshot intermediate results
    snapshot: 5000
    snapshot_prefix: "examples/mnist/lenet"
    # solver mode: CPU or GPU
    solver_mode: GPU
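The "inv" learning rate policy in this solver may be easier to picture with numbers. Caffe documents it as base_lr * (1 + gamma * iter) ^ (-power); the small Python sketch below evaluates that formula with the values from the solver file. The function name `inv_lr` is only an illustration, not a Caffe API.

```python
def inv_lr(iteration, base_lr=0.01, gamma=0.0001, power=0.75):
    """Learning rate under the "inv" policy: base_lr * (1 + gamma * iter) ** (-power)."""
    return base_lr * (1.0 + gamma * iteration) ** (-power)


# Print the rate at a few points between the start and max_iter (10000).
for it in (0, 500, 5000, 10000):
    print(f"iteration {it:>5}: lr = {inv_lr(it):.8f}")
```

The rate starts at base_lr and decays smoothly; by max_iter the factor is 2 ** -0.75, so the learning rate has dropped to a little under 60% of its initial value.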

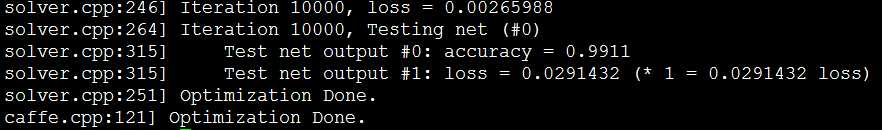

After a series of training iterations, the recognition accuracy on MNIST reaches 99.11%.
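As a quick sanity check on what that figure means, the sketch below converts the accuracy into a count of misclassified test images. It assumes the test pass covers test_iter x batch_size = 100 x 100 images, as the comments in the solver file describe; the variable names are just for illustration.

```python
test_iter = 100          # from lenet_solver.prototxt
test_batch_size = 100    # from the TEST data layer
accuracy = 0.9911        # accuracy reported above

images = test_iter * test_batch_size
correct = round(accuracy * images)
errors = images - correct

print(f"{images} test images, {correct} correct, {errors} misclassified")
```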

Original post: http://www.cnblogs.com/cookcoder-mr/p/4452119.html