1. Radial basis function

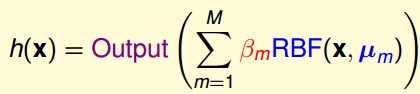

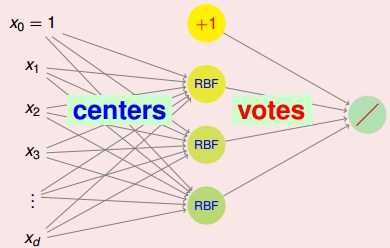

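An RBF is a function of some distance between a point and a center $\mu_m$. In effect, the distances from a point $x$ to the centers are used as a feature transform.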
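A minimal sketch of this feature transform, assuming a Gaussian RBF $\exp(-\beta\lVert x-\mu_m\rVert^2)$, which is the kernel used by the code in section 2. The function name `rbf_features`, the two centers, and `beta=0.5` are illustrative choices, not values from the original post.

```python
import numpy as np

def rbf_features(X, centers, beta):
    """Map each row x of X to [exp(-beta * ||x - mu_m||^2) for every center mu_m]."""
    # diff[i, m, :] = X[i] - centers[m], obtained by broadcasting
    diff = X[:, None, :] - centers[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=2)  # shape (n_samples, n_centers)
    return np.exp(-beta * sq_dist)

# Two hypothetical centers and three sample points in 2-D
centers = np.array([[0.0, 0.0], [2.0, 0.0]])
X = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])

G = rbf_features(X, centers, beta=0.5)
print(G.shape)  # one new feature per center
print(G)
```

A point that coincides with a center gets the feature value 1 for that center, and the value decays toward 0 as the point moves away. A larger `beta` makes the decay faster.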

The output method can be chosen freely according to need (for example SVM, logistic regression, and so on).
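To illustrate that the output step is interchangeable, the sketch below builds Gaussian RBF features once and fits two different scikit-learn models on them. The data, the hand-picked centers, `beta=0.1`, and `C=1.0` are all assumptions made for this example.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC

# Synthetic two-class data (assumed for illustration)
rng = np.random.RandomState(0)
X = np.vstack((rng.normal(3, 1.0, (50, 2)), rng.normal(-3, 1.0, (50, 2))))
y = np.hstack((np.ones(50), -np.ones(50)))

# Hypothetical centers fixed by hand; in practice they would be learned
centers = np.array([[3.0, 3.0], [-3.0, -3.0]])
beta = 0.1
sq_dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
G = np.exp(-beta * sq_dist)  # RBF feature matrix, shape (100, 2)

# The same features feed either output model
scores = {}
for model in (LogisticRegression(C=1.0), SVC(kernel="linear", C=1.0)):
    model.fit(G, y)
    scores[type(model).__name__] = model.score(G, y)  # training accuracy
print(scores)
```

Only the last step changes between the two runs; the RBF transform is computed once and reused.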

The key decisions are how to select the centers and which distance function to use.

2. Selecting the centers with k-means, then applying logistic regression

```python
import numpy as np
from matplotlib import pyplot as plt
from sklearn.base import BaseEstimator, ClassifierMixin
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression
# sklearn.cross_validation was removed; cross_val_score now lives here.
from sklearn.model_selection import cross_val_score


class KMeansRBF(ClassifierMixin, BaseEstimator):
    # BaseEstimator supplies get_params/set_params, so that
    # cross_val_score can clone the estimator for each fold.
    def __init__(self, n_clusters, beta, C):
        self.n_clusters = n_clusters
        self.beta = beta
        self.C = C

    def fit(self, X, y):
        # Step 1: k-means picks the RBF centers.
        km = KMeans(n_clusters=self.n_clusters)
        km.fit(X)
        self.ct = km.cluster_centers_
        # Step 2: logistic regression on the RBF features.
        G = self._nFeature(self.ct, X)
        lg = LogisticRegression(C=self.C)
        lg.fit(G, y)
        self.lg = lg
        self.classes_ = lg.classes_
        return self

    def predict(self, X):
        G = self._nFeature(self.ct, X)
        return self.lg.predict(G)

    def predict_proba(self, X):
        G = self._nFeature(self.ct, X)
        return self.lg.predict_proba(G)

    def _nFeature(self, cts, X):
        # G[i, m] = kernel(X[i], cts[m])
        G = np.zeros((X.shape[0], cts.shape[0]))
        for xi, x in enumerate(X):
            for ci, c in enumerate(cts):
                G[xi, ci] = self._kernel(x, c)
        return G

    def _kernel(self, x1, x2):
        # Gaussian RBF: exp(-beta * ||x1 - x2||^2)
        x = x1 - x2
        return np.exp(-self.beta * np.dot(x, x))

    def plot(self, X, y):
        pos = np.where(y == 1)
        neg = np.where(y == -1)
        x1 = X[pos[0], :]
        x2 = X[neg[0], :]
        plt.figure()
        plt.plot(x1[:, 0], x1[:, 1], 'o')
        plt.plot(x2[:, 0], x2[:, 1], 'o')
        plt.plot(self.ct[:, 0], self.ct[:, 1], 'ro')  # cluster centers
        # Evaluate the model on a dense grid around the data.
        xmax = np.max(X[:, 0]) + 5
        xmin = np.min(X[:, 0]) - 5
        ymax = np.max(X[:, 1]) + 5
        ymin = np.min(X[:, 1]) - 5
        numx = int((xmax - xmin) * 10)
        numy = int((ymax - ymin) * 10)
        total = numx * numy
        lx = np.linspace(xmin, xmax, numx)
        ly = np.linspace(ymin, ymax, numy)
        mgrid = np.meshgrid(lx, ly)
        px = np.hstack((mgrid[0].reshape(total, 1), mgrid[1].reshape(total, 1)))
        # Grid points where both class probabilities are nearly equal
        # trace out the decision boundary.
        pre = self.predict_proba(px)
        ind = np.where(abs(pre[:, 1] - pre[:, 0]) < 0.01)
        px = px[ind]
        plt.plot(px[:, 0], px[:, 1], 'yo')
        plt.show()


if __name__ == '__main__':
    # Two Gaussian blobs, labelled +1 and -1.
    x1 = np.random.normal(10, 6.0, (80, 2))
    x2 = np.random.normal(-10, 6.0, (80, 2))
    X = np.vstack((x1, x2))
    y = np.zeros(160)
    y[:80] = 1
    y[80:] = -1

    # Choose beta by 5-fold cross-validated accuracy.
    betas = np.linspace(0.001, 0.1, 100)
    k = range(100)
    score = np.zeros((100, 1), 'float')
    bestbeta = 0.001
    maxscore = -1
    for i, beta in enumerate(betas):
        krbf = KMeansRBF(2, beta, 1)
        scores = cross_val_score(krbf, X, y, scoring="accuracy", cv=5)
        score[i, 0] = scores.mean()
        if score[i, 0] > maxscore:
            maxscore = score[i, 0]
            bestbeta = beta

    plt.figure()
    plt.plot(k, score, 'b-')
    plt.show()
    print(bestbeta)

    # Refit with the best beta and draw the decision boundary.
    krbf = KMeansRBF(2, bestbeta, 1)
    krbf.fit(X, y)
    krbf.plot(X, y)
```
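As a cross-check on the hand-written class, the same three steps (k-means centers, Gaussian RBF features, logistic regression) can be sketched as a scikit-learn pipeline. This is an alternative arrangement, not the author's implementation. It relies on `KMeans.transform` returning Euclidean distances to the cluster centers; the helper names `gaussian_of_distance` and `make_kmeans_rbf`, the fixed random seeds, and `beta=0.01` are assumptions for this example.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer

def gaussian_of_distance(D, beta=0.01):
    # D holds Euclidean distances to each center, so square them
    # before applying exp(-beta * d^2).
    return np.exp(-beta * D ** 2)

def make_kmeans_rbf(n_clusters=2, beta=0.01, C=1.0):
    # KMeans acts as a transformer here: its transform() output is the
    # distance of every sample to every cluster center.
    return make_pipeline(
        KMeans(n_clusters=n_clusters, n_init=10, random_state=0),
        FunctionTransformer(gaussian_of_distance, kw_args={"beta": beta}),
        LogisticRegression(C=C),
    )

# Same kind of data as the script above, with a fixed seed
rng = np.random.RandomState(0)
X = np.vstack((rng.normal(10, 6.0, (80, 2)), rng.normal(-10, 6.0, (80, 2))))
y = np.hstack((np.ones(80), -np.ones(80)))

scores = cross_val_score(make_kmeans_rbf(beta=0.01), X, y,
                         scoring="accuracy", cv=5)
print(scores.mean())
```

Because the pipeline ends in a classifier, `cross_val_score` refits the k-means step inside every fold, so the centers are never computed from held-out data.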

Original article: http://www.cnblogs.com/porco/p/4516732.html