标签:

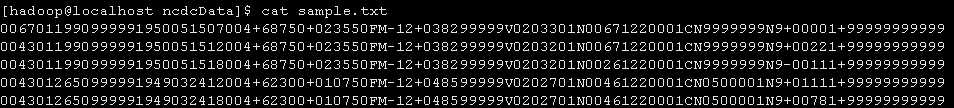

0067011990999991950051507004+68750+023550FM-12+038299999V0203301N00671220001CN9999999N9+00001+999999999990043011990999991950051512004+68750+023550FM-12+038299999V0203201N00671220001CN9999999N9+00221+999999999990043011990999991950051518004+68750+023550FM-12+038299999V0203201N00261220001CN9999999N9-00111+999999999990043012650999991949032412004+62300+010750FM-12+048599999V0202701N00461220001CN0500001N9+01111+999999999990043012650999991949032418004+62300+010750FM-12+048599999V0202701N00461220001CN0500001N9+00781+99999999999

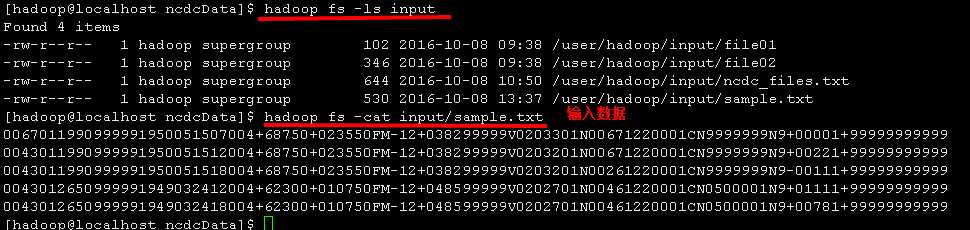

hadoop fs -put /home/hadoop/ncdcData/sample.txt input

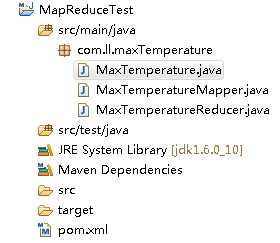

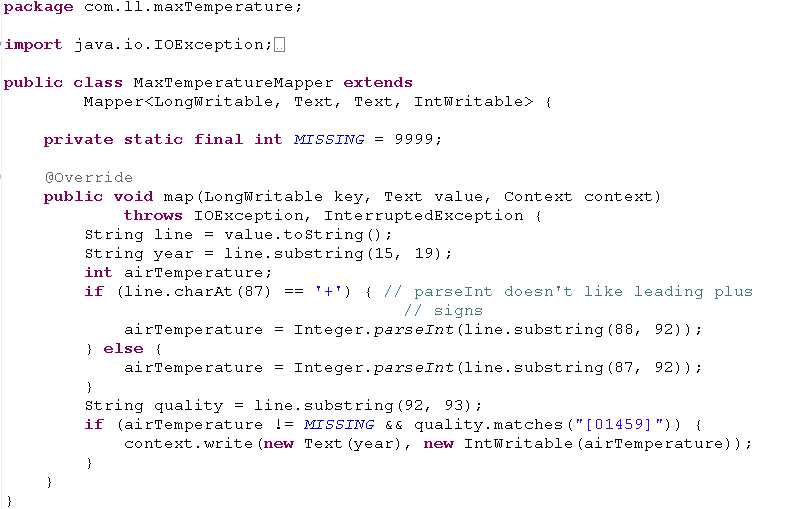

package com.ll.maxTemperature;import java.io.IOException;import org.apache.hadoop.io.IntWritable;import org.apache.hadoop.io.LongWritable;import org.apache.hadoop.io.Text;import org.apache.hadoop.mapreduce.Mapper;public class MaxTemperatureMapper extendsMapper<LongWritable, Text, Text, IntWritable> {private static final int MISSING = 9999;@Overridepublic void map(LongWritable key, Text value, Context context)throws IOException, InterruptedException {String line = value.toString();String year = line.substring(15, 19);int airTemperature;if (line.charAt(87) == ‘+‘) { // parseInt doesn‘t like leading plus// signsairTemperature = Integer.parseInt(line.substring(88, 92));} else {airTemperature = Integer.parseInt(line.substring(87, 92));}String quality = line.substring(92, 93);if (airTemperature != MISSING && quality.matches("[01459]")) {context.write(new Text(year), new IntWritable(airTemperature));}}}// ^^ MaxTemperatureMapper

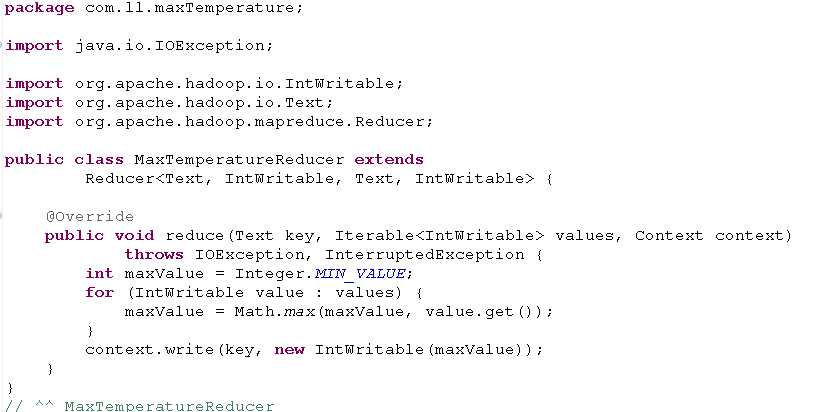

package com.ll.maxTemperature;import java.io.IOException;import org.apache.hadoop.io.IntWritable;import org.apache.hadoop.io.Text;import org.apache.hadoop.mapreduce.Reducer;public class MaxTemperatureReducer extendsReducer<Text, IntWritable, Text, IntWritable> {@Overridepublic void reduce(Text key, Iterable<IntWritable> values, Context context)throws IOException, InterruptedException {int maxValue = Integer.MIN_VALUE;for (IntWritable value : values) {maxValue = Math.max(maxValue, value.get());}context.write(key, new IntWritable(maxValue));}}// ^^ MaxTemperatureReducer

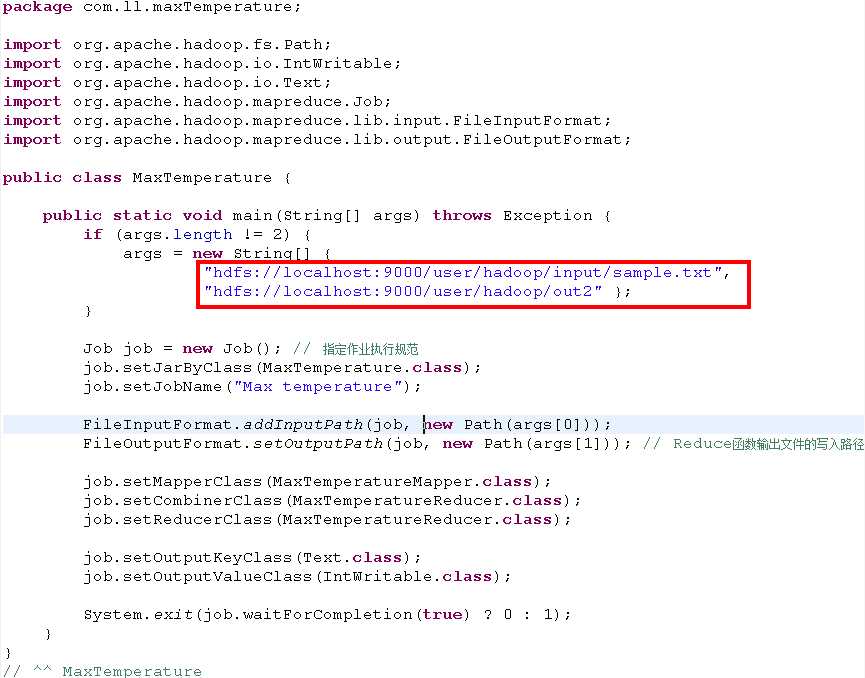

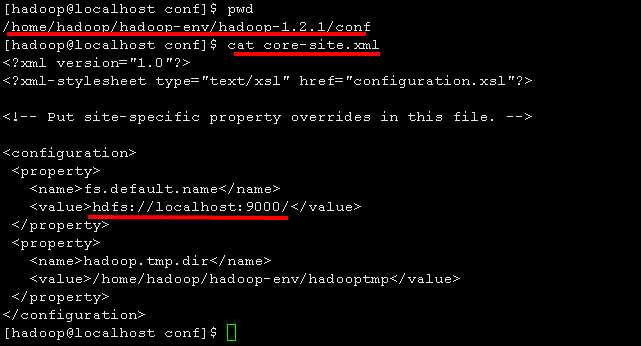

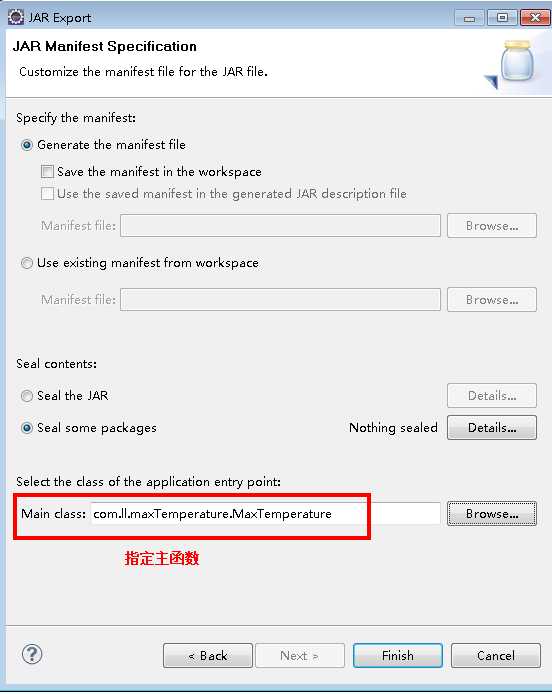

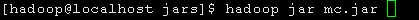

package com.ll.maxTemperature;import org.apache.hadoop.fs.Path;import org.apache.hadoop.io.IntWritable;import org.apache.hadoop.io.Text;import org.apache.hadoop.mapreduce.Job;import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;public class MaxTemperature {public static void main(String[] args) throws Exception {if (args.length != 2) {args = new String[] {"hdfs://localhost:9000/user/hadoop/input/sample.txt","hdfs://localhost:9000/user/hadoop/out2" };}Job job = new Job(); // 指定作业执行规范job.setJarByClass(MaxTemperature.class);job.setJobName("Max temperature");FileInputFormat.addInputPath(job, new Path(args[0]));FileOutputFormat.setOutputPath(job, new Path(args[1])); // Reduce函数输出文件的写入路径job.setMapperClass(MaxTemperatureMapper.class);job.setCombinerClass(MaxTemperatureReducer.class);job.setReducerClass(MaxTemperatureReducer.class);job.setOutputKeyClass(Text.class);job.setOutputValueClass(IntWritable.class);System.exit(job.waitForCompletion(true) ? 0 : 1);}}// ^^ MaxTemperature

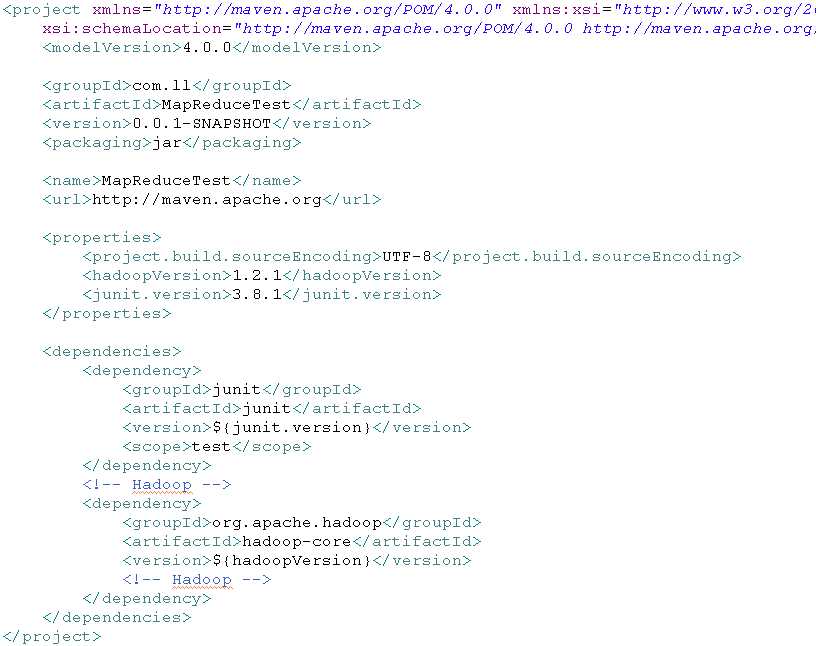

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"><modelVersion>4.0.0</modelVersion><groupId>com.ll</groupId><artifactId>MapReduceTest</artifactId><version>0.0.1-SNAPSHOT</version><packaging>jar</packaging><name>MapReduceTest</name><url>http://maven.apache.org</url><properties><project.build.sourceEncoding>UTF-8</project.build.sourceEncoding><hadoopVersion>1.2.1</hadoopVersion><junit.version>3.8.1</junit.version></properties><dependencies><dependency><groupId>junit</groupId><artifactId>junit</artifactId><version>${junit.version}</version><scope>test</scope></dependency><!-- Hadoop --><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-core</artifactId><version>${hadoopVersion}</version><!-- Hadoop --></dependency></dependencies></project>

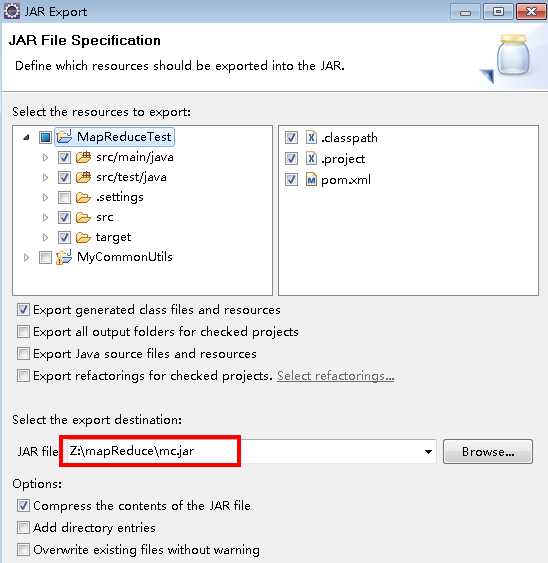

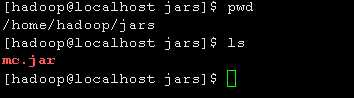

hadoop jar mc.jar

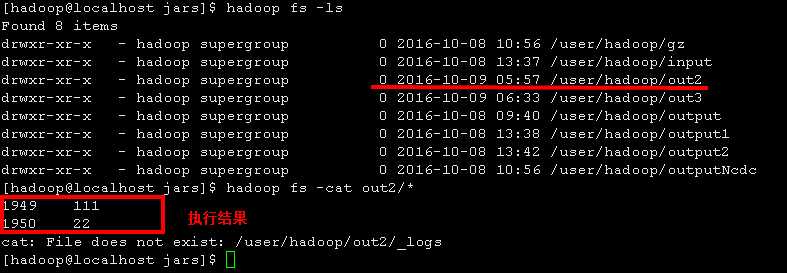

hadoop jar /home/hadoop/jars/mc.jar hdfs://localhost:9000/user/hadoop/input/sample.txt hdfs://localhost:9000/user/hadoop/out5

【Hadoop测试程序】编写MapReduce测试Hadoop环境

标签:

原文地址:http://www.cnblogs.com/ssslinppp/p/5941304.html