Tags: ima, extraction, div, down, neuron, and, oat, approach, pre

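    import numpy as np

    # L, X_train, Y_train, Xte_cols and Yte are assumed to be defined elsewhere.
    bestloss = float('inf')  # start at infinity so the first try always wins
    for num in range(1000):
        W = np.random.randn(10, 3073) * 0.0001  # draw a random weight matrix
        loss = L(X_train, Y_train, W)           # loss over the whole training set
        if loss < bestloss:                     # remember the best weights so far
            bestloss = loss
            bestW = W

    # Evaluate the best weights on the test set (one example per column).
    scores = bestW.dot(Xte_cols)               # 10 x N matrix of class scores
    Yte_predict = np.argmax(scores, axis=0)    # highest-scoring class per column
    np.mean(Yte_predict == Yte)                # classification accuracy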
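The snippet above leaves `L` and the data undefined. The sketch below makes the random search runnable end to end under stated assumptions: `L` is taken to be an average multiclass SVM (hinge) loss, which the notes do not specify, and the data is a small synthetic set rather than real images. The sizes `D`, `C`, `N` and the hidden matrix `true_W` are hypothetical.

```python
import numpy as np

def L(X, y, W):
    """Average multiclass hinge loss (an assumed choice); X is (D, N), one example per column."""
    n = X.shape[1]
    scores = W.dot(X)                                # (C, N) class scores
    correct = scores[y, np.arange(n)]                # (N,) score of the true class
    margins = np.maximum(0, scores - correct + 1.0)  # hinge with margin 1
    margins[y, np.arange(n)] = 0                     # the true class contributes nothing
    return margins.sum() / n

rng = np.random.default_rng(0)
D, C, N = 20, 3, 300                                 # hypothetical sizes, much smaller than 3073 x 10
true_W = rng.standard_normal((C, D))                 # hidden linear model that generates the labels
X_train = rng.standard_normal((D, N))
Y_train = np.argmax(true_W.dot(X_train), axis=0)
Xte_cols = rng.standard_normal((D, 100))
Yte = np.argmax(true_W.dot(Xte_cols), axis=0)

bestloss, bestW = float('inf'), None
for num in range(1000):
    W = rng.standard_normal((C, D)) * 0.0001         # a fresh random guess every iteration
    loss = L(X_train, Y_train, W)
    if loss < bestloss:
        bestloss, bestW = loss, W

accuracy = np.mean(np.argmax(bestW.dot(Xte_cols), axis=0) == Yte)
print(bestloss, accuracy)
```

Because every guess is independent of the previous ones, the loss improves only by luck, which is what motivates the iterative approach.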

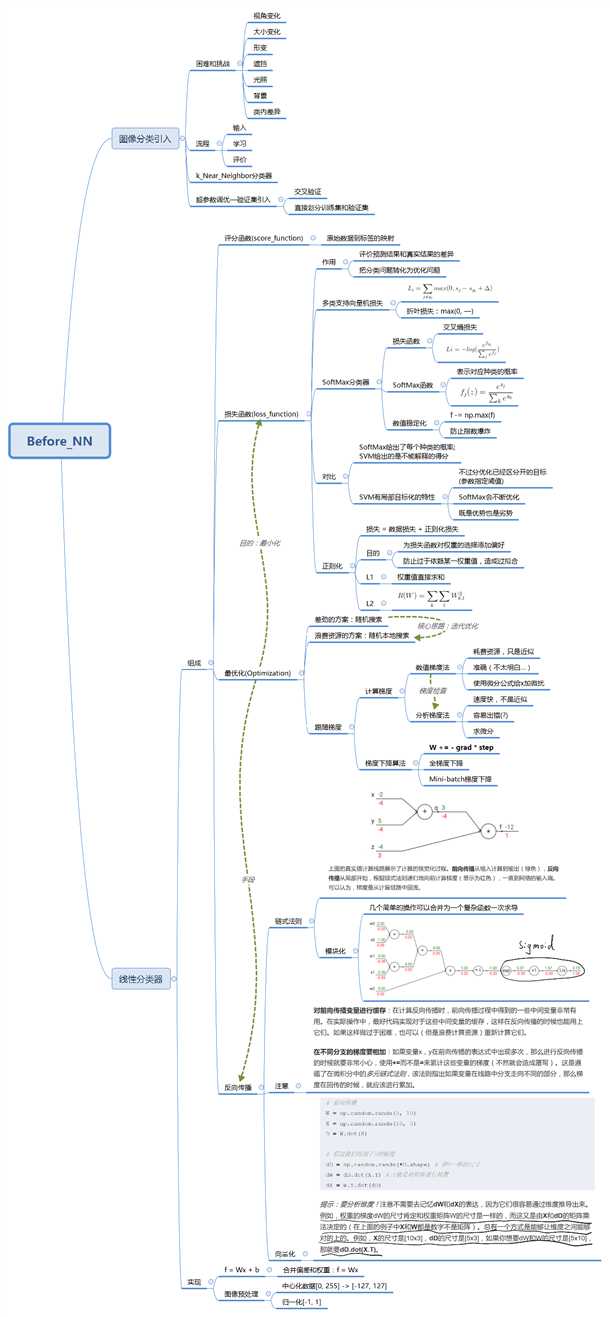

Core idea: iterative refinement

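    import numpy as np

    # L, Xtr_cols and Ytr are assumed to be defined elsewhere.
    W = np.random.randn(10, 3073) * 0.001  # random starting point
    bestloss = float('inf')
    for i in range(1000):
        step_size = 0.0001
        # Perturb the current W slightly instead of drawing a brand-new matrix.
        Wtry = W + np.random.randn(10, 3073) * step_size
        loss = L(Xtr_cols, Ytr, Wtry)
        if loss < bestloss:  # keep the perturbation only if it lowers the loss
            W = Wtry
            bestloss = loss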
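To see the refinement loop in action without a dataset, here is a minimal sketch that wraps it in a function taking any loss callable. The function name `random_local_search`, the quadratic `toy_loss`, and the `target` matrix are illustrative assumptions, not part of the original notes. One small deviation: `bestloss` starts at the loss of the initial `W` rather than at infinity, so the recorded history begins at a meaningful value.

```python
import numpy as np

def random_local_search(loss_fn, W, num_iters=1000, step_size=0.0001, seed=0):
    """Repeatedly try W + small random step and keep it only when the loss drops."""
    rng = np.random.default_rng(seed)
    bestloss = loss_fn(W)
    history = [bestloss]
    for _ in range(num_iters):
        Wtry = W + rng.standard_normal(W.shape) * step_size
        loss = loss_fn(Wtry)
        if loss < bestloss:
            W, bestloss = Wtry, loss
        history.append(bestloss)   # best loss seen so far, never increases
    return W, history

# Toy problem: squared distance to a fixed target matrix.
target = np.ones((10, 5))

def toy_loss(W):
    return float(np.sum((W - target) ** 2))

W0 = np.zeros((10, 5))
W, history = random_local_search(toy_loss, W0, num_iters=2000, step_size=0.01)
print(history[0], history[-1])
```

Since a step is accepted only when it lowers the loss, the recorded history can never go up, unlike independent random guessing where most draws are simply discarded.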

a 0,0,0,1

b 0,0,1,0

c 0,1,0,0

d 1,0,0,0

Like this: each of the four labels a, b, c, d gets its own one-hot vector.
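The four rows listed for a, b, c, d can be produced with NumPy by indexing an identity matrix. This is only a sketch: the `position` mapping is copied from those rows (label a puts its 1 in the last slot), and the helper name `one_hot` is hypothetical.

```python
import numpy as np

# Index of the 1 for each label, copied from the rows listed for a, b, c, d.
position = {'a': 3, 'b': 2, 'c': 1, 'd': 0}

def one_hot(label_seq):
    """Return one row per label, with a single 1 at that label's position."""
    idx = np.array([position[label] for label in label_seq])
    return np.eye(len(position), dtype=int)[idx]

codes = one_hot(['a', 'b', 'c', 'd'])
print(codes)

# argmax along each row recovers the position of the 1.
recovered = codes.argmax(axis=1)
```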

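In theory the code below prints 1.0, but it actually prints about 0.95. In other words, floating-point arithmetic loses accuracy when the values involved are large: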

    a = 10**9
    for i in range(10**6):
        a = a + 1e-6
    print(a - 10**9)
    # 0.95367431640625
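The 0.95 is not random noise. Near 10**9 the gap between neighbouring double-precision values is 2**-23 (about 1.19e-7), so each `+ 1e-6` is rounded to exactly 8 of those gaps. The sketch below checks this with `np.spacing` and, for contrast, accumulates the same increments starting from zero; the variable names are illustrative.

```python
import numpy as np

big = 1e9
ulp = np.spacing(big)        # gap between 1e9 and the next representable double
step = (big + 1e-6) - big    # what a single "+ 1e-6" really adds at this magnitude
print(ulp, step, step / ulp)

# A million such rounded steps add up to the value printed by the original loop.
print(step * 10**6)

# The same accumulation near zero keeps far more precision.
small = 0.0
for _ in range(10**6):
    small += 1e-6
print(small)
```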

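This is why the raw input data is preprocessed first. Ideally every feature ends up with zero mean and the same variance:

Taking the red channel as an example: (R - 128) / 128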
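A minimal sketch of that scaling on a hypothetical batch of random 8-bit RGB images (the array `images` and its shape are made up for illustration). It also shows a data-driven variant that uses the per-channel mean and standard deviation instead of the fixed constant 128; that variant is an assumption about how one might reach "zero mean, same variance", not something the notes prescribe.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical batch: 8 images, 32 x 32 pixels, 3 channels, values 0..255.
images = rng.integers(0, 256, size=(8, 32, 32, 3), dtype=np.uint8)

# Convert to float first: subtracting 128 in unsigned 8-bit arithmetic would wrap around.
red = images[..., 0].astype(np.float64)
red_scaled = (red - 128) / 128          # maps [0, 255] into [-1, 1)

# Data-driven variant: subtract each channel's mean and divide by its standard deviation.
pixels = images.astype(np.float64)
mean = pixels.mean(axis=(0, 1, 2))      # one mean per channel
std = pixels.std(axis=(0, 1, 2))        # one standard deviation per channel
standardized = (pixels - mean) / std

print(red_scaled.min(), red_scaled.max())
print(standardized.mean(axis=(0, 1, 2)), standardized.std(axis=(0, 1, 2)))
```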


Original article: http://www.cnblogs.com/hellcat/p/6986201.html