Tags: streams, break, oba, pts, exit, download, principles, tech sharing, avpacket

本文主要讲述如何利用Ffmpeg向视频文件 添加水印这一功能,文中最后会给出源代码下载地址以及视频

下载地址,视频除了讲述添加水印的基本原理以及代码实现,还提到了要注意的一些地方,因为直接运行

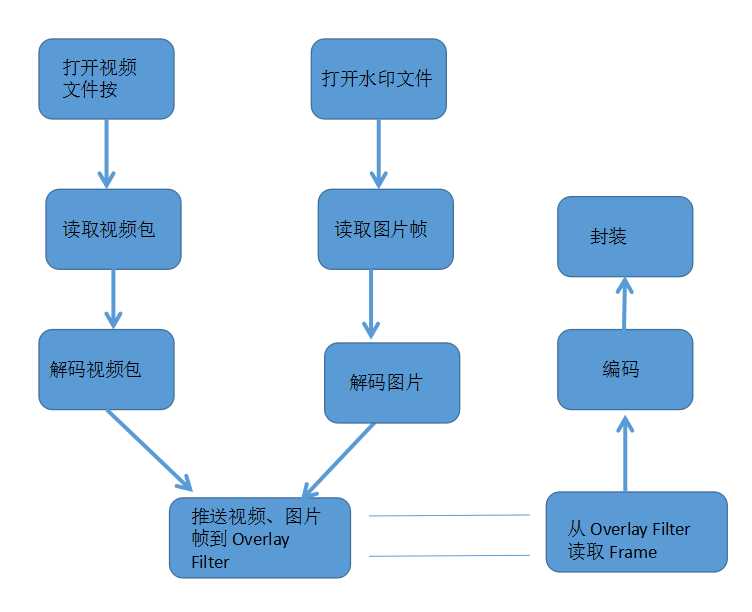

demo源码可能会有问题。利用Ffmpeg向视频文件添加水印的基本原理是将视频文件的视频包解码成一帧帧

“Frame”,通过ffmpeg Filter(overlay)实现待添加水印与“Frame”的叠加,最后将叠加后的视频帧进行编码

并将编码后的数据写到输出文件里。基本的流程如下图所示:

图1 ffmpeg 添加水印基本流程
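To make the word "overlay" concrete, here is a minimal conceptual sketch of what placing a watermark on a frame means at the pixel level. It is not FFmpeg's implementation: the real overlay filter also handles alpha blending and multi-plane pixel formats. The `GrayImage` type and the `OverlayOpaque` function are hypothetical names introduced only for this illustration, and the sketch assumes a single 8-bit plane and a fully opaque watermark.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-plane (grayscale) image, used only for this illustration.
struct GrayImage {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;  // row-major, width * height bytes
};

// Copy `mark` onto `frame` with the watermark's top-left corner at (x, y).
// Parts of the watermark that fall outside the frame are clipped.
void OverlayOpaque(GrayImage &frame, const GrayImage &mark, int x, int y)
{
    assert(frame.pixels.size() == static_cast<std::size_t>(frame.width) * frame.height);
    assert(mark.pixels.size() == static_cast<std::size_t>(mark.width) * mark.height);

    // Intersection of the watermark rectangle with the frame rectangle.
    const int x0 = std::max(x, 0);
    const int y0 = std::max(y, 0);
    const int x1 = std::min(x + mark.width, frame.width);
    const int y1 = std::min(y + mark.height, frame.height);

    for (int fy = y0; fy < y1; ++fy) {
        for (int fx = x0; fx < x1; ++fx) {
            frame.pixels[fy * frame.width + fx] =
                mark.pixels[(fy - y) * mark.width + (fx - x)];
        }
    }
}
```

In the demo this per-pixel work is done inside the filter graph, so the application code only has to feed frames in and pull the combined frame out.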

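With the basic principle and flow in mind, here is the code.

1. Open the input source:

Unlike the usual way of opening an input source, this function takes one extra parameter, inputIndex. Depending on its value, the same function opens either the video file or the watermark image.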

int OpenInput(const char *fileName, int inputIndex)
{
    // inputIndex selects the slot: in the demo, 0 is the video and 1 is the watermark image.
    context[inputIndex] = avformat_alloc_context();
    if (!context[inputIndex])
    {
        return AVERROR(ENOMEM);
    }
    context[inputIndex]->interrupt_callback.callback = interrupt_cb;
    AVDictionary *format_opts = nullptr;
    int ret = avformat_open_input(&context[inputIndex], fileName, nullptr, &format_opts);
    if (ret < 0)
    {
        return ret;
    }
    ret = avformat_find_stream_info(context[inputIndex], nullptr);
    if (ret < 0)
    {
        return ret;
    }
    // Print the detected streams only after probing succeeded.
    av_dump_format(context[inputIndex], 0, fileName, 0);
    std::cout << "open input stream successfully" << std::endl;
    return ret;
}

2. Read video packets (image frames)

std::shared_ptr<AVPacket> ReadPacketFromSource(int inputIndex)
{
    // av_packet_alloc() returns an initialized packet; av_packet_free()
    // unreferences its data and frees the struct, so one call in the deleter is enough.
    std::shared_ptr<AVPacket> packet(av_packet_alloc(), [](AVPacket *p) { av_packet_free(&p); });
    if (!packet)
    {
        return nullptr;
    }
    int ret = av_read_frame(context[inputIndex], packet.get());
    if (ret < 0)
    {
        return nullptr;  // end of file or read error
    }
    return packet;
}
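ReadPacketFromSource hands the packet to a std::shared_ptr with a custom deleter, so the packet is released when the last owner goes away. The sketch below shows that ownership pattern in isolation. To keep it compilable without FFmpeg it uses stand-ins: `FakePacket`, `FakePacketAlloc`, `FakePacketFree`, `MakePacket` and `g_livePackets` are hypothetical names, not FFmpeg API. They only mirror the shape of an allocate function paired with a free function that takes a pointer to the pointer.

```cpp
#include <memory>

// Stand-in for AVPacket, used only so this sketch compiles without FFmpeg.
struct FakePacket {
    int size = 0;
};

int g_livePackets = 0;  // packets allocated but not yet freed

// Same shape as an "alloc" call: returns a new, zero-initialized packet.
FakePacket *FakePacketAlloc()
{
    ++g_livePackets;
    return new FakePacket();
}

// Same shape as a "free" call: releases the packet and nulls the caller's pointer.
void FakePacketFree(FakePacket **p)
{
    if (p && *p) {
        delete *p;
        *p = nullptr;
        --g_livePackets;
    }
}

// Pair the allocation with its matching free through a custom deleter,
// so the free runs exactly once, when the last shared_ptr copy is destroyed.
std::shared_ptr<FakePacket> MakePacket()
{
    return std::shared_ptr<FakePacket>(FakePacketAlloc(),
                                       [](FakePacket *p) { FakePacketFree(&p); });
}
```

The point of the design is that every exit path of the read loop, including early returns, releases the packet without an explicit cleanup call.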

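3. Create the output context

int OpenOutput(char *fileName, int inputIndex)
{
    int ret = 0;
    ret = avformat_alloc_output_context2(&outputContext, nullptr, "mpegts", fileName);
    if (ret < 0)
    {
        goto Error;
    }
    ret = avio_open2(&outputContext->pb, fileName, AVIO_FLAG_READ_WRITE, nullptr, nullptr);
    if (ret < 0)
    {
        goto Error;
    }
    // One output stream per input stream; the demo assumes a video-only input.
    for (unsigned int i = 0; i < context[inputIndex]->nb_streams; i++)
    {
        AVStream *stream = avformat_new_stream(outputContext, outPutEncContext->codec);
        if (!stream)
        {
            ret = AVERROR(ENOMEM);
            goto Error;
        }
        // Copy the encoder settings into the stream instead of sharing the
        // encoder context pointer, which would later be freed twice.
        // avcodec_parameters_from_context() needs FFmpeg 3.1 or later.
        ret = avcodec_parameters_from_context(stream->codecpar, outPutEncContext);
        if (ret < 0)
        {
            goto Error;
        }
        stream->time_base = outPutEncContext->time_base;
    }
    av_dump_format(outputContext, 0, fileName, 1);
    ret = avformat_write_header(outputContext, nullptr);
    if (ret < 0)
    {
        goto Error;
    }
    cout << "open output stream successfully" << endl;
    return ret;
Error:
    if (outputContext)
    {
        // This is an output context, so close its I/O and free it;
        // avformat_close_input() is only for input contexts.
        avio_closep(&outputContext->pb);
        avformat_free_context(outputContext);
        outputContext = nullptr;
    }
    return ret;
}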

4. Initialize the encoder and decoder

int InitEncoderCodec(int iWidth, int iHeight, int inputIndex)
{
    const AVCodec *pH264Codec = avcodec_find_encoder(AV_CODEC_ID_H264);
    if (NULL == pH264Codec)
    {
        printf("%s", "avcodec_find_encoder failed");
        return -1;
    }
    outPutEncContext = avcodec_alloc_context3(pH264Codec);
    if (NULL == outPutEncContext)
    {
        printf("%s", "avcodec_alloc_context3 failed");
        return -1;
    }
    // Low-latency settings: a key frame every 30 frames and no B-frames.
    outPutEncContext->gop_size = 30;
    outPutEncContext->has_b_frames = 0;
    outPutEncContext->max_b_frames = 0;
    outPutEncContext->codec_id = pH264Codec->id;
    // Take the time base from the input's video stream (stream 0).
    outPutEncContext->time_base.num = context[inputIndex]->streams[0]->codec->time_base.num;
    outPutEncContext->time_base.den = context[inputIndex]->streams[0]->codec->time_base.den;
    // Use the first pixel format the encoder supports.
    outPutEncContext->pix_fmt = *pH264Codec->pix_fmts;
    outPutEncContext->width = iWidth;
    outPutEncContext->height = iHeight;
    outPutEncContext->me_subpel_quality = 0;
    outPutEncContext->refs = 1;
    outPutEncContext->scenechange_threshold = 0;
    outPutEncContext->trellis = 0;
    AVDictionary *options = nullptr;
    outPutEncContext->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
    int ret = avcodec_open2(outPutEncContext, pH264Codec, &options);
    if (ret < 0)
    {
        printf("%s", "open codec failed");
        return ret;
    }
    return 1;
}

int InitDecodeCodec(AVCodecID codecId, int inputIndex)
{
    auto codec = avcodec_find_decoder(codecId);
    if (!codec)
    {
        return -1;
    }
    // Reuse the codec context that the demuxer attached to stream 0.
    decoderContext[inputIndex] = context[inputIndex]->streams[0]->codec;
    // Check the element just assigned; the array itself is never null.
    if (!decoderContext[inputIndex]) {
        fprintf(stderr, "Could not allocate video codec context\n");
        exit(1);
    }
    int ret = avcodec_open2(decoderContext[inputIndex], codec, NULL);
    return ret;
}

5. Initialize the filters

int InitInputFilter(AVFilterInOut *input, const char *filterName, int inputIndex)
{
    char args[512];
    memset(args, 0, sizeof(args));
    AVFilterContext *padFilterContext = input->filter_ctx;
    auto filter = avfilter_get_by_name("buffer");
    auto codecContext = context[inputIndex]->streams[0]->codec;
    // Describe the frames this buffer source will deliver:
    // size, pixel format, time base and pixel aspect ratio.
    sprintf_s(args, sizeof(args),
        "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
        codecContext->width, codecContext->height, codecContext->pix_fmt,
        codecContext->time_base.num, codecContext->time_base.den / codecContext->ticks_per_frame,
        codecContext->sample_aspect_ratio.num, codecContext->sample_aspect_ratio.den);
    int ret = avfilter_graph_create_filter(&inputFilterContext[inputIndex], filter, filterName, args,
        NULL, filter_graph);
    if (ret < 0) return ret;
    // Connect the new buffer source to the open input pad of the parsed graph.
    ret = avfilter_link(inputFilterContext[inputIndex], 0, padFilterContext, input->pad_idx);
    return ret;
}
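The argument string passed to the "buffer" source is easy to get wrong, so it can help to build and inspect it separately. The sketch below formats the same key=value list as InitInputFilter, but from a plain struct so that it compiles without FFmpeg. `VideoParams` and `MakeBufferArgs` are hypothetical names for this illustration, and it uses the portable `std::snprintf` rather than the MSVC-specific `sprintf_s`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>

// Hypothetical stand-in for the fields read from the codec context.
struct VideoParams {
    int width;
    int height;
    int pixFmt;       // numeric pixel format id
    int timeBaseNum;
    int timeBaseDen;
    int sarNum;       // sample (pixel) aspect ratio numerator
    int sarDen;       // sample (pixel) aspect ratio denominator
};

// Build the option string for a video buffer source.
std::string MakeBufferArgs(const VideoParams &p)
{
    char args[512];
    const int n = std::snprintf(args, sizeof(args),
        "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
        p.width, p.height, p.pixFmt,
        p.timeBaseNum, p.timeBaseDen,
        p.sarNum, p.sarDen);
    // snprintf reports the length it needed; make sure nothing was truncated.
    assert(n > 0 && static_cast<std::size_t>(n) < sizeof(args));
    return std::string(args);
}
```

For a 1280x720 input with pixel format id 0, a 1/25 time base and square pixels, this yields `video_size=1280x720:pix_fmt=0:time_base=1/25:pixel_aspect=1/1`. Logging the string before creating the filter makes a zero denominator or an unexpected pixel format visible early.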

int InitOutputFilter(AVFilterInOut *output, const char *filterName)
{
    AVFilterContext *padFilterContext = output->filter_ctx;
    auto filter = avfilter_get_by_name("buffersink");
    int ret = avfilter_graph_create_filter(&outputFilterContext, filter, filterName, NULL,
        NULL, filter_graph);
    if (ret < 0) return ret;
    // Connect the open output pad of the parsed graph to the buffer sink.
    ret = avfilter_link(padFilterContext, output->pad_idx, outputFilterContext, 0);
    return ret;
}

6. Demo

int _tmain(int argc, _TCHAR* argv[])
{
    // Input 0 is the video to be watermarked, input 1 is the watermark image.
    string fileInput[2];
    fileInput[0] = "F:\\test.ts";
    fileInput[1] = "F:\\test1.jpg";
    string fileOutput = "F:\\codeoutput.ts";
    std::thread decodeTask;
    Init();
    int ret = 0;
    for (int i = 0; i < 2; i++)
    {
        if (OpenInput((char *)fileInput[i].c_str(), i) < 0)
        {
            cout << "Open file Input " << i << " failed!" << endl;
            this_thread::sleep_for(chrono::seconds(10));
            return 0;
        }
        ret = InitDecodeCodec(context[i]->streams[0]->codec->codec_id, i);
        if (ret < 0)
        {
            cout << "InitDecodeCodec failed!" << endl;
            this_thread::sleep_for(chrono::seconds(10));
            return 0;
        }
    }
    // The output keeps the resolution of the main video.
    ret = InitEncoderCodec(decoderContext[0]->width, decoderContext[0]->height, 0);
    if (ret < 0)
    {
        cout << "open encoder failed ret is " << ret << endl;
        cout << "InitEncoderCodec failed!" << endl;
        this_thread::sleep_for(chrono::seconds(10));
        return 0;
    }
    if (OpenOutput((char *)fileOutput.c_str(), 0) < 0)
    {
        cout << "Open file Output failed!" << endl;
        this_thread::sleep_for(chrono::seconds(10));
        return 0;
    }

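    AVFrame *pSrcFrame[2];
    pSrcFrame[0] = av_frame_alloc();
    pSrcFrame[1] = av_frame_alloc();
    AVFrame *inputFrame[2];
    inputFrame[0] = av_frame_alloc();
    inputFrame[1] = av_frame_alloc();
    auto filterFrame = av_frame_alloc();
    int got_output = 0;
    int64_t timeRecord = 0;
    int64_t firstPacketTime = 0;
    int64_t outLastTime = av_gettime();
    int64_t inLastTime = av_gettime();
    int64_t videoCount = 0;
    filter_graph = avfilter_graph_alloc();
    if (!filter_graph)
    {
        cout << "graph alloc failed" << endl;
        goto End;
    }
    // Parse the filter description; its unconnected pads come back in inputs/outputs.
    ret = avfilter_graph_parse2(filter_graph, filter_descr, &inputs, &outputs);
    if (ret < 0 || !inputs || !inputs->next || !outputs)
    {
        cout << "parse filter graph failed" << endl;
        goto End;
    }
    // Attach a buffer source to each open input and a buffer sink to the output.
    ret = InitInputFilter(inputs, "MainFrame", 0);
    if (ret >= 0) ret = InitInputFilter(inputs->next, "OverlayFrame", 1);
    if (ret >= 0) ret = InitOutputFilter(outputs, "output");
    FreeInout();
    if (ret < 0)
    {
        goto End;
    }
    ret = avfilter_graph_config(filter_graph, NULL);
    if (ret < 0)
    {
        goto End;
    }
    // Decode the watermark image once; the same frame is reused for every video frame.
    decodeTask = std::thread([&] {
        while (true)
        {
            auto packet = ReadPacketFromSource(1);
            if (!packet) break;  // no more data in the image file
            if (DecodeVideo(packet.get(), pSrcFrame[1], 1)) break;  // got the frame
        }
    });
    decodeTask.join();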

    while (true)
    {
        outLastTime = av_gettime();
        auto packet = ReadPacketFromSource(0);
        if (!packet) break;  // end of the input video
        if (!DecodeVideo(packet.get(), pSrcFrame[0], 0)) continue;
        // Feed the decoded video frame to the main input of the graph.
        av_frame_ref(inputFrame[0], pSrcFrame[0]);
        if (av_buffersrc_add_frame_flags(inputFilterContext[0], inputFrame[0], AV_BUFFERSRC_FLAG_PUSH) < 0)
        {
            av_frame_unref(inputFrame[0]);
            continue;
        }
        // Give the watermark the same timestamp so the overlay filter pairs the two frames.
        pSrcFrame[1]->pts = pSrcFrame[0]->pts;
        // KEEP_REF leaves pSrcFrame[1] intact so it can be fed again for the next frame.
        if (av_buffersrc_add_frame_flags(inputFilterContext[1], pSrcFrame[1],
                AV_BUFFERSRC_FLAG_PUSH | AV_BUFFERSRC_FLAG_KEEP_REF) < 0)
        {
            continue;
        }
        ret = av_buffersink_get_frame_flags(outputFilterContext, filterFrame, AV_BUFFERSINK_FLAG_NO_REQUEST);
        if (ret >= 0)
        {
            // Encode the overlaid frame and write the packet to the output file.
            std::shared_ptr<AVPacket> pTmpPkt(av_packet_alloc(), [](AVPacket *p) { av_packet_free(&p); });
            if (pTmpPkt)
            {
                ret = avcodec_encode_video2(outPutEncContext, pTmpPkt.get(), filterFrame, &got_output);
                if (ret >= 0 && got_output)
                {
                    ret = av_write_frame(outputContext, pTmpPkt.get());
                }
            }
        }
        av_frame_unref(filterFrame);
    }
    // Note: the encoder is not flushed here, so frames still buffered in it are not written.
    CloseInput(0);
    CloseOutput();
    std::cout << "Transcode file end!" << endl;
End:
    // Keep the console window open briefly, as the error paths above do.
    this_thread::sleep_for(chrono::seconds(10));
    return 0;
}
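The demo passes a variable named filter_descr to avfilter_graph_parse2, but the listing does not show how it is defined. As a minimal sketch, and purely as an assumption about what such a description could look like, the helper below builds an overlay description that places the second input at a given offset on the first. `MakeOverlayDescr` is a hypothetical name; `x` and `y` are options of FFmpeg's overlay filter. The value actually used by the original demo is not known from the text.

```cpp
#include <string>

// Build a filter description for FFmpeg's overlay filter.
// x and y are the top-left position of the watermark on the main video, in pixels.
std::string MakeOverlayDescr(int x, int y)
{
    return "overlay=x=" + std::to_string(x) + ":y=" + std::to_string(y);
}
```

For example, `MakeOverlayDescr(10, 20)` gives `overlay=x=10:y=20`. A description like this contains a single overlay filter with two unconnected inputs and one unconnected output, which is consistent with the way the demo attaches sources to `inputs` and `inputs->next` and a sink to `outputs`.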

If you have any questions, you are welcome to join the QQ group for discussion. Group number: 127903734.

Source code: http://pan.baidu.com/s/1o8Lkozw

Video: http://pan.baidu.com/s/1jH4dYN8

Original article: http://www.cnblogs.com/wanggang123/p/7222215.html