Why requests

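Python's standard library module urllib2 provides most of the HTTP functionality you need, but its API is painful to use: even a simple task takes a pile of code.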

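Requests is built on urllib3, so it inherits all of urllib3's features. Requests supports HTTP keep-alive and connection pooling, cookie-based session persistence, file uploads, automatic detection of the response content encoding, and automatic encoding of internationalized URLs and POST data. It is modern, international, and friendly to use.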
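To make the automatic encoding of internationalized URLs and POST data concrete, here is a minimal sketch that builds a request without sending it. The host example.com and the field names q and name are placeholders invented for illustration; only `requests.Request` and its `prepare()` method come from the library.

```python
import requests

# example.com and the parameter names are placeholders for this sketch.
request = requests.Request(
    'POST',
    'http://example.com/search',
    params={'q': '校花'},   # non-ASCII query-string value
    data={'name': '花'},    # non-ASCII form field
)
prepared = request.prepare()  # builds the request; nothing is sent

print(prepared.url)                      # query string is percent-encoded as UTF-8
print(prepared.body)                     # form body is percent-encoded as well
print(prepared.headers['Content-Type'])  # set automatically for form data
```

Because nothing goes over the network, this is a convenient way to inspect what Requests would send before pointing it at a real site.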

Official documentation: http://docs.python-requests.org/en/master/

Chinese documentation: http://docs.python-requests.org/zh_CN/latest/user/quickstart.html

Installation:

pip install requests

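Or download the source and install from it:

$ git clone git://github.com/kennethreitz/requests.git
$ cd requests
$ python setup.py install

You can also install it from an IDE such as PyCharm: open File > Settings > Project Interpreter, click the "+" on the right, search for requests, and click Install Package.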

Scraping videos from xiaohuar.com:

import requests
import re
import os
import hashlib
import time

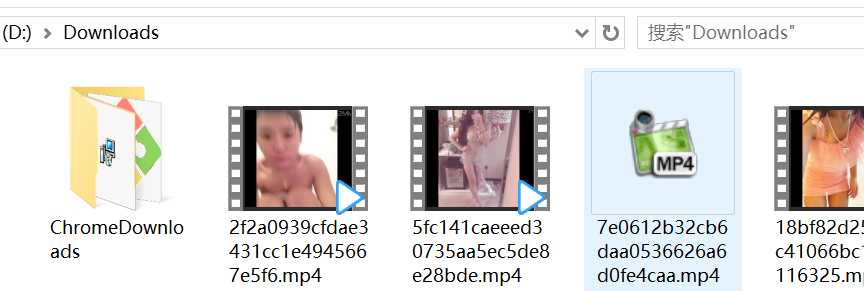

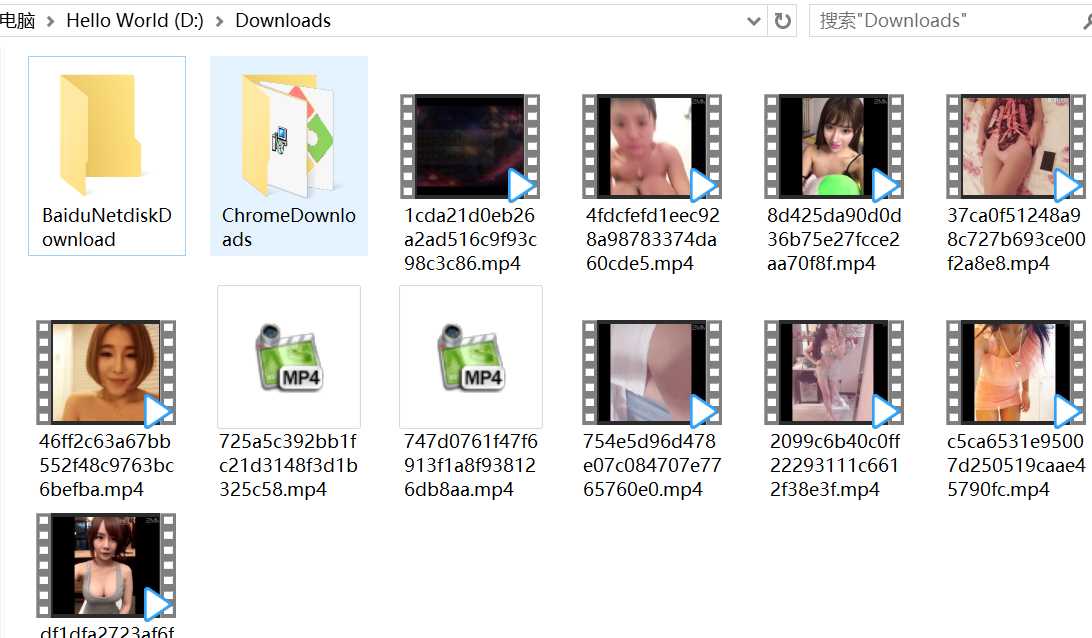

DOWNLOAD_PATH = r'D:\Downloads'


def get_page(url):
    """Return the page text, or None when the request fails."""
    try:
        response = requests.get(url, timeout=10)
        if response.status_code == 200:
            return response.text
    except requests.RequestException as e:
        print('Request failed:', url, e)


def parse_index(index_contents):
    """Yield the detail-page URL of every item on an index page."""
    # `or ''` guards against get_page() having returned None.
    detail_urls = re.findall('class="items".*?href="(.*?)"', index_contents or '', re.S)
    for detail_url in detail_urls:
        if not detail_url.startswith('http'):
            detail_url = 'http://www.xiaohuar.com' + detail_url
        yield detail_url


def parse_detail(detail_contents):
    """Yield the video URL on a detail page if it is an mp4 file."""
    movie_urls = re.findall('id="media".*?src="(.*?)"', detail_contents or '', re.S)
    if movie_urls:
        movie_url = movie_urls[0]
        if movie_url.endswith('mp4'):
            yield movie_url


def download(movie_url):
    """Save the video under a name hashed from the current time and the URL."""
    print(movie_url)
    try:
        response = requests.get(movie_url, timeout=60)
        if response.status_code == 200:
            m = hashlib.md5()
            m.update(str(time.time()).encode('utf-8'))
            m.update(movie_url.encode('utf-8'))
            filepath = os.path.join(DOWNLOAD_PATH, '%s.mp4' % m.hexdigest())
            with open(filepath, 'wb') as f:
                f.write(response.content)
            print('Downloaded', movie_url)
    except (requests.RequestException, OSError) as e:
        print('Download failed:', movie_url, e)


def main():
    raw_url = 'http://www.xiaohuar.com/list-3-{page_num}.html'
    for i in range(5):
        index_url = raw_url.format(page_num=i)
        index_contents = get_page(index_url)
        for detail_url in parse_index(index_contents):
            detail_contents = get_page(detail_url)
            for movie_url in parse_detail(detail_contents):
                download(movie_url)


if __name__ == '__main__':
    main()

Note: create a Downloads folder on the D: drive before running the script.
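The parsing and file-naming steps of the script can be tried without touching the network. The sketch below reuses the same index-page pattern and the same MD5 naming scheme. The HTML fragment, the paths inside it, and the helper names extract_detail_urls and unique_filename are invented for illustration and are not taken from the real site.

```python
import hashlib
import re
import time

# Hypothetical index-page fragment, invented for this sketch.
SAMPLE_INDEX = '''
<div class="items">
  <a href="/v/101.html">first</a>
</div>
<div class="items">
  <a href="http://www.xiaohuar.com/v/102.html">second</a>
</div>
'''


def extract_detail_urls(html, base='http://www.xiaohuar.com'):
    """Return absolute detail URLs using the same pattern as parse_index."""
    # re.S lets '.' match newlines, so the lazy '.*?' can span the gap
    # between class="items" and the first href that follows it.
    urls = re.findall(r'class="items".*?href="(.*?)"', html, re.S)
    return [u if u.startswith('http') else base + u for u in urls]


def unique_filename(movie_url):
    """Build an .mp4 name from an MD5 of the current time plus the URL."""
    m = hashlib.md5()
    m.update(str(time.time()).encode('utf-8'))
    m.update(movie_url.encode('utf-8'))
    return '%s.mp4' % m.hexdigest()


detail_urls = extract_detail_urls(SAMPLE_INDEX)
print(detail_urls)
print(unique_filename('http://example.com/a.mp4'))
```

Mixing the timestamp into the hash means the same video URL gets a different file name on every run, so repeated downloads never overwrite each other; hashing the URL alone would instead give a stable name that could be used to skip files already saved.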

Thread pool version

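import requests
import re
import os
import hashlib
import time
import threading
from concurrent.futures import ThreadPoolExecutor

pool = ThreadPoolExecutor(50)
DOWNLOAD_PATH = r'D:\Downloads'

# Number of unfinished tasks. Callbacks keep submitting new work after main()
# has queued the index pages, so main() must wait until this drops to zero.
pending = 1  # starts at 1 for main() itself
pending_lock = threading.Lock()
all_done = threading.Event()


def task_done(future=None):
    """Mark one task as finished and wake main() when none are left."""
    global pending
    with pending_lock:
        pending -= 1
        if pending == 0:
            all_done.set()


def submit(func, url, callback=None):
    """Submit func(url) to the pool and count it as pending."""
    global pending
    with pending_lock:
        pending += 1
    future = pool.submit(func, url)
    if callback:
        future.add_done_callback(callback)  # runs first and may submit more tasks
    future.add_done_callback(task_done)     # runs last, so the count never hits zero early


def get_page(url):
    """Return the page text, or None when the request fails."""
    try:
        response = requests.get(url, timeout=10)
        if response.status_code == 200:
            return response.text
    except requests.RequestException as e:
        print('Request failed:', url, e)


def parse_index(future):
    """Callback: parse an index page and queue a fetch of every detail page."""
    index_contents = future.result() or ''  # get_page() may have returned None
    detail_urls = re.findall('class="items".*?href="(.*?)"', index_contents, re.S)
    for detail_url in detail_urls:
        if not detail_url.startswith('http'):
            detail_url = 'http://www.xiaohuar.com' + detail_url
        submit(get_page, detail_url, parse_detail)


def parse_detail(future):
    """Callback: parse a detail page and queue the download of its mp4 video."""
    detail_contents = future.result() or ''
    movie_urls = re.findall('id="media".*?src="(.*?)"', detail_contents, re.S)
    if movie_urls:
        movie_url = movie_urls[0]
        if movie_url.endswith('mp4'):
            submit(download, movie_url)


def download(movie_url):
    """Save the video under a name hashed from the current time and the URL."""
    try:
        response = requests.get(movie_url, timeout=60)
        if response.status_code == 200:
            m = hashlib.md5()
            m.update(str(time.time()).encode('utf-8'))
            m.update(movie_url.encode('utf-8'))
            filepath = os.path.join(DOWNLOAD_PATH, '%s.mp4' % m.hexdigest())
            with open(filepath, 'wb') as f:
                f.write(response.content)
            print('Downloaded', movie_url)
    except (requests.RequestException, OSError) as e:
        print('Download failed:', movie_url, e)


def main():
    raw_url = 'http://www.xiaohuar.com/list-3-{page_num}.html'
    for i in range(5):
        submit(get_page, raw_url.format(page_num=i), parse_index)
    task_done()      # release the slot held for main()
    all_done.wait()  # block until every queued task and callback has finished
    pool.shutdown()


if __name__ == '__main__':
    main()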
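The key detail in the thread pool version is that a function registered with add_done_callback receives the finished Future, not the task's return value, which is why the callbacks call .result() first. Here is a minimal offline sketch of that pattern; fake_fetch and on_fetched are hypothetical stand-ins invented for illustration, and no HTTP request is made.

```python
from concurrent.futures import ThreadPoolExecutor

results = []


def fake_fetch(url):
    """Stand-in for get_page: returns fake page text instead of doing HTTP."""
    return 'page for ' + url


def on_fetched(future):
    """Done-callback: receives the finished Future, not the return value."""
    results.append(future.result().upper())


# Leaving the with-block calls shutdown(wait=True), so every task and its
# callback has finished by the time the next statement runs.
with ThreadPoolExecutor(max_workers=4) as pool:
    for n in range(3):
        pool.submit(fake_fetch, 'url-%d' % n).add_done_callback(on_fetched)

print(sorted(results))
```

The callbacks in this sketch do not submit further tasks, so leaving the with-block is enough to wait for everything. When callbacks themselves queue new work, as in the crawler, the main thread needs some other way to know that the whole chain has finished before the pool is shut down.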

Reference blogs:

http://www.zhidaow.com/post/python-requests-install-and-brief-introduction

http://blog.csdn.net/shanzhizi/article/details/50903748