
[root@linux-node2 ~]# yum install -y salt-master salt-minion

[root@linux-node2 ~]# vim /etc/salt/master

file_roots:
  base:
    - /srv/salt/
  prod:
    - /srv/salt/prod

pillar_roots:
  base:
    - /srv/pillar
  prod:
    - /srv/salt/prod/pillar

[root@linux-node2 ~]# cd /srv/

[root@linux-node2 srv]# mkdir -p /srv/salt/prod/pillar/openstack /srv/salt/prod/openstack

[root@linux-node2 srv]# tree

.
└── salt
    └── prod
        ├── openstack
        └── pillar
            └── openstack

5 directories, 0 files

[root@linux-node2 srv]# /etc/init.d/salt-master start

[root@linux-node2 srv]# chkconfig salt-master on

[root@linux-node2 srv]# vim /etc/salt/minion

master: 10.0.0.102

[root@linux-node2 srv]# /etc/init.d/salt-minion start

[root@linux-node2 ~]# chkconfig salt-minion on

[root@linux-node2 srv]# salt-key

Accepted Keys:

Denied Keys:

Unaccepted Keys:

linux-node2

Rejected Keys:

[root@linux-node2 srv]# salt-key -a linux-node2

The following keys are going to be accepted:

Unaccepted Keys:

linux-node2

Proceed? [n/Y] y

Key for minion linux-node2 accepted.

[root@linux-node2 srv]# salt-key

Accepted Keys:

linux-node2

Denied Keys:

Unaccepted Keys:

Rejected Keys:

[root@linux-node2 srv]# salt '*' test.ping

linux-node2:

True

[root@linux-node2 srv]# cd /srv/salt/prod/pillar/

[root@linux-node2 pillar]# vim top.sls

prod:
  '*':
    - openstack.keystone
    - openstack.glance
    - openstack.neutron
    - openstack.nova
    - openstack.cinder
    - openstack.horizon
    - openstack.rabbit

[root@linux-node2 pillar]# cd openstack/

[root@linux-node2 openstack]# vim rabbit.sls

rabbit:
  RABBITMQ_HOST: 10.0.0.102
  RABBITMQ_PORT: 5672
  RABBITMQ_USER: guest
  RABBITMQ_PASS: guest

[root@linux-node2 openstack]# vim keystone.sls

keystone:
  MYSQL_SERVER: 10.0.0.102
  KEYSTONE_IP: 10.0.0.102
  KEYSTONE_ADMIN_TOKEN: ADMIN
  KEYSTONE_ADMIN_TENANT: admin
  KEYSTONE_ADMIN_USER: admin
  KEYSTONE_ADMIN_PASSWD: admin
  KEYSTONE_ROLE_NAME: admin
  KEYSTONE_AUTH_URL: http://10.0.0.102:35357/v2.0
  KEYSTONE_DB_NAME: keystone
  KEYSTONE_DB_USER: keystone
  KEYSTONE_DB_PASS: keystone
  DB_ALLOW: keystone.*
  HOST_ALLOW: 10.0.0.0/255.255.255.0

[root@linux-node2 openstack]# vim glance.sls

glance:
  MYSQL_SERVER: 10.0.0.102
  GLANCE_IP: 10.0.0.102
  GLANCE_DB_USER: glance
  GLANCE_DB_NAME: glance
  GLANCE_DB_PASS: glance
  DB_ALLOW: glance.*
  HOST_ALLOW: 10.0.0.0/255.255.255.0
  RABBITMQ_HOST: 10.0.0.102
  RABBITMQ_PORT: 5672
  RABBITMQ_USER: guest
  RABBITMQ_PASS: guest
  AUTH_KEYSTONE_HOST: 10.0.0.102
  AUTH_KEYSTONE_PORT: 35357
  AUTH_KEYSTONE_PROTOCOL: http
  AUTH_GLANCE_ADMIN_TENANT: service
  AUTH_GLANCE_ADMIN_USER: glance
  AUTH_GLANCE_ADMIN_PASS: glance

[root@linux-node2 openstack]# vim nova.sls

nova:
  MYSQL_SERVER: 10.0.0.102
  NOVA_IP: 10.0.0.102
  NOVA_DB_NAME: nova
  NOVA_DB_USER: nova
  NOVA_DB_PASS: nova
  DB_ALLOW: nova.*
  HOST_ALLOW: 10.0.0.0/255.255.255.0
  RABBITMQ_HOST: 10.0.0.102
  RABBITMQ_PORT: 5672
  RABBITMQ_USER: guest
  RABBITMQ_PASS: guest
  AUTH_KEYSTONE_HOST: 10.0.0.102
  AUTH_KEYSTONE_PORT: 35357
  AUTH_KEYSTONE_PROTOCOL: http
  AUTH_NOVA_ADMIN_TENANT: service
  AUTH_NOVA_ADMIN_USER: nova
  AUTH_NOVA_ADMIN_PASS: nova
  GLANCE_HOST: 10.0.0.102
  AUTH_KEYSTONE_URI: http://10.0.0.102:5000
  NEUTRON_URL: http://10.0.0.102:9696
  NEUTRON_ADMIN_USER: neutron
  NEUTRON_ADMIN_PASS: neutron
  NEUTRON_ADMIN_TENANT: service
  NEUTRON_ADMIN_AUTH_URL: http://10.0.0.102:5000/v2.0
  NOVNCPROXY_BASE_URL: http://10.0.0.102:6080/vnc_auto.html
  AUTH_URI: http://10.0.0.102:5000

[root@linux-node2 openstack]# vim neutron.sls

neutron:
  MYSQL_SERVER: 10.0.0.102
  NEUTRON_IP: 10.0.0.102
  NEUTRON_DB_NAME: neutron
  NEUTRON_DB_USER: neutron
  NEUTRON_DB_PASS: neutron
  AUTH_KEYSTONE_HOST: 10.0.0.102
  AUTH_KEYSTONE_PORT: 35357
  AUTH_KEYSTONE_PROTOCOL: http
  AUTH_ADMIN_PASS: neutron
  VM_INTERFACE: eth0
  NOVA_URL: http://10.0.0.102:8774/v2
  NOVA_ADMIN_USER: nova
  NOVA_ADMIN_PASS: nova
  NOVA_ADMIN_TENANT: service
  NOVA_ADMIN_TENANT_ID: cb3d31490b2a4e6daf94b11e2f40accc
  NOVA_ADMIN_AUTH_URL: http://10.0.0.102:35357/v2.0
  AUTH_NEUTRON_ADMIN_TENANT: service
  AUTH_NEUTRON_ADMIN_USER: neutron
  AUTH_NEUTRON_ADMIN_PASS: neutron
  DB_ALLOW: neutron.*
  HOST_ALLOW: 10.0.0.0/255.255.255.0

[root@linux-node2 openstack]# vim cinder.sls

cinder:
  MYSQL_SERVER: 10.0.0.102
  CINDER_DBNAME: cinder
  CINDER_USER: cinder
  CINDER_PASS: cinder
  DB_ALLOW: cinder.*
  HOST_ALLOW: 10.0.0.0/255.255.255.0
  RABBITMQ_HOST: 10.0.0.102
  RABBITMQ_PORT: 5672
  RABBITMQ_USER: guest
  RABBITMQ_PASS: guest
  AUTH_KEYSTONE_HOST: 10.0.0.102
  AUTH_KEYSTONE_PORT: 35357
  AUTH_KEYSTONE_PROTOCOL: http
  AUTH_ADMIN_PASS: admin
  ADMIN_PASSWD: admin
  ADMIN_TOKEN: 5ba5e30637c0dedbc411
  CONTROL_IP: 10.0.0.102
  NFS_IP: 10.0.0.102
  IPADDR: salt['network.ip_addrs']

[root@linux-node2 openstack]# vim horizon.sls

horizon:
  ALLOWED_HOSTS: ['127.0.0.1', '10.0.0.102']
  OPENSTACK_HOST: "10.0.0.102"

[root@linux-node2 openstack]# cd /srv/salt/prod/openstack/

[root@linux-node2 openstack]# mkdir -p init/files

[root@linux-node2 openstack]# vim all-in-one.sls

prod:
  'linux-node2':
    - openstack.control
  'linux-node3':
    - openstack.compute

[root@linux-node2 openstack]# vim control.sls

include:
  - openstack.init.base
  - openstack.rabbitmq.server
  - openstack.mysql.server
  - openstack.mysql.init
  - openstack.keystone.server
  - openstack.glance.server
  - openstack.nova.control
  - openstack.horizon.server
  - openstack.neutron.server

[root@linux-node2 openstack]# vim compute.sls

include:
  - openstack.init.base
  - openstack.nova.compute
  - openstack.neutron.linuxbridge_agent

[root@linux-node2 openstack]# cd init/

[root@linux-node2 init]# vim base.sls

ntp-service:
  pkg.installed:
    - name: ntp
  file.managed:
    - name: /etc/ntp.conf
    - source: salt://openstack/init/files/ntp.conf
    - user: root
    - group: root
    - mode: 644
  cmd.run:
    - name: service ntpd restart

/etc/yum.repos.d/icehouse.repo:
  file.managed:
    - source: salt://openstack/init/files/icehouse.repo
    - user: root
    - group: root
    - mode: 644

/etc/yum.repos.d/epel.repo:
  file.managed:
    - source: salt://openstack/init/files/epel.repo
    - user: root
    - group: root
    - mode: 644

/etc/ntp.conf:
  file.managed:
    - source: salt://openstack/init/files/ntp.conf
    - user: root
    - group: root
    - mode: 644

pkg.base:
  pkg.installed:
    - names:
      - lrzsz
      - MySQL-python
      - python-crypto

[root@linux-node2 init]# cd files/

[root@linux-node2 files]# cp /etc/yum.repos.d/icehouse.repo .

[root@linux-node2 files]# cp /etc/yum.repos.d/epel.repo .

[root@linux-node2 files]# cp /etc/ntp.conf .

[root@linux-node2 files]# salt '*' state.sls openstack.init.base env=prod

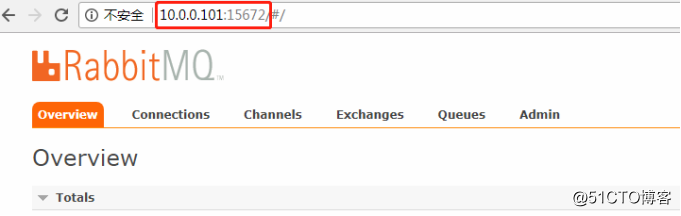

RabbitMQ installation:

[root@linux-node2 files]# cd /srv/salt/prod/openstack/

[root@linux-node2 openstack]# mkdir rabbitmq

[root@linux-node2 openstack]# cd rabbitmq/

[root@linux-node2 rabbitmq]# vim server.sls

rabbitmq-server:
  pkg.installed:
    - name: rabbitmq-server
  cmd.run:
    - name: service rabbitmq-server start && /usr/lib/rabbitmq/bin/rabbitmq-plugins enable rabbitmq_management && service rabbitmq-server restart && rabbitmqctl add_user openstack openstack && rabbitmqctl set_user_tags openstack administrator
    - enable: True
    - require:
      - pkg: rabbitmq-server

[root@linux-node2 rabbitmq]# salt 'linux-node2' state.sls openstack.rabbitmq.server env=prod

MySQL installation:

[root@linux-node2 rabbitmq]# cd ..

[root@linux-node2 openstack]# mkdir -p mysql/files

[root@linux-node2 openstack]# cd mysql/

[root@linux-node2 mysql]# vim server.sls

mysql-server:
  pkg.installed:
    - name: mysql-server
  file.managed:
    - name: /etc/my.cnf
    - source: salt://openstack/mysql/files/my.cnf
  cmd.run:
    - name: service mysqld restart
    - enable: True
    - require:
      - pkg: mysql-server
    - watch:
      - file: mysql-server

include:
  - openstack.mysql.init

[root@linux-node2 mysql]# vim init.sls

include:
  - openstack.mysql.keystone
  - openstack.mysql.glance
  - openstack.mysql.nova
  - openstack.mysql.neutron
  - openstack.mysql.cinder

[root@linux-node2 mysql]# vim keystone.sls

keystone-mysql:
  cmd.run:
    - name: mysql -e "create database keystone;" && mysql -e "grant all on keystone.* to keystone@'10.0.0.0/255.255.255.0' identified by 'keystone';"

[root@linux-node2 mysql]# vim glance.sls

glance-mysql:
  cmd.run:
    - name: mysql -e "create database glance;" && mysql -e "grant all on glance.* to glance@'10.0.0.0/255.255.255.0' identified by 'glance';"

[root@linux-node2 mysql]# vim nova.sls

nova-mysql:
  cmd.run:
    - name: mysql -e "create database nova;" && mysql -e "grant all on nova.* to nova@'10.0.0.0/255.255.255.0' identified by 'nova';"

[root@linux-node2 mysql]# vim neutron.sls

neutron-mysql:
  cmd.run:
    - name: mysql -e "create database neutron;" && mysql -e "grant all on neutron.* to neutron@'10.0.0.0/255.255.255.0' identified by 'neutron';"

[root@linux-node2 mysql]# vim cinder.sls

cinder-mysql:
  cmd.run:
    - name: mysql -e "create database cinder;" && mysql -e "grant all on cinder.* to cinder@'10.0.0.0/255.255.255.0' identified by 'cinder';"
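The `create database` commands in these five states fail on a second run, because the database already exists by then. One way to make them repeatable is MySQL's `CREATE DATABASE IF NOT EXISTS`. The sketch below only prints the SQL. `build_db_sql` is a hypothetical helper, not part of the original states, and the `GRANT ... IDENTIFIED BY` form assumes a MySQL 5.x server like the one used here.

```shell
#!/bin/sh
# Hypothetical helper: print idempotent SQL for one service database.
# Arguments: <database and user name> <password> <allowed host pattern>
build_db_sql() {
  printf "CREATE DATABASE IF NOT EXISTS %s; " "$1"
  printf "GRANT ALL ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" "$1" "$1" "$3" "$2"
}

# Print the statements for the five databases used in this walkthrough.
for svc in keystone glance nova neutron cinder; do
  build_db_sql "$svc" "$svc" "10.0.0.0/255.255.255.0"
done
```

The printed statements could then be piped into the client, for example `build_db_sql keystone keystone 10.0.0.0/255.255.255.0 | mysql`.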

[root@linux-node2 mysql]# salt 'linux-node2' state.sls openstack.mysql.server env=prod

[root@linux-node2 mysql]# mysql -e "show databases;"

+--------------------+

| Database |

+--------------------+

| information_schema |

| cinder |

| glance |

| keystone |

| mysql |

| neutron |

| nova |

| test |

+--------------------+

Keystone installation:

[root@linux-node2 mysql]# cd /srv/salt/prod/openstack

[root@linux-node2 openstack]# mkdir -p keystone/files/config

[root@linux-node2 openstack]# cd keystone/

[root@linux-node2 keystone]# vim server.sls

include:
  - openstack.keystone.init

keystone-install:
  pkg.installed:
    - names:
      - openstack-keystone
      - python-keystoneclient

/etc/keystone/keystone.conf:
  file.managed:
    - source: salt://openstack/keystone/files/config/keystone.conf
    - user: keystone
    - group: keystone
    - template: jinja
    - defaults:
        KEYSTONE_ADMIN_TOKEN: {{ pillar['keystone']['KEYSTONE_ADMIN_TOKEN'] }}
        MYSQL_SERVER: {{ pillar['keystone']['MYSQL_SERVER'] }}
        KEYSTONE_DB_PASS: {{ pillar['keystone']['KEYSTONE_DB_PASS'] }}
        KEYSTONE_DB_USER: {{ pillar['keystone']['KEYSTONE_DB_USER'] }}
        KEYSTONE_DB_NAME: {{ pillar['keystone']['KEYSTONE_DB_NAME'] }}

keystone-pki-setup:
  cmd.run:
    - name: keystone-manage pki_setup --keystone-user keystone --keystone-group keystone && chown -R keystone:keystone /etc/keystone/ssl && chmod -R o-rwx /etc/keystone/ssl
    - require:
      - pkg: keystone-install
    - unless: test -d /etc/keystone/ssl

keystone-db-sync:
  cmd.run:
    - name: keystone-manage db_sync && touch /etc/keystone-datasync.lock && chown keystone:keystone /var/log/keystone/*
    - require:
      - pkg: keystone-install
    - unless: test -f /etc/keystone-datasync.lock

keystone-service:
  cmd.run:
    - name: service openstack-keystone restart

/root/keystone_admin:
  file.managed:
    - source: salt://openstack/keystone/files/keystone_admin
    - user: root
    - group: root
    - mode: 644
    - template: jinja
    - defaults:
        KEYSTONE_ADMIN_TENANT: {{ pillar['keystone']['KEYSTONE_ADMIN_TENANT'] }}
        KEYSTONE_ADMIN_USER: {{ pillar['keystone']['KEYSTONE_ADMIN_USER'] }}
        KEYSTONE_ADMIN_PASSWD: {{ pillar['keystone']['KEYSTONE_ADMIN_PASSWD'] }}
        KEYSTONE_AUTH_URL: {{ pillar['keystone']['KEYSTONE_AUTH_URL'] }}

[root@linux-node2 keystone]# vim init.sls

keystone-init:
  file.managed:
    - name: /usr/local/bin/keystone_init.sh
    - source: salt://openstack/keystone/files/keystone_init.sh
    - mode: 755
    - user: root
    - group: root
    - template: jinja
    - defaults:
        KEYSTONE_ADMIN_TOKEN: {{ pillar['keystone']['KEYSTONE_ADMIN_TOKEN'] }}
        KEYSTONE_ADMIN_TENANT: {{ pillar['keystone']['KEYSTONE_ADMIN_TENANT'] }}
        KEYSTONE_ADMIN_USER: {{ pillar['keystone']['KEYSTONE_ADMIN_USER'] }}
        KEYSTONE_ADMIN_PASSWD: {{ pillar['keystone']['KEYSTONE_ADMIN_PASSWD'] }}
        KEYSTONE_ROLE_NAME: {{ pillar['keystone']['KEYSTONE_ROLE_NAME'] }}
        KEYSTONE_AUTH_URL: {{ pillar['keystone']['KEYSTONE_AUTH_URL'] }}
        KEYSTONE_IP: {{ pillar['keystone']['KEYSTONE_IP'] }}
  cmd.run:
    - name: sleep 10 && bash /usr/local/bin/keystone_init.sh && touch /etc/keystone-init.lock
    - require:
      - file: keystone-init
    - unless: test -f /etc/keystone-init.lock
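The `unless: test -f ...lock` guards used by `keystone-db-sync` and `keystone-init` are a run-once pattern: the command touches a lock file when it succeeds, and Salt skips the state on later runs because the `unless` test passes. A minimal plain-shell sketch of the same idea follows. `run_once` is a hypothetical helper written for illustration and is not something Salt provides.

```shell
#!/bin/sh
# run_once <lockfile> <command...>
# Run the command only if the lock file is absent; create the lock on success.
run_once() {
  lock="$1"
  shift
  if [ -f "$lock" ]; then
    echo "skip: $lock exists"
    return 0
  fi
  "$@" && touch "$lock"
}

# Demo with a temporary lock path and a harmless command.
demo_lock="$(mktemp -u)"
run_once "$demo_lock" echo "first run"    # runs the command, then creates the lock
run_once "$demo_lock" echo "second run"   # skipped because the lock now exists
rm -f "$demo_lock"
```

As in the states above, a failed command leaves no lock behind, so the next run retries it. Deleting the lock file by hand forces a rerun.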

[root@linux-node2 keystone]# cd files/

[root@linux-node2 files]# vim keystone_admin

export OS_TENANT_NAME="{{KEYSTONE_ADMIN_TENANT}}"

export OS_USERNAME="{{KEYSTONE_ADMIN_USER}}"

export OS_PASSWORD="{{KEYSTONE_ADMIN_PASSWD}}"

export OS_AUTH_URL="{{KEYSTONE_AUTH_URL}}"

[root@linux-node2 files]# vim keystone_init.sh

export OS_SERVICE_TOKEN="{{KEYSTONE_ADMIN_TOKEN}}"

export OS_SERVICE_ENDPOINT="{{KEYSTONE_AUTH_URL}}"

keystone user-create --name={{KEYSTONE_ADMIN_USER}} --pass="{{KEYSTONE_ADMIN_PASSWD}}"

keystone tenant-create --name={{KEYSTONE_ADMIN_TENANT}} --description="Admin Tenant"

keystone role-create --name={{KEYSTONE_ROLE_NAME}}

keystone user-role-add --user={{KEYSTONE_ADMIN_USER}} --tenant={{KEYSTONE_ADMIN_TENANT}} --role={{KEYSTONE_ROLE_NAME}}

keystone user-role-add --user={{KEYSTONE_ADMIN_USER}} --role=_member_ --tenant={{KEYSTONE_ADMIN_TENANT}}

keystone tenant-create --name=service

keystone service-create --name=keystone --type=identity --description="Openstack Identity"

#Keystone Service and Endpoint
keystone endpoint-create --service-id=$(keystone service-list|awk '/identity/{print $2}') \
    --publicurl="http://{{KEYSTONE_IP}}:5000/v2.0" \
    --adminurl="http://{{KEYSTONE_IP}}:35357/v2.0" \
    --internalurl="http://{{KEYSTONE_IP}}:5000/v2.0"

unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

cd /root

source /root/keystone_admin

keystone user-role-list --user admin --tenant admin
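The `--service-id=$(keystone service-list|awk '/identity/{print $2}')` expression in the script picks the service id out of the table that the client prints. The sketch below runs the same awk program against a hard-coded sample, so it works without a Keystone server. The table text and the id in it are made up for illustration.

```shell
#!/bin/sh
# Hypothetical sample of `keystone service-list` output; the id is invented.
sample_output='+----------------------------------+----------+----------+--------------------+
|                id                |   name   |   type   |    description     |
+----------------------------------+----------+----------+--------------------+
| 0123456789abcdef0123456789abcdef | keystone | identity | Openstack Identity |
+----------------------------------+----------+----------+--------------------+'

# awk splits on whitespace, so field 1 is the leading "|" and field 2 is the id.
service_id=$(printf '%s\n' "$sample_output" | awk '/identity/{print $2}')
echo "$service_id"
```

The pattern matches anywhere on the line, so a more specific pattern with surrounding spaces, like the `/ image /` used in `glance_init.sh`, is less likely to match a second row by accident.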

[root@linux-node2 config]# vim keystone.conf ## change the following two settings in the default configuration file

admin_token={{KEYSTONE_ADMIN_TOKEN}}

connection=mysql://{{KEYSTONE_DB_USER}}:{{KEYSTONE_DB_PASS}}@{{MYSQL_SERVER}}/{{KEYSTONE_DB_NAME}}
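To see what the `connection=` line should look like after Salt renders the template, the same substitution can be done by hand. This sketch uses shell variables in place of the Jinja placeholders, with values copied from the keystone pillar shown earlier. It only illustrates the expected result and is not how Salt renders the file.

```shell
#!/bin/sh
# Values copied from the keystone pillar in this walkthrough.
KEYSTONE_DB_USER=keystone
KEYSTONE_DB_PASS=keystone
MYSQL_SERVER=10.0.0.102
KEYSTONE_DB_NAME=keystone

# Same layout as the template line: user:password@host/database.
rendered=$(printf 'connection=mysql://%s:%s@%s/%s' \
  "$KEYSTONE_DB_USER" "$KEYSTONE_DB_PASS" "$MYSQL_SERVER" "$KEYSTONE_DB_NAME")
echo "$rendered"
```

After the state has run, comparing this string with the output of `grep '^connection' /etc/keystone/keystone.conf` on the minion is a quick check that the pillar values arrived as intended.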

[root@linux-node2 config]# salt 'linux-node2' state.sls openstack.keystone.server env=prod

[root@linux-node2 config]# source /root/keystone_admin

[root@linux-node2 config]# keystone user-role-list --user admin --tenant admin ## verify

+----------------------------------+----------+----------------------------------+----------------------------------+

| id | name | user_id | tenant_id |

+----------------------------------+----------+----------------------------------+----------------------------------+

| 9fe2ff9ee4384b1894a90878d3e92bab | _member_ | 6e212a9e54e9476cbc9fa3798cdd4f6d | ff42d5e633ef484b98fa8be7d93f0ed6 |

| 063623dc2dfd4bb49d05cdbe8ca0c4d1 | admin | 6e212a9e54e9476cbc9fa3798cdd4f6d | ff42d5e633ef484b98fa8be7d93f0ed6 |

+----------------------------------+----------+----------------------------------+----------------------------------+

Glance installation:

[root@linux-node2 config]# cd /srv/salt/prod/openstack

[root@linux-node2 openstack]# mkdir -p glance/files/config

[root@linux-node2 openstack]# cd glance/

[root@linux-node2 glance]# vim server.sls

include:
  - openstack.glance.init

glance-install:
  pkg.installed:
    - names:
      - openstack-glance
      - python-glanceclient

/etc/glance/glance-api.conf:
  file.managed:
    - source: salt://openstack/glance/files/config/glance-api.conf
    - user: glance
    - group: glance
    - template: jinja
    - defaults:
        MYSQL_SERVER: {{ pillar['keystone']['MYSQL_SERVER'] }}
        GLANCE_DB_PASS: {{ pillar['glance']['GLANCE_DB_PASS'] }}
        GLANCE_DB_USER: {{ pillar['glance']['GLANCE_DB_USER'] }}
        GLANCE_DB_NAME: {{ pillar['glance']['GLANCE_DB_NAME'] }}
        RABBITMQ_HOST: {{ pillar['rabbit']['RABBITMQ_HOST'] }}
        RABBITMQ_PORT: {{ pillar['rabbit']['RABBITMQ_PORT'] }}
        RABBITMQ_USER: {{ pillar['rabbit']['RABBITMQ_USER'] }}
        RABBITMQ_PASS: {{ pillar['rabbit']['RABBITMQ_PASS'] }}
        AUTH_KEYSTONE_HOST: {{ pillar['glance']['AUTH_KEYSTONE_HOST'] }}
        AUTH_KEYSTONE_PORT: {{ pillar['glance']['AUTH_KEYSTONE_PORT'] }}
        AUTH_KEYSTONE_PROTOCOL: {{ pillar['glance']['AUTH_KEYSTONE_PROTOCOL'] }}
        AUTH_GLANCE_ADMIN_TENANT: {{ pillar['glance']['AUTH_GLANCE_ADMIN_TENANT'] }}
        AUTH_GLANCE_ADMIN_USER: {{ pillar['glance']['AUTH_GLANCE_ADMIN_USER'] }}
        AUTH_GLANCE_ADMIN_PASS: {{ pillar['glance']['AUTH_GLANCE_ADMIN_PASS'] }}

/etc/glance/glance-registry.conf:
  file.managed:
    - source: salt://openstack/glance/files/config/glance-registry.conf
    - user: glance
    - group: glance
    - template: jinja
    - defaults:
        MYSQL_SERVER: {{ pillar['keystone']['MYSQL_SERVER'] }}
        GLANCE_DB_PASS: {{ pillar['glance']['GLANCE_DB_PASS'] }}
        GLANCE_DB_USER: {{ pillar['glance']['GLANCE_DB_USER'] }}
        GLANCE_DB_NAME: {{ pillar['glance']['GLANCE_DB_NAME'] }}
        RABBITMQ_HOST: {{ pillar['rabbit']['RABBITMQ_HOST'] }}
        RABBITMQ_PORT: {{ pillar['rabbit']['RABBITMQ_PORT'] }}
        RABBITMQ_USER: {{ pillar['rabbit']['RABBITMQ_USER'] }}
        RABBITMQ_PASS: {{ pillar['rabbit']['RABBITMQ_PASS'] }}
        AUTH_KEYSTONE_HOST: {{ pillar['glance']['AUTH_KEYSTONE_HOST'] }}
        AUTH_KEYSTONE_PORT: {{ pillar['glance']['AUTH_KEYSTONE_PORT'] }}
        AUTH_KEYSTONE_PROTOCOL: {{ pillar['glance']['AUTH_KEYSTONE_PROTOCOL'] }}
        AUTH_GLANCE_ADMIN_TENANT: {{ pillar['glance']['AUTH_GLANCE_ADMIN_TENANT'] }}
        AUTH_GLANCE_ADMIN_USER: {{ pillar['glance']['AUTH_GLANCE_ADMIN_USER'] }}
        AUTH_GLANCE_ADMIN_PASS: {{ pillar['glance']['AUTH_GLANCE_ADMIN_PASS'] }}

glance-db-sync:
  cmd.run:
    - name: yum install -y python-crypto && glance-manage db_sync && touch /etc/glance-datasync.lock && chown glance:glance /var/log/glance/*
    - require:
      - pkg: glance-install
    - unless: test -f /etc/glance-datasync.lock

openstack-glance-api:
  file.managed:
    - name: /etc/init.d/openstack-glance-api
    - source: salt://openstack/glance/files/openstack-glance-api
    - mode: 755
    - user: root
    - group: root
  cmd.run:
    - name: /etc/init.d/openstack-glance-api restart && chkconfig openstack-glance-api on
    - watch:
      - file: openstack-glance-api

openstack-glance-registry:
  file.managed:
    - name: /etc/init.d/openstack-glance-registry
    - source: salt://openstack/glance/files/openstack-glance-registry
    - mode: 755
    - user: root
    - group: root
  cmd.run:
    - name: /etc/init.d/openstack-glance-registry restart && chkconfig openstack-glance-registry on
    - watch:
      - file: openstack-glance-registry

[root@linux-node2 glance]# vim init.sls

glance-init:
  file.managed:
    - name: /usr/local/bin/glance_init.sh
    - source: salt://openstack/glance/files/glance_init.sh
    - mode: 755
    - user: root
    - group: root
    - template: jinja
    - defaults:
        KEYSTONE_ADMIN_TENANT: {{ pillar['keystone']['KEYSTONE_ADMIN_TENANT'] }}
        KEYSTONE_ADMIN_USER: {{ pillar['keystone']['KEYSTONE_ADMIN_USER'] }}
        KEYSTONE_ADMIN_PASSWD: {{ pillar['keystone']['KEYSTONE_ADMIN_PASSWD'] }}
        KEYSTONE_AUTH_URL: {{ pillar['keystone']['KEYSTONE_AUTH_URL'] }}
        GLANCE_IP: {{ pillar['glance']['GLANCE_IP'] }}
        AUTH_GLANCE_ADMIN_TENANT: {{ pillar['glance']['AUTH_GLANCE_ADMIN_TENANT'] }}
        AUTH_GLANCE_ADMIN_USER: {{ pillar['glance']['AUTH_GLANCE_ADMIN_USER'] }}
        AUTH_GLANCE_ADMIN_PASS: {{ pillar['glance']['AUTH_GLANCE_ADMIN_PASS'] }}
  cmd.run:
    - name: sleep 10 && bash /usr/local/bin/glance_init.sh && touch /etc/glance-init.lock
    - require:
      - file: glance-init
    - unless: test -f /etc/glance-init.lock

[root@linux-node2 glance]# cd files/

[root@linux-node2 files]# vim glance_init.sh

source /root/keystone_admin

keystone user-create --name={{AUTH_GLANCE_ADMIN_USER}} --pass={{AUTH_GLANCE_ADMIN_PASS}} --email=glance@example.com

keystone user-role-add --user={{AUTH_GLANCE_ADMIN_USER}} --tenant={{AUTH_GLANCE_ADMIN_TENANT}} --role=admin

keystone service-create --name=glance --type=image --description="OpenStack Image Service"

keystone endpoint-create \
    --service-id=$(keystone service-list|awk '/ image /{print $2}') \
    --publicurl="http://{{GLANCE_IP}}:9292" \
    --adminurl="http://{{GLANCE_IP}}:9292" \
    --internalurl="http://{{GLANCE_IP}}:9292"

[root@linux-node2 files]# cd config/

[root@linux-node2 config]# grep "^[a-z]" glance-api.conf -n

6:debug=False

43:log_file=/var/log/glance/api.log

232:notifier_strategy = rabbit

242:rabbit_host={{RABBITMQ_HOST}}

243:rabbit_port={{RABBITMQ_PORT}}

244:rabbit_use_ssl=false

245:rabbit_userid={{RABBITMQ_USER}}

246:rabbit_password={{RABBITMQ_PASS}}

247:rabbit_virtual_host=/

248:rabbit_notification_exchange=glance

249:rabbit_notification_topic=notifications

250:rabbit_durable_queues=False

564:connection=mysql://{{GLANCE_DB_USER}}:{{GLANCE_DB_PASS}}@{{MYSQL_SERVER}}/{{GLANCE_DB_NAME}}

645:auth_host={{AUTH_KEYSTONE_HOST}}

646:auth_port={{AUTH_KEYSTONE_PORT}}

647:auth_protocol={{AUTH_KEYSTONE_PROTOCOL}}

648:admin_tenant_name={{AUTH_GLANCE_ADMIN_TENANT}}

649:admin_user={{AUTH_GLANCE_ADMIN_USER}}

650:admin_password={{AUTH_GLANCE_ADMIN_PASS}}

660:flavor=keystone

[root@linux-node2 config]# grep "^[a-z]" glance-registry.conf -n

6:debug=False

19:log_file=/var/log/glance/registry.log

94:connection=mysql://{{GLANCE_DB_USER}}:{{GLANCE_DB_PASS}}@{{MYSQL_SERVER}}/{{GLANCE_DB_NAME}}

175:auth_host={{AUTH_KEYSTONE_HOST}}

176:auth_port={{AUTH_KEYSTONE_PORT}}

177:auth_protocol={{AUTH_KEYSTONE_PROTOCOL}}

178:admin_tenant_name={{AUTH_GLANCE_ADMIN_TENANT}}

179:admin_user={{AUTH_GLANCE_ADMIN_USER}}

180:admin_password={{AUTH_GLANCE_ADMIN_PASS}}

190:flavor=keystone
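The `grep "^[a-z]" ... -n` calls above print only the active settings: lines that start with a lowercase letter, which excludes comments and `[section]` headers. The `-n` flag adds each line's number in the file. A small sketch against a throwaway file shows the effect. The file contents here are invented and much shorter than the real glance configuration.

```shell
#!/bin/sh
# Build a tiny stand-in config file; the contents are invented for the demo.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
[DEFAULT]
# debug=True
debug=False
#log_file=
log_file=/var/log/glance/api.log
EOF

# Keep only lines starting with a lowercase letter, prefixed by line number.
active=$(grep -n '^[a-z]' "$conf")
echo "$active"
rm -f "$conf"
```

Running the same command against the rendered file on the minion, for example `/etc/glance/glance-api.conf`, should show pillar values in place of the `{{...}}` placeholders.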

[root@linux-node2 config]# salt 'linux-node2' state.sls openstack.glance.server env=prod

[root@linux-node2 config]# source /root/keystone_admin

[root@linux-node2 config]# glance image-list ## verify

+----+------+-------------+------------------+------+--------+

| ID | Name | Disk Format | Container Format | Size | Status |

+----+------+-------------+------------------+------+--------+

+----+------+-------------+------------------+------+--------+

Nova controller node installation:

[root@linux-node2 config]# cd /srv/salt/prod/openstack/

[root@linux-node2 openstack]# mkdir -p nova/files/config

[root@linux-node2 openstack]# cd nova/

[root@linux-node2 nova]# vim init.sls

nova-init:
  file.managed:
    - name: /usr/local/bin/nova_init.sh
    - source: salt://openstack/nova/files/nova_init.sh
    - mode: 755
    - user: root
    - group: root
    - template: jinja
    - defaults:
        KEYSTONE_ADMIN_TENANT: {{ pillar['keystone']['KEYSTONE_ADMIN_TENANT'] }}
        KEYSTONE_ADMIN_USER: {{ pillar['keystone']['KEYSTONE_ADMIN_USER'] }}
        KEYSTONE_ADMIN_PASSWD: {{ pillar['keystone']['KEYSTONE_ADMIN_PASSWD'] }}
        KEYSTONE_AUTH_URL: {{ pillar['keystone']['KEYSTONE_AUTH_URL'] }}
        NOVA_IP: {{ pillar['nova']['NOVA_IP'] }}
        AUTH_NOVA_ADMIN_TENANT: {{ pillar['nova']['AUTH_NOVA_ADMIN_TENANT'] }}
        AUTH_NOVA_ADMIN_USER: {{ pillar['nova']['AUTH_NOVA_ADMIN_USER'] }}
        AUTH_NOVA_ADMIN_PASS: {{ pillar['nova']['AUTH_NOVA_ADMIN_PASS'] }}
  cmd.run:
    - name: bash /usr/local/bin/nova_init.sh && touch /etc/nova-datainit.lock
    - require:
      - file: nova-init
    - unless: test -f /etc/nova-datainit.lock

[root@linux-node2 nova]# vim config.sls

/etc/nova/nova.conf:
  file.managed:
    - source: salt://openstack/nova/files/config/nova.conf
    - user: nova
    - group: nova
    - template: jinja
    - defaults:
        MYSQL_SERVER: {{ pillar['nova']['MYSQL_SERVER'] }}
        NOVA_IP: {{ pillar['nova']['NOVA_IP'] }}
        NOVA_DB_PASS: {{ pillar['nova']['NOVA_DB_PASS'] }}
        NOVA_DB_USER: {{ pillar['nova']['NOVA_DB_USER'] }}
        NOVA_DB_NAME: {{ pillar['nova']['NOVA_DB_NAME'] }}
        RABBITMQ_HOST: {{ pillar['rabbit']['RABBITMQ_HOST'] }}
        RABBITMQ_PORT: {{ pillar['rabbit']['RABBITMQ_PORT'] }}
        RABBITMQ_USER: {{ pillar['rabbit']['RABBITMQ_USER'] }}
        RABBITMQ_PASS: {{ pillar['rabbit']['RABBITMQ_PASS'] }}
        AUTH_KEYSTONE_HOST: {{ pillar['nova']['AUTH_KEYSTONE_HOST'] }}
        AUTH_KEYSTONE_PORT: {{ pillar['nova']['AUTH_KEYSTONE_PORT'] }}
        AUTH_KEYSTONE_PROTOCOL: {{ pillar['nova']['AUTH_KEYSTONE_PROTOCOL'] }}
        AUTH_NOVA_ADMIN_TENANT: {{ pillar['nova']['AUTH_NOVA_ADMIN_TENANT'] }}
        AUTH_NOVA_ADMIN_USER: {{ pillar['nova']['AUTH_NOVA_ADMIN_USER'] }}
        AUTH_NOVA_ADMIN_PASS: {{ pillar['nova']['AUTH_NOVA_ADMIN_PASS'] }}
        NEUTRON_URL: {{ pillar['nova']['NEUTRON_URL'] }}
        NEUTRON_ADMIN_USER: {{ pillar['nova']['NEUTRON_ADMIN_USER'] }}
        NEUTRON_ADMIN_PASS: {{ pillar['nova']['NEUTRON_ADMIN_PASS'] }}
        NEUTRON_ADMIN_TENANT: {{ pillar['nova']['NEUTRON_ADMIN_TENANT'] }}
        NEUTRON_ADMIN_AUTH_URL: {{ pillar['nova']['NEUTRON_ADMIN_AUTH_URL'] }}
        NOVNCPROXY_BASE_URL: {{ pillar['nova']['NOVNCPROXY_BASE_URL'] }}
        VNCSERVER_PROXYCLIENT: {{ grains['fqdn'] }}
        AUTH_URI: {{ pillar['nova']['AUTH_URI'] }}

[root@linux-node2 nova]# vim control.sls

include:
  - openstack.nova.config
  - openstack.nova.init

nova-control-install:
  pkg.installed:
    - names:
      - openstack-nova-api
      - openstack-nova-cert
      - openstack-nova-conductor
      - openstack-nova-console
      - openstack-nova-novncproxy
      - openstack-nova-scheduler
      - python-novaclient

nova-db-sync:
  cmd.run:
    - name: nova-manage db sync && touch /etc/nova-dbsync.lock && chown nova:nova /var/log/nova/*
    - require:
      - pkg: nova-control-install
    - unless: test -f /etc/nova-dbsync.lock

nova-api-service:
  file.managed:
    - name: /etc/init.d/openstack-nova-api
    - source: salt://openstack/nova/files/openstack-nova-api
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: service openstack-nova-api restart && chkconfig openstack-nova-api on
    - enable: True
    - watch:
      - file: nova-api-service
    - require:
      - pkg: nova-control-install
      - cmd: nova-db-sync

nova-cert-service:
  file.managed:
    - name: /etc/init.d/openstack-nova-cert
    - source: salt://openstack/nova/files/openstack-nova-cert
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: service openstack-nova-cert restart && chkconfig openstack-nova-cert on
    - enable: True
    - watch:
      - file: nova-cert-service
    - require:
      - pkg: nova-control-install
      - cmd: nova-db-sync

nova-conductor-service:
  file.managed:
    - name: /etc/init.d/openstack-nova-conductor
    - source: salt://openstack/nova/files/openstack-nova-conductor
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: service openstack-nova-conductor restart && chkconfig openstack-nova-conductor on
    - enable: True
    - watch:
      - file: nova-conductor-service
    - require:
      - pkg: nova-control-install
      - cmd: nova-db-sync

nova-consoleauth-service:
  file.managed:
    - name: /etc/init.d/openstack-nova-consoleauth
    - source: salt://openstack/nova/files/openstack-nova-consoleauth
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: service openstack-nova-consoleauth restart && chkconfig openstack-nova-consoleauth on
    - enable: True
    - watch:
      - file: nova-consoleauth-service
    - require:
      - pkg: nova-control-install
      - cmd: nova-db-sync

nova-novncproxy-service:
  file.managed:
    - name: /etc/init.d/openstack-nova-novncproxy
    - source: salt://openstack/nova/files/openstack-nova-novncproxy
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: service openstack-nova-novncproxy restart && chkconfig openstack-nova-novncproxy on
    - enable: True
    - watch:
      - file: nova-novncproxy-service
    - require:
      - pkg: nova-control-install
      - cmd: nova-db-sync

nova-scheduler-service:
  file.managed:
    - name: /etc/init.d/openstack-nova-scheduler
    - source: salt://openstack/nova/files/openstack-nova-scheduler
    - user: root
    - group: root
    - mode: 755
  cmd.run:
    - name: service openstack-nova-scheduler restart && chkconfig openstack-nova-scheduler on
    - enable: True
    - watch:
      - file: nova-scheduler-service
    - require:
      - pkg: nova-control-install
      - cmd: nova-db-sync

[root@linux-node2 nova]# vim compute.sls

include:
  - openstack.nova.config

/etc/yum.repos.d/icehouse.repo:
  file.managed:
    - source: salt://openstack/init/files/icehouse.repo
    - user: root
    - group: root
    - mode: 644

nova-compute-install:
  pkg.installed:
    - names:
      - qemu-kvm
      - libvirt
      - libvirt-python
      - libvirt-client
      - openstack-nova-compute
      - python-novaclient
      - sysfsutils
  cmd.run:
    - name: /etc/init.d/libvirtd restart && chkconfig libvirtd on && /etc/init.d/messagebus restart && chkconfig messagebus on && /etc/init.d/openstack-nova-compute restart && chkconfig openstack-nova-compute on

[root@linux-node2 nova]# salt 'linux-node2' state.sls openstack.nova.control env=prod

[root@linux-node2 openstack]# nova host-list

+-------------+-------------+----------+

| host_name | service | zone |

+-------------+-------------+----------+

| linux-node2 | cert | internal |

| linux-node2 | conductor | internal |

| linux-node2 | scheduler | internal |

| linux-node2 | consoleauth | internal |

+-------------+-------------+----------+

[root@linux-node2 openstack]# nova flavor-list

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

Nova compute node installation:

[root@linux-node2 nova]# salt 'linux-node3' state.sls openstack.nova.compute env=prod

[root@linux-node2 nova]# nova service-list ## verify

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

| Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

| nova-conductor | linux-node1 | internal | enabled | down | 2018-07-21T17:10:11.000000 | - |

| nova-cert | linux-node2 | internal | enabled | up | 2018-07-21T18:04:53.000000 | - |

| nova-conductor | linux-node2 | internal | enabled | up | 2018-07-21T18:04:53.000000 | - |

| nova-scheduler | linux-node2 | internal | enabled | up | 2018-07-21T18:04:43.000000 | - |

| nova-consoleauth | linux-node2 | internal | enabled | up | 2018-07-21T18:04:43.000000 | - |

| nova-compute | linux-node3 | nova | enabled | up | 2018-07-21T18:04:53.000000 | - |

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

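[root@linux-node2 nova]# nova service-list ## verify

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

| Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |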

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

| nova-conductor | linux-node1 | internal | enabled | down | 2018-07-21T17:10:11.000000 | - |

| nova-cert | linux-node2 | internal | enabled | up | 2018-07-21T18:06:03.000000 | - |

| nova-conductor | linux-node2 | internal | enabled | up | 2018-07-21T18:06:03.000000 | - |

| nova-scheduler | linux-node2 | internal | enabled | up | 2018-07-21T18:06:03.000000 | - |

| nova-consoleauth | linux-node2 | internal | enabled | up | 2018-07-21T18:06:03.000000 | - |

| nova-compute | linux-node3 | nova | enabled | up | 2018-07-21T18:06:03.000000 | - |

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

Neutron installation:

[root@linux-node2 nova]# cd /srv/salt/prod/openstack/

[root@linux-node2 openstack]# mkdir -p neutron/files/config

[root@linux-node2 openstack]# cd neutron/

[root@linux-node2 neutron]# vim server.sls

include:
  - openstack.neutron.config
  - openstack.neutron.linuxbridge_agent
  - openstack.neutron.init

neutron-server:
  pkg.installed:
    - names:
      - openstack-neutron
      - openstack-neutron-ml2
      - python-neutronclient
      - openstack-neutron-linuxbridge
  file.managed:
    - name: /etc/init.d/neutron-server
    - source: salt://openstack/neutron/files/neutron-server
    - mode: 755
    - user: root
    - group: root
  cmd.run:
    - name: /etc/init.d/neutron-server start && chkconfig neutron-server on
    - require:
      - pkg: neutron-server

[root@linux-node2 neutron]# vim config.sls

/etc/neutron/neutron.conf:
  file.managed:
    - source: salt://openstack/neutron/files/config/neutron.conf
    - user: neutron
    - group: neutron
    - template: jinja
    - defaults:
        MYSQL_SERVER: {{ pillar['neutron']['MYSQL_SERVER'] }}
        NEUTRON_IP: {{ pillar['neutron']['NEUTRON_IP'] }}
        NEUTRON_DB_NAME: {{ pillar['neutron']['NEUTRON_DB_NAME'] }}
        NEUTRON_DB_USER: {{ pillar['neutron']['NEUTRON_DB_USER'] }}
        NEUTRON_DB_PASS: {{ pillar['neutron']['NEUTRON_DB_PASS'] }}
        AUTH_KEYSTONE_HOST: {{ pillar['neutron']['AUTH_KEYSTONE_HOST'] }}
        AUTH_KEYSTONE_PORT: {{ pillar['neutron']['AUTH_KEYSTONE_PORT'] }}
        AUTH_KEYSTONE_PROTOCOL: {{ pillar['neutron']['AUTH_KEYSTONE_PROTOCOL'] }}
        AUTH_ADMIN_PASS: {{ pillar['neutron']['AUTH_ADMIN_PASS'] }}
        NOVA_URL: {{ pillar['neutron']['NOVA_URL'] }}
        NOVA_ADMIN_USER: {{ pillar['neutron']['NOVA_ADMIN_USER'] }}
        NOVA_ADMIN_PASS: {{ pillar['neutron']['NOVA_ADMIN_PASS'] }}
        NOVA_ADMIN_TENANT: {{ pillar['neutron']['NOVA_ADMIN_TENANT'] }}
        NOVA_ADMIN_AUTH_URL: {{ pillar['neutron']['NOVA_ADMIN_AUTH_URL'] }}
        RABBITMQ_HOST: {{ pillar['rabbit']['RABBITMQ_HOST'] }}
        RABBITMQ_PORT: {{ pillar['rabbit']['RABBITMQ_PORT'] }}
        RABBITMQ_USER: {{ pillar['rabbit']['RABBITMQ_USER'] }}
        RABBITMQ_PASS: {{ pillar['rabbit']['RABBITMQ_PASS'] }}
        AUTH_NEUTRON_ADMIN_TENANT: {{ pillar['neutron']['AUTH_NEUTRON_ADMIN_TENANT'] }}
        AUTH_NEUTRON_ADMIN_USER: {{ pillar['neutron']['AUTH_NEUTRON_ADMIN_USER'] }}
        AUTH_NEUTRON_ADMIN_PASS: {{ pillar['neutron']['AUTH_NEUTRON_ADMIN_PASS'] }}
        VM_INTERFACE: {{ pillar['neutron']['VM_INTERFACE'] }}

/etc/neutron/plugins/linuxbridge/linuxbridge_conf.ini:
  file.managed:
    - source: salt://openstack/neutron/files/config/plugins/linuxbridge/linuxbridge_conf.ini
    - user: neutron
    - group: neutron

/etc/neutron/plugins/ml2/ml2_conf.ini:
  file.managed:
    - source: salt://openstack/neutron/files/config/plugins/ml2/ml2_conf.ini
    - user: neutron
    - group: neutron

/etc/nova/nova.conf:
  file.managed:
    - source: salt://openstack/neutron/files/config/nova.conf
    - user: nova
    - group: nova
  cmd.run:
    - name: for i in {api,conductor,scheduler};do service openstack-nova-"$i" restart;done
    - require:
      - file: /etc/nova/nova.conf
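
Every value under `defaults:` in config.sls is read from pillar, so a key that is missing from the pillar files makes the state fail when it is rendered. As a rough aid, the sketch below lists each `pillar['section']['KEY']` reference in an SLS file so the list can be compared with the pillar files by hand. The function name `list_pillar_keys` and the temporary sample file are illustrative only and are not part of the original setup.

```shell
#!/bin/bash
# Hypothetical helper: print every pillar['section']['KEY'] reference found
# in an SLS file as "section:KEY", one per line, sorted and de-duplicated.
list_pillar_keys() {
    grep -o "pillar\['[A-Za-z0-9_]*']\['[A-Za-z0-9_]*']" "$1" \
        | sed "s/^pillar\['\([^']*\)']\['\([^']*\)']\$/\1:\2/" \
        | sort -u
}

# A few lines copied from config.sls, written to a temp file for the demo.
sample=$(mktemp)
cat > "$sample" <<'EOF'
NEUTRON_IP: {{ pillar['neutron']['NEUTRON_IP'] }}
RABBITMQ_HOST: {{ pillar['rabbit']['RABBITMQ_HOST'] }}
VM_INTERFACE: {{ pillar['neutron']['VM_INTERFACE'] }}
EOF

list_pillar_keys "$sample"
rm -f "$sample"
```

On the real tree the same function could be pointed at /srv/salt/prod/openstack/neutron/config.sls.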

[root@linux-node2 neutron]# vim linuxbridge_agent.sls

include:
  - openstack.neutron.config

neutron-linuxbridge-agent:
  pkg.installed:
    - names:
      - openstack-neutron
      - openstack-neutron-ml2
      - python-neutronclient
      - openstack-neutron-linuxbridge
  file.managed:
    - name: /etc/init.d/neutron-linuxbridge-agent
    - source: salt://openstack/neutron/files/neutron-linuxbridge-agent
    - mode: 755
    - user: root
    - group: root
  cmd.run:
    - name: /etc/init.d/neutron-linuxbridge-agent restart && chkconfig neutron-linuxbridge-agent on
    - watch:
      - file: neutron-linuxbridge-agent
    - require:
      - pkg: neutron-linuxbridge-agent

[root@linux-node2 neutron]# vim init.sls

neutron-init:
  file.managed:
    - name: /usr/local/bin/neutron_init.sh
    - source: salt://openstack/neutron/files/neutron_init.sh
    - mode: 755
    - user: root
    - group: root
    - template: jinja
    - defaults:
        KEYSTONE_ADMIN_TENANT: {{ pillar['keystone']['KEYSTONE_ADMIN_TENANT'] }}
        KEYSTONE_ADMIN_USER: {{ pillar['keystone']['KEYSTONE_ADMIN_USER'] }}
        KEYSTONE_ADMIN_PASSWD: {{ pillar['keystone']['KEYSTONE_ADMIN_PASSWD'] }}
        KEYSTONE_AUTH_URL: {{ pillar['keystone']['KEYSTONE_AUTH_URL'] }}
        NEUTRON_IP: {{ pillar['neutron']['NEUTRON_IP'] }}
        AUTH_NEUTRON_ADMIN_TENANT: {{ pillar['neutron']['AUTH_NEUTRON_ADMIN_TENANT'] }}
        AUTH_NEUTRON_ADMIN_USER: {{ pillar['neutron']['AUTH_NEUTRON_ADMIN_USER'] }}
        AUTH_NEUTRON_ADMIN_PASS: {{ pillar['neutron']['AUTH_NEUTRON_ADMIN_PASS'] }}
  cmd.run:
    - name: bash /usr/local/bin/neutron_init.sh && touch /etc/neutron-datainit.lock
    - require:
      - file: /usr/local/bin/neutron_init.sh
    - unless: test -f /etc/neutron-datainit.lock
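
The `unless: test -f /etc/neutron-datainit.lock` guard in init.sls makes the init script a run-once step: Salt skips the command whenever the lock file exists, and the `&& touch` creates the lock only if the script exited successfully. The same pattern in plain shell, as a sketch; `run_once` and the temporary lock path are illustrative names that do not appear in the original states.

```shell
#!/bin/bash
# Hypothetical helper mirroring the cmd.run logic:
#   unless: test -f LOCK       -> skip when the lock already exists
#   name:   CMD && touch LOCK  -> create the lock only when CMD succeeds
run_once() {
    local lock=$1
    shift
    if test -f "$lock"; then
        echo "skipped"
        return 0
    fi
    "$@" && touch "$lock"
}

lock=$(mktemp -u)                      # a path that does not exist yet (demo only)
run_once "$lock" echo "initializing"   # runs the command, then creates the lock
run_once "$lock" echo "initializing"   # lock exists now, so the command is skipped
rm -f "$lock"
```

One thing to keep in mind: the lock depends only on the exit status of the whole script, which for a script without `set -e` is the status of its last command.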

[root@linux-node2 neutron]# cd files/

[root@linux-node2 files]# vim neutron_init.sh

#!/bin/bash
source /root/keystone_admin

keystone user-create --name={{AUTH_NEUTRON_ADMIN_USER}} --pass={{AUTH_NEUTRON_ADMIN_PASS}} --email=neutron@example.com
keystone user-role-add --user={{AUTH_NEUTRON_ADMIN_USER}} --tenant={{AUTH_NEUTRON_ADMIN_TENANT}} --role=admin
keystone service-create --name=neutron --type=network --description="OpenStack Networking Service"
keystone endpoint-create \
    --service-id=$(keystone service-list |awk '/ network / {print $2}') \
    --publicurl="http://{{NEUTRON_IP}}:9696" \
    --adminurl="http://{{NEUTRON_IP}}:9696" \
    --internalurl="http://{{NEUTRON_IP}}:9696"
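
The `--service-id` value in neutron_init.sh comes from filtering the `keystone service-list` table with awk: `/ network /` selects the row for the network service, and `$2` is the id because, with whitespace splitting, `$1` is the leading `|`. The sketch below shows the same idea on canned output, using a slightly stricter match on the type column (`$6`) instead of a pattern over the whole line. The function name `service_id_for_type` is illustrative, and the sample rows are copied from the verification output in this post.

```shell
#!/bin/bash
# Hypothetical helper: read a `keystone service-list` table on stdin and
# print the id (field 2) of the row whose type column (field 6) matches.
# Fields are split on whitespace, so the "|" separators count as fields.
service_id_for_type() {
    awk -v type="$1" '$6 == type { print $2 }'
}

sample='| 072f60ea0aae424cbf97d4eda8bc1b14 | glance | image | OpenStack Image Service |
| d89e62d88e794eafbdd65bbc9ffb7224 | neutron | network | OpenStack Networking Service |'

printf '%s\n' "$sample" | service_id_for_type network
# prints: d89e62d88e794eafbdd65bbc9ffb7224
```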

[root@linux-node2 files]# cd config/

[root@linux-node2 config]# mkdir -p plugins/ml2 plugins/linuxbridge

[root@linux-node2 config]# cd /srv/salt/prod/openstack/

[root@linux-node2 openstack]# salt 'linux-node2' state.sls openstack.neutron.server env=prod

[root@linux-node2 openstack]# source /root/keystone_admin ## verify

[root@linux-node2 openstack]# keystone service-list

+----------------------------------+----------+----------+------------------------------+
| id                               | name     | type     | description                  |
+----------------------------------+----------+----------+------------------------------+
| 072f60ea0aae424cbf97d4eda8bc1b14 | glance   | image    | OpenStack Image Service      |
| 7bd1f04cfd464aef8893c1a55ed4f50b | keystone | identity | Openstack Identity           |
| d89e62d88e794eafbdd65bbc9ffb7224 | neutron  | network  | OpenStack Networking Service |
| bb05c298a4f04438907e8308a1a06183 | nova     | compute  | OpenStack Compute Service    |
+----------------------------------+----------+----------+------------------------------+

[root@linux-node2 openstack]# keystone endpoint-list

+----------------------------------+-----------+-----------------------------------------+-----------------------------------------+-----------------------------------------+----------------------------------+
| id                               | region    | publicurl                               | internalurl                             | adminurl                                | service_id                       |
+----------------------------------+-----------+-----------------------------------------+-----------------------------------------+-----------------------------------------+----------------------------------+
| 40b01195ac904e6c8bb805302701fed0 | regionOne | http://10.0.0.101:9292                  | http://10.0.0.101:9292                  | http://10.0.0.101:9292                  | 072f60ea0aae424cbf97d4eda8bc1b14 |
| 5c7edc7f558a4ad9a245c939e4822098 | regionOne | http://10.0.0.101:9696                  | http://10.0.0.101:9696                  | http://10.0.0.101:9696                  | d89e62d88e794eafbdd65bbc9ffb7224 |
| 9721ad7478df47788e698eade8e53892 | regionOne | http://10.0.0.101:8774/v2/%(tenant_id)s | http://10.0.0.101:8774/v2/%(tenant_id)s | http://10.0.0.101:8774/v2/%(tenant_id)s | bb05c298a4f04438907e8308a1a06183 |
| c1eddc09226a437c967933194837b13d | regionOne | http://10.0.0.101:5000/v2.0             | http://10.0.0.101:5000/v2.0             | http://10.0.0.101:35357/v2.0            | 7bd1f04cfd464aef8893c1a55ed4f50b |
+----------------------------------+-----------+-----------------------------------------+-----------------------------------------+-----------------------------------------+----------------------------------+

[root@linux-node2 openstack]# neutron agent-list

+--------------------------------------+--------------------+-------------+-------+----------------+
| id                                   | agent_type         | host        | alive | admin_state_up |
+--------------------------------------+--------------------+-------------+-------+----------------+
| 60ea2468-00eb-4dbc-8fee-a3cbf3af01f9 | Linux bridge agent | linux-node1 | xxx   | True           |
+--------------------------------------+--------------------+-------------+-------+----------------+

Installation on linux-node3:

[root@linux-node2 openstack]# salt 'linux-node3' state.sls openstack.neutron.linuxbridge_agent env=prod

[root@linux-node2 openstack]# source /root/keystone_admin ## verify

[root@linux-node2 openstack]# nova host-list

+-------------+-------------+----------+
| host_name   | service     | zone     |
+-------------+-------------+----------+
| linux-node2 | cert        | internal |
| linux-node2 | conductor   | internal |
| linux-node2 | scheduler   | internal |
| linux-node2 | consoleauth | internal |
| linux-node3 | compute     | nova     |
| linux-node3 | scheduler   | internal |
+-------------+-------------+----------+

[root@linux-node2 openstack]# neutron agent-list

+--------------------------------------+--------------------+-------------+-------+----------------+
| id                                   | agent_type         | host        | alive | admin_state_up |
+--------------------------------------+--------------------+-------------+-------+----------------+
| f196e8bf-0056-4144-9ddd-0b22e08d922a | Linux bridge agent | linux-node3 | xxx   | True           |
| ff22c049-df61-4995-bef6-9cb7fb5a2af2 | Linux bridge agent | linux-node2 | xxx   | True           |
+--------------------------------------+--------------------+-------------+-------+----------------+
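
A note on reading this table: in `neutron agent-list`, the `alive` column shows `:-)` for an agent that has recently reported in and `xxx` for one that has not, so rows with `xxx` are worth a second look (agent service status, time synchronization between nodes). The sketch below filters such rows out of the table. The function name `dead_agents` is illustrative, and the sample rows are the ones shown in this post.

```shell
#!/bin/bash
# Hypothetical helper: read `neutron agent-list` table output on stdin and
# print "host: agent_type" for every agent whose alive column is not ":-)".
dead_agents() {
    awk -F'|' '
        NF >= 6 {
            agent_type = $3; host = $4; alive = $5
            gsub(/^ +| +$/, "", agent_type)   # trim the cell padding
            gsub(/^ +| +$/, "", host)
            gsub(/^ +| +$/, "", alive)
            # Skip the header row, report everything that is not alive.
            if (host != "host" && alive != ":-)") print host ": " agent_type
        }'
}

sample='| id | agent_type | host | alive | admin_state_up |
| f196e8bf-0056-4144-9ddd-0b22e08d922a | Linux bridge agent | linux-node3 | xxx | True |
| ff22c049-df61-4995-bef6-9cb7fb5a2af2 | Linux bridge agent | linux-node2 | xxx | True |'

printf '%s\n' "$sample" | dead_agents
```

Against a live deployment the function would be used as `neutron agent-list | dead_agents`; border lines are ignored because they contain no `|` separators.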

Horizon installation:

[root@linux-node2 openstack]# mkdir -p horizon/files/config

[root@linux-node2 openstack]# cd horizon/

[root@linux-node2 horizon]# vim server.sls

openstack_dashboard:
  pkg.installed:
    - names:
      - httpd
      - mod_wsgi
      - memcached
      - python-memcached
      - openstack-dashboard
  file.managed:
    - name: /etc/openstack-dashboard/local_settings
    - source: salt://openstack/horizon/files/config/local_settings
    - user: apache
    - group: apache
    - template: jinja
    - defaults:
        ALLOWED_HOSTS: {{ pillar['horizon']['ALLOWED_HOSTS'] }}
        OPENSTACK_HOST: {{ pillar['horizon']['OPENSTACK_HOST'] }}
  cmd.run:
    - name: /etc/init.d/httpd start && chkconfig httpd on && /etc/init.d/memcached start && chkconfig memcached on
    - require:
      - pkg: openstack_dashboard
    - watch:
      - file: openstack_dashboard

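Django14-1.4.21-1.el6.noarch.rpm:
  file.managed:
    - name: /root/Django14-1.4.21-1.el6.noarch.rpm
    - source: salt://openstack/horizon/files/Django14-1.4.21-1.el6.noarch.rpm
  cmd.run:
    - name: rpm -ivh /root/Django14-1.4.21-1.el6.noarch.rpm
    # Guard added here: without it, rpm -ivh fails on a second run
    # because the package is already installed.
    - unless: rpm -q Django14
    - require:
      - file: Django14-1.4.21-1.el6.noarch.rpm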

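Note: whether or not this solves the problem you ran into, feel free to get in touch so we can learn from each other and improve together!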


Original article: http://blog.51cto.com/13162375/2148804