
Deployment environment

$ kubectl get node
NAME       STATUS   ROLES    AGE   VERSION
master01   Ready    master   13d   v1.14.0
master02   Ready    master   13d   v1.14.0
master03   Ready    master   13d   v1.14.0
node01     Ready    <none>   13d   v1.14.0
node02     Ready    <none>   13d   v1.14.0
node03     Ready    <none>   13d   v1.14.0

Directory layout

├── control
│   ├── alertmanager          component 1
│   │   ├── config.yaml
│   │   ├── deploymen.yaml
│   │   └── service.yaml
│   ├── grafana               component 2
│   │   ├── deployment.yaml
│   │   ├── pvc.yaml
│   │   ├── pv.yaml
│   │   └── service.yaml
│   ├── namespace
│   │   └── namespace.yaml
│   ├── node-exporter         component 3
│   │   ├── node-exporter-service.yaml
│   │   └── node-exporter.yaml
│   └── prometheus            component 4
│       ├── configmap
│       │   ├── config.yaml
│       │   ├── config.yaml.bak
│       │   ├── prometheus.yaml
│       │   ├── rules-down.yml
│       │   └── rules-load.yml.bak
│       ├── deployment.yaml
│       ├── pvc.yaml
│       ├── pv.yaml
│       ├── rbac.yaml
│       └── service.yaml

1. Create the namespace

$ mkdir -p control/{alertmanager,grafana,namespace,node-exporter,prometheus/configmap}
$ cd control/namespace
$ cat namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ns-monitor
  labels:
    name: ns-monitor

# Create the namespace from the manifest
$ kubectl create -f namespace.yaml
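`kubectl create` fails if the object already exists, so when re-running this guide it can help to check for the namespace first. The following is a minimal sketch, not part of the original setup; the helper names `namespace_exists` and `ensure_namespace` are hypothetical.

```shell
# Hypothetical helper: returns 0 when the namespace exists, non-zero otherwise.
namespace_exists() {
  kubectl get namespace "$1" >/dev/null 2>&1
}

# Hypothetical helper: create the namespace from a manifest only if it is missing.
ensure_namespace() {
  local ns="$1" manifest="$2"
  if namespace_exists "$ns"; then
    echo "namespace $ns already exists"
  else
    kubectl create -f "$manifest"
  fi
}
```

With the functions loaded in the current shell, `ensure_namespace ns-monitor namespace.yaml` can replace the plain `kubectl create` call.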

2. Deploy the Prometheus node exporter (run on master01)

$ cd /root/control/node-exporter

Create the node exporter pods

$ cat node-exporter.yaml
# apps/v1beta2 is served by the v1.14 cluster used here; it was removed in Kubernetes 1.16, where apps/v1 is required
apiVersion: apps/v1beta2
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: ns-monitor
  labels:
    app: node-exporter
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      containers:
      - name: node-exporter
        image: prom/node-exporter:v0.16.0
        ports:
        - containerPort: 9100
          protocol: TCP
          name: http
      hostNetwork: true
      hostPID: true
      tolerations: # tolerate the NoSchedule taint so a pod is also created on each master node
      - effect: NoSchedule
        operator: Exists

# Create the DaemonSet from the manifest
$ kubectl create -f node-exporter.yaml
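Because of the toleration, the DaemonSet should place one node-exporter pod on every node, masters included (six pods on the cluster shown at the top). A rough way to confirm this is to compare the node count with the number of running pods. This is a sketch only; the function name is hypothetical and it relies on the default column output of `kubectl get`.

```shell
# Hypothetical helper: succeeds when the number of Running node-exporter pods
# equals the number of nodes in the cluster.
daemonset_covers_all_nodes() {
  local nodes pods
  # One output line per node / per pod when headers are suppressed.
  nodes=$(kubectl get nodes --no-headers | grep -c .)
  pods=$(kubectl -n ns-monitor get pods -l app=node-exporter --no-headers | grep -c ' Running ')
  [ "$nodes" -eq "$pods" ]
}
```

Calling `daemonset_covers_all_nodes && echo ok` shortly after creation may fail until every image has been pulled, so retry after a moment.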

Create the node exporter Service

$ cat node-exporter-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: node-exporter-service
  namespace: ns-monitor
  labels:
    app: node-exporter-service
spec:
  ports:
  - name: http
    port: 9100
    nodePort: 31672
    protocol: TCP
  type: NodePort
  selector:
    app: node-exporter

# Create the Service from the manifest
$ kubectl create -f node-exporter-service.yaml
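Once the Service exists, the exporter should answer on NodePort 31672 of any node. As a quick smoke test, the sketch below fetches `/metrics` and prints the 1-minute load average sample. The function name is hypothetical, and the host passed in (for example `node01`) is assumed to be resolvable from where you run it.

```shell
# Hypothetical helper: print the node_load1 sample exposed by node-exporter
# through the NodePort (31672 by default, as set in node-exporter-service.yaml).
node_load1() {
  local host="$1" port="${2:-31672}"
  curl -s "http://${host}:${port}/metrics" | grep '^node_load1 '
}
```

For example, `node_load1 node01` prints a single line such as `node_load1` followed by the current value if the exporter is reachable.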

3. Deploy Prometheus (run on master01)

$ cd /root/control/prometheus

Create the Prometheus PV (prerequisite: create the directory /nfs/prometheus/data on every node)

$ cat pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: "prometheus-data-pv"
  labels:
    name: "prometheus-data-pv"
    release: stable
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: /nfs/prometheus/data

# Create the PV from the manifest
$ kubectl create -f pv.yaml

Create the Prometheus PVC

$ cat pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-data-pvc
  namespace: ns-monitor
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  selector:
    matchLabels:
      name: prometheus-data-pv
      release: stable

# Create the PVC from the manifest
$ kubectl create -f pvc.yaml
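The claim selects the PV by its `name` and `release` labels, so it should reach the `Bound` phase shortly after creation. The following sketch reads the phase with a JSONPath query; the helper names `pvc_phase` and `pvc_is_bound` are hypothetical.

```shell
# Hypothetical helper: print the phase (Pending, Bound, Lost) of a PVC in ns-monitor.
pvc_phase() {
  kubectl -n ns-monitor get pvc "$1" -o jsonpath='{.status.phase}'
}

# Hypothetical helper: succeeds only when the PVC is bound to a PV.
pvc_is_bound() {
  [ "$(pvc_phase "$1")" = "Bound" ]
}
```

`pvc_is_bound prometheus-data-pvc || echo "claim not bound yet"` is one way to use it; if the claim stays Pending, compare the labels, capacity, and access mode in pv.yaml and pvc.yaml.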

Create the RBAC objects. Prometheus needs to read cluster information from the Kubernetes API, so this manifest creates a ServiceAccount named prometheus and binds it to a ClusterRole with read-only access.

$ cat rbac.yaml
# rbac.authorization.k8s.io/v1beta1 is served by the v1.14 cluster used here; it was removed in Kubernetes 1.22, where rbac.authorization.k8s.io/v1 is required
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs:
  - get
  - watch
  - list
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - get
  - watch
  - list
- nonResourceURLs: ["/metrics"]
  verbs:
  - get
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: ns-monitor
  labels:
    app: prometheus
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: ns-monitor
roleRef:
  kind: ClusterRole
  name: prometheus
  apiGroup: rbac.authorization.k8s.io

# Create the RBAC objects from the manifest
$ kubectl create -f rbac.yaml
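To confirm the binding took effect, `kubectl auth can-i` can impersonate the ServiceAccount and report whether a given verb is allowed. The sketch below wraps that call; the function name `prometheus_sa_can` is hypothetical, and impersonation assumes you are running kubectl with cluster-admin rights.

```shell
# Hypothetical helper: ask the API server whether the prometheus ServiceAccount
# in ns-monitor may perform <verb> on <resource>. Prints "yes" or "no".
prometheus_sa_can() {
  local verb="$1" resource="$2"
  kubectl auth can-i "$verb" "$resource" \
    --as="system:serviceaccount:ns-monitor:prometheus"
}
```

With the ClusterRole above, `prometheus_sa_can list pods` should print `yes`, while a write such as `prometheus_sa_can delete pods` should print `no`.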

Create the ConfigMap that holds the main Prometheus configuration file

$ cd /root/control/prometheus/configmap
$ cat config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-conf
  namespace: ns-monitor
  labels:
    app: prometheus
data:
  prometheus.yml: |-
    # my global config
    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).

    # Alertmanager configuration
    alerting:
      alertmanagers:
      - static_configs:
        - targets:
          - 'alertmanager-service.ns-monitor:9093' # If this causes an error, comment this line out for now; the Alertmanager service is created in section 5

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      - "/etc/prometheus/rules/nodedown.rule.yml"

    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']

    - job_name: 'grafana'
      static_configs:
        - targets:
          - 'grafana-service.ns-monitor:3000'

    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

    - job_name: 'kubernetes-nodes'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics

    - job_name: 'kubernetes-cadvisor'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

    - job_name: 'kubernetes-services'
      metrics_path: /probe
      params:
        module: [http_2xx]
      kubernetes_sd_configs:
      - role: service
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115 # placeholder address; this guide does not deploy a blackbox exporter
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-ingresses'
      metrics_path: /probe
      params:
        module: [http_2xx]
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115 # placeholder address; this guide does not deploy a blackbox exporter
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

# Create the ConfigMap from the manifest
$ kubectl create -f config.yaml
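The `__address__` relabel rule in the kubernetes-pods and kubernetes-service-endpoints jobs is the least obvious part of this file. Prometheus joins the source labels with `;`, matches the anchored regex against the result, and writes `$1:$2` into `__address__`, which swaps the discovered port for the one in the `prometheus.io/port` annotation. The sketch below imitates that behaviour with `sed` so you can try inputs by hand. It is an approximation, not Prometheus itself: the function name is hypothetical, and the regex is rewritten for POSIX ERE (no non-capturing group, `[0-9]` instead of `\d`), so the port lands in group 3 rather than group 2.

```shell
# Hypothetical helper: emulate the relabel rule
#   regex: ([^:]+)(?::\d+)?;(\d+)   replacement: $1:$2
# Arguments: the discovered __address__ and the prometheus.io/port annotation value.
rewrite_address() {
  local address="$1" port_annotation="$2"
  local joined="${address};${port_annotation}"   # Prometheus joins source labels with ';'
  local pattern='^([^:]+)(:[0-9]+)?;([0-9]+)$'    # fully anchored, as Prometheus does
  if printf '%s\n' "$joined" | grep -Eq "$pattern"; then
    printf '%s\n' "$joined" | sed -E "s/${pattern}/\1:\3/"
  else
    # No match (for example, the annotation is absent): the label is left unchanged.
    printf '%s\n' "$address"
  fi
}
```

For instance, `rewrite_address 10.244.1.5:8080 9102` prints `10.244.1.5:9102`, and `rewrite_address 10.244.1.5:8080 ""` leaves the address as it was.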

Create the Prometheus rules ConfigMap

$ cat rules-down.yml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: ns-monitor
  labels:
    app: prometheus
data:
  nodedown.rule.yml: |
    groups:
    - name: test-rule
      rules:
      - alert: NodeDown
        expr: up == 0
        for: 1m
        labels:
          team: node
        annotations:
          summary: "{{$labels.instance}}: down detected"
          description: "{{$labels.instance}}: is down 1m (current value is: {{ $value }})"

# Create the ConfigMap from the manifest
$ kubectl create -f rules-down.yml

Create the Prometheus pod

$ cd /root/control/prometheus
$ cat deployment.yaml
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: prometheus
  namespace: ns-monitor
  labels:
    app: prometheus
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      securityContext:
        runAsUser: 0
      containers:
      - name: prometheus
        image: prom/prometheus:latest
        volumeMounts:
        - mountPath: /prometheus
          name: prometheus-data-volume
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-conf-volume
          subPath: prometheus.yml
        - mountPath: /etc/prometheus/rules
          name: prometheus-rules-volume
        ports:
        - containerPort: 9090
          protocol: TCP
      volumes:
      - name: prometheus-data-volume
        persistentVolumeClaim:
          claimName: prometheus-data-pvc
      - name: prometheus-conf-volume
        configMap:
          name: prometheus-conf
      - name: prometheus-rules-volume
        configMap:
          name: prometheus-rules
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

# Create the Deployment from the manifest
$ kubectl create -f deployment.yaml

Create the Prometheus Service

$ cat service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
  labels:
    app: prometheus
  name: prometheus-service
  namespace: ns-monitor
spec:
  ports:
  - port: 9090
    targetPort: 9090
  selector:
    app: prometheus
  type: NodePort

# Create the Service from the manifest
$ kubectl create -f service.yaml
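This Service does not pin a `nodePort`, so Kubernetes assigns one from its NodePort range. The sketch below looks up the assigned port and then calls Prometheus's `/-/healthy` endpoint through it. Both function names are hypothetical, and the host argument is assumed to be any node address reachable from your shell.

```shell
# Hypothetical helper: print the NodePort assigned to a Service in ns-monitor.
service_node_port() {
  kubectl -n ns-monitor get service "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Hypothetical helper: succeeds when Prometheus answers its health endpoint
# through the NodePort on the given host.
prometheus_healthy() {
  local host="$1" port
  port=$(service_node_port prometheus-service)
  curl -sf "http://${host}:${port}/-/healthy" >/dev/null
}
```

`service_node_port prometheus-service` is also a convenient way to find the port to open in a browser; the same helper works for the Grafana and Alertmanager Services created later.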

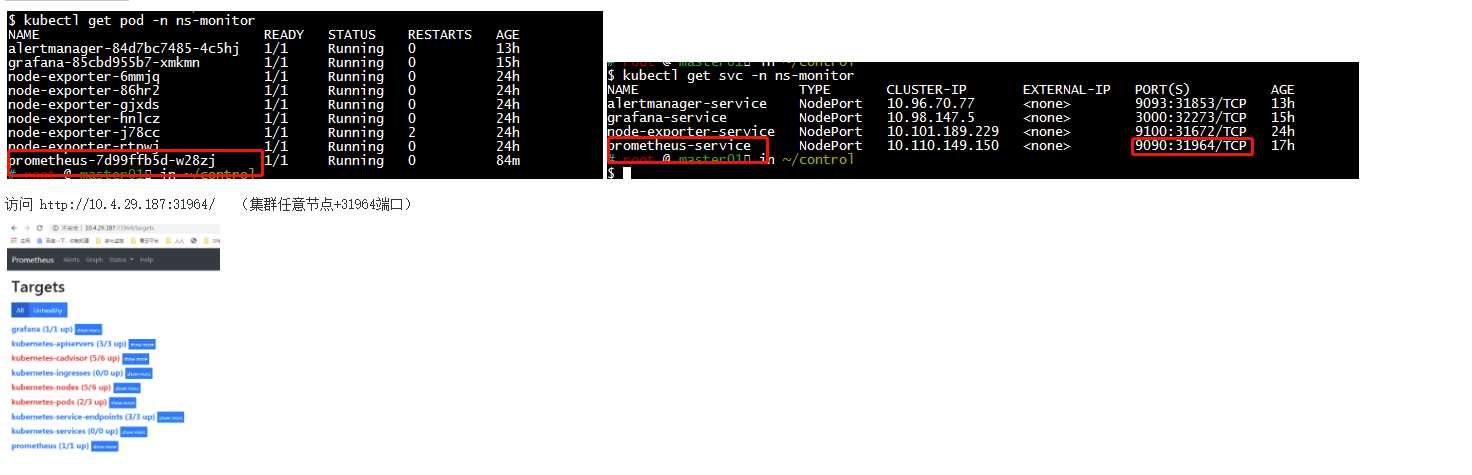

View Prometheus

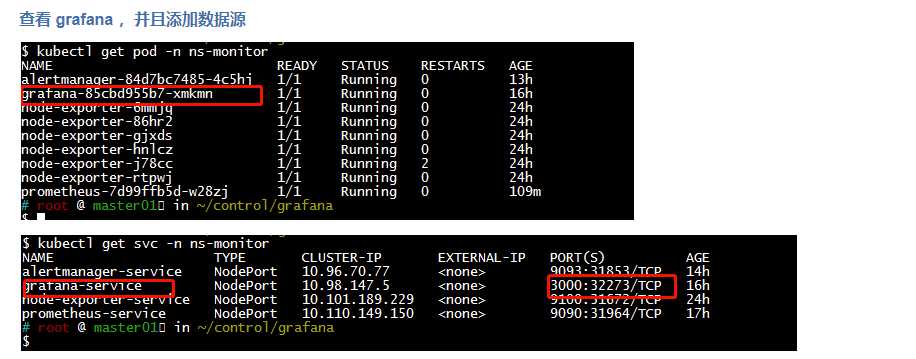

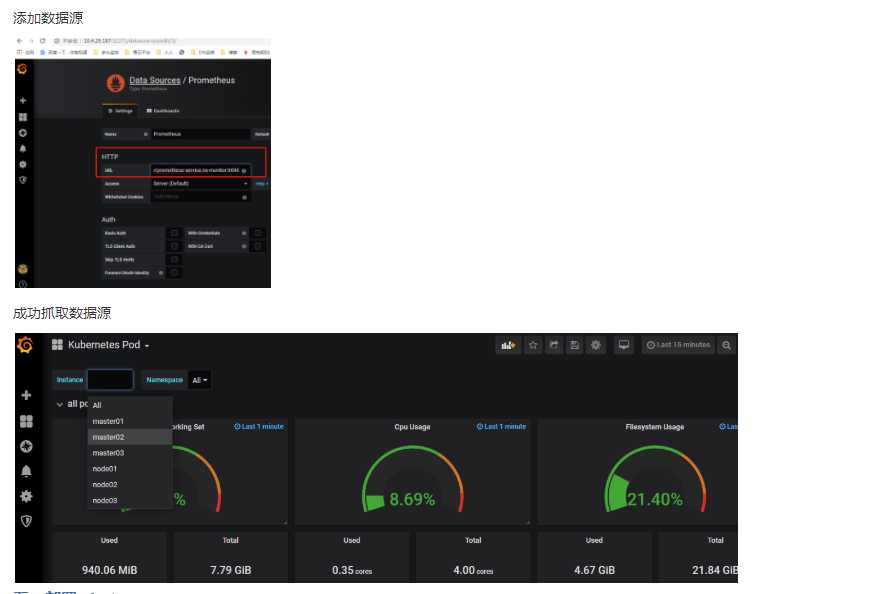

4. Deploy Grafana (run on master01)

$ cd /root/control/grafana

Create the PV (prerequisite: create the data directory /nfs/grafana/data on every node)

$ cat pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: "grafana-data-pv"
  labels:
    name: grafana-data-pv
    release: stable
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  hostPath:
    path: /nfs/grafana/data

# Create the PV from the manifest
$ kubectl create -f pv.yaml

Create the PVC

$ cat pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-data-pvc
  namespace: ns-monitor
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  selector:
    matchLabels:
      name: grafana-data-pv
      release: stable

# Create the PVC from the manifest
$ kubectl create -f pvc.yaml

Create the Grafana pod

$ cat deployment.yaml
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: grafana
  namespace: ns-monitor
  labels:
    app: grafana
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      securityContext:
        runAsUser: 0
      containers:
      - name: grafana
        image: grafana/grafana:latest
        env:
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
        volumeMounts:
        - mountPath: /var/lib/grafana
          name: grafana-data-volume
        ports:
        - containerPort: 3000
          protocol: TCP
      volumes:
      - name: grafana-data-volume
        persistentVolumeClaim:
          claimName: grafana-data-pvc

# Create the Deployment from the manifest
$ kubectl create -f deployment.yaml

Create the Grafana Service

$ cat service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: grafana
  name: grafana-service
  namespace: ns-monitor
spec:
  ports:
  - port: 3000
    targetPort: 3000
  selector:
    app: grafana
  type: NodePort

# Create the Service from the manifest
$ kubectl create -f service.yaml
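The Grafana Deployment declares a readinessProbe on `/login`, and the kubelet treats any 2xx or 3xx response as ready. The sketch below repeats that check from outside the cluster through the NodePort. The function names are hypothetical, and the host and port arguments are whatever node address and assigned NodePort apply in your cluster.

```shell
# Hypothetical helper: print only the HTTP status code returned for a URL.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Hypothetical helper: succeeds when Grafana's /login page answers with a
# 2xx or 3xx status, mirroring what the readinessProbe accepts.
grafana_ready() {
  local host="$1" port="$2" code
  code=$(http_status "http://${host}:${port}/login")
  case "$code" in
    2??|3??) return 0 ;;
    *) return 1 ;;
  esac
}
```

The NodePort can be read with `kubectl -n ns-monitor get service grafana-service`, after which `grafana_ready node01 <nodePort>` reports whether the login page is being served.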

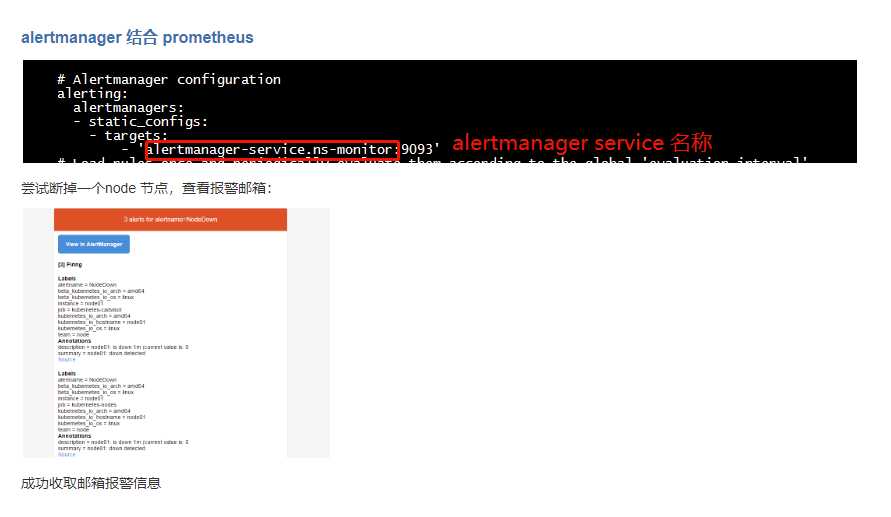

5. Deploy Alertmanager

$ cd /root/control/alertmanager

Create the ConfigMap that holds the main Alertmanager configuration file

$ cat config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alert-config
  namespace: ns-monitor
data:
  config.yml: |-
    global:
      smtp_smarthost: 'smtp.exmail.qq.com:465'
      smtp_from: 'xxx@donews.com'
      smtp_auth_username: 'xxx@donews.com'
      smtp_auth_password: 'yNE8wZDfYsadsadsad13ctr65Gra'
      smtp_require_tls: false
    route:
      group_by: ['alertname', 'cluster']
      group_wait: 30s
      group_interval: 5m
      repeat_interval: 5m
      receiver: default
      routes:
      - receiver: email
        group_wait: 10s
        match:
          team: node
    receivers:
    - name: 'default'
      email_configs:
      - to: 'lixinliang@donews.com'
        send_resolved: true
    - name: 'email'
      email_configs:
      - to: 'lixinliang@donews.com'
        send_resolved: true

# Create the ConfigMap from the manifest
$ kubectl create -f config.yaml

Create the Alertmanager pod

$ cat deploymen.yaml
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: alertmanager
  namespace: ns-monitor
  labels:
    app: alertmanager
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      labels:
        app: alertmanager
    spec:
      containers:
      - name: alertmanager
        image: prom/alertmanager:v0.15.3
        imagePullPolicy: IfNotPresent
        args:
        - "--config.file=/etc/alertmanager/config.yml"
        - "--storage.path=/alertmanager/data"
        ports:
        - containerPort: 9093
          name: http
        volumeMounts:
        - mountPath: "/etc/alertmanager"
          name: alertcfg
        resources:
          requests:
            cpu: 100m
            memory: 256Mi
      volumes:
      - name: alertcfg
        configMap:
          name: alert-config

# Create the Deployment from the manifest
$ kubectl create -f deploymen.yaml

Create the Alertmanager Service

$ cat service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: alertmanager
  name: alertmanager-service
  namespace: ns-monitor
spec:
  ports:
  - port: 9093
    targetPort: 9093
  selector:
    app: alertmanager
  type: NodePort

# Create the Service from the manifest
$ kubectl create -f service.yaml
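The Alertmanager route sends alerts labelled `team: node` (such as the NodeDown rule) to the `email` receiver and everything else to `default`. Rather than waiting for a node to fail, you can push a hand-made alert carrying that label and watch for the mail. The sketch below assumes the v1 HTTP API served by the v0.15.3 image used here; the function names and the alert name `ManualTest` are hypothetical, and the host and port are a node address plus the NodePort assigned to alertmanager-service.

```shell
# Hypothetical helper: build a minimal alert payload with the team=node label
# that the 'email' route matches on.
test_alert_payload() {
  printf '[{"labels":{"alertname":"%s","team":"node"}}]' "$1"
}

# Hypothetical helper: POST the test alert to Alertmanager's v1 alerts endpoint.
send_test_alert() {
  local host="$1" port="$2"
  curl -s -XPOST -H 'Content-Type: application/json' \
    -d "$(test_alert_payload ManualTest)" \
    "http://${host}:${port}/api/v1/alerts"
}
```

After `send_test_alert node01 <nodePort>`, the alert should appear in the Alertmanager web UI, and a mail should follow once the route's 10s `group_wait` has elapsed, provided the SMTP settings are valid.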


Original article: https://www.cnblogs.com/lixinliang/p/12217245.html