标签:art ret highlight next image strip ima for gen

时间久了,自然就忘了。一时性起,爬取豆瓣玩玩。

1.scrapy startproject novels 创建novel 项目

2.cd novels && scrapy genspider douban douban.com 创建模板

3.上代码。

爬虫主页面:

# -*- coding: utf-8 -*-

import scrapy

from novels.items import Douban250BookItem

class DoubanSpider(scrapy.Spider):

name = ‘douban‘

allowed_domains = [‘douban.com‘]

start_urls = [‘https://book.douban.com/top250‘]

def parse(self, response):

#第一页

yield scrapy.Request(response.url, callback=self.paese_next)

#其他页(这里我只得出其他9页的href)

for page in response.xpath(‘//div[@class="paginator"]/a‘):

url = page.xpath(‘@href‘).extract_first()

yield scrapy.Request(url,callback=self.paese_next)

def paese_next(self,response):

for item in response.xpath(‘//tr[@class="item"]‘):

book = Douban250BookItem()

book[‘name‘] = item.xpath(‘td[2]/div[1]/a/@title‘).extract_first()

price_str = item.xpath(‘td[2]/p/text()‘).extract_first().split(‘/‘)[-1]

book[‘price‘] = price_str.strip()

book[‘ratings‘] = item.xpath(‘td[2]/div[2]/span[2]/text()‘).extract_first()

yield book

记住,要在设置里面 加入user_agent ,否则爬不了

item.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class NovelsItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

pass

class Douban250BookItem(scrapy.Item):

name = scrapy.Field()

price = scrapy.Field()

ratings = scrapy.Field()

pipelines.py代码;

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don‘t forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import pymysql

class NovelsPipeline(object):

def process_item(self, item, spider):

name = item[‘name‘]

price = item[‘price‘]

ratings = item[‘ratings‘]

connection = pymysql.connect(

host=‘127.0.0.1‘,

user=‘root‘,

passwd=‘root‘,

db=‘python_scrapy‘,

# charset=‘utf-8‘,

cursorclass=pymysql.cursors.DictCursor

)

try:

with connection.cursor() as cursor:

# 创建更新值的sql语句

sql = """INSERT INTO `douban250` (name, price, ratings) VALUES (%s, %s, %s) """

cursor.execute(

sql, (name, price, ratings)

)

connection.commit()

finally:

connection.close()

return item

以上就是最基本的scrapy 代码了。

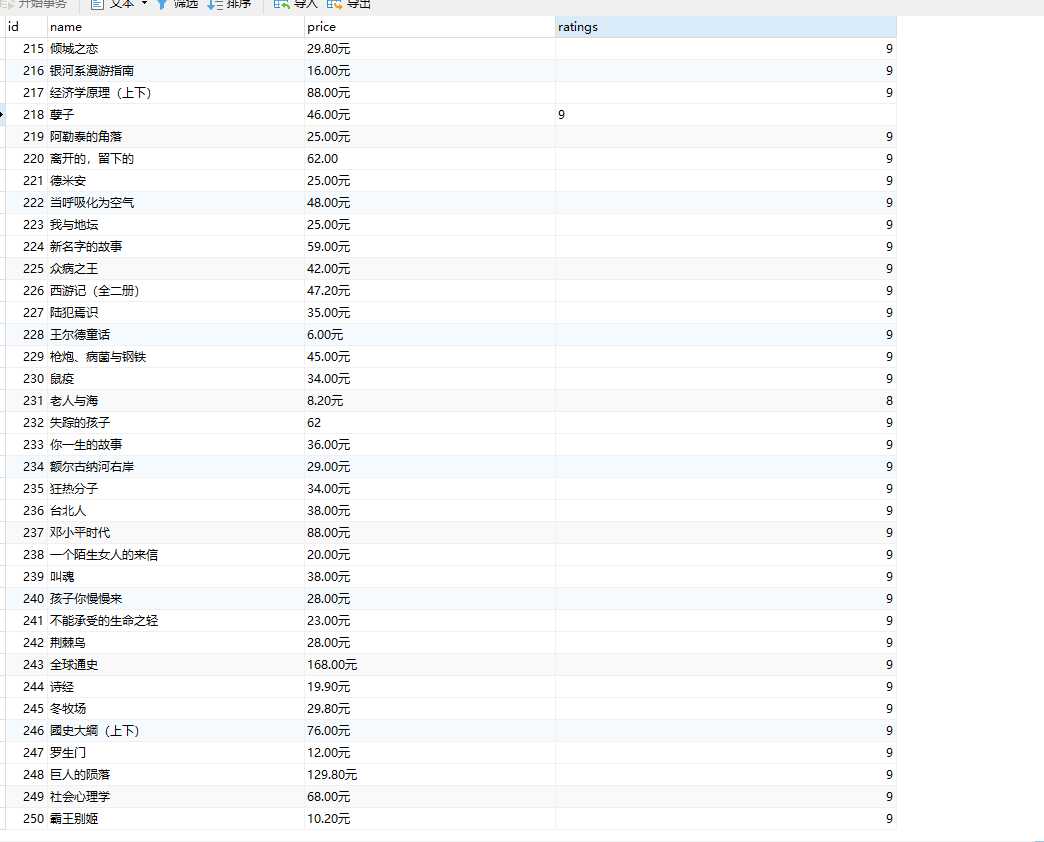

结果:

刚好250行 。

以上代码作个复习。好长时间不写爬虫了,温故而知新!

标签:art ret highlight next image strip ima for gen

原文地址:https://www.cnblogs.com/wujf-myblog/p/12363124.html