标签:oca list copy minimum https val finally row 输出

参考以下博客:

1.https://segmentfault.com/a/1190000015917420

2.https://blog.csdn.net/chenzhenyu123456/article/details/80751477(主要参考)

3.https://zhuanlan.zhihu.com/p/37705980(MTCNN和FaceNet讲解)

论文链接:

MTCNN:https://arxiv.org/ftp/arxiv/papers/1604/1604.02878.pdf

FaceNet:https://arxiv.org/pdf/1503.03832.pdf

论文项目地址:

https://github.com/AITTSMD/MTCNN-Tensorflow

https://github.com/davidsandberg/facenet

结合博客1和博客2实现了图片的验证、识别、查找和实时的验证、识别、查找。

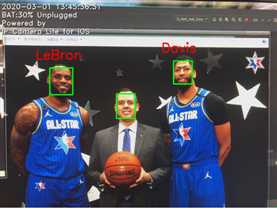

本来想把2020年全明星的成员都训练一遍再进行识别,后来发现识别率较低,可能是数据量不够,每个文件夹下的图片大概有20-25张。因此最后提交作业时仅训练了三个类别。

此次项目仅仅是融合了前两篇博客的内容,并对主函数做了一些调整,由于是课程项目作业,所以并未深究一些论文细节。

完整项目可直接参考博客2里的源码,以下代码是对/face_recognition/test_image.py所做的修改:

1 # -*- coding: utf-8 -*- 2 import os 3 import sys 4 5 BASE_DIR = os.path.dirname(os.path.abspath(__file__)) 6 sys.path.append(BASE_DIR) 7 sys.path.append(os.path.join(BASE_DIR, ‘../ui_with_tkinter‘)) 8 sys.path.append(os.path.join(BASE_DIR, ‘../face_recognition‘)) 9 import classifier as clf 10 import numpy as np 11 import cv2 12 import tensorflow as tf 13 import facenet 14 import detect_face 15 import align_dlib 16 import dlib 17 from PIL import Image, ImageDraw, ImageFont 18 19 os.environ["CUDA_VISIBLE_DEVICES"] = ‘0‘ # use GPU with ID=0 20 config = tf.ConfigProto() 21 config.gpu_options.per_process_gpu_memory_fraction = 0.5 # maximun alloc gpu50% of MEM 22 config.gpu_options.allow_growth = True # allocate dynamically 23 24 25 def predict_labels(best_model_list, 26 test_features, 27 all_data=False): 28 """Predict with the best three models. 29 Inputs: 30 - best_model_list: A list contains the best three models. 31 - test_features: A numpy array contains the features of the test images. 32 - face_number: An integer, the number of faces that we found in the test image. 33 - all_data: True or False, whether the model is trained with all data. 34 35 Return: 36 - labels_array: A numpy array contains the labels which predicted by 37 the best three models . 38 """ 39 labels_array = [] 40 for index, model in enumerate(best_model_list): 41 if all_data: 42 predict = model[0].predict(test_features) 43 else: 44 predict = model[0].predict(test_features) 45 labels_array.append(predict) 46 47 labels_array = np.array(labels_array) 48 return labels_array 49 50 51 def predict_probability(best_probability_model, 52 test_features, 53 face_number, 54 all_data=False): 55 """Predict with softmax probability model. 56 Inputs: 57 - best_probability_model: The best pre_trained softmax model. 58 - test_features: A numpy array contains the features of the test images. 59 - face_number: An integer, the number of faces that we found in the test image. 60 - all_data: True or False, whether the model is trained with all data. 61 62 Returns: 63 - labels: A numpy array contains the predicted labels. 64 - probability: A numpy array contains the probability of the label. 65 """ 66 labels, probability = None, None 67 if face_number is not 0: 68 if all_data: 69 labels = best_probability_model[0].predict(test_features) 70 all_probability = best_probability_model[0].predict_proba(test_features) 71 else: 72 labels = best_probability_model[0].predict(test_features) 73 all_probability = best_probability_model[0].predict_proba(test_features) 74 print(‘all_probability‘, all_probability) 75 print(‘labels‘, labels) 76 print(‘all_probability.shape‘, all_probability.shape) 77 78 # print(probability.shape) -> (face_number, class_number) 79 # print(all_probability) 80 probability = all_probability[np.arange(face_number), np.argmax(all_probability, axis=1)] 81 # np.argmax(all_probability, axis=1)] 82 print(‘probability‘, probability) 83 print(‘probability.shape‘, probability.shape) 84 85 return labels, probability 86 87 88 def find_people_from_image(best_probability_model, test_features, face_number, all_data=False): 89 """ 90 Inputs: 91 - best_probability_model: The best pre_trained softmax model. 92 - test_features: A numpy array contains the features of the test images. 93 - face_number: An integer, the number of faces that we found in the test image. 94 - all_data: If all_data is True, using the models which trained by all 95 training data. Otherwise using the models which trained by partial data. 96 97 e.g. 98 test_features: [feature_1, feature_2, feature_3, feature_4, feature_5] 99 100 first we get all predictive labels and their corresponding probability 101 [(A, 0.3), (A, 0.2), (B, 0.1), (B, 0.3), (C, 0.1)] 102 second we choose the maximum probability for each unique label 103 [(A, 0.3), (B, 0.3), (C, 0.1)] 104 finally, get the indices for each unique label. 105 [0, 3, 4] 106 107 then return 108 label: 109 probability: 110 unique_labels: [A, B, C] 111 unique_probability: [0.3, 0.3, 0.1] 112 unique_indices: [0, 3, 4] 113 """ 114 labels, probability = predict_probability( 115 best_probability_model, test_features, face_number, all_data=all_data) 116 117 unique_labels = np.unique(labels) 118 print(‘unique_labels‘, unique_labels) 119 unique_probability, unique_indices = [], [] 120 121 for label in unique_labels: 122 indices = np.argwhere(labels == label)[:, 0] 123 unique_probability.append(np.max(probability[indices])) 124 unique_indices.append(indices[np.argmax(probability[indices])]) 125 126 unique_probability = np.array(unique_probability) 127 unique_indices = np.array(unique_indices) 128 print(‘unique_probability‘, unique_probability) 129 print(‘unique_indices‘, unique_indices) 130 131 return labels, probability, unique_labels, unique_probability, unique_indices 132 133 134 def check_sf_features(feature, label): 135 """Verification. 136 Inputs: 137 - feature: The feature to be verified. 138 - label: The label used for verification. 139 140 Returns: 141 - True or False, verification result. 142 - sum_dis, the distance loss. 143 """ 144 if os.path.exists(‘features‘): 145 sf_features = np.load(open(‘features/{}.npy‘.format(label), ‘rb‘)) 146 else: 147 sf_features = np.load(open(‘../features/{}.npy‘.format(label), ‘rb‘)) 148 sf_dis = np.sqrt(np.sum((sf_features - feature) ** 2, axis=1)) 149 # print(sf_dis) 150 sum_dis = np.sum(sf_dis) 151 # print(sum_dis) 152 valid_num1 = np.sum(sf_dis > 1.0) 153 valid_num2 = np.sum(sf_dis > 1.1) 154 if valid_num1 >= 4: 155 return False, sum_dis 156 157 if valid_num2 >= 3: 158 return False, sum_dis 159 160 return True, sum_dis 161 162 163 def recognition(image, state, fr=None, all_data=False, language=‘english‘): 164 """Implement face verification, face recognition and face search functions. 165 Inputs: 166 - image_path: A string contains the path to the image. 167 - fr: The object of the UI class. 168 - state: ‘verification‘, face verification 169 ‘recognition‘, face recognition 170 ‘search‘, face search. 171 - used_labels: The labels used to face verification and face search, 172 which does not used in face recognition 173 - image_size: The input size of the MTCNN. 174 - all_data: If all_data is True, using the models which trained by all 175 training data. Otherwise using the models which trained by partial data. 176 - output: True or False, output the process information. 177 178 Returns: 179 - answer: The answer predicted by the model. 180 - image_data: The image data after prediction. 181 """ 182 answer = ‘‘ 183 predict_info = [] 184 for i in state: 185 if i not in {‘verification‘, ‘recognition‘, ‘search‘}: 186 raise ValueError(‘{} is not a valid argument!‘.format(state)) 187 test_features, face_number, face_boxes, flag = process(‘test‘, 188 image, 189 image_size=144, ) 190 if flag: 191 for i in state: 192 if i == ‘verification‘: 193 print(‘Start verification‘) 194 195 labels_array = predict_labels(best_classifier_model_list, 196 test_features, 197 all_data=all_data) 198 labels = [] 199 for line in labels_array.T: # 转置 200 unique, counts = np.unique(line, return_counts=True) # 该函数是去除数组中的重复数字,并进行排序之后输出 201 temp_label = unique[np.argmax(counts)] 202 labels.append(temp_label) 203 204 if used_labels[0] in labels: 205 if language == ‘chinese‘: 206 answer = answer + ‘验证成功!这张图像被认定为{}!‘.format(used_labels[0]) 207 info = ‘验证成功!这张图像被认定为{}!‘.format(used_labels[0]) 208 else: 209 answer = answer + ‘Successful Verification! This image was ‘ 210 ‘identified as {}!‘.format(used_labels[0]) 211 info = ‘Successful Verification! This image ‘ 212 ‘was identified as {}!‘.format(used_labels[0]) 213 else: 214 if language == ‘chinese‘: 215 answer = answer + ‘验证失败!这张图像不被认定为{}!‘ 216 ‘‘.format(used_labels[0]) 217 info = ‘验证失败!这张图像不被认定为{}!‘.format(used_labels[0]) 218 else: 219 answer = answer + ‘Verification failed! This image is not ‘ 220 ‘recognized as {}!‘.format(used_labels[0]) 221 info = ‘Verification failed! This image is not ‘ 222 ‘recognized as {}!‘.format(used_labels[0]) 223 224 for index, box in enumerate(face_boxes): 225 face_position = box.astype(int) 226 cv2.rectangle(image, (face_position[0], face_position[1]), ( 227 face_position[2], face_position[3]), (0, 255, 0), 2) 228 229 if fr is not None: 230 predict_info.append(info) 231 fr.show_information(predict_info, predict=True) 232 if mode == ‘video‘: 233 cv2.imshow(‘camera‘, image) 234 else: 235 cv2.imshow(‘camera‘, image) 236 cv2.imwrite(‘../result/verification.jpg‘, image) 237 cv2.waitKey() 238 239 elif i == ‘recognition‘: 240 print(‘Start recognition‘) 241 labels, _, unique_labels, unique_probability, unique_indices = 242 find_people_from_image(best_probability_model, test_features, face_number, all_data=all_data) 243 info = ‘‘ 244 if language == ‘chinese‘: 245 info = info + ‘从图像中检测到‘ 246 else: 247 info = info + ‘Detected ‘ 248 answer = answer + info 249 250 for index, label in enumerate(labels): 251 if index in unique_indices: 252 if check_sf_features(test_features[index], label)[0] is False: 253 if language == ‘chinese‘: 254 # labels[index] = ‘未知‘ 255 labels[index] = ‘‘ 256 else: 257 # labels[index] = ‘Unknown‘ 258 labels[index] = ‘‘ 259 else: 260 if language == ‘chinese‘: 261 info = info + ‘{},‘.format(label) 262 answer = answer + ‘{},‘.format(label) 263 else: 264 info = info + ‘{},‘.format(label) 265 answer = answer + ‘{},‘.format(label) 266 else: 267 if language == ‘chinese‘: 268 labels[index] = ‘‘ 269 # labels[index] = ‘未知‘ 270 else: 271 # labels[index] = ‘Unknown‘ 272 labels[index] = ‘‘ 273 info = info[:-1] 274 answer = answer[:-1] 275 if language == ‘english‘: 276 info = info + ‘ in this image!‘ 277 answer = answer + ‘ in this image!‘ 278 else: 279 info = info + ‘!‘ 280 answer = answer + ‘!‘ 281 282 if fr is not None: 283 predict_info.append(info) 284 fr.show_information(predict_info, predict=True) 285 for index, label in enumerate(labels): 286 face_position = face_boxes[index].astype(int) 287 image_data = puttext(image, face_position, label, face_number, language=‘english‘) 288 if mode == ‘video‘: 289 cv2.imshow(‘camera‘, image_data) 290 else: 291 cv2.imshow(‘camera‘, image_data) 292 cv2.imwrite(‘../result/recognition.jpg‘, image_data) 293 cv2.waitKey() 294 295 296 elif i == ‘search‘: 297 print(‘Start search‘) 298 _, _, unique_labels, unique_probability, unique_indices = 299 find_people_from_image(best_probability_model, test_features, face_number, all_data=all_data) 300 n = unique_labels.shape[0] 301 302 found_indices = [] 303 if language == ‘chinese‘: 304 info = ‘从图像中找到‘ 305 else: 306 info = ‘Found ‘ 307 answer = answer + info 308 for i in range(n): 309 if unique_labels[i] not in used_labels: 310 continue 311 index = unique_indices[i] 312 if check_sf_features(test_features[index], unique_labels[i])[0] is False: 313 continue 314 if language == ‘chinese‘: 315 answer = answer + ‘{},‘.format(unique_labels[i]) 316 info = info + ‘{},‘.format(unique_labels[i]) 317 else: 318 answer = answer + ‘{},‘.format(unique_labels[i]) 319 info = info + ‘{},‘.format(unique_labels[i]) 320 found_indices.append(i) 321 322 info = info[:-1] 323 answer = answer[:-1] 324 if language == ‘english‘: 325 info = info + ‘ in this image!‘ 326 answer = answer + ‘ in this image!‘ 327 else: 328 info = info + ‘!‘ 329 answer = answer + ‘!‘ 330 331 if fr is not None: 332 predict_info.append(info) 333 fr.show_information(predict_info, predict=True) 334 for i in found_indices: 335 index = unique_indices[i] 336 face_position = face_boxes[index].astype(int) 337 image_data = puttext(image, face_position, unique_labels[i], face_number, language=‘english‘) 338 if mode == ‘video‘: 339 cv2.imshow(‘camera‘, image_data) 340 else: 341 cv2.imshow(‘camera‘, image_data) 342 cv2.imwrite(‘../result/search.jpg‘, image_data) 343 cv2.waitKey() 344 return answer 345 else: 346 return 0 347 348 349 def process(state, image, image_size=144): 350 if state == ‘test‘: 351 test_image_data = image.copy() 352 test_features = [] 353 face_boxes, _ = detect_face.detect_face( 354 test_image_data, minsize, p_net, r_net, o_net, threshold, factor) 355 face_number = face_boxes.shape[0] 356 357 if face_number is 0: 358 print(‘face number is 0‘) 359 return None, face_number, None, 0 360 else: 361 for face_position in face_boxes: 362 face_position = face_position.astype(int) 363 face_rect = dlib.rectangle(int(face_position[0]), int( 364 face_position[1]), int(face_position[2]), int(face_position[3])) 365 366 # test_pose_landmarks = face_pose_predictor(test_image_data, face_rect) 367 # test_image_landmarks = test_pose_landmarks.parts() 368 369 aligned_data = face_aligner.align( 370 image_size, test_image_data, face_rect, landmarkIndices=align_dlib.AlignDlib.OUTER_EYES_AND_NOSE) 371 372 # plt.subplot(face_number, 1, index) 373 # plt.imshow(aligned_data) 374 # plt.axis(‘off‘) 375 # plt.show() 376 # cv2.imwrite(‘datasets/team_aligned/{}.jpg‘.format(str(index)), 377 # cv2.cvtColor(aligned_data, cv2.COLOR_RGB2BGR)) 378 379 aligned_data = facenet.prewhiten(aligned_data) 380 last_data = aligned_data.reshape((1, image_size, image_size, 3)) 381 test_features.append(sess.run(embeddings, feed_dict={ 382 images_placeholder: last_data, phase_train_placeholder: False})[0]) 383 test_features = np.array(test_features) 384 385 return test_features, face_number, face_boxes, 1 386 387 388 def puttext(image_data, face_position, label, face_number, language=‘english‘): 389 # print(‘face_position[%d]‘ % (index), face_position) 390 label_pixels, font_size, line_size, rect_size, up_offset = 391 None, None, None, None, None 392 if face_number == 1: 393 rect_size = 2 394 if language == ‘chinese‘: 395 label_pixels = 30 * len(label) # 140 * len(label) 396 font_size = 30 # 140 397 up_offset = 40 # 140 398 else: 399 label_pixels = 20 * len(label) 400 up_offset = 20 401 font_size = 1 402 line_size = 2 403 elif face_number < 4: 404 rect_size = 2 # 7 405 if language == ‘chinese‘: 406 label_pixels = 30 * len(label) # 140 * len(label) 407 font_size = 30 # 140 408 up_offset = 40 # 140 409 else: 410 label_pixels = 20 * len(label) 411 up_offset = 20 412 font_size = 1 413 line_size = 2 414 elif face_number >= 4: 415 rect_size = 2 # 6 416 if language == ‘chinese‘: 417 label_pixels = 30 * len(label) # 100 * len(label) 418 font_size = 30 # 100 419 up_offset = 40 # 100 420 else: 421 label_pixels = 10 * len(label) 422 up_offset = 20 423 font_size = 1 424 line_size = 2 425 426 dis = (label_pixels - (face_position[2] - face_position[0])) // 2 427 # dis = 0 428 429 if language == ‘chinese‘: 430 # The color coded storage order in cv2(BGR) and PIL(RGB) is different 431 cv2img = cv2.cvtColor(image_data, cv2.COLOR_BGR2RGB) 432 pilimg = Image.fromarray(cv2img) 433 # use PIL to display Chinese characters in images 434 draw = ImageDraw.Draw(pilimg) 435 font = ImageFont.truetype(‘../resources/STKAITI.TTF‘, 436 font_size, encoding="utf-8") 437 # draw.text((face_position[0] - dis, face_position[1]-up_offset), 438 # label, (0, 0, 255), font=font) 439 draw.text((face_position[0] - dis, face_position[1] - up_offset), 440 label, (0, 0, 255), font=font) 441 # convert to cv2 442 image_data = cv2.cvtColor(np.array(pilimg), cv2.COLOR_RGB2BGR) 443 else: 444 cv2.putText(image_data, label, 445 (face_position[0] - dis, face_position[1] - up_offset), 446 cv2.FONT_HERSHEY_SIMPLEX, font_size, 447 (0, 0, 255), line_size) 448 image_data = cv2.rectangle(image_data, (face_position[0], face_position[1]), 449 (face_position[2], face_position[3]), 450 (0, 255, 0), rect_size) 451 return image_data 452 453 454 if __name__ == ‘__main__‘: 455 minsize = 20 # minimum size of face 456 threshold = [0.6, 0.7, 0.7] # three steps‘s threshold 457 factor = 0.709 # scale factor 458 gpu_memory_fraction = 0.6 459 460 with tf.Graph().as_default(): 461 gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=gpu_memory_fraction) 462 sess = tf.Session(config=tf.ConfigProto(gpu_options=gpu_options, 463 log_device_placement=False)) 464 with sess.as_default(): 465 p_net, r_net, o_net = detect_face.create_mtcnn(sess, ‘../models/mtcnn/‘) 466 predictor_model = ‘../models/shape_predictor_68_face_landmarks.dat‘ 467 face_aligner = align_dlib.AlignDlib(predictor_model) 468 model_dir = ‘../models/20170512-110547/20170512-110547.pb‘ # model directory 469 tf.Graph().as_default() 470 sess = tf.Session() 471 facenet.load_model(model_dir) 472 images_placeholder = tf.get_default_graph().get_tensor_by_name("input:0") 473 embeddings = tf.get_default_graph().get_tensor_by_name("embeddings:0") 474 phase_train_placeholder = tf.get_default_graph().get_tensor_by_name("phase_train:0") 475 all_data = True 476 best_classifier_model_list = clf.load_best_classifier_model(all_data=all_data) 477 best_probability_model = clf.load_best_probability_model(all_data=True) 478 #print(‘best_probability_model‘, best_probability_model)

#以上是加载模型

479 480 fit_all_data = True 481 mode = ‘video‘ # ‘video‘ or ‘picture‘ 482 state = [‘recognition‘] # state = [‘verification‘, ‘recognition‘, ‘search‘] 483 used_labels = [‘LeBron‘] # verification只验证used_labels[0],search时会查找所有label 484 485 if mode == ‘video‘: 486 video = "http://admin:admin@192.168.0.13:8081"#ip摄像头地址,需要修改为自己的ip地址 487 # video = 0 488 capture = cv2.VideoCapture(video) 489 cv2.namedWindow("camera", 1) 490 language = ‘english‘ 491 c = 0 492 num = 0 493 frame_interval = 3 # frame intervals 494 test_features = [] 495 while True: 496 ret, frame = capture.read() 497 cv2.imshow("camera", frame) 498 answer = recognition(frame, state, fr=None, all_data=fit_all_data, language=‘english‘, ) 499 print(answer) 500 c += 1 501 key = cv2.waitKey(3) 502 if key == 27: 503 # esc键退出 504 print("esc break...") 505 break 506 if key == ord(‘ ‘): 507 # 保存一张图像 508 num = num + 1 509 filename = "frames_%s.jpg" % num 510 cv2.imwrite(‘../result/‘ + filename, frame) 511 # When everything is done, release the capture 512 capture.release() 513 cv2.destroyWindow("camera") 514 else: 515 image = cv2.imread(‘../test/42.jpg‘) 516 answer = recognition(image, fr=None, state=state, all_data=fit_all_data, language=‘english‘, ) 517 print(answer)

标签:oca list copy minimum https val finally row 输出

原文地址:https://www.cnblogs.com/DJames23/p/12404834.html