
Environment:

# Workstation: Windows 10 with a Linux subsystem (WSL)

# Operating system: CentOS 7

# Docker version: 19.03.5

# Rancher version: latest

# RKE version: v1.0.4

# K8s master node IPs: 192.168.2.175, 192.168.2.176, 192.168.2.177

# K8s worker node IPs: 192.168.2.175, 192.168.2.176, 192.168.2.177, 192.168.2.185, 192.168.2.187

# K8s etcd node IPs: 192.168.2.175, 192.168.2.176, 192.168.2.177

# Helm version: v3.1.2

# The following steps are performed on all nodes

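# Modify kernel parameters:

Minimize swapping (swap itself is turned off with swapoff below)

vim /etc/sysctl.conf

vm.swappiness=0

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

# Load br_netfilter first; the net.bridge.* keys do not exist until it is loaded

modprobe br_netfilter

sysctl -p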
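Editing /etc/sysctl.conf by hand works, but the same settings can also live in a drop-in file under /etc/sysctl.d/, which is easier to push to every node. A minimal sketch, assuming bash; the file name 99-kubernetes.conf is a hypothetical choice, and the function only writes the file, leaving module loading and applying the settings to the caller.

```shell
#!/usr/bin/env bash
# Write the kernel parameters used in this guide to a sysctl drop-in file.
# The default path is a hypothetical name; any *.conf under /etc/sysctl.d works.
write_k8s_sysctl() {
  local target="${1:-/etc/sysctl.d/99-kubernetes.conf}"
  cat > "$target" <<'EOF'
vm.swappiness = 0
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage on each node (as root):
#   write_k8s_sysctl
#   modprobe br_netfilter   # the net.bridge.* keys only exist once this module is loaded
#   sysctl --system         # reads /etc/sysctl.d/*.conf as well as /etc/sysctl.conf
```

`sysctl --system` is used instead of `sysctl -p` because `-p` without an argument only reads /etc/sysctl.conf.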

Apply immediately (lasts until the next reboot)

swapoff -a && sysctl -w vm.swappiness=0

# Edit fstab so swap is no longer mounted at boot: comment out the swap line

vi /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0
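The fstab edit can also be done non-interactively, which helps when the same change has to be made on five nodes. A minimal sketch, assuming bash and GNU sed; the function name is hypothetical, and it comments out every active line whose fields include `swap`, keeping a backup copy next to the file.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-format file so swap is not
# mounted again at boot. GNU sed -i.bak keeps the original as <file>.bak.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Match non-comment lines that contain "swap" as a whitespace-separated field
  # and prefix them with "#". Lines that are already commented are left alone.
  sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage on each node (as root):
#   comment_out_swap && swapoff -a
#   cat /proc/swaps   # only the header line should remain
```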

# Add the Docker CE yum repository (yum-config-manager is provided by yum-utils)

yum install -y yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Add the Docker daemon configuration

mkdir -p /etc/docker

vim /etc/docker/daemon.json

{
  "max-concurrent-downloads": 20,
  "data-root": "/apps/docker/data",
  "exec-root": "/apps/docker/root",
  "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"],
  "log-driver": "json-file",
  "bridge": "docker0",
  "oom-score-adjust": -1000,
  "debug": false,
  "log-opts": {
    "max-size": "100M",
    "max-file": "10"
  },
  "default-ulimits": {
    "nofile": {
      "Name": "nofile",
      "Hard": 1024000,
      "Soft": 1024000
    },
    "nproc": {
      "Name": "nproc",
      "Hard": 1024000,
      "Soft": 1024000
    },
    "core": {
      "Name": "core",
      "Hard": -1,
      "Soft": -1
    }
  }
}
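dockerd will not start if daemon.json is not valid JSON, so it is worth checking the file before restarting Docker. A minimal sketch, assuming bash and that a Python interpreter (python3 or python) is available on the node; it uses Python's standard `json.tool` module as the validator, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Check that a Docker daemon.json file is syntactically valid JSON.
# Tries python3 first, then python (CentOS 7 ships Python 2.7 as "python").
validate_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}" py
  py=$(command -v python3 || command -v python) || {
    echo "no python interpreter found" >&2
    return 2
  }
  # json.tool exits non-zero and prints the parse error on invalid input.
  "$py" -m json.tool "$file" > /dev/null
}

# Usage (as root), once Docker is installed:
#   validate_daemon_json && systemctl restart docker
#   docker info --format '{{.DockerRootDir}}'   # expected: /apps/docker/data
```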

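# Install dependencies (jq here and python-pip below come from the EPEL repository on CentOS 7)

yum install -y epel-release

yum install -y yum-utils ipvsadm telnet wget net-tools conntrack ipset jq iptables curl sysstat libseccomp socat nfs-utils fuse fuse-devel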

# Install Docker dependencies

yum install -y python-pip python-devel yum-utils device-mapper-persistent-data lvm2

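# Install Docker

yum install -y docker-ce

# To pin the Docker version listed in the environment section instead of installing the newest release:

# yum install -y docker-ce-19.03.5 docker-ce-cli-19.03.5 containerd.io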

# Reload the systemd unit configuration

systemctl daemon-reload

# Restart Docker

systemctl restart docker

# Start Docker at boot

systemctl enable docker
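RKE checks the Docker version on every node and, with `ignore_docker_version: false` as in the cluster.yml used here, stops if a node runs an unsupported release. A minimal sketch for checking this up front, assuming bash; the list of accepted major.minor releases below is an assumption based on RKE v1.0.x and should be confirmed against the RKE release notes for the version you deploy.

```shell
#!/usr/bin/env bash
# Docker major.minor releases assumed to be accepted by RKE v1.0.x.
# Verify this list against the RKE release notes before relying on it.
RKE_DOCKER_RELEASES="1.13 17.03 17.06 17.09 18.06 18.09 19.03"

# Return 0 if the given Docker version (e.g. 19.03.5) belongs to one of the
# releases above, 1 otherwise.
docker_version_supported() {
  local version="$1" major_minor release
  major_minor=$(printf '%s' "$version" | cut -d. -f1,2)
  for release in $RKE_DOCKER_RELEASES; do
    [ "$major_minor" = "$release" ] && return 0
  done
  return 1
}

# Usage on a node:
#   docker_version_supported "$(docker version --format '{{.Server.Version}}')" \
#     && echo "Docker version accepted by RKE"
```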

# Download RKE

mkdir rke

cd rke

wget https://github.com/rancher/rke/releases/download/v1.0.4/rke_linux-amd64

# Install RKE

mv rke_linux-amd64 /bin/rke

chmod +x /bin/rke
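The download and install steps can be folded into one helper so that upgrading RKE later only means changing the version string. A minimal sketch, assuming bash and wget; the function names are hypothetical, and the URL follows the same release-asset pattern as the wget command above.

```shell
#!/usr/bin/env bash
# Build the GitHub release URL for an RKE binary (asset name rke_<os>-<arch>).
rke_release_url() {
  local version="${1:-v1.0.4}" platform="${2:-linux-amd64}"
  printf 'https://github.com/rancher/rke/releases/download/%s/rke_%s\n' "$version" "$platform"
}

# Download an RKE release and install it as an executable in one step.
# install(1) sets the mode, so a separate chmod +x is not needed.
install_rke() {
  local version="${1:-v1.0.4}" dest="${2:-/bin/rke}" tmp rc=0
  tmp=$(mktemp) || return 1
  wget -q -O "$tmp" "$(rke_release_url "$version")" || { rm -f "$tmp"; return 1; }
  install -m 0755 "$tmp" "$dest" || rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage (as root):
#   install_rke v1.0.4 && rke --version
```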

# Download Helm

wget https://get.helm.sh/helm-v3.1.2-linux-amd64.tar.gz

# Extract helm-v3.1.2-linux-amd64.tar.gz

tar -xvf helm-v3.1.2-linux-amd64.tar.gz

cd linux-amd64/

mv helm /bin/
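Instead of extracting the whole archive and moving the binary by hand, GNU tar can extract just the helm binary into the target directory. A minimal sketch, assuming bash, GNU tar, and that the archive stores the binary as linux-amd64/helm (as the `cd linux-amd64/` step above implies); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Extract only the helm binary from a helm-<version>-linux-amd64.tar.gz archive
# straight into a target directory and make sure it is executable.
extract_helm() {
  local tarball="$1" dest="${2:-/bin}"
  # --strip-components=1 drops the leading linux-amd64/ directory,
  # so the file lands directly in $dest.
  tar -xzf "$tarball" -C "$dest" --strip-components=1 linux-amd64/helm || return 1
  chmod 0755 "$dest/helm"
}

# Usage (as root):
#   extract_helm helm-v3.1.2-linux-amd64.tar.gz /bin && helm version
```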

# Create the rke user

# On all nodes, including the workstation that runs rke

useradd rke

passwd rke # set a password

mkdir -p /home/rke/.ssh

chown -R rke:rke /home/rke/.ssh/

usermod -aG docker rke

# Generate the SSH key pair used by rke

su rke

bash

ssh-keygen # press Enter to accept all defaults
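ssh-copy-id has to be run once for every node in the cluster. A minimal sketch, assuming bash and the node IPs from the environment section; the helper names are hypothetical. `unique_nodes` merges the master, worker, and etcd lists into one de-duplicated list so each node is handled once, and `distribute_rke_key` copies the key and then confirms that the rke user can reach Docker over SSH, which RKE needs.

```shell
#!/usr/bin/env bash
# Merge comma- or space-separated IP lists into one numerically sorted list
# without duplicates.
unique_nodes() {
  printf '%s\n' "$@" | tr ', ' '\n\n' | sed '/^$/d' \
    | sort -u -t. -k1,1n -k2,2n -k3,3n -k4,4n
}

# Copy the rke user's public key to each node, then confirm that the rke user
# can log in without a password and talk to the Docker daemon there.
distribute_rke_key() {
  local ip
  for ip in "$@"; do
    ssh-copy-id "rke@$ip" || return 1
    ssh -o BatchMode=yes "rke@$ip" docker version > /dev/null \
      || { echo "rke@$ip cannot run docker" >&2; return 1; }
  done
}

# Usage (as the rke user on the workstation):
#   distribute_rke_key $(unique_nodes \
#     192.168.2.175,192.168.2.176,192.168.2.177 \
#     192.168.2.175,192.168.2.176,192.168.2.177,192.168.2.185,192.168.2.187)
```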

ssh-copy-id rke@<node-IP> # distribute the public key; repeat for every node

# Run on the workstation

cd rke

rke config --name cluster.yml

# Generate a deployment config with a single master node; for multiple nodes, simply add more nodes

root@Qist:/tmp# rke config --name cluster.yml

[+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]:

[+] Number of Hosts [1]:

[+] SSH Address of host (1) [none]: 192.168.2.175

[+] SSH Port of host (1) [22]: 22

[+] SSH Private Key Path of host (192.168.2.175) [none]:

[-] You have entered empty SSH key path, trying fetch from SSH key parameter

[+] SSH Private Key of host (192.168.2.175) [none]:

[-] You have entered empty SSH key, defaulting to cluster level SSH key: ~/.ssh/id_rsa

[+] SSH User of host (192.168.2.175) [ubuntu]: rke

[+] Is host (192.168.2.175) a Control Plane host (y/n)? [y]: y

[+] Is host (192.168.2.175) a Worker host (y/n)? [n]: y

[+] Is host (192.168.2.175) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (192.168.2.175) [none]:

[+] Internal IP of host (192.168.2.175) [none]:

[+] Docker socket path on host (192.168.2.175) [/var/run/docker.sock]:

[+] Network Plugin Type (flannel, calico, weave, canal) [canal]: flannel

[+] Authentication Strategy [x509]:

[+] Authorization Mode (rbac, none) [rbac]: rbac

[+] Kubernetes Docker image [rancher/hyperkube:v1.17.2-rancher1]: rancher/hyperkube:v1.17.3-rancher1

[+] Cluster domain [cluster.local]:

[+] Service Cluster IP Range [10.43.0.0/16]:

[+] Enable PodSecurityPolicy [n]:

[+] Cluster Network CIDR [10.42.0.0/16]:

[+] Cluster DNS Service IP [10.43.0.10]:

[+] Add addon manifest URLs or YAML files [no]:

# Switch kube-proxy forwarding to IPVS: find the kubeproxy section and add proxy-mode: "ipvs" under extra_args

  kubeproxy:
    image: ""
    extra_args:
      proxy-mode: "ipvs"
    extra_binds: []
    extra_env: []
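kube-proxy can only run in IPVS mode if the IPVS kernel modules are available on each node; otherwise it falls back to iptables mode. A minimal sketch, assuming bash and the CentOS 7 3.10 kernel, where connection tracking is provided by nf_conntrack_ipv4 (newer kernels merged it into nf_conntrack). The module list and function name are assumptions of this sketch; it reports which modules are missing from /proc/modules so they can be loaded with modprobe.

```shell
#!/usr/bin/env bash
# IPVS-related modules for the CentOS 7 (3.10) kernel. On kernels >= 4.19,
# replace nf_conntrack_ipv4 with nf_conntrack.
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Print each module from IPVS_MODULES that is not listed in a /proc/modules-style
# file (the first field of every line is the module name). Modules built into
# the kernel do not appear in /proc/modules, so they would be reported too.
missing_ipvs_modules() {
  local proc_modules="${1:-/proc/modules}" m
  for m in $IPVS_MODULES; do
    grep -q "^${m} " "$proc_modules" || echo "$m"
  done
}

# Usage on each node (as root):
#   for m in $(missing_ipvs_modules); do modprobe -- "$m"; done
#   printf '%s\n' $IPVS_MODULES > /etc/modules-load.d/ipvs.conf   # load at boot
#   ipvsadm -Ln   # after rke up, lists the virtual servers kube-proxy created
```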

# Edit the cluster configuration file

vim cluster.yml

# If you intended to deploy Kubernetes in an air-gapped environment,

# please consult the documentation on how to configure custom RKE images.

nodes:
- address: 192.168.2.175
  port: "22"
  internal_address: ""
  role:
  - etcd
  - controlplane
  - worker
  hostname_override: ""
  user: rke
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 192.168.2.176
  port: "22"
  internal_address: ""
  role:
  - etcd
  - controlplane
  - worker
  hostname_override: ""
  user: rke
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 192.168.2.177
  port: "22"
  internal_address: ""
  role:
  - etcd
  - controlplane
  - worker
  hostname_override: ""
  user: rke
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 192.168.2.185
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rke
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 192.168.2.187
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rke
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
services:
  etcd:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args:
      proxy-mode: "ipvs"
    extra_binds: []
    extra_env: []
network:
  plugin: flannel
  options: {}
  mtu: 0
  node_selector: {}
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/coreos-etcd:v3.4.3-rancher1
  alpine: rancher/rke-tools:v0.1.52
  nginx_proxy: rancher/rke-tools:v0.1.52
  cert_downloader: rancher/rke-tools:v0.1.52
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.52
  kubedns: rancher/k8s-dns-kube-dns:1.15.0
  dnsmasq: rancher/k8s-dns-dnsmasq-nanny:1.15.0
  kubedns_sidecar: rancher/k8s-dns-sidecar:1.15.0
  kubedns_autoscaler: rancher/cluster-proportional-autoscaler:1.7.1
  coredns: rancher/coredns-coredns:1.6.5
  coredns_autoscaler: rancher/cluster-proportional-autoscaler:1.7.1
  kubernetes: rancher/hyperkube:v1.17.3-rancher1
  flannel: rancher/coreos-flannel:v0.11.0-rancher1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher5
  calico_node: rancher/calico-node:v3.10.2
  calico_cni: rancher/calico-cni:v3.10.2
  calico_controllers: rancher/calico-kube-controllers:v3.10.2
  calico_ctl: rancher/calico-ctl:v2.0.0
  calico_flexvol: rancher/calico-pod2daemon-flexvol:v3.10.2
  canal_node: rancher/calico-node:v3.10.2
  canal_cni: rancher/calico-cni:v3.10.2
  canal_flannel: rancher/coreos-flannel:v0.11.0
  canal_flexvol: rancher/calico-pod2daemon-flexvol:v3.10.2
  weave_node: weaveworks/weave-kube:2.5.2
  weave_cni: weaveworks/weave-npc:2.5.2
  pod_infra_container: rancher/pause:3.1
  ingress: rancher/nginx-ingress-controller:nginx-0.25.1-rancher1
  ingress_backend: rancher/nginx-ingress-controller-defaultbackend:1.5-rancher1
  metrics_server: rancher/metrics-server:v0.3.6
  windows_pod_infra_container: rancher/kubelet-pause:v0.1.3
ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: false
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
monitoring:
  provider: ""
  options: {}
  node_selector: {}
restore:
  restore: false
  snapshot_name: ""

dns: null

# Switch to the rke user

su rke

# Start a bash shell

bash

# Change to the directory that holds the rke configuration

cd rke
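etcd needs a majority of its members to stay available, so an odd number of etcd nodes (three in this cluster) is the usual recommendation. A minimal pre-flight sketch, assuming bash and that node roles are listed one per line as in the cluster.yml above; the function names are hypothetical, and it counts etcd entries with a plain-text match rather than a real YAML parser.

```shell
#!/usr/bin/env bash
# Count role entries named "etcd" in an RKE cluster.yml, i.e. lines like
# "  - etcd". This is a text match, not a YAML parser.
count_etcd_nodes() {
  grep -cE '^[[:space:]]*-[[:space:]]+etcd[[:space:]]*$' "${1:-cluster.yml}"
}

# Succeed only if the file defines an odd, non-zero number of etcd nodes.
etcd_count_ok() {
  local n
  # grep -c prints 0 and exits 1 when nothing matches; keep the printed count.
  n=$(count_etcd_nodes "$1" || true)
  n=${n:-0}
  [ "$n" -gt 0 ] && [ $((n % 2)) -eq 1 ]
}

# Usage, in the directory that holds cluster.yml:
#   etcd_count_ok cluster.yml && rke up
```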

# Start the deployment

rke up

rke@Qist:/mnt/g/work/rke$ rke up

INFO[0000] Running RKE version: v1.0.4

INFO[0000] Initiating Kubernetes cluster

INFO[0000] [dialer] Setup tunnel for host [192.168.2.176]

INFO[0000] [dialer] Setup tunnel for host [192.168.2.175]

INFO[0000] [dialer] Setup tunnel for host [192.168.2.185]

INFO[0000] [dialer] Setup tunnel for host [192.168.2.177]

INFO[0000] [dialer] Setup tunnel for host [192.168.2.187]

INFO[0000] Checking if container [cluster-state-deployer] is running on host [192.168.2.176], try #1

INFO[0000] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0001] Starting container [cluster-state-deployer] on host [192.168.2.176], try #1

INFO[0002] [state] Successfully started [cluster-state-deployer] container on host [192.168.2.176]

INFO[0002] Checking if container [cluster-state-deployer] is running on host [192.168.2.177], try #1

INFO[0002] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0003] Starting container [cluster-state-deployer] on host [192.168.2.177], try #1

INFO[0005] [state] Successfully started [cluster-state-deployer] container on host [192.168.2.177]

INFO[0005] Checking if container [cluster-state-deployer] is running on host [192.168.2.185], try #1

INFO[0005] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0006] Starting container [cluster-state-deployer] on host [192.168.2.185], try #1

INFO[0007] [state] Successfully started [cluster-state-deployer] container on host [192.168.2.185]

INFO[0007] Checking if container [cluster-state-deployer] is running on host [192.168.2.187], try #1

INFO[0007] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0007] Starting container [cluster-state-deployer] on host [192.168.2.187], try #1

INFO[0008] [state] Successfully started [cluster-state-deployer] container on host [192.168.2.187]

INFO[0008] Checking if container [cluster-state-deployer] is running on host [192.168.2.175], try #1

INFO[0008] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0009] Starting container [cluster-state-deployer] on host [192.168.2.175], try #1

INFO[0010] [state] Successfully started [cluster-state-deployer] container on host [192.168.2.175]

INFO[0010] [certificates] Generating CA kubernetes certificates

INFO[0010] [certificates] Generating Kubernetes API server aggregation layer requestheader client CA certificates

INFO[0010] [certificates] Generating Kubernetes API server certificates

INFO[0010] [certificates] Generating Service account token key

INFO[0010] [certificates] Generating Kube Controller certificates

INFO[0010] [certificates] Generating Kube Scheduler certificates

INFO[0010] [certificates] Generating Kube Proxy certificates

INFO[0010] [certificates] Generating Node certificate

INFO[0010] [certificates] Generating admin certificates and kubeconfig

INFO[0011] [certificates] Generating Kubernetes API server proxy client certificates

INFO[0011] [certificates] Generating kube-etcd-192-168-2-175 certificate and key

INFO[0011] [certificates] Generating kube-etcd-192-168-2-176 certificate and key

INFO[0011] [certificates] Generating kube-etcd-192-168-2-177 certificate and key

INFO[0011] Successfully Deployed state file at [./cluster.rkestate]

INFO[0011] Building Kubernetes cluster

INFO[0011] [dialer] Setup tunnel for host [192.168.2.187]

INFO[0011] [dialer] Setup tunnel for host [192.168.2.176]

INFO[0011] [dialer] Setup tunnel for host [192.168.2.175]

INFO[0011] [dialer] Setup tunnel for host [192.168.2.177]

INFO[0011] [dialer] Setup tunnel for host [192.168.2.185]

INFO[0012] [network] Deploying port listener containers

INFO[0012] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0012] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0012] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0012] Starting container [rke-etcd-port-listener] on host [192.168.2.176], try #1

INFO[0012] Starting container [rke-etcd-port-listener] on host [192.168.2.175], try #1

INFO[0012] Starting container [rke-etcd-port-listener] on host [192.168.2.177], try #1

INFO[0012] [network] Successfully started [rke-etcd-port-listener] container on host [192.168.2.176]

INFO[0012] [network] Successfully started [rke-etcd-port-listener] container on host [192.168.2.175]

INFO[0013] [network] Successfully started [rke-etcd-port-listener] container on host [192.168.2.177]

INFO[0013] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0013] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0013] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0013] Starting container [rke-cp-port-listener] on host [192.168.2.175], try #1

INFO[0013] Starting container [rke-cp-port-listener] on host [192.168.2.176], try #1

INFO[0013] Starting container [rke-cp-port-listener] on host [192.168.2.177], try #1

INFO[0013] [network] Successfully started [rke-cp-port-listener] container on host [192.168.2.176]

INFO[0013] [network] Successfully started [rke-cp-port-listener] container on host [192.168.2.175]

INFO[0014] [network] Successfully started [rke-cp-port-listener] container on host [192.168.2.177]

INFO[0014] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0014] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0014] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0014] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0014] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0014] Starting container [rke-worker-port-listener] on host [192.168.2.175], try #1

INFO[0014] Starting container [rke-worker-port-listener] on host [192.168.2.185], try #1

INFO[0014] Starting container [rke-worker-port-listener] on host [192.168.2.177], try #1

INFO[0014] Starting container [rke-worker-port-listener] on host [192.168.2.176], try #1

INFO[0014] Starting container [rke-worker-port-listener] on host [192.168.2.187], try #1

INFO[0014] [network] Successfully started [rke-worker-port-listener] container on host [192.168.2.175]

INFO[0014] [network] Successfully started [rke-worker-port-listener] container on host [192.168.2.176]

INFO[0015] [network] Successfully started [rke-worker-port-listener] container on host [192.168.2.177]

INFO[0015] [network] Successfully started [rke-worker-port-listener] container on host [192.168.2.185]

INFO[0015] [network] Successfully started [rke-worker-port-listener] container on host [192.168.2.187]

INFO[0015] [network] Port listener containers deployed successfully

INFO[0015] [network] Running etcd <-> etcd port checks

INFO[0015] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0015] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0015] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0015] Starting container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0015] Starting container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0015] Starting container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0016] [network] Successfully started [rke-port-checker] container on host [192.168.2.177]

INFO[0016] [network] Successfully started [rke-port-checker] container on host [192.168.2.175]

INFO[0016] Removing container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0016] [network] Successfully started [rke-port-checker] container on host [192.168.2.176]

INFO[0016] Removing container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0017] Removing container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0017] [network] Running control plane -> etcd port checks

INFO[0017] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0017] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0017] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0017] Starting container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0017] Starting container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0017] Starting container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0017] [network] Successfully started [rke-port-checker] container on host [192.168.2.176]

INFO[0017] [network] Successfully started [rke-port-checker] container on host [192.168.2.175]

INFO[0017] Removing container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0017] Removing container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0017] [network] Successfully started [rke-port-checker] container on host [192.168.2.177]

INFO[0018] Removing container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0018] [network] Running control plane -> worker port checks

INFO[0018] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0018] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0018] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0018] Starting container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0018] [network] Successfully started [rke-port-checker] container on host [192.168.2.176]

INFO[0018] Starting container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0018] Starting container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0018] Removing container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0018] [network] Successfully started [rke-port-checker] container on host [192.168.2.175]

INFO[0018] [network] Successfully started [rke-port-checker] container on host [192.168.2.177]

INFO[0018] Removing container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0019] Removing container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0019] [network] Running workers -> control plane port checks

INFO[0019] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0019] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0019] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0019] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0019] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0019] Starting container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0019] Starting container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0019] Starting container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0019] Starting container [rke-port-checker] on host [192.168.2.185], try #1

INFO[0019] Starting container [rke-port-checker] on host [192.168.2.187], try #1

INFO[0019] [network] Successfully started [rke-port-checker] container on host [192.168.2.175]

INFO[0019] [network] Successfully started [rke-port-checker] container on host [192.168.2.176]

INFO[0019] Removing container [rke-port-checker] on host [192.168.2.175], try #1

INFO[0019] Removing container [rke-port-checker] on host [192.168.2.176], try #1

INFO[0019] [network] Successfully started [rke-port-checker] container on host [192.168.2.177]

INFO[0019] Removing container [rke-port-checker] on host [192.168.2.177], try #1

INFO[0019] [network] Successfully started [rke-port-checker] container on host [192.168.2.185]

INFO[0019] [network] Successfully started [rke-port-checker] container on host [192.168.2.187]

INFO[0020] Removing container [rke-port-checker] on host [192.168.2.185], try #1

INFO[0020] Removing container [rke-port-checker] on host [192.168.2.187], try #1

INFO[0020] [network] Checking KubeAPI port Control Plane hosts

INFO[0020] [network] Removing port listener containers

INFO[0020] Removing container [rke-etcd-port-listener] on host [192.168.2.175], try #1

INFO[0020] Removing container [rke-etcd-port-listener] on host [192.168.2.177], try #1

INFO[0020] Removing container [rke-etcd-port-listener] on host [192.168.2.176], try #1

INFO[0020] [remove/rke-etcd-port-listener] Successfully removed container on host [192.168.2.177]

INFO[0020] [remove/rke-etcd-port-listener] Successfully removed container on host [192.168.2.176]

INFO[0020] [remove/rke-etcd-port-listener] Successfully removed container on host [192.168.2.175]

INFO[0020] Removing container [rke-cp-port-listener] on host [192.168.2.175], try #1

INFO[0020] Removing container [rke-cp-port-listener] on host [192.168.2.176], try #1

INFO[0020] Removing container [rke-cp-port-listener] on host [192.168.2.177], try #1

INFO[0021] [remove/rke-cp-port-listener] Successfully removed container on host [192.168.2.176]

INFO[0021] [remove/rke-cp-port-listener] Successfully removed container on host [192.168.2.177]

INFO[0021] [remove/rke-cp-port-listener] Successfully removed container on host [192.168.2.175]

INFO[0021] Removing container [rke-worker-port-listener] on host [192.168.2.175], try #1

INFO[0021] Removing container [rke-worker-port-listener] on host [192.168.2.176], try #1

INFO[0021] Removing container [rke-worker-port-listener] on host [192.168.2.177], try #1

INFO[0021] Removing container [rke-worker-port-listener] on host [192.168.2.185], try #1

INFO[0021] Removing container [rke-worker-port-listener] on host [192.168.2.187], try #1

INFO[0021] [remove/rke-worker-port-listener] Successfully removed container on host [192.168.2.175]

INFO[0021] [remove/rke-worker-port-listener] Successfully removed container on host [192.168.2.176]

INFO[0021] [remove/rke-worker-port-listener] Successfully removed container on host [192.168.2.177]

INFO[0021] [remove/rke-worker-port-listener] Successfully removed container on host [192.168.2.187]

INFO[0021] [remove/rke-worker-port-listener] Successfully removed container on host [192.168.2.185]

INFO[0021] [network] Port listener containers removed successfully

INFO[0021] [certificates] Deploying kubernetes certificates to Cluster nodes

INFO[0021] Checking if container [cert-deployer] is running on host [192.168.2.185], try #1

INFO[0021] Checking if container [cert-deployer] is running on host [192.168.2.176], try #1

INFO[0021] Checking if container [cert-deployer] is running on host [192.168.2.175], try #1

INFO[0021] Checking if container [cert-deployer] is running on host [192.168.2.187], try #1

INFO[0021] Checking if container [cert-deployer] is running on host [192.168.2.177], try #1

INFO[0021] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0021] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0021] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0021] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0021] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0021] Starting container [cert-deployer] on host [192.168.2.176], try #1

INFO[0021] Starting container [cert-deployer] on host [192.168.2.175], try #1

INFO[0021] Starting container [cert-deployer] on host [192.168.2.187], try #1

INFO[0021] Starting container [cert-deployer] on host [192.168.2.177], try #1

INFO[0021] Starting container [cert-deployer] on host [192.168.2.185], try #1

INFO[0022] Checking if container [cert-deployer] is running on host [192.168.2.176], try #1

INFO[0022] Checking if container [cert-deployer] is running on host [192.168.2.175], try #1

INFO[0022] Checking if container [cert-deployer] is running on host [192.168.2.187], try #1

INFO[0022] Checking if container [cert-deployer] is running on host [192.168.2.177], try #1

INFO[0022] Checking if container [cert-deployer] is running on host [192.168.2.185], try #1

INFO[0027] Checking if container [cert-deployer] is running on host [192.168.2.176], try #1

INFO[0027] Removing container [cert-deployer] on host [192.168.2.176], try #1

INFO[0027] Checking if container [cert-deployer] is running on host [192.168.2.175], try #1

INFO[0027] Removing container [cert-deployer] on host [192.168.2.175], try #1

INFO[0027] Checking if container [cert-deployer] is running on host [192.168.2.187], try #1

INFO[0027] Removing container [cert-deployer] on host [192.168.2.187], try #1

INFO[0027] Checking if container [cert-deployer] is running on host [192.168.2.177], try #1

INFO[0027] Removing container [cert-deployer] on host [192.168.2.177], try #1

INFO[0027] Checking if container [cert-deployer] is running on host [192.168.2.185], try #1

INFO[0027] Removing container [cert-deployer] on host [192.168.2.185], try #1

INFO[0027] [reconcile] Rebuilding and updating local kube config

INFO[0027] Successfully Deployed local admin kubeconfig at [./kube_config_cluster.yml]

INFO[0029] Successfully Deployed local admin kubeconfig at [./kube_config_cluster.yml]

INFO[0031] Successfully Deployed local admin kubeconfig at [./kube_config_cluster.yml]

INFO[0033] [certificates] Successfully deployed kubernetes certificates to Cluster nodes

INFO[0033] [reconcile] Reconciling cluster state

INFO[0033] [reconcile] This is newly generated cluster

INFO[0033] Pre-pulling kubernetes images

INFO[0033] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.177]

INFO[0033] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.175]

INFO[0033] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.176]

INFO[0033] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.185]

INFO[0033] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.187]

INFO[0033] Kubernetes images pulled successfully

INFO[0033] [etcd] Building up etcd plane..

INFO[0033] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0033] Starting container [etcd-fix-perm] on host [192.168.2.175], try #1

INFO[0034] Successfully started [etcd-fix-perm] container on host [192.168.2.175]

INFO[0034] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.175]

INFO[0034] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.175]

INFO[0034] Container [etcd-fix-perm] is still running on host [192.168.2.175]

INFO[0035] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.175]

INFO[0035] Removing container [etcd-fix-perm] on host [192.168.2.175], try #1

INFO[0035] [remove/etcd-fix-perm] Successfully removed container on host [192.168.2.175]

INFO[0035] Image [rancher/coreos-etcd:v3.4.3-rancher1] exists on host [192.168.2.175]

INFO[0035] Starting container [etcd] on host [192.168.2.175], try #1

INFO[0036] [etcd] Successfully started [etcd] container on host [192.168.2.175]

INFO[0036] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [192.168.2.175]

INFO[0036] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0036] Starting container [etcd-rolling-snapshots] on host [192.168.2.175], try #1

INFO[0036] [etcd] Successfully started [etcd-rolling-snapshots] container on host [192.168.2.175]

INFO[0041] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0041] Starting container [rke-bundle-cert] on host [192.168.2.175], try #1

INFO[0042] [certificates] Successfully started [rke-bundle-cert] container on host [192.168.2.175]

INFO[0042] Waiting for [rke-bundle-cert] container to exit on host [192.168.2.175]

INFO[0042] Container [rke-bundle-cert] is still running on host [192.168.2.175]

INFO[0043] Waiting for [rke-bundle-cert] container to exit on host [192.168.2.175]

INFO[0043] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [192.168.2.175]

INFO[0043] Removing container [rke-bundle-cert] on host [192.168.2.175], try #1

INFO[0043] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0043] Starting container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0043] [etcd] Successfully started [rke-log-linker] container on host [192.168.2.175]

INFO[0043] Removing container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0043] [remove/rke-log-linker] Successfully removed container on host [192.168.2.175]

INFO[0043] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0044] Starting container [etcd-fix-perm] on host [192.168.2.176], try #1

INFO[0044] Successfully started [etcd-fix-perm] container on host [192.168.2.176]

INFO[0044] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.176]

INFO[0044] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.176]

INFO[0044] Container [etcd-fix-perm] is still running on host [192.168.2.176]

INFO[0045] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.176]

INFO[0045] Removing container [etcd-fix-perm] on host [192.168.2.176], try #1

INFO[0045] [remove/etcd-fix-perm] Successfully removed container on host [192.168.2.176]

INFO[0045] Image [rancher/coreos-etcd:v3.4.3-rancher1] exists on host [192.168.2.176]

INFO[0045] Starting container [etcd] on host [192.168.2.176], try #1

INFO[0046] [etcd] Successfully started [etcd] container on host [192.168.2.176]

INFO[0046] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [192.168.2.176]

INFO[0046] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0046] Starting container [etcd-rolling-snapshots] on host [192.168.2.176], try #1

INFO[0046] [etcd] Successfully started [etcd-rolling-snapshots] container on host [192.168.2.176]

INFO[0051] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0051] Starting container [rke-bundle-cert] on host [192.168.2.176], try #1

INFO[0052] [certificates] Successfully started [rke-bundle-cert] container on host [192.168.2.176]

INFO[0052] Waiting for [rke-bundle-cert] container to exit on host [192.168.2.176]

INFO[0052] Container [rke-bundle-cert] is still running on host [192.168.2.176]

INFO[0053] Waiting for [rke-bundle-cert] container to exit on host [192.168.2.176]

INFO[0053] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [192.168.2.176]

INFO[0053] Removing container [rke-bundle-cert] on host [192.168.2.176], try #1

INFO[0053] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0053] Starting container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0053] [etcd] Successfully started [rke-log-linker] container on host [192.168.2.176]

INFO[0053] Removing container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0054] [remove/rke-log-linker] Successfully removed container on host [192.168.2.176]

INFO[0054] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0054] Starting container [etcd-fix-perm] on host [192.168.2.177], try #1

INFO[0054] Successfully started [etcd-fix-perm] container on host [192.168.2.177]

INFO[0054] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.177]

INFO[0054] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.177]

INFO[0054] Container [etcd-fix-perm] is still running on host [192.168.2.177]

INFO[0055] Waiting for [etcd-fix-perm] container to exit on host [192.168.2.177]

INFO[0055] Removing container [etcd-fix-perm] on host [192.168.2.177], try #1

INFO[0056] [remove/etcd-fix-perm] Successfully removed container on host [192.168.2.177]

INFO[0056] Image [rancher/coreos-etcd:v3.4.3-rancher1] exists on host [192.168.2.177]

INFO[0056] Starting container [etcd] on host [192.168.2.177], try #1

INFO[0056] [etcd] Successfully started [etcd] container on host [192.168.2.177]

INFO[0056] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [192.168.2.177]

INFO[0056] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0057] Starting container [etcd-rolling-snapshots] on host [192.168.2.177], try #1

INFO[0057] [etcd] Successfully started [etcd-rolling-snapshots] container on host [192.168.2.177]

INFO[0062] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0062] Starting container [rke-bundle-cert] on host [192.168.2.177], try #1

INFO[0063] [certificates] Successfully started [rke-bundle-cert] container on host [192.168.2.177]

INFO[0063] Waiting for [rke-bundle-cert] container to exit on host [192.168.2.177]

INFO[0063] Container [rke-bundle-cert] is still running on host [192.168.2.177]

INFO[0064] Waiting for [rke-bundle-cert] container to exit on host [192.168.2.177]

INFO[0064] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [192.168.2.177]

INFO[0064] Removing container [rke-bundle-cert] on host [192.168.2.177], try #1

INFO[0064] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0064] Starting container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0064] [etcd] Successfully started [rke-log-linker] container on host [192.168.2.177]

INFO[0064] Removing container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0065] [remove/rke-log-linker] Successfully removed container on host [192.168.2.177]

INFO[0065] [etcd] Successfully started etcd plane.. Checking etcd cluster health

INFO[0065] [controlplane] Building up Controller Plane..

INFO[0065] Checking if container [service-sidekick] is running on host [192.168.2.175], try #1

INFO[0065] Checking if container [service-sidekick] is running on host [192.168.2.176], try #1

INFO[0065] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0065] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0065] Checking if container [service-sidekick] is running on host [192.168.2.177], try #1

INFO[0065] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0065] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.175]

INFO[0065] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.176]

INFO[0065] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.177]

INFO[0065] Starting container [kube-apiserver] on host [192.168.2.176], try #1

INFO[0066] Starting container [kube-apiserver] on host [192.168.2.177], try #1

INFO[0066] [controlplane] Successfully started [kube-apiserver] container on host [192.168.2.176]

INFO[0066] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [192.168.2.176]

INFO[0066] Starting container [kube-apiserver] on host [192.168.2.175], try #1

INFO[0066] [controlplane] Successfully started [kube-apiserver] container on host [192.168.2.177]

INFO[0066] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [192.168.2.177]

INFO[0067] [controlplane] Successfully started [kube-apiserver] container on host [192.168.2.175]

INFO[0067] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [192.168.2.175]

INFO[0079] [healthcheck] service [kube-apiserver] on host [192.168.2.176] is healthy

INFO[0079] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0079] Starting container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0080] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.176]

INFO[0080] Removing container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0080] [healthcheck] service [kube-apiserver] on host [192.168.2.175] is healthy

INFO[0080] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0080] Starting container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0080] [remove/rke-log-linker] Successfully removed container on host [192.168.2.176]

INFO[0080] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.176]

INFO[0080] Starting container [kube-controller-manager] on host [192.168.2.176], try #1

INFO[0081] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.175]

INFO[0081] Removing container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0081] [controlplane] Successfully started [kube-controller-manager] container on host [192.168.2.176]

INFO[0081] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [192.168.2.176]

INFO[0081] [remove/rke-log-linker] Successfully removed container on host [192.168.2.175]

INFO[0081] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.175]

INFO[0081] Starting container [kube-controller-manager] on host [192.168.2.175], try #1

INFO[0082] [controlplane] Successfully started [kube-controller-manager] container on host [192.168.2.175]

INFO[0082] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [192.168.2.175]

INFO[0082] [healthcheck] service [kube-apiserver] on host [192.168.2.177] is healthy

INFO[0082] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0082] Starting container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0083] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.177]

INFO[0083] Removing container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0083] [remove/rke-log-linker] Successfully removed container on host [192.168.2.177]

INFO[0083] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.177]

INFO[0083] Starting container [kube-controller-manager] on host [192.168.2.177], try #1

INFO[0084] [controlplane] Successfully started [kube-controller-manager] container on host [192.168.2.177]

INFO[0084] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [192.168.2.177]

INFO[0086] [healthcheck] service [kube-controller-manager] on host [192.168.2.176] is healthy

INFO[0086] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0086] Starting container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0086] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.176]

INFO[0086] Removing container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0087] [remove/rke-log-linker] Successfully removed container on host [192.168.2.176]

INFO[0087] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.176]

INFO[0087] [healthcheck] service [kube-controller-manager] on host [192.168.2.175] is healthy

INFO[0087] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0087] Starting container [kube-scheduler] on host [192.168.2.176], try #1

INFO[0087] Starting container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0087] [controlplane] Successfully started [kube-scheduler] container on host [192.168.2.176]

INFO[0087] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [192.168.2.176]

INFO[0087] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.175]

INFO[0087] Removing container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0088] [remove/rke-log-linker] Successfully removed container on host [192.168.2.175]

INFO[0088] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.175]

INFO[0088] Starting container [kube-scheduler] on host [192.168.2.175], try #1

INFO[0088] [controlplane] Successfully started [kube-scheduler] container on host [192.168.2.175]

INFO[0088] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [192.168.2.175]

INFO[0090] [healthcheck] service [kube-controller-manager] on host [192.168.2.177] is healthy

INFO[0090] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0090] Starting container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0090] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.177]

INFO[0090] Removing container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0091] [remove/rke-log-linker] Successfully removed container on host [192.168.2.177]

INFO[0091] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.177]

INFO[0091] Starting container [kube-scheduler] on host [192.168.2.177], try #1

INFO[0091] [controlplane] Successfully started [kube-scheduler] container on host [192.168.2.177]

INFO[0091] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [192.168.2.177]

INFO[0092] [healthcheck] service [kube-scheduler] on host [192.168.2.176] is healthy

INFO[0092] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0093] Starting container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0093] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.176]

INFO[0093] Removing container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0093] [remove/rke-log-linker] Successfully removed container on host [192.168.2.176]

INFO[0093] [healthcheck] service [kube-scheduler] on host [192.168.2.175] is healthy

INFO[0093] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0094] Starting container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0094] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.175]

INFO[0094] Removing container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0094] [remove/rke-log-linker] Successfully removed container on host [192.168.2.175]

INFO[0097] [healthcheck] service [kube-scheduler] on host [192.168.2.177] is healthy

INFO[0097] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0097] Starting container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0098] [controlplane] Successfully started [rke-log-linker] container on host [192.168.2.177]

INFO[0098] Removing container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0098] [remove/rke-log-linker] Successfully removed container on host [192.168.2.177]

INFO[0098] [controlplane] Successfully started Controller Plane..

INFO[0098] [authz] Creating rke-job-deployer ServiceAccount

INFO[0098] [authz] rke-job-deployer ServiceAccount created successfully

INFO[0098] [authz] Creating system:node ClusterRoleBinding

INFO[0098] [authz] system:node ClusterRoleBinding created successfully

INFO[0098] [authz] Creating kube-apiserver proxy ClusterRole and ClusterRoleBinding

INFO[0098] [authz] kube-apiserver proxy ClusterRole and ClusterRoleBinding created successfully

INFO[0098] Successfully Deployed state file at [./cluster.rkestate]

INFO[0098] [state] Saving full cluster state to Kubernetes

INFO[0098] [state] Successfully Saved full cluster state to Kubernetes ConfigMap: cluster-state

INFO[0098] [worker] Building up Worker Plane..

INFO[0098] Checking if container [service-sidekick] is running on host [192.168.2.175], try #1

INFO[0098] Checking if container [service-sidekick] is running on host [192.168.2.176], try #1

INFO[0098] Checking if container [service-sidekick] is running on host [192.168.2.177], try #1

INFO[0098] [sidekick] Sidekick container already created on host [192.168.2.176]

INFO[0098] [sidekick] Sidekick container already created on host [192.168.2.175]

INFO[0098] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.176]

INFO[0098] [sidekick] Sidekick container already created on host [192.168.2.177]

INFO[0098] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.175]

INFO[0098] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.177]

INFO[0098] Starting container [kubelet] on host [192.168.2.176], try #1

INFO[0098] Starting container [kubelet] on host [192.168.2.175], try #1

INFO[0098] Starting container [kubelet] on host [192.168.2.177], try #1

INFO[0098] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0098] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0099] Starting container [nginx-proxy] on host [192.168.2.187], try #1

INFO[0099] [worker] Successfully started [kubelet] container on host [192.168.2.175]

INFO[0099] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.2.175]

INFO[0099] [worker] Successfully started [kubelet] container on host [192.168.2.177]

INFO[0099] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.2.177]

INFO[0099] [worker] Successfully started [kubelet] container on host [192.168.2.176]

INFO[0099] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.2.176]

INFO[0099] Starting container [nginx-proxy] on host [192.168.2.185], try #1

INFO[0099] [worker] Successfully started [nginx-proxy] container on host [192.168.2.187]

INFO[0099] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0099] [worker] Successfully started [nginx-proxy] container on host [192.168.2.185]

INFO[0099] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0100] Starting container [rke-log-linker] on host [192.168.2.185], try #1

INFO[0100] Starting container [rke-log-linker] on host [192.168.2.187], try #1

INFO[0100] [worker] Successfully started [rke-log-linker] container on host [192.168.2.185]

INFO[0100] Removing container [rke-log-linker] on host [192.168.2.185], try #1

INFO[0100] [worker] Successfully started [rke-log-linker] container on host [192.168.2.187]

INFO[0101] [remove/rke-log-linker] Successfully removed container on host [192.168.2.185]

INFO[0101] Checking if container [service-sidekick] is running on host [192.168.2.185], try #1

INFO[0101] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0101] Removing container [rke-log-linker] on host [192.168.2.187], try #1

INFO[0101] [remove/rke-log-linker] Successfully removed container on host [192.168.2.187]

INFO[0101] Checking if container [service-sidekick] is running on host [192.168.2.187], try #1

INFO[0101] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.185]

INFO[0101] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0101] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.187]

INFO[0101] Starting container [kubelet] on host [192.168.2.185], try #1

INFO[0101] Starting container [kubelet] on host [192.168.2.187], try #1

INFO[0102] [worker] Successfully started [kubelet] container on host [192.168.2.185]

INFO[0102] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.2.185]

INFO[0102] [worker] Successfully started [kubelet] container on host [192.168.2.187]

INFO[0102] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.2.187]

INFO[0119] [healthcheck] service [kubelet] on host [192.168.2.175] is healthy

INFO[0119] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0119] [healthcheck] service [kubelet] on host [192.168.2.176] is healthy

INFO[0119] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0119] [healthcheck] service [kubelet] on host [192.168.2.177] is healthy

INFO[0119] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0119] Starting container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0119] Starting container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0120] Starting container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0120] [worker] Successfully started [rke-log-linker] container on host [192.168.2.175]

INFO[0120] Removing container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0120] [worker] Successfully started [rke-log-linker] container on host [192.168.2.176]

INFO[0120] Removing container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0120] [remove/rke-log-linker] Successfully removed container on host [192.168.2.175]

INFO[0120] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.175]

INFO[0120] [remove/rke-log-linker] Successfully removed container on host [192.168.2.176]

INFO[0120] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.176]

INFO[0120] [worker] Successfully started [rke-log-linker] container on host [192.168.2.177]

INFO[0120] Starting container [kube-proxy] on host [192.168.2.175], try #1

INFO[0120] Removing container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0120] Starting container [kube-proxy] on host [192.168.2.176], try #1

INFO[0121] [remove/rke-log-linker] Successfully removed container on host [192.168.2.177]

INFO[0121] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.177]

INFO[0121] [worker] Successfully started [kube-proxy] container on host [192.168.2.175]

INFO[0121] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.2.175]

INFO[0121] [worker] Successfully started [kube-proxy] container on host [192.168.2.176]

INFO[0121] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.2.176]

INFO[0121] Starting container [kube-proxy] on host [192.168.2.177], try #1

INFO[0121] [worker] Successfully started [kube-proxy] container on host [192.168.2.177]

INFO[0121] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.2.177]

INFO[0123] [healthcheck] service [kubelet] on host [192.168.2.185] is healthy

INFO[0123] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0123] [healthcheck] service [kubelet] on host [192.168.2.187] is healthy

INFO[0123] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0123] Starting container [rke-log-linker] on host [192.168.2.185], try #1

INFO[0123] Starting container [rke-log-linker] on host [192.168.2.187], try #1

INFO[0124] [worker] Successfully started [rke-log-linker] container on host [192.168.2.185]

INFO[0124] Removing container [rke-log-linker] on host [192.168.2.185], try #1

INFO[0124] [worker] Successfully started [rke-log-linker] container on host [192.168.2.187]

INFO[0124] Removing container [rke-log-linker] on host [192.168.2.187], try #1

INFO[0124] [remove/rke-log-linker] Successfully removed container on host [192.168.2.185]

INFO[0124] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.185]

INFO[0124] Starting container [kube-proxy] on host [192.168.2.185], try #1

INFO[0124] [remove/rke-log-linker] Successfully removed container on host [192.168.2.187]

INFO[0124] Image [rancher/hyperkube:v1.17.3-rancher1] exists on host [192.168.2.187]

INFO[0124] Starting container [kube-proxy] on host [192.168.2.187], try #1

INFO[0125] [worker] Successfully started [kube-proxy] container on host [192.168.2.187]

INFO[0125] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.2.187]

INFO[0125] [worker] Successfully started [kube-proxy] container on host [192.168.2.185]

INFO[0125] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.2.185]

INFO[0126] [healthcheck] service [kube-proxy] on host [192.168.2.175] is healthy

INFO[0126] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0126] [healthcheck] service [kube-proxy] on host [192.168.2.176] is healthy

INFO[0126] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0126] Starting container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0126] Starting container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0126] [worker] Successfully started [rke-log-linker] container on host [192.168.2.176]

INFO[0126] Removing container [rke-log-linker] on host [192.168.2.176], try #1

INFO[0126] [worker] Successfully started [rke-log-linker] container on host [192.168.2.175]

INFO[0126] Removing container [rke-log-linker] on host [192.168.2.175], try #1

INFO[0127] [healthcheck] service [kube-proxy] on host [192.168.2.177] is healthy

INFO[0127] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0127] [remove/rke-log-linker] Successfully removed container on host [192.168.2.176]

INFO[0127] [remove/rke-log-linker] Successfully removed container on host [192.168.2.175]

INFO[0127] Starting container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0127] [worker] Successfully started [rke-log-linker] container on host [192.168.2.177]

INFO[0127] Removing container [rke-log-linker] on host [192.168.2.177], try #1

INFO[0128] [remove/rke-log-linker] Successfully removed container on host [192.168.2.177]

INFO[0130] [healthcheck] service [kube-proxy] on host [192.168.2.187] is healthy

INFO[0130] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0130] [healthcheck] service [kube-proxy] on host [192.168.2.185] is healthy

INFO[0130] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0130] Starting container [rke-log-linker] on host [192.168.2.187], try #1

INFO[0130] Starting container [rke-log-linker] on host [192.168.2.185], try #1

INFO[0131] [worker] Successfully started [rke-log-linker] container on host [192.168.2.187]

INFO[0131] Removing container [rke-log-linker] on host [192.168.2.187], try #1

INFO[0131] [worker] Successfully started [rke-log-linker] container on host [192.168.2.185]

INFO[0131] Removing container [rke-log-linker] on host [192.168.2.185], try #1

INFO[0131] [remove/rke-log-linker] Successfully removed container on host [192.168.2.187]

INFO[0131] [remove/rke-log-linker] Successfully removed container on host [192.168.2.185]

INFO[0131] [worker] Successfully started Worker Plane..

INFO[0131] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.187]

INFO[0131] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.176]

INFO[0131] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.175]

INFO[0131] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.185]

INFO[0131] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.2.177]

INFO[0132] Starting container [rke-log-cleaner] on host [192.168.2.176], try #1

INFO[0132] Starting container [rke-log-cleaner] on host [192.168.2.175], try #1

INFO[0132] Starting container [rke-log-cleaner] on host [192.168.2.187], try #1

INFO[0132] Starting container [rke-log-cleaner] on host [192.168.2.185], try #1

INFO[0132] Starting container [rke-log-cleaner] on host [192.168.2.177], try #1

INFO[0132] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.2.176]

INFO[0132] Removing container [rke-log-cleaner] on host [192.168.2.176], try #1

INFO[0132] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.2.175]

INFO[0132] Removing container [rke-log-cleaner] on host [192.168.2.175], try #1

INFO[0132] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.2.187]

INFO[0132] Removing container [rke-log-cleaner] on host [192.168.2.187], try #1

INFO[0132] [remove/rke-log-cleaner] Successfully removed container on host [192.168.2.176]

INFO[0132] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.2.185]

INFO[0132] Removing container [rke-log-cleaner] on host [192.168.2.185], try #1

INFO[0132] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.2.177]

INFO[0132] [remove/rke-log-cleaner] Successfully removed container on host [192.168.2.175]

INFO[0132] Removing container [rke-log-cleaner] on host [192.168.2.177], try #1

INFO[0132] [remove/rke-log-cleaner] Successfully removed container on host [192.168.2.187]

INFO[0133] [remove/rke-log-cleaner] Successfully removed container on host [192.168.2.185]

INFO[0133] [remove/rke-log-cleaner] Successfully removed container on host [192.168.2.177]

INFO[0133] [sync] Syncing nodes Labels and Taints

INFO[0133] [sync] Successfully synced nodes Labels and Taints

INFO[0133] [network] Setting up network plugin: flannel

INFO[0133] [addons] Saving ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0133] [addons] Successfully saved ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0133] [addons] Executing deploy job rke-network-plugin

INFO[0138] [addons] Setting up coredns

INFO[0138] [addons] Saving ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0138] [addons] Successfully saved ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0138] [addons] Executing deploy job rke-coredns-addon

INFO[0144] [addons] CoreDNS deployed successfully..

INFO[0144] [dns] DNS provider coredns deployed successfully

INFO[0144] [addons] Setting up Metrics Server

INFO[0144] [addons] Saving ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0144] [addons] Successfully saved ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0144] [addons] Executing deploy job rke-metrics-addon

INFO[0149] [addons] Metrics Server deployed successfully

INFO[0149] [ingress] Setting up nginx ingress controller

INFO[0149] [addons] Saving ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0149] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0149] [addons] Executing deploy job rke-ingress-controller

INFO[0159] [ingress] ingress controller nginx deployed successfully

INFO[0159] [addons] Setting up user addons

INFO[0159] [addons] no user addons defined

INFO[0159] Finished building Kubernetes cluster successfully

# Deployment succeeded. If errors occur partway through, just re-run rke up a few times.

kubeconfig=/mnt/g/work/rke/kube_config_cluster.yml
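You can re-run rke up by hand, or you can script the retries. The sketch below makes some assumptions. `retry` is a hypothetical helper and is not part of rke. The attempt count and delay are arbitrary. The `rke up --config cluster.yml` call in the comment assumes the config file is named cluster.yml, which matches the generated kube_config_cluster.yml above.

```bash
# retry: hypothetical helper (not part of rke).
# Runs "$@" up to MAX_TRIES times, sleeping DELAY seconds between attempts,
# and returns the exit status of the last attempt.
#   usage: retry MAX_TRIES DELAY command [args...]
retry() {
  local max_tries=$1 delay=$2
  shift 2
  local attempt=1 status=0
  while [ "$attempt" -le "$max_tries" ]; do
    "$@" && return 0
    status=$?   # exit status of the failed command
    echo "attempt $attempt/$max_tries failed (exit $status): $*" >&2
    [ "$attempt" -lt "$max_tries" ] && sleep "$delay"
    attempt=$((attempt + 1))
  done
  return "$status"
}

# Example (not executed here): run rke up up to 3 times, 30 seconds apart.
# retry 3 30 rke up --config cluster.yml
```

Retrying helps only with transient failures such as image pulls or slow health checks. If the same error repeats on every attempt, read the log instead of retrying.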

rke@Qist:/mnt/g/work/rke$ kubectl --kubeconfig=$kubeconfig get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-1               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}

rke@Qist:/mnt/g/work/rke$ kubectl --kubeconfig=$kubeconfig get nodes
NAME            STATUS   ROLES                      AGE     VERSION
192.168.2.175   Ready    controlplane,etcd,worker   5m21s   v1.17.3
192.168.2.176   Ready    controlplane,etcd,worker   5m22s   v1.17.3
192.168.2.177   Ready    controlplane,etcd,worker   5m21s   v1.17.3
192.168.2.185   Ready    worker                     5m15s   v1.17.3
192.168.2.187   Ready    worker                     5m16s   v1.17.3
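To check node status without reading the table by eye, you can filter the output of `kubectl get nodes --no-headers`. This minimal sketch assumes the default column layout (NAME STATUS ROLES AGE VERSION). `all_nodes_ready` is a hypothetical helper name. A cordoned node (`Ready,SchedulingDisabled`) is deliberately counted as not ready.

```bash
# all_nodes_ready: hypothetical helper.
# Reads `kubectl get nodes --no-headers` output on stdin and succeeds only if
# there is at least one node and every node's STATUS column (field 2) is
# exactly "Ready". Offending node names are printed to stderr.
all_nodes_ready() {
  awk '
    { total++
      if ($2 != "Ready") { bad++; print "not ready: " $1 > "/dev/stderr" } }
    END { exit (total == 0 || bad > 0) }
  '
}

# Example (not executed here):
# kubectl --kubeconfig=$kubeconfig get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
```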

rke@Qist:/mnt/g/work/rke$ kubectl --kubeconfig=$kubeconfig get pods -A
NAMESPACE       NAME                                      READY   STATUS      RESTARTS   AGE
ingress-nginx   default-http-backend-67cf578fc4-jnkvj     1/1     Running     0          5m11s
ingress-nginx   nginx-ingress-controller-8b76f            1/1     Running     0          5m11s
ingress-nginx   nginx-ingress-controller-cpgr8            1/1     Running     0          5m11s
ingress-nginx   nginx-ingress-controller-f2r76            1/1     Running     0          5m11s
ingress-nginx   nginx-ingress-controller-hhpgx            1/1     Running     0          5m11s
ingress-nginx   nginx-ingress-controller-vqjq6            1/1     Running     0          5m11s
kube-system     coredns-7c5566588d-d9nh8                  1/1     Running     0          5m20s
kube-system     coredns-7c5566588d-gszkx                  1/1     Running     0          5m11s
kube-system     coredns-autoscaler-65bfc8d47d-j8lzq       1/1     Running     0          5m19s
kube-system     kube-flannel-lm77c                        2/2     Running     0          5m26s
kube-system     kube-flannel-pqvq2                        2/2     Running     0          5m26s
kube-system     kube-flannel-qchmm                        2/2     Running     0          5m26s
kube-system     kube-flannel-sdrdq                        2/2     Running     0          5m26s
kube-system     kube-flannel-tcth9                        2/2     Running     0          5m26s
kube-system     metrics-server-6b55c64f86-cqd74           1/1     Running     0          5m16s
kube-system     rke-coredns-addon-deploy-job-gm7hf        0/1     Completed   0          5m23s
kube-system     rke-ingress-controller-deploy-job-dk4dl   0/1     Completed   0          5m13s
kube-system     rke-metrics-addon-deploy-job-2kttt        0/1     Completed   0          5m18s
kube-system     rke-network-plugin-deploy-job-rtzhn       0/1     Completed   0          5m29s
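You can also screen the pod list automatically. This minimal sketch assumes the default `kubectl get pods -A --no-headers` columns (NAMESPACE NAME READY STATUS RESTARTS AGE). `unhealthy_pods` is a hypothetical helper. Completed pods, such as the rke-*-deploy-job pods above, are treated as healthy. A Running pod counts as healthy only if all of its containers are ready.

```bash
# unhealthy_pods: hypothetical helper.
# Reads `kubectl get pods -A --no-headers` output on stdin and prints
# "namespace/name STATUS READY" for every pod that is neither Completed nor
# Running with all containers ready. Returns non-zero if any pod is printed.
unhealthy_pods() {
  awk '
    {
      split($3, r, "/")            # READY column, e.g. "1/1" -> r[1]=1, r[2]=1
      if ($4 == "Completed") next  # finished jobs are fine
      if ($4 != "Running" || r[1] != r[2]) { print $1 "/" $2, $4, $3; bad++ }
    }
    END { exit (bad > 0) }
  '
}

# Example (not executed here):
# kubectl --kubeconfig=$kubeconfig get pods -A --no-headers | unhealthy_pods || echo "some pods need attention"
```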

# This confirms that the K8S cluster was deployed successfully.

# Add a Rancher Helm repository (choose one of the two; I chose latest here)

helm repo add rancher-latest https://releases.rancher.com/server-charts/latest

helm repo add rancher-stable https://releases.rancher.com/server-charts/stable

# Add the latest repository

rke@Qist:/mnt/g/work/rke$ helm repo add rancher-latest https://releases.rancher.com/server-charts/latest

"rancher-latest" has been added to your repositories

# Create a DNS patch file. Without this patch you may hit problems such as the Helm repository being unreachable or cattle-cluster-agent failing to start.

vim dnspath.yaml

spec:
  template:
    spec:
      dnsPolicy: ClusterFirst
      dnsConfig:
        options:
          - name: single-request-reopen
      hostAliases:
        - hostnames:
            - rke.tycng.com
          ip: 192.168.2.175

# rke.tycng.com is the domain name used to access the Rancher cluster

# Prepare a certificate for rke.tycng.com; a free certificate will do
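You can also generate the patch file above with a here-document instead of editing it in vim, which makes the domain and IP easy to change. This is a sketch: `write_dns_patch` is a hypothetical helper. The YAML it writes is the dnspath.yaml content above with the hostname and IP turned into parameters.

```bash
# write_dns_patch: hypothetical helper that writes the DNS patch file.
#   $1 = output file, $2 = Rancher hostname, $3 = IP the hostname maps to
write_dns_patch() {
  local out=$1 host=$2 ip=$3
  # The here-document keeps its indentation, which YAML depends on.
  cat > "$out" <<EOF
spec:
  template:
    spec:
      dnsPolicy: ClusterFirst
      dnsConfig:
        options:
          - name: single-request-reopen
      hostAliases:
        - hostnames:
            - ${host}
          ip: ${ip}
EOF
}

# Example (not executed here):
# write_dns_patch dnspath.yaml rke.tycng.com 192.168.2.175
```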

# Rename the certificate files as follows

# certificate file -> tls.crt

# private key file -> tls.key
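You can script the renaming too. This minimal sketch assumes the issuer delivered a certificate file and a separate private-key file under some other name; the site.pem/site.key names in the example are placeholders. `prepare_tls` and `tls_pair_matches` are hypothetical helpers. The optional check compares the public key embedded in the certificate with the one derived from the private key, using the standard `openssl x509 -pubkey` and `openssl pkey -pubout` commands. This catches a mismatched pair before it is uploaded as the tls-rancher-ingress secret.

```bash
# prepare_tls: hypothetical helper that copies an issued certificate and key
# to the names used by the secret command below (tls.crt, tls.key).
#   $1 = certificate file, $2 = private key file, $3 = destination dir (default .)
prepare_tls() {
  local cert=$1 key=$2 dest=${3:-.}
  [ -s "$cert" ] || { echo "certificate missing or empty: $cert" >&2; return 1; }
  [ -s "$key" ]  || { echo "key missing or empty: $key" >&2; return 1; }
  cp "$cert" "$dest/tls.crt"
  cp "$key" "$dest/tls.key"
  chmod 600 "$dest/tls.key"   # keep the private key readable by the owner only
}

# tls_pair_matches: hypothetical helper (requires openssl).
# Succeeds if the certificate's public key equals the key pair's public key.
tls_pair_matches() {
  local crt=$1 key=$2
  [ "$(openssl x509 -in "$crt" -noout -pubkey)" = "$(openssl pkey -in "$key" -pubout)" ]
}

# Example (not executed here):
# prepare_tls site.pem site.key . && tls_pair_matches tls.crt tls.key && echo "certificate and key match"
```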

# Preparation complete

# Deploy Rancher

# Create the cattle-system namespace

kubectl --kubeconfig=$kubeconfig create namespace cattle-system

# Upload the certificate and key for rke.tycng.com as a TLS secret

kubectl --kubeconfig=$kubeconfig -n cattle-system create secret tls tls-rancher-ingress --cert=./tls.crt --key=./tls.key

# Install the Rancher chart with Helm

helm --kubeconfig=$kubeconfig install rancher rancher-latest/rancher --namespace cattle-system --set hostname=rke.tycng.com --set ingress.tls.source=secret

rke@Qist:/mnt/g/work/rke$ helm --kubeconfig=$kubeconfig install rancher rancher-latest/rancher \
  --namespace cattle-system \
  --set hostname=rke.tycng.com \
  --set ingress.tls.source=secret

NAME: rancher

LAST DEPLOYED: Wed Mar 18 11:35:11 2020

NAMESPACE: cattle-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Rancher Server has been installed.

NOTE: Rancher may take several minutes to fully initialize. Please standby while Certificates are being issued and Ingress comes up.

Check out our docs at https://rancher.com/docs/rancher/v2.x/en/

Browse to https://rke.tycng.com

Happy Containering!

# Check the deployment status

rke@Qist:/mnt/g/work/rke$ kubectl --kubeconfig=$kubeconfig get pod -n cattle-system
NAME                       READY   STATUS    RESTARTS   AGE
rancher-7d769c47d6-gg796   1/1     Running   2          54s
rancher-7d769c47d6-lbqxp   1/1     Running   1          54s
rancher-7d769c47d6-qz2tg   1/1     Running   1          54s

# The deployment is healthy

# Configure DNS resolution for rke.tycng.com

# Open https://rke.tycng.com in a browser
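If no DNS server is available, for example during testing, a hosts-file entry on the client machine can stand in for the DNS record. This is a minimal sketch: `add_hosts_entry` is a hypothetical helper. The address 192.168.2.175 comes from the hostAliases entry in the patch file above. Pointing the name at a single node is not highly available. A real DNS record or load balancer covering the ingress nodes is the more robust choice.

```bash
# add_hosts_entry: hypothetical helper that idempotently maps a hostname to an
# IP in a hosts file.
#   $1 = IP, $2 = hostname, $3 = hosts file (default /etc/hosts; needs root)
add_hosts_entry() {
  local ip=$1 host=$2 file=${3:-/etc/hosts}
  # Do nothing if a non-comment line already lists this hostname.
  if awk -v h="$host" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                       END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s\t%s\n' "$ip" "$host" >> "$file"
}

# Example (not executed here):
# sudo sh -c '. ./hosts.sh; add_hosts_entry 192.168.2.175 rke.tycng.com'
```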

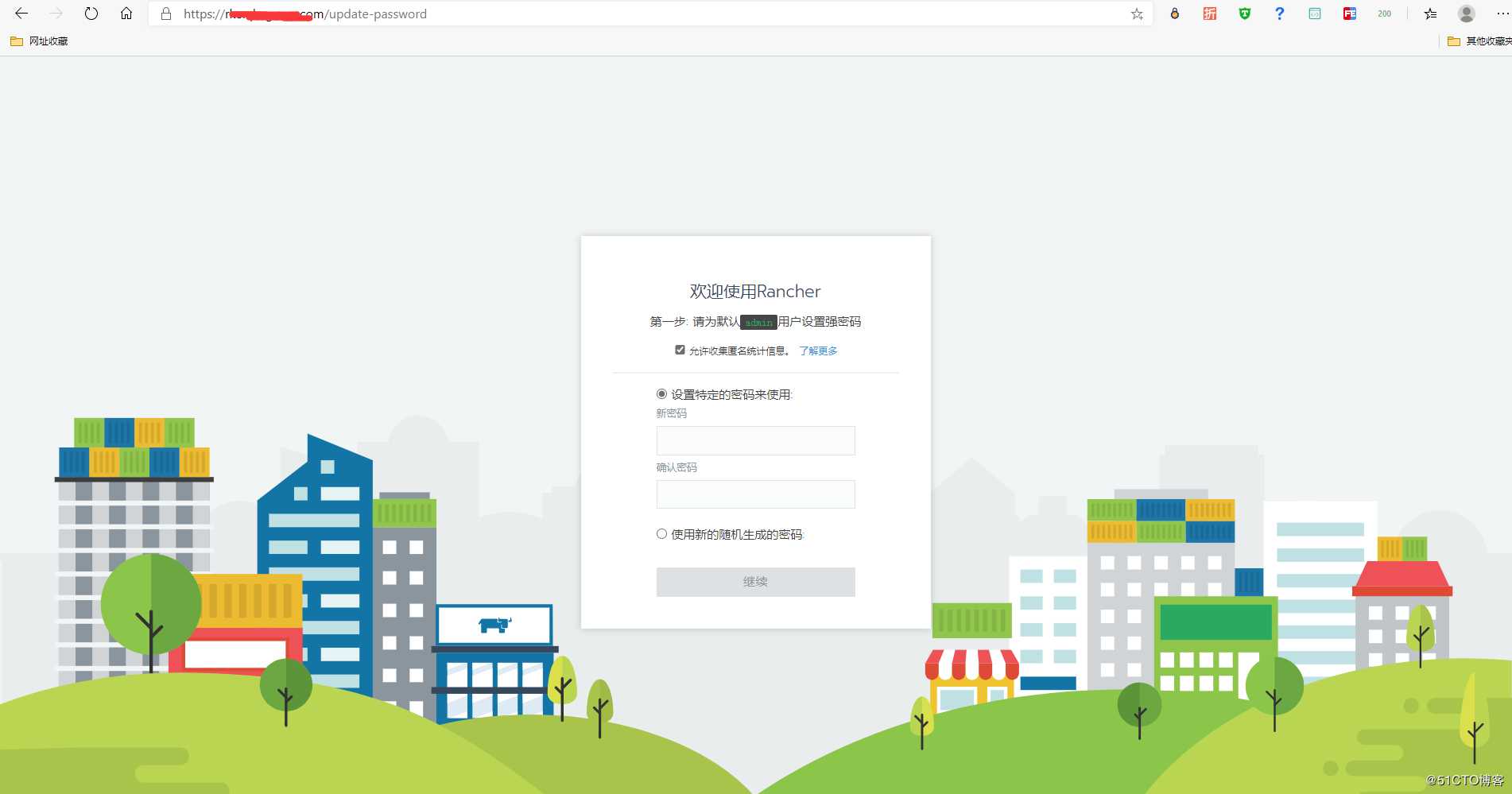

Set the admin password

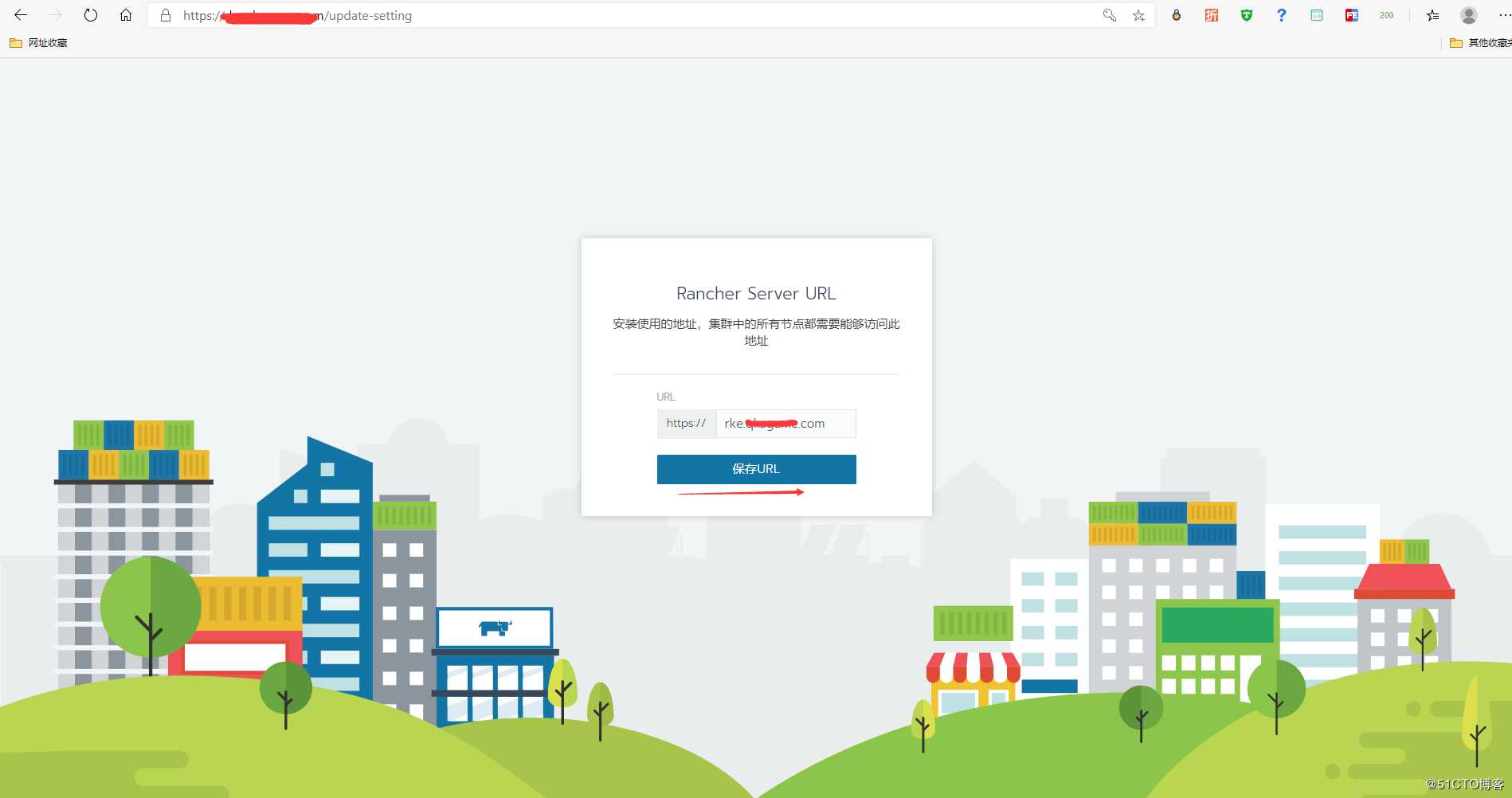

Save the Rancher server URL

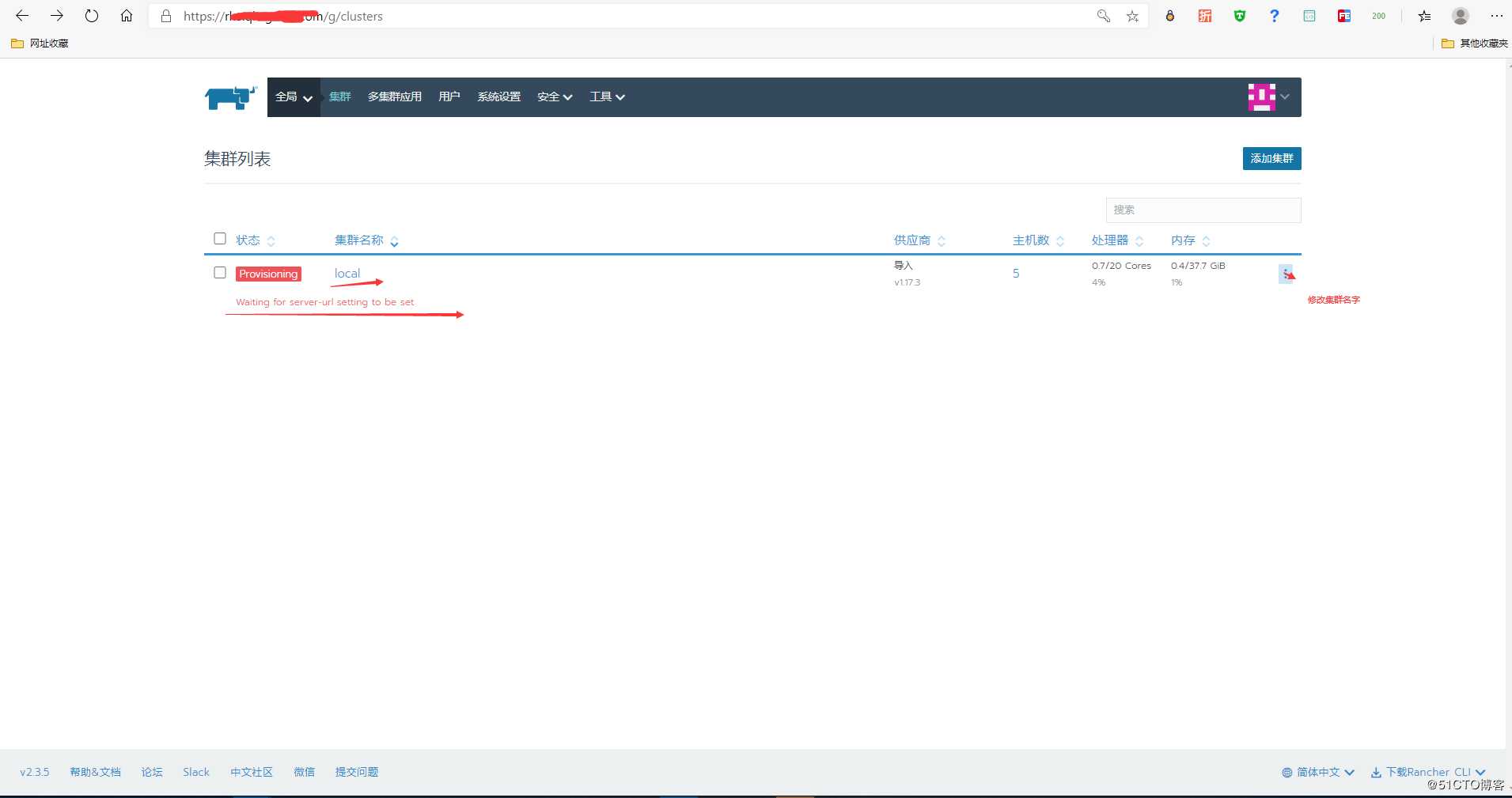

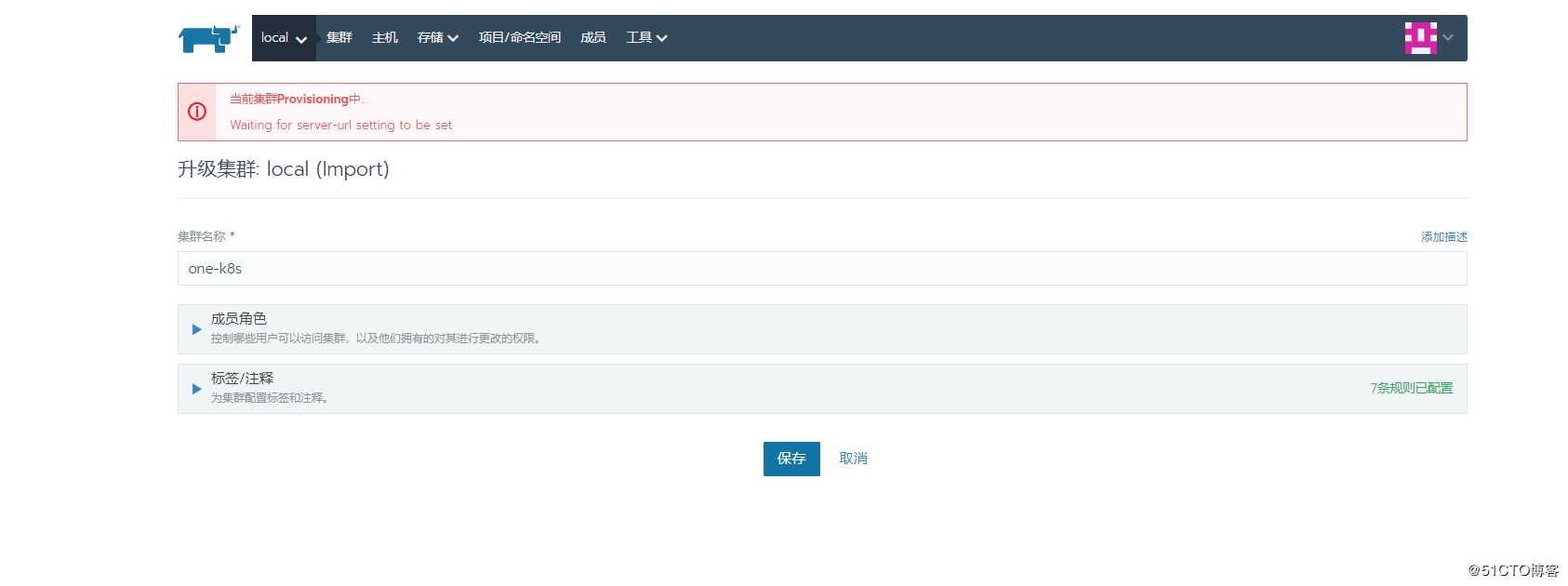

You can rename the cluster here

# If cattle-cluster-agent does not start properly, apply the patch

kubectl --kubeconfig=$kubeconfig -n cattle-system patch deployments cattle-cluster-agent --patch "$(cat dnspath.yaml)"

# If this URL error appears, patch the rancher deployment as well with path.yaml:

spec:
  template:
    spec:
      dnsPolicy: ClusterFirst
      dnsConfig:
        options:
          - name: single-request-reopen

kubectl --kubeconfig=$kubeconfig -n cattle-system patch deployments rancher --patch "$(cat path.yaml)"

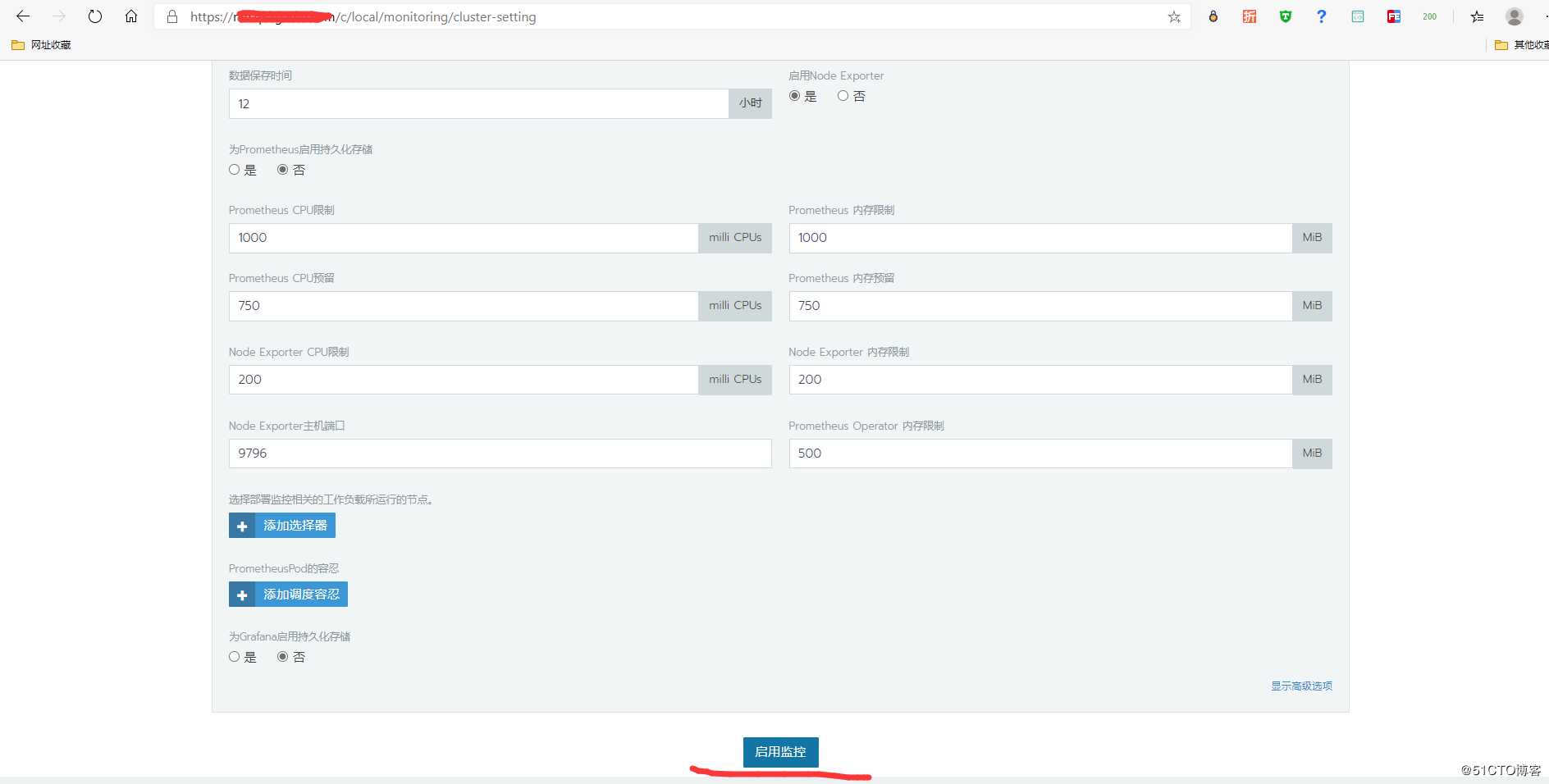

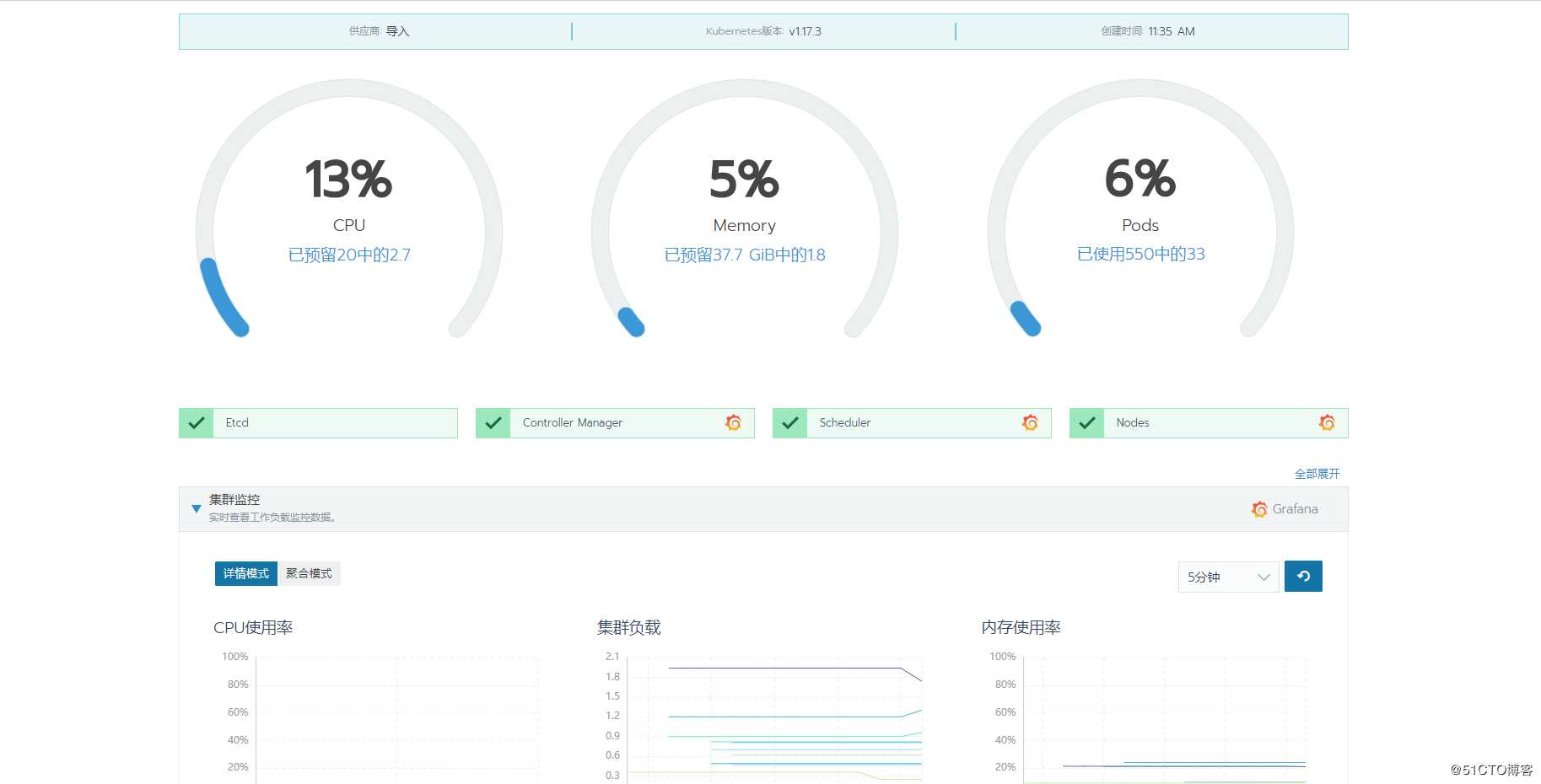

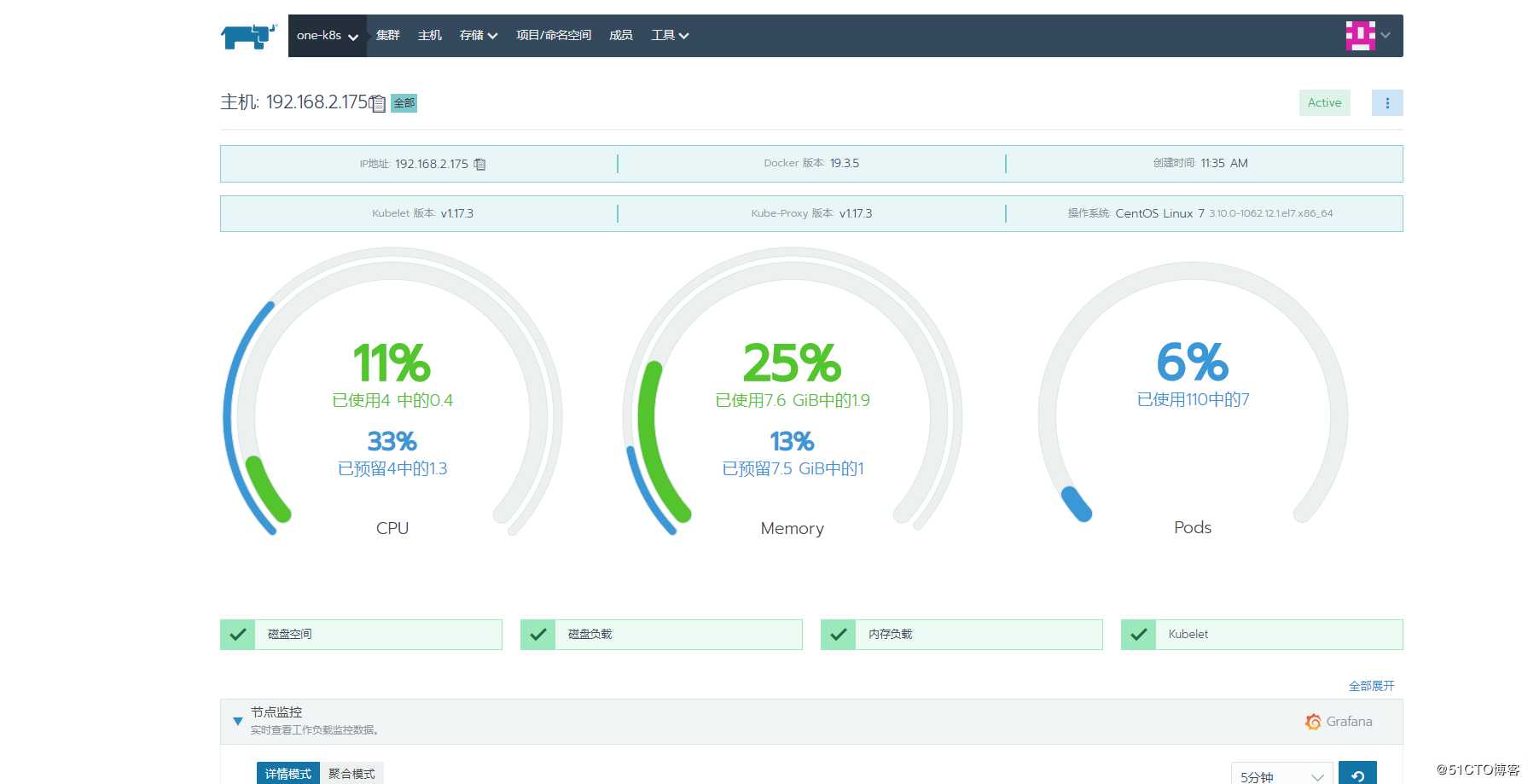

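# Deploy monitoring

Deployment complete

# After adding or removing worker nodes in the config file, run rke up --update-only. Any changes other than worker node changes will be ignored.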

rke up --update-only

# Delete the cluster

kubectl --kubeconfig=$kubeconfig delete all --all -A

rke remove

RKE high-availability deployment of a K8S cluster and a highly available Rancher server


Original article: https://blog.51cto.com/juestnow/2479615