
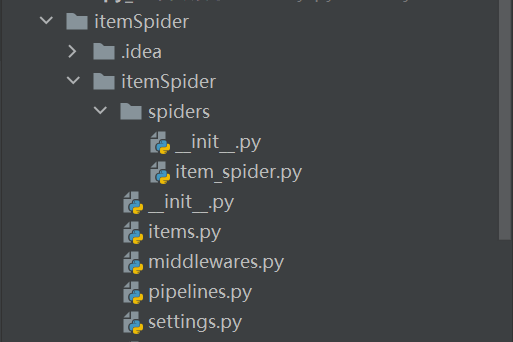

First, create the itemSpider spider.

In the spiders directory, create item_spider.py with the following content:

""" 语言版本: python:3.6.1 scrapy:1.3.3 """ import scrapy import re class itemSpider(scrapy.Spider): name = ‘yumi‘ start_urls = [‘http://3000.ym788.vip/‘] def parse(self, response): urls1 = response.xpath("//ul[@class=‘nav navbar-nav‘]//@href").extract() #mingcheng = response.xpath("//div[@class=‘width1200‘]//a//text()").extract() e = [] urls2 = [‘http://3000.ym788.vip‘] for i in range(len(urls1)): c1 = urls2[0] + urls1[i] e.append(c1) for urls3 in e: yield scrapy.Request(urls3, callback=self.fenlei) def fenlei(self, response): urls = response.xpath("//div[@class=‘name left‘]//@href").extract() c = [] url1 = [‘http://3000.ym788.vip‘] for i in range(len(urls)): c1 = url1[0] + urls[i] c.append(c1) for url3 in c: yield scrapy.Request(url3, callback=self.get_title) next_page1 = response.xpath(‘//a[@target="_self"][text()="下一页"]//@href‘).extract() d = [] for i in range(len(next_page1)): d1 = url1[0] + next_page1[i] d.append(d1) for g in d: if d is not None: g = response.urljoin(g) yield scrapy.Request(g, callback=self.fenlei) def get_title(self, response): # item = IPpronsItem() #mingyan = response.xpath("/html/body/b/b/b/div[4]") IP = response.xpath("//div[@class=‘col-xs-9 movie-info padding-right-5‘]//h1").extract_first() port = response.xpath(‘//a[@is_source="no"]//text()‘).extract_first() mingcheng = response.xpath(‘/html/body/div[2]/div/div/div[1]/div[1]/div[2]/table/tbody/tr[3]/td[2]‘).extract_first() #port = re.findall(‘[a-zA-Z]+://[^\s]*[.com|.cn]*[.m3u8]‘, port) IP =re.findall(‘[\u4e00-\u9fa5]+‘, IP) mingcheng = re.findall(‘[\u4e00-\u9fa5]+‘, mingcheng) IP = ‘:‘.join(IP) mingcheng = ‘,‘.join(mingcheng) fileName = ‘%s.txt‘ % mingcheng # 爬取的内容存入文件 f = open(fileName, "a+", encoding=‘utf-8‘) # 追加写入文件 f.write(IP + ‘,‘) f.write(‘\n‘) f.write(port + ‘,‘) f.write(‘\n‘) f.close()

Add the following to settings.py:

    DOWNLOAD_DELAY = 0
    CONCURRENT_REQUESTS = 100
    CONCURRENT_REQUESTS_PER_DOMAIN = 100
    CONCURRENT_REQUESTS_PER_IP = 100
    COOKIES_ENABLED = False
    LOG_LEVEL = 'ERROR'

Finally, run the spider, for example with scrapy crawl yumi from the project directory.

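Scrapy: crawling the Yumi resource site (玉米资源网) category by category to fetch all of its resources. NSFW site, proceed with caution! The results can be viewed directly on a phone or computer.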


Original article: https://www.cnblogs.com/aotumandaren/p/13714058.html