标签:image ram one 自动补全 += tar lstm att lines

github地址:https://github.com/taishan1994/tensorflow-bilstm-crf

1、熟悉数据

msra数据集总共有三个文件:

train.txt:部分数据

当/o 希望工程/o 救助/o 的/o 百万/o 儿童/o 成长/o 起来/o ,/o 科教/o 兴/o 国/o 蔚然成风/o 时/o ,/o 今天/o 有/o 收藏/o 价值/o 的/o 书/o 你/o 没/o 买/o ,/o 明日/o 就/o 叫/o 你/o 悔不当初/o !/o 藏书/o 本来/o 就/o 是/o 所有/o 传统/o 收藏/o 门类/o 中/o 的/o 第一/o 大户/o ,/o 只是/o 我们/o 结束/o 温饱/o 的/o 时间/o 太/o 短/o 而已/o 。/o 因/o 有关/o 日/ns 寇/o 在/o 京/ns 掠夺/o 文物/o 详情/o ,/o 藏/o 界/o 较为/o 重视/o ,/o 也是/o 我们/o 收藏/o 北京/ns 史料/o 中/o 的/o 要件/o 之一/o 。/o

test.txt:部分数据

今天的演讲会是由哈佛大学费正清东亚研究中心主任傅高义主持的。

testright.txt:部分数据

今天的演讲会是由/o 哈佛大学费正清东亚研究中心/nt 主任/o 傅高义/nr 主持的。/o

2、数据预处理

代码:

#coding:utf-8 import os BASE_DIR = os.path.dirname(os.path.dirname(os.path.abspath(__file__))) #当前程序上上一级目录,这里为ner import sys sys.path.append(BASE_DIR) print(BASE_DIR) import codecs import re import pandas as pd import numpy as np from config.globalConfig import * #============================第一步:给每一个字打上标签=================================== def wordtag(): #用utf-8-sig编码的原因是文本保存时包含了BOM(Byte Order Mark,字节顺序标记,\ufeff出现在文本文件头部,为了去掉这个 input_data = codecs.open(os.path.join(PATH,‘data/msra/train.txt‘),‘r‘,‘utf-8-sig‘) #一般使用codes打开文件,不会出现编码问题 output_data = codecs.open(os.path.join(PATH,‘data/msra/wordtag.txt‘),‘w‘,‘utf-8‘) for line in input_data.readlines(): #line=re.split(‘[,。;!:?、‘’“”]/[o]‘.decode(‘utf-8‘),line.strip()) line = line.strip().split() if len(line)==0: #过滤掉‘‘ continue for word in line: #遍历列表中的每一个词 word = word.split(‘/‘) #[‘希望工程‘, ‘o‘],每个词是这样的了 if word[1]!=‘o‘: #如果不是o if len(word[0])==1: #如果是一个字,那么就直接给标签 output_data.write(word[0]+"/B_"+word[1]+" ") elif len(word[0])==2: #如果是两个字则拆分给标签 output_data.write(word[0][0]+"/B_"+word[1]+" ") output_data.write(word[0][1]+"/E_"+word[1]+" ") else: #如果两个字以上,也是拆开给标签 output_data.write(word[0][0]+"/B_"+word[1]+" ") for j in word[0][1:len(word[0])-1]: output_data.write(j+"/M_"+word[1]+" ") output_data.write(word[0][-1]+"/E_"+word[1]+" ") else: #如果表示前是o的话,将拆开为字并分别给标签/o for j in word[0]: output_data.write(j+"/o"+" ") output_data.write(‘\n‘) input_data.close() output_data.close() #============================第二步:构建二维字列表以及其对应的二维标签列表=================================== wordtag() datas = list() labels = list() linedata=list() linelabel=list() # 0表示补全的id tag2id = {‘‘ :0, ‘B_ns‘ :1, ‘B_nr‘ :2, ‘B_nt‘ :3, ‘M_nt‘ :4, ‘M_nr‘ :5, ‘M_ns‘ :6, ‘E_nt‘ :7, ‘E_nr‘ :8, ‘E_ns‘ :9, ‘o‘: 10} id2tag = {0:‘‘ , 1:‘B_ns‘ , 2:‘B_nr‘ , 3:‘B_nt‘ , 4:‘M_nt‘ , 5:‘M_nr‘ , 6:‘M_ns‘ , 7:‘E_nt‘ , 8:‘E_nr‘ , 9:‘E_ns‘ , 10: ‘o‘} input_data = codecs.open(os.path.join(PATH,‘data/msra/wordtag.txt‘),‘r‘,‘utf-8‘) for line in input_data.readlines(): #每一个line实际上是这样子的:当/o 希/o 望/o 工/o 程/o 救/o 助/o 注意最后多了个‘‘ line=re.split(‘[,。;!:?、‘’“”]/[o]‘.encode("utf-8").decode(‘utf-8‘),line.strip()) #a按指定字符划分字符串 for sen in line: # sen = sen.strip().split() #每一个字符串列表再按照弄空格划分,然后每个字是:当/o if len(sen)==0: #过滤掉为空的 continue linedata=[] linelabel=[] num_not_o=0 for word in sen: #遍历每一个字 word = word.split(‘/‘) #第一位是字,第二位是标签 linedata.append(word[0]) #加入到字列表 linelabel.append(tag2id[word[1]]) #加入到标签列表,要转换成对应的id映射 if word[1]!=‘o‘: num_not_o+=1 #记录标签不是o的字的个数 if num_not_o!=0: #如果num_not_o不为0,则表明当前linedata和linelabel有要素 datas.append(linedata) labels.append(linelabel) input_data.close() print(len(datas)) print(len(labels)) #============================第三步:构建word2id以及id2word=================================== #from compiler.ast import flatten (在python3中不推荐使用),我们自己定义一个 def flat2gen(alist): for item in alist: if isinstance(item, list): for subitem in item: yield subitem else: yield item all_words = list(flat2gen(datas)) #获得包含所有字的列表 sr_allwords = pd.Series(all_words) #转换为pandas中的Series sr_allwords = sr_allwords.value_counts() #统计每一个字出现的次数,相当于去重 set_words = sr_allwords.index #每一个字就是一个index,这里的字按照频数从高到低排序了 set_ids = range(1, len(set_words)+1) #给每一个字一个id映射,注意这里是从1开始,因为我们填充序列时使用0填充的,也就是id为0的已经被占用了 word2id = pd.Series(set_ids, index=set_words) #字 id id2word = pd.Series(set_words, index=set_ids) #id 字 word2id["unknow"] = len(word2id)+1 #加入一个unknow,如果没出现的字就用unknow的id代替 #============================第四步:定义序列最大长度,对序列进行处理================================== max_len = MAX_LEN #句子的最大长度 def X_padding(words): """把 words 转为 id 形式,并自动补全位 max_len 长度。""" ids = list(word2id[words]) if len(ids) >= max_len: # 长则弃掉 return ids[:max_len] ids.extend([0]*(max_len-len(ids))) # 短则补全 return ids def y_padding(ids): """把 tags 转为 id 形式, 并自动补全位 max_len 长度。""" if len(ids) >= max_len: # 长则弃掉 return ids[:max_len] ids.extend([0]*(max_len-len(ids))) # 短则补全 return ids def get_true_len(ids): return len(ids) df_data = pd.DataFrame({‘words‘: datas, ‘tags‘: labels}, index=range(len(datas))) #DataFrame,索引是序列的个数,列是字序列以及对应的标签序列 df_data[‘length‘] = df_data["tags"].apply(get_true_len) #获得每个序列真实的长度 df_data[‘length‘][df_data[‘length‘] > MAX_LEN] = MAX_LEN #这里需要注意,如果序列长度大于最大长度,则其真实长度必须设定为最大长度,否则后面会报错 df_data[‘x‘] = df_data[‘words‘].apply(X_padding) #超截短补,新定义一列 df_data[‘y‘] = df_data[‘tags‘].apply(y_padding) #超截短补,新定义一列 x = np.asarray(list(df_data[‘x‘].values)) #转为list y = np.asarray(list(df_data[‘y‘].values)) #转为list length = np.asarray(list(df_data[‘length‘].values)) #转为list #============================第四步:划分训练集、测试集、验证集================================== #from sklearn.model_selection import train_test_split #x_train,x_test, y_train, y_test = train_test_split(x, y, test_size=0.1, random_state=43) #random_state:避免每一个划分得不同 #x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train, test_size=0.2, random_state=43) #我们要加入每个序列的长度,因此sklearn自带的划分就没有用了,自己写一个 def split_data(data,label,seq_length,ratio): len_data = data.shape[0] #设置随机数种子,保证每次生成的结果都是一样的 np.random.seed(43) #permutation随机生成0-len(data)随机序列 shuffled_indices = np.random.permutation(len_data) #test_ratio为测试集所占的百分比 test_set_size = int(len_data * ratio) test_indices = shuffled_indices[:test_set_size] train_indices = shuffled_indices[test_set_size:] train_data = data[train_indices,:] train_label = label[train_indices] train_seq_length = seq_length[train_indices] test_data = data[test_indices,:] test_label = label[test_indices] test_seq_length = seq_length[test_indices] return train_data,test_data,train_label,test_label,train_seq_length,test_seq_length x_train,x_test, y_train, y_test, z_train, z_test = split_data(x, y, seq_length=length, ratio=0.1) #random_state:避免每一个划分得不同 x_train, x_valid, y_train, y_valid, z_train, z_valid = split_data(x_train, y_train, seq_length=z_train, ratio=0.2) #============================第五步:将所有需要的存为pickle文件备用================================== print(‘Finished creating the data generator.‘) import pickle import os with open(os.path.join(PATH,‘process_data/msra/MSRA.pkl‘), ‘wb‘) as outp: pickle.dump(word2id, outp) pickle.dump(id2word, outp) pickle.dump(tag2id, outp) pickle.dump(id2tag, outp) pickle.dump(x_train, outp) pickle.dump(y_train, outp) pickle.dump(z_train, outp) pickle.dump(x_test, outp) pickle.dump(y_test, outp) pickle.dump(z_test, outp) pickle.dump(x_valid, outp) pickle.dump(y_valid, outp) pickle.dump(z_valid, outp) print(‘** Finished saving the data.‘)

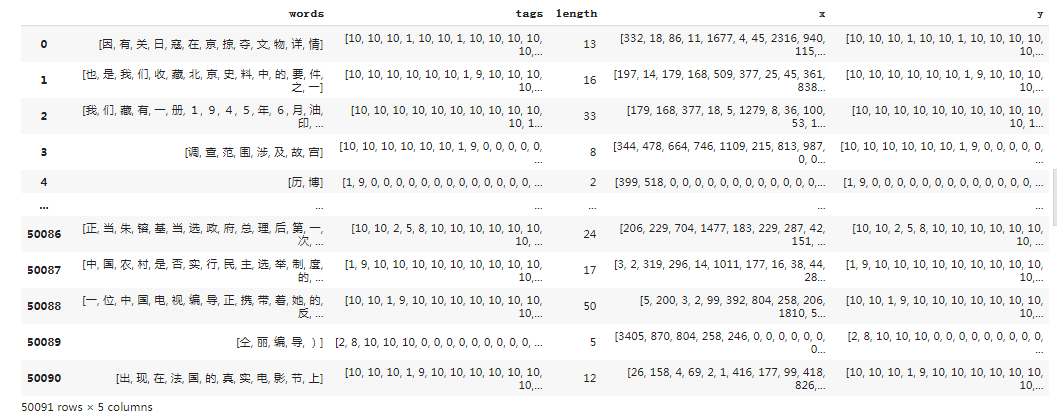

中间步骤的df_data如下:

需要注意的是上面的训练、验证、测试数据都是从训练数据中切分的,不在字表中的字会用‘unknow‘的id进行映射,对于长度不够的句子会用0进行填充到最大长度。

3、定义模型

# -*- coding: utf-8 -* import numpy as np import tensorflow as tf class BilstmCrfModel: def __init__(self,config,embedding_pretrained,dropout_keep=1): self.embedding_size = config.msraConfig.embedding_size self.embedding_dim = config.msraConfig.embedding_dim self.max_len = config.msraConfig.max_len self.tag_size = config.msraConfig.tag_size self.pretrained = config.msraConfig.pre_trained self.dropout_keep = dropout_keep self.embedding_pretrained = embedding_pretrained self.inputX = tf.placeholder(dtype=tf.int32, shape=[None,self.max_len], name="input_data") self.inputY = tf.placeholder(dtype=tf.int32,shape=[None,self.max_len], name="labels") self.seq_lens = tf.placeholder(dtype=tf.int32, shape=[None]) self._build_net() def _build_net(self): # word_embeddings:[4027,100] # 词嵌入层 with tf.name_scope("embedding"): # 利用预训练的词向量初始化词嵌入矩阵 if self.pretrained: embedding_w = tf.Variable(tf.cast(self.embedding_pretrained, dtype=tf.float32, name="word2vec"), name="embedding_w") else: embedding_w = tf.get_variable("embedding_w", shape=[self.embedding_size, self.embedding_dim], initializer=tf.contrib.layers.xavier_initializer()) # 利用词嵌入矩阵将输入的数据中的词转换成词向量,维度[batch_size, sequence_length, embedding_size] input_embedded = tf.nn.embedding_lookup(embedding_w, self.inputX) input_embedded = tf.nn.dropout(input_embedded,self.dropout_keep) with tf.name_scope("bilstm"): lstm_fw_cell = tf.nn.rnn_cell.LSTMCell(self.embedding_dim, forget_bias=1.0, state_is_tuple=True) lstm_bw_cell = tf.nn.rnn_cell.LSTMCell(self.embedding_dim, forget_bias=1.0, state_is_tuple=True) (output_fw, output_bw), states = tf.nn.bidirectional_dynamic_rnn(lstm_fw_cell, lstm_bw_cell, input_embedded, dtype=tf.float32, time_major=False, scope=None) bilstm_out = tf.concat([output_fw, output_bw], axis=2) # Fully connected layer. with tf.name_scope("output"): W = tf.get_variable( "output_w", shape=[2 * self.embedding_dim, self.tag_size], initializer=tf.contrib.layers.xavier_initializer()) b = tf.Variable(tf.constant(0.1, shape=[self.max_len, self.tag_size]), name="output_b") self.bilstm_out = tf.tanh(tf.matmul(bilstm_out, W) + b) with tf.name_scope("crf"): # Linear-CRF. log_likelihood, self.transition_params = tf.contrib.crf.crf_log_likelihood(self.bilstm_out, self.inputY, self.seq_lens) self.loss = tf.reduce_mean(-log_likelihood) self.viterbi_sequence, viterbi_score = tf.contrib.crf.crf_decode(self.bilstm_out, self.transition_params, self.seq_lens)

4、定义主函数

from config.globalConfig import * from config.msraConfig import Config from dataset.msraDataset import MsraDataset from utils.get_batch import BatchGenerator from models.bilstm_crf import BilstmCrfModel import tensorflow as tf import os import numpy as np from utils.tmp import find_all_tag,get_labels,get_multi_metric,mean,get_binary_metric labels_list = [‘ns‘,‘nt‘,‘nr‘] def train(config,model,save_path,trainBatchGen,valBatchGen): globalStep = tf.Variable(0, name="globalStep", trainable=False) save_path = os.path.join(save_path,"best_validation") saver = tf.train.Saver() with tf.Session() as sess: # 定义trainOp # 定义优化函数,传入学习速率参数 optimizer = tf.train.AdamOptimizer(config.trainConfig.learning_rate) # 计算梯度,得到梯度和变量 gradsAndVars = optimizer.compute_gradients(model.loss) # 将梯度应用到变量下,生成训练器 trainOp = optimizer.apply_gradients(gradsAndVars, global_step=globalStep) sess.run(tf.global_variables_initializer()) best_f_beta_val = 0.0 #最佳验证集的f1值 for epoch in range(1,config.trainConfig.epoch+1): for trainX_batch,trainY_batch,train_seqlen in trainBatchGen.next_batch(config.trainConfig.batch_size): feed_dict = { model.inputX : trainX_batch, #[batch,max_len] model.inputY : trainY_batch, #[batch,max_len] model.seq_lens : train_seqlen, #[batch] } _, loss, pre = sess.run([trainOp,model.loss,model.viterbi_sequence],feed_dict) currentStep = tf.train.global_step(sess, globalStep) true_idx2label = [get_labels(label,idx2label,seq_len) for label,seq_len in zip(trainY_batch,train_seqlen)] pre_idx2label = [get_labels(label,idx2label,seq_len) for label,seq_len in zip(pre,train_seqlen)] precision,recall,f1 = get_multi_metric(true_idx2label,pre_idx2label,train_seqlen,labels_list) if currentStep % 100 == 0: print("[train] step:{} loss:{:.4f} precision:{:.4f} recall:{:.4f} f1:{:.4f}".format(currentStep,loss,precision,recall,f1)) if currentStep % 100 == 0: #要计算所有验证样本的 losses = [] f_betas = [] precisions = [] recalls = [] for valX_batch,valY_batch,val_seqlen in valBatchGen.next_batch(config.trainConfig.batch_size): feed_dict = { model.inputX : valX_batch, #[batch,max_len] model.inputY : valY_batch, #[batch,max_len] model.seq_lens : val_seqlen, #[batch] } val_loss, val_pre = sess.run([model.loss,model.viterbi_sequence],feed_dict) val_true_idx2label = [get_labels(label,idx2label,seq_len) for label,seq_len in zip(valY_batch,val_seqlen)] val_pre_idx2label = [get_labels(label,idx2label,seq_len) for label,seq_len in zip(val_pre,val_seqlen)] val_precision,val_recall,val_f1 = get_multi_metric(val_true_idx2label,val_pre_idx2label,val_seqlen,labels_list) losses.append(val_loss) f_betas.append(val_f1) precisions.append(val_precision) recalls.append(val_recall) if mean(f_betas) > best_f_beta_val: # 保存最好结果 best_f_beta_val = mean(f_betas) last_improved = currentStep saver.save(sess=sess, save_path=save_path) improved_str = ‘*‘ else: improved_str = ‘‘ print("[val] loss:{:.4f} precision:{:.4f} recall:{:.4f} f1:{:.4f} {}".format( mean(losses),mean(precisions),mean(recalls),mean(f_betas),improved_str )) def test(config,model,save_path,testBatchGen): with tf.Session() as sess: sess.run(tf.global_variables_initializer()) saver = tf.train.Saver() ckpt = tf.train.get_checkpoint_state(‘checkpoint/msra/‘) path = ckpt.model_checkpoint_path saver.restore(sess, path) # 读取保存的模型 precisions = [] recalls = [] f1s = [] for testX_batch,testY_batch,test_seqlen in testBatchGen.next_batch(config.trainConfig.batch_size): feed_dict = { model.inputX : testX_batch, #[batch,max_len] model.inputY : testY_batch, #[batch,max_len] model.seq_lens : test_seqlen, #[batch] } test_pre = sess.run([model.viterbi_sequence],feed_dict) #这里有点奇怪,和train、val出来的数据相比多了一个[] test_pre = test_pre[0] test_true_idx2label = [get_labels(label,idx2label,seq_len) for label,seq_len in zip(testY_batch,test_seqlen)] test_pre_idx2label = [get_labels(label,idx2label,seq_len) for label,seq_len in zip(test_pre,test_seqlen)] precision,recall,f1 = get_multi_metric(test_true_idx2label,test_pre_idx2label,test_seqlen,labels_list) precisions.append(precision) recalls.append(recall) f1s.append(f1) print("[test] precision:{:.4f} recall:{:.4f} f1:{:.4f}".format( mean(precisions),mean(recalls),mean(f1s))) def predict(word2idx,idx2word,idx2label): max_len = 60 input_list = [] input_len = [] with tf.Session() as sess: sess.run(tf.global_variables_initializer()) saver = tf.train.Saver() ckpt = tf.train.get_checkpoint_state(‘checkpoint/msra/‘) path = ckpt.model_checkpoint_path saver.restore(sess, path) # 读取保存的模型 while True: print("请输入一句话:") line = input() if line == ‘q‘: break line_len = len(line) input_len.append(line_len) word_list = [word2idx[word] if word in word2idx else word2idx[‘unknow‘] for word in line] if line_len < max_len: word_list =word_list + [0]*(max_len-line_len) else: word_list = word_list[:max_len] input_list.append(word_list) #需要增加一个维度 input_list = np.array(input_list) input_label = np.zeros((input_list.shape[0],input_list.shape[1])) #标签占位 input_len = np.array(input_len) feed_dict = { model.inputX : input_list, #[batch,max_len] model.inputY : input_label, #[batch,max_len] model.seq_lens : input_len, #[batch] } pred_label = sess.run([model.viterbi_sequence],feed_dict) pred_label = pred_label[0] # 将预测标签id还原为真实标签 pred_idx2label = [get_labels(label,idx2label,seq_len) for label,seq_len in zip(pred_label,input_len)] for line,pre,s_len in zip(input_list,pred_idx2label,input_len): res = find_all_tag(pre,s_len) for k in res: for v in res[k]: if v: print(k,"".join([idx2word[word] for word in line[v[0]:v[0]+v[1]]])) input_list = [] input_len = [] if __name__ == "__main__": config = Config() msraDataset = MsraDataset(config) word2idx = msraDataset.get_word2idx() idx2word = msraDataset.get_idx2word() label2idx = msraDataset.get_label2idx() idx2label = msraDataset.get_idx2label() embedding_pre = msraDataset.get_embedding() x_train,y_train,z_train = msraDataset.get_train_data() x_val,y_val,z_val = msraDataset.get_val_data() x_test,y_test,z_test = msraDataset.get_test_data() print("====验证是否得到相关数据===") print("word2idx:",len(word2idx)) print("idx2word:",len(idx2word)) print("label2idx:",len(label2idx)) print("idx2label:",len(idx2label)) print("embedding_pre:",embedding_pre.shape) print(x_train.shape,y_train.shape,z_train.shape) print(x_val.shape,y_val.shape,z_val.shape) print(x_test.shape,y_test.shape,z_test.shape) print("======打印相关参数======") print("batch_size:",config.trainConfig.batch_size) print("learning_rate:",config.trainConfig.learning_rate) print("embedding_dim:",config.msraConfig.embedding_dim) is_train,is_val,is_test = True,True,True model = BilstmCrfModel(config,embedding_pre) if is_train: trainBatchGen = BatchGenerator(x_train,y_train,z_train,shuffle=True) if is_val: valBatchGen = BatchGenerator(x_val,y_val,z_val,shuffle=False) if is_test: testBatchGen = BatchGenerator(x_test,y_test,z_test,shuffle=False) dataset = "msra" if dataset == "msra": save_path = os.path.join(PATH,‘checkpoint/msra/‘) if not os.path.exists(save_path): os.makedirs(save_path) #train(config,model,save_path,trainBatchGen,valBatchGen) #test(config,model,save_path,testBatchGen) predict(word2idx,idx2word,idx2label)

运行训练及测试:

部分结果:

====验证是否得到相关数据=== word2idx: 4026 idx2word: 4025 label2idx: 11 idx2label: 11 embedding_pre: (4027, 100) (36066, 60) (36066, 60) (36066,) (9016, 60) (9016, 60) (9016,) (5009, 60) (5009, 60) (5009,) ======打印相关参数====== batch_size: 128 learning_rate: 0.001 embedding_dim: 100 。。。。。。 [train] step:10500 loss:0.8870 precision:0.9699 recall:0.9734 f1:1.0000 [val] loss:1.5881 precision:0.8432 recall:0.8569 f1:0.7964 [train] step:10600 loss:1.3130 precision:0.9445 recall:0.9314 f1:0.8696 [val] loss:1.6127 precision:0.8302 recall:0.8720 f1:0.7913 [train] step:10700 loss:0.9924 precision:0.9762 recall:0.9730 f1:0.9836 [val] loss:1.6147 precision:0.8490 recall:0.8488 f1:0.7923 [train] step:10800 loss:0.9863 precision:0.9481 recall:0.9495 f1:0.9697 [val] loss:1.6455 precision:0.8375 recall:0.8672 f1:0.8015 [train] step:10900 loss:1.0629 precision:0.9580 recall:0.9148 f1:0.9355 [val] loss:1.7692 precision:0.8174 recall:0.8064 f1:0.7735 [train] step:11000 loss:0.9846 precision:0.9800 recall:0.9689 f1:0.9643 [val] loss:1.5785 precision:0.8562 recall:0.8544 f1:0.7996 [train] step:11100 loss:0.9315 precision:0.9609 recall:0.9651 f1:0.9455 [val] loss:1.5665 precision:0.8568 recall:0.8646 f1:0.8100 * [train] step:11200 loss:0.9989 precision:0.9804 recall:0.9629 f1:0.9697 [val] loss:1.5807 precision:0.8519 recall:0.8569 f1:0.7941

[test] precision:0.8610 recall:0.8780 f1:0.8341

进行预测:

请输入一句话: 我要感谢洛杉矶市民议政论坛、亚洲协会南加中心、美中关系全国委员会、美中友协美西分会等友好团体的盛情款待。 2020-11-15 08:02:48.049927: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10 nt 洛杉矶市民议政论坛、亚洲协会南加中心、美中关系全国委员会、美中友协美西分会 请输入一句话: 今天的演讲会是由哈佛大学费正清东亚研究中心主任傅高义主持的。 nt 哈佛大学 nt 清东亚研究中心 nr 傅高义 请输入一句话: 美方有哈佛大学典礼官亨特、美国驻华大使尚慕杰等。 nr 亨特、 nr 尚慕杰 请输入一句话:

基于tensorflow的bilstm_crf的命名实体识别(数据集是msra命名实体识别数据集)

标签:image ram one 自动补全 += tar lstm att lines

原文地址:https://www.cnblogs.com/xiximayou/p/13928829.html