
This series is based on my understanding of the Coursera online course NLP (by Michael Collins). Course link: https://class.coursera.org/nlangp-001

1. Tagging Problems

1.1 POS Tagging

Problem description

Input:Profits soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced first quarter results.

Output:Profits/N soared/V at/P Boeing/N Co./N ,/, easily/ADV topping/V forecasts/N on/P Wall/N Street/N ,/, as/P their/POSS CEO/N Alan/N Mulally/N announced/V first/ADJ quarter/N results/N ./.

PS: N = Noun; V = Verb; P = Preposition; ADV = Adverb; ADJ = Adjective; ...

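We are given a training set $(x^{(i)}, y^{(i)})$ for $i = 1 \ldots m$, where $x^{(i)} = x_1^{(i)} \ldots x_{n_i}^{(i)}$ is a sentence, $y^{(i)} = y_1^{(i)} \ldots y_{n_i}^{(i)}$ is its tag sequence, and $n_i$ is the length of the $i$-th example. Thus $x_j^{(i)}$ is the $j$-th word of sentence $x^{(i)}$ and $y_j^{(i)}$ is the tag of $x_j^{(i)}$. The Penn WSJ treebank annotated corpus is an example of such data. The main difficulties in POS tagging are (1) ambiguity: the same word can receive different tags in different contexts; and (2) rare words: words that never appear in the training corpus. In addition, a tagger should exploit the statistical properties of words, such as their most common parts of speech and general grammatical regularities (for example, "quarter" usually appears as a noun rather than a verb, and the tag sequence D N V is more common in a sentence than D V N).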
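To make the notation concrete, here is a minimal Python sketch that turns one line in the `word/TAG` format used in the Output example into the pair $(x^{(i)}, y^{(i)})$. The helper name `parse_tagged` is hypothetical, and the sketch assumes every token contains at least one `/`, with the tag after the last one (so tokens like `,/,` and `./.` still parse).

```python
from typing import List, Tuple


def parse_tagged(sentence: str) -> Tuple[List[str], List[str]]:
    """Split a 'word/TAG word/TAG ...' string into (x, y).

    rsplit on the last '/' so punctuation tokens such as ',/,' or './.'
    are split into the word ',' and the tag ','.
    """
    words: List[str] = []
    tags: List[str] = []
    for token in sentence.split():
        word, tag = token.rsplit("/", 1)
        words.append(word)
        tags.append(tag)
    return words, tags


x, y = parse_tagged("Profits/N soared/V at/P Boeing/N Co./N ,/, easily/ADV")
print(list(zip(x, y)))
```

Here `len(x) == len(y)` plays the role of $n_i$, and `x[j - 1]`, `y[j - 1]` correspond to $x_j^{(i)}$ and $y_j^{(i)}$.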

1.2 Named-Entity Recognition

Problem description

Input:Profits soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced first quarter results.

Output1: Profits soared at [Company Boeing Co.], easily topping forecasts on [Location Wall Street], as their CEO [Person Alan Mulally] announced first quarter results.

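The output identifies named entities such as PERSON, LOCATION, COMPANY, .... Unlike POS tagging, each word is tagged either as NA (not part of any named entity) or as part of a named entity (e.g. SC marks the start of a company name, CC a continuation of a company name, ...), giving the following output: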

Output2: Profits/NA soared/NA at/NA Boeing/SC Co./CC ,/NA easily/NA topping/NA forecasts/NA on/NA Wall/SL Street/CL ,/NA as/NA their/NA CEO/NA Alan/SP Mulally/CP announced/NA first/NA quarter/NA results/NA ./NA

PS: NA = No entity; SC = Start Company; CC = Continue Company; SL = Start Location; CL = Continue Location; SP = Start Person; CP = Continue Person; ...
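The per-word NA/Start/Continue tags of Output2 can be turned back into the bracketed entities of Output1. The sketch below is a hypothetical helper (`decode_entities` and `ENTITY_TYPES` are illustrative names), assuming the two-letter scheme above where the first letter is S (start) or C (continue) and the second letter C, L or P stands for Company, Location or Person. A Continue tag with no matching open entity is simply treated as a new start.

```python
from typing import List, Tuple

# Assumed mapping from the second tag letter to an entity type.
ENTITY_TYPES = {"C": "Company", "L": "Location", "P": "Person"}


def decode_entities(words: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group NA / S? / C? word tags into (entity_type, entity_text) spans."""
    spans: List[Tuple[str, List[str]]] = []
    prev_inside = False  # was the previous word part of an entity?
    for word, tag in zip(words, tags):
        if tag == "NA":
            prev_inside = False
            continue
        kind, etype = tag[0], ENTITY_TYPES[tag[1]]
        if kind == "C" and prev_inside and spans[-1][0] == etype:
            spans[-1][1].append(word)  # continue the currently open entity
        else:
            spans.append((etype, [word]))  # start a new entity
        prev_inside = True
    return [(etype, " ".join(ws)) for etype, ws in spans]


tagged = ("Profits/NA soared/NA at/NA Boeing/SC Co./CC ,/NA easily/NA topping/NA "
          "forecasts/NA on/NA Wall/SL Street/CL ,/NA as/NA their/NA CEO/NA "
          "Alan/SP Mulally/CP announced/NA first/NA quarter/NA results/NA ./NA")
pairs = [tok.rsplit("/", 1) for tok in tagged.split()]
words = [w for w, _ in pairs]
tags = [t for _, t in pairs]
print(decode_entities(words, tags))
# [('Company', 'Boeing Co.'), ('Location', 'Wall Street'), ('Person', 'Alan Mulally')]
```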

2 Generative Models

2.1 Hidden Markov Models

Training examples: $(x^{(1)}, y^{(1)}) \ldots (x^{(m)}, y^{(m)})$. We want to learn a function $f: X \rightarrow Y$ from these training examples.

Approach 1: conditional model

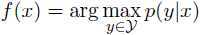

Given a test example $x$, the model outputs:

$f(x) = \arg\max_y p(y|x)$

Approach 2: generative model

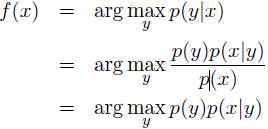

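Model the joint probability distribution $p(x, y)$, decomposed as $p(x, y) = p(y)p(x|y)$,

where $p(y)$ is the prior probability of label $y$ and $p(x|y)$ is the conditional probability of $x$ given label $y$.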

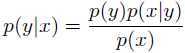

We can therefore use Bayes' rule to obtain the conditional probability $p(y|x)$:

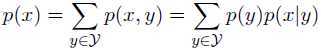

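where $p(x) = \sum_y p(y)p(x|y)$. Since $p(x)$ does not depend on $y$, it follows that:

$f(x) = \arg\max_y p(y|x) = \arg\max_y \frac{p(y)p(x|y)}{p(x)} = \arg\max_y p(y)p(x|y)$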
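A tiny numeric check of the generative route, using made-up values for $p(y)$ and $p(x|y)$ (two labels `A`/`B` and two inputs `x1`/`x2`, all hypothetical): computing $p(y|x)$ with Bayes' rule and then taking the argmax gives the same label as maximising $p(y)p(x|y)$ directly, because dividing by $p(x)$ rescales every label by the same constant.

```python
from typing import Dict

# Hypothetical toy model: prior p(y) and likelihood p(x | y).
prior: Dict[str, float] = {"A": 0.7, "B": 0.3}
likelihood: Dict[str, Dict[str, float]] = {
    "A": {"x1": 0.2, "x2": 0.8},
    "B": {"x1": 0.9, "x2": 0.1},
}


def posterior(x: str) -> Dict[str, float]:
    """Bayes' rule: p(y|x) = p(y) p(x|y) / p(x), with p(x) = sum_y p(y) p(x|y)."""
    joint = {y: prior[y] * likelihood[y][x] for y in prior}
    p_x = sum(joint.values())
    return {y: j / p_x for y, j in joint.items()}


def predict(x: str) -> str:
    """f(x) = argmax_y p(y) p(x|y); p(x) is dropped since it does not depend on y."""
    return max(prior, key=lambda y: prior[y] * likelihood[y][x])


for x in ("x1", "x2"):
    print(x, predict(x), posterior(x))
```

For `x1` the unnormalised scores are 0.7 × 0.2 = 0.14 for `A` and 0.3 × 0.9 = 0.27 for `B`, so `B` wins despite its smaller prior.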

2.2 Generative Tagging Models

V: the set of words, e.g. V = {the, dog, saw, cat, laughs, ...}

K: the set of tags

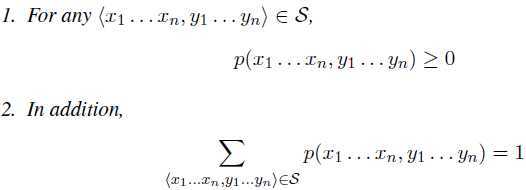

S: the set of all sequence/tag-sequence pairs $\langle x_1 \ldots x_n, y_1 \ldots y_n \rangle$

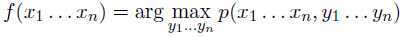

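Given a generative tagging model, the tag sequence $y_1 \ldots y_n$ output for a sentence $x_1 \ldots x_n$ is:

$f(x_1 \ldots x_n) = \arg\max_{y_1 \ldots y_n} p(x_1 \ldots x_n, y_1 \ldots y_n)$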
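The definition of $f$ can be read literally as a search over every tag sequence in $K^n$. The sketch below does exactly that by brute force. `brute_force_tag`, `toy_joint` and all probabilities are hypothetical, and the toy joint (tags drawn independently, each word depending only on its own tag) merely stands in for any generative tagging model. Enumeration costs $|K|^n$ evaluations, so this only illustrates the definition; it is not a practical decoder.

```python
import itertools
from typing import Callable, Dict, Sequence, Tuple


def brute_force_tag(
    words: Sequence[str],
    tags: Sequence[str],
    joint: Callable[[Sequence[str], Tuple[str, ...]], float],
) -> Tuple[str, ...]:
    """Return argmax over all y_1..y_n in K^n of joint(x_1..x_n, y_1..y_n)."""
    candidates = itertools.product(tags, repeat=len(words))
    return max(candidates, key=lambda ys: joint(words, ys))


# Hypothetical toy model: p(x, y) = prod_i p_tag[y_i] * p_word[y_i][x_i].
p_tag: Dict[str, float] = {"D": 0.4, "N": 0.4, "V": 0.2}
p_word: Dict[str, Dict[str, float]] = {
    "D": {"the": 0.9, "dog": 0.05, "laughs": 0.05},
    "N": {"the": 0.05, "dog": 0.8, "laughs": 0.15},
    "V": {"the": 0.05, "dog": 0.15, "laughs": 0.8},
}


def toy_joint(words: Sequence[str], ys: Tuple[str, ...]) -> float:
    prob = 1.0
    for w, y in zip(words, ys):
        prob *= p_tag[y] * p_word[y][w]
    return prob


print(brute_force_tag(["the", "dog", "laughs"], ["D", "N", "V"], toy_joint))
```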

2.3 Trigram Hidden Markov Models (Trigram HMMs)

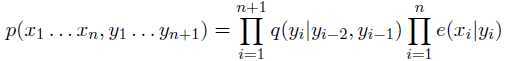

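$q(s|u, v)$: the probability of tag $s$ immediately following the tag bigram $(u, v)$, defined for every trigram $(u, v, s)$ with $s \in K \cup \{STOP\}$ and $u, v \in K \cup \{*\}$;

$e(x|s)$: the probability of observing $x$ in state $s$, for $x \in V$ and $s \in K$;

S: the set of all sequence/tag-sequence pairs $\langle x_1 \ldots x_n, y_1 \ldots y_{n+1} \rangle$ with $y_{n+1} = STOP$;

PS: $y_0 = y_{-1} = *$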

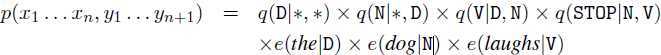

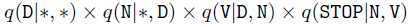

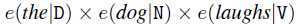
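The definitions above can be sketched as a function that scores one sentence/tag-sequence pair, assuming the standard trigram HMM factorisation $p(x_1 \ldots x_n, y_1 \ldots y_{n+1}) = \prod_{i=1}^{n+1} q(y_i|y_{i-2}, y_{i-1}) \prod_{i=1}^{n} e(x_i|y_i)$. Storing $q$ and $e$ as dictionaries keyed by `(u, v, s)` and `(x, s)` is an assumption of this sketch, as is the name `trigram_hmm_joint`.

```python
from typing import Dict, Sequence, Tuple


def trigram_hmm_joint(
    words: Sequence[str],
    tags: Sequence[str],
    q: Dict[Tuple[str, str, str], float],
    e: Dict[Tuple[str, str], float],
) -> float:
    """p(x_1..x_n, y_1..y_{n+1}) under a trigram HMM.

    words: x_1..x_n; tags: y_1..y_n (STOP is appended here as y_{n+1}).
    q[(u, v, s)] stands for q(s | u, v); e[(x, s)] stands for e(x | s).
    Entries missing from q or e are treated as probability 0.
    """
    if len(words) != len(tags):
        raise ValueError("words and tags must have the same length")
    padded = ["*", "*"] + list(tags) + ["STOP"]  # y_{-1} = y_0 = *, y_{n+1} = STOP
    prob = 1.0
    # Transition terms, i = 1 .. n+1: each tag conditioned on the two before it.
    for i in range(2, len(padded)):
        prob *= q.get((padded[i - 2], padded[i - 1], padded[i]), 0.0)
    # Emission terms, i = 1 .. n: each word conditioned on its own tag.
    for x, s in zip(words, tags):
        prob *= e.get((x, s), 0.0)
    return prob
```

Usage: `trigram_hmm_joint(["the", "dog", "laughs"], ["D", "N", "V"], q, e)` scores the pair (the dog laughs, D N V STOP); any tag sequence that uses an unseen transition or emission scores 0.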

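For example, if $n = 3$, $x_1 x_2 x_3$ = the dog laughs and $y_1 y_2 y_3 y_4$ = D N V STOP, then:

$p(x_1 \ldots x_3, y_1 \ldots y_4) = q(D|*, *) \times q(N|*, D) \times q(V|D, N) \times q(STOP|N, V) \times e(the|D) \times e(dog|N) \times e(laughs|V)$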

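This is a noisy-channel model. The factor $q(D|*, *) \times q(N|*, D) \times q(V|D, N) \times q(STOP|N, V)$ is the prior probability of the tag sequence D N V STOP under a second-order Markov process, and the factor $e(the|D) \times e(dog|N) \times e(laughs|V)$ is the conditional probability $p(\text{the dog laughs}|\text{D N V STOP})$.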
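Plugging hypothetical parameter values (not estimated from any corpus) into this example shows the noisy-channel split numerically: the $q$ terms give the prior on D N V STOP, the $e$ terms give $p(\text{the dog laughs}|\text{D N V STOP})$, and their product is the joint probability. The dictionary layout is an assumption of this sketch.

```python
# Hypothetical parameter values, chosen only to make the arithmetic concrete.
q = {  # q[(u, v, s)] = q(s | u, v)
    ("*", "*", "D"): 0.8,
    ("*", "D", "N"): 0.7,
    ("D", "N", "V"): 0.5,
    ("N", "V", "STOP"): 0.4,
}
e = {  # e[(x, s)] = e(x | s)
    ("the", "D"): 0.5,
    ("dog", "N"): 0.01,
    ("laughs", "V"): 0.02,
}

# Prior over the tag sequence D N V STOP (second-order Markov chain).
prior = q[("*", "*", "D")] * q[("*", "D", "N")] * q[("D", "N", "V")] * q[("N", "V", "STOP")]
# Channel term: p(the dog laughs | D N V STOP).
channel = e[("the", "D")] * e[("dog", "N")] * e[("laughs", "V")]
# Joint probability p(the dog laughs, D N V STOP).
joint = prior * channel
print(f"prior={prior:.4f} channel={channel:.6f} joint={joint:.3e}")
```

With these made-up numbers the prior is 0.8 × 0.7 × 0.5 × 0.4 = 0.112 and the channel term is 0.5 × 0.01 × 0.02 = 0.0001.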

Tagging Problems & Hidden Markov Models: NLP Study Notes (Original)


Original post: http://www.cnblogs.com/tec-vegetables/p/4056383.html