Tags: style ret ons mat rand pypi pymongo inf headers

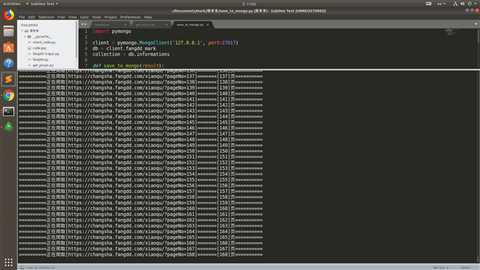

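I originally wanted to tackle this CAPTCHA head-on, but I could never get the POST request to go through, so I fell back on random delays to avoid triggering the anti-scraping check.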
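The random-delay idea can be sketched as a small retry helper. This is only an illustration, not the code used in this post: `fetch_with_retry`, `fetch`, `is_blocked`, `base_delay` and `max_attempts` are all hypothetical names, and the waiting times are assumptions rather than values tuned against the site.

```python
import random
import time


def fetch_with_retry(fetch, url, is_blocked, max_attempts=3,
                     base_delay=5.0, sleep=time.sleep):
    """Call fetch(url) until the page is not blocked or attempts run out.

    fetch      -- callable taking a URL and returning the page text
    is_blocked -- callable returning True if the text is a CAPTCHA page
    Returns the page text, or None if every attempt was blocked.
    """
    for attempt in range(1, max_attempts + 1):
        html = fetch(url)
        if not is_blocked(html):
            return html
        if attempt < max_attempts:
            # Wait longer after each blocked attempt, plus up to 1 s of jitter
            sleep(base_delay * attempt + random.uniform(0, 1))
    return None
```

With `requests`, `fetch` could be something like `lambda u: requests.get(u, headers=headers).text`, and `is_blocked` could check the page title. Passing `sleep` as a parameter keeps the helper easy to try out without actually waiting.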

fangdd.py

    import random
    import re
    import time

    import requests
    from lxml import etree

    from check_code import *  # CAPTCHA-handling module (not shown in this post)
    from get_pinyin import get_pinyin  # converts Chinese characters to pinyin
    from save_to_mongo import save_to_mongo


    class Fangdd:
        def __init__(self):
            user_agent_list = [
                "Mozilla/5.0(Macintosh;IntelMacOSX10.6;rv:2.0.1)Gecko/20100101Firefox/4.0.1",
                "Mozilla/4.0(compatible;MSIE6.0;WindowsNT5.1)",
                "Opera/9.80(WindowsNT6.1;U;en)Presto/2.8.131Version/11.11",
                "Mozilla/5.0(Macintosh;IntelMacOSX10_7_0)AppleWebKit/535.11(KHTML,likeGecko)Chrome/17.0.963.56Safari/535.11",
                "Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1)",
                "Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;Trident/4.0;SE2.XMetaSr1.0;SE2.XMetaSr1.0;.NETCLR2.0.50727;SE2.XMetaSr1.0)"
            ]
            # Pick one User-Agent at random from the list above
            self.headers = {
                "User-Agent": random.choice(user_agent_list)
            }
            # self.c = Code_text()
            # self.rand_time = random.randint(1, 5)

        def get_html(self, url):
            # Fetch the page source
            response = requests.get(url, headers=self.headers)
            html = response.text
            element = etree.HTML(html)
            # Use the page title to tell whether the anti-scraping check kicked in
            title = element.xpath('//title/text()')
            if title and title[0] == 'chech captcha':
                print('Crawler detected; the site returned its CAPTCHA page')
                # self.c.post_code()
                time.sleep(5)
                # Callers must handle None (no usable page)
                return None
            return html

        def get_location(self, html):
            # Collect the city names listed on the page
            addresses = []
            element = etree.HTML(html)
            lis = element.xpath('//div[@class="q3rm0"]/li[position()>1]//a/text()')
            for li in lis:
                # Names come back as Chinese characters, but the URLs use pinyin
                li_pinyin = get_pinyin(li)
                addresses.append(li_pinyin)
            return addresses

        def get_all_url(self, addresses):
            # Build the community-list URL for each city
            urls = []
            for address in addresses:
                addr_url = 'https://%s.fangdd.com/xiaoqu' % address
                urls.append(addr_url)
            return urls

        def parse_list_page(self, urls):
            # URLs whose pinyin does not match the real subdomain and cannot be scraped
            not_found = []
            for p_url in urls:
                # Sleep for a random interval to avoid the anti-scraping check
                # time.sleep(self.rand_time)
                html = self.get_html(p_url)
                if html is None:
                    # The CAPTCHA page was served; skip this city
                    continue
                element = etree.HTML(html)
                # Polyphonic characters can be converted wrongly; detect that via the title
                title = element.xpath('//title/text()')
                title = title[0]
                if title == '很抱歉!您访问的页面不存在!':
                    # Some long place names are abbreviated in the real URL, so the
                    # URL built from the full pinyin does not exist and returns 404
                    print('Pinyin does not match the real URL, skipping: %s' % p_url)
                    not_found.append(p_url)
                else:
                    print(title)
                    # With 20 or fewer communities there is only one page
                    max_xiaoqu = element.xpath('//p[@class="filter-result"]/b/text()')
                    max_xiaoqu = int(max_xiaoqu[0])

                    if 0 < max_xiaoqu <= 20:
                        print('This city has only one page')
                        print('max_xiaoqu=%s' % max_xiaoqu)
                        max_page = 1
                        self.get_informations(p_url, max_page)

                    elif max_xiaoqu > 20:
                        # Read the largest page number to bound the loop
                        max_page = element.xpath('//div[@class="pagebox"]/a[position()<last()]/text()')
                        max_page = int(max_page[-1].strip())
                        self.get_informations(p_url, max_page)

                    else:
                        # max_xiaoqu == 0
                        print(p_url + ' has no communities')

        def get_informations(self, g_url, max_page):
            print('=' * 20 + 'Crawling [%s]' % g_url + '=' * 30)

            for pageNo in range(1, max_page + 1):
                time.sleep(random.randint(1, 3))
                pageNo_url = g_url + '/?pageNo=%s' % pageNo
                print('=' * 10 + 'Crawling [%s], page [%s]' % (pageNo_url, pageNo) + '=' * 10)

                response = requests.get(pageNo_url, headers=self.headers)
                html = response.text
                element = etree.HTML(html)
                lis = element.xpath('//ul[@class="lp-list"]//li')

                for li in lis:
                    # xpath returns a list; store the first href, or '' if missing
                    li_url = li.xpath('.//h3[@class="name"]/a/@href')
                    li_url = li_url[0] if li_url else ''

                    name = li.xpath('.//h3[@class="name"]/a/text()')
                    name = name[0]

                    address = li.xpath('.//div[@class="house-cont"]//p[@class="address"]/text()')
                    address = address[0]
                    address = re.sub(' ', '', address)
                    price = li.xpath('.//div[@class="fr"]/span/em/text()')
                    price = price[0].strip()
                    price = re.sub('元/㎡', '', price)
                    information = {
                        'url': li_url,
                        'name': name,
                        'address': address,
                        'price': price
                    }
                    save_to_mongo(information)


    def main():
        f = Fangdd()
        url = 'https://shanghai.fangdd.com/'
        html = f.get_html(url)
        if html is None:
            # The start page itself was blocked; nothing to parse
            return
        address = f.get_location(html)
        urls = f.get_all_url(address)
        f.parse_list_page(urls)


    if __name__ == '__main__':
        main()
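One possible way to handle the cities whose real subdomain is not the full pinyin is a small override table consulted before the URL is built. The sketch below is an assumption, not part of the original script: `build_city_urls` and `overrides` are hypothetical names, and the entry in `example_overrides` is a made-up placeholder, not a real fangdd.com subdomain.

```python
def build_city_urls(pinyin_names, overrides=None):
    """Build community-list URLs, replacing known-bad pinyin with overrides."""
    overrides = overrides or {}
    urls = []
    for name in pinyin_names:
        # Fall back to the plain pinyin when no override is recorded
        subdomain = overrides.get(name, name)
        urls.append('https://%s.fangdd.com/xiaoqu' % subdomain)
    return urls


# Placeholder values for illustration only; real overrides would have to be
# collected by hand from the URLs reported as "page does not exist".
example_overrides = {'longcityname': 'lcn'}
print(build_city_urls(['shanghai', 'longcityname'], example_overrides))
```

The table would have to be filled in manually, for example from the URLs the crawler reports as not found.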

get_pinyin.py

    from pypinyin import pinyin


    def get_pinyin(text):
        # style=0 turns off tone marks; see http://pypinyin.mozillazg.com/zh_CN/v0.9.1/
        p = pinyin(text, style=0)
        # p looks like [['chong'], ['qing']]
        # print(p)
        # Take the first reading of each character and join them into one string
        return ''.join(i[0] for i in p)

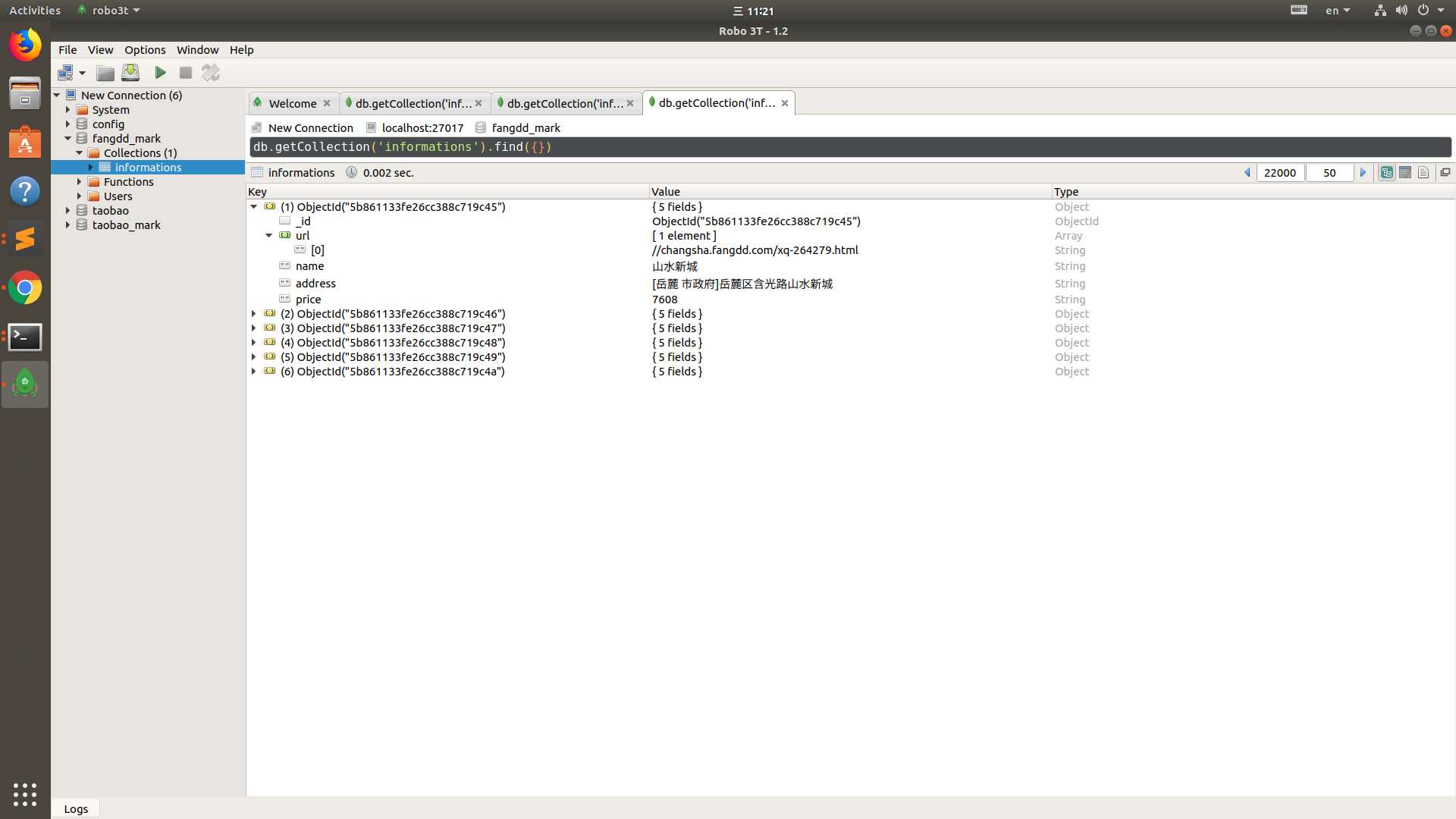

save_to_mongo.py

    import pymongo

    client = pymongo.MongoClient('127.0.0.1', port=27017)
    db = client.fangdd_mark
    collection = db.informations


    def save_to_mongo(result):
        try:
            # insert_one replaces collection.insert, which was removed in PyMongo 4
            collection.insert_one(result)
            # print('success save to mongodb')
        except Exception:
            print('error to mongo')

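Because of the delays, and because there is a lot of data, the crawl takes a while. I finished a whole match of Honor of Kings and it still had not gotten through the cities starting with "c"; by then the database already held 22,000 records.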
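A rough back-of-the-envelope estimate shows why this takes so long. The sketch assumes every list page is full (20 communities, the page size implied by the script) and counts only the `random.randint(1, 3)` sleep per page, so it is a lower bound: partially filled pages and the requests themselves add to it. The function name `expected_sleep_seconds` is made up for this illustration.

```python
import math


def expected_sleep_seconds(records, per_page=20, low=1, high=3):
    """Expected total sleep time for a crawl that sleeps once per list page."""
    pages = math.ceil(records / per_page)
    # random.randint(low, high) is uniform over the integers, so its mean
    # is the midpoint of the range
    mean_delay = (low + high) / 2
    return pages * mean_delay


seconds = expected_sleep_seconds(22000)
print('%.0f s (about %.1f min)' % (seconds, seconds / 60))
# prints: 2200 s (about 36.7 min)
```

So the 22,000 records alone account for at least half an hour of sleeping.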

Result of the run:


Original article: https://www.cnblogs.com/MC-Curry/p/9552504.html