
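1. Scraping a JD.com product page

Open any JD.com product page, for example:

https://item.jd.com/69336974189.html

Code:

    import requests

    url = "https://item.jd.com/69336974189.html"
    try:
        r = requests.get(url)
        r.raise_for_status()  # raise an exception for 4xx/5xx responses
        r.encoding = r.apparent_encoding  # use the encoding detected from the content
        print(r.text[:1000])
    except requests.RequestException:
        print("Scrape failed!")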

2. Scraping an Amazon product page

Open an Amazon product link:

    >>> import requests
    >>> r = requests.get("https://www.amazon.cn/gp/product/B01M8L5Z3Y")
    >>> r.status_code
    200
    >>> r.encoding
    'UTF-8'
    >>> r.encoding = r.apparent_encoding
    >>> r.text
    >>> r.request.headers
    {'User-Agent': 'python-requests/2.22.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive'}
    >>> kv = {'user-agent': 'Mozilla/5.0'}
    >>> url = "https://www.amazon.cn/gp/product/B01M8L5Z3Y"
    >>> r = requests.get(url, headers={'user-agent': 'Mozilla/5.0'})  # replace the default User-Agent header with the browser-like 'Mozilla/5.0'
    >>> r,status_code
    Traceback (most recent call last):
      File "<pyshell#22>", line 1, in <module>
        r,status_code
    NameError: name 'status_code' is not defined
    # The line above raised an error. Always write code carefully; can you spot the mistake?
    >>> r.status_code
    200
    >>> r.request.headers
    {'user-agent': 'Mozilla/5.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive'}
    >>> r.text[:1000]
    '\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n \n \n\n\n\n\n\n\n\n\n\n\n\n\n\n\n \n\n\n\n\n\n\n\n\n\n\n\n\n\n\n \n \n\n\n \n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n <!doctype html><html class="a-no-js" data-19ax5a9jf="dingo">\n <head>\n<script type="text/javascript">var ue_t0=ue_t0||+new Date();</script>\n<script type="text/javascript">\nwindow.ue_ihb = (window.ue_ihb || window.ueinit || 0) + 1;\nif (window.ue_ihb === 1) {\nvar ue_hob=+new Date();\nvar ue_id=\'E06HEJQW1W99HDZ0Z5H0\',\nue_csm = window,\nue_err_chan = \'jserr-rw\',\nue = {};\n(function(d){var e=d.ue=d.ue||{},f=Date.now||function(){return+new Date};e.d=function(b){return f()-(b?0:d.ue_t0)};e.stub=function(b,a){if(!b[a]){var c=[];b[a]=function(){c.push([c.slice.call(arguments),e.d(),d.ue_id])};b[a].replay=function(b){for(var a;a=c.shift();)b(a[0],a[1],a[2])};b[a].isStub=1}};e.exec=function(b,a){return function(){try{return b.apply(this,arguments)}catch(c){ueLogError(c,{attribution:a||"undefined",logLevel:"WARN"})}}}})(ue_csm);\n\nue.stub(ue,"log");ue.stub(ue,"onunload");ue.stu'
    >>>

Full code:

    import requests

    url = "https://www.amazon.cn/gp/product/B01M8L5Z3Y"
    try:
        kv = {'user-agent': 'Mozilla/5.0'}  # browser-like User-Agent header
        r = requests.get(url, headers=kv)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(r.text[1000:2000])
    except requests.RequestException:
        print("Scrape failed")

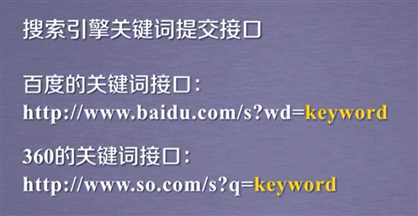
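The header change in the session above can also be checked without sending anything over the network. The following is a minimal sketch, assuming the `requests` library is installed; it only prepares two requests through a `Session` (which is where the default `python-requests/...` User-Agent comes from) and compares their headers. The variable names `default_req` and `custom_req` are illustrative and not from the original post.

```python
import requests

url = "https://www.amazon.cn/gp/product/B01M8L5Z3Y"

with requests.Session() as session:
    # prepare_request() merges the session's default headers into the
    # request but does NOT send it, so no network access is needed.
    default_req = session.prepare_request(requests.Request("GET", url))
    custom_req = session.prepare_request(
        requests.Request("GET", url, headers={"user-agent": "Mozilla/5.0"})
    )

# Header lookups are case-insensitive in requests.
print(default_req.headers["User-Agent"])  # the library's own identifier
print(custom_req.headers["User-Agent"])   # the value we supplied
```

The first line printed identifies the client as the requests library, which is the default that the transcript replaces with `Mozilla/5.0`.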

3. Submitting search keywords to Baidu and 360

Search engine keyword submission interfaces

Baidu's keyword interface:

http://www.baidu.com/s?wd=keyword

    >>> import requests
    >>> kv = {'wd': 'Python'}
    >>> r = requests.get("http://www.baidu.com/s", params=kv)
    >>> r.status_code
    200
    >>> r.request.url
    'http://www.baidu.com/s?wd=Python'
    >>> len(r.text)
    524221

Code:

    import requests

    keyword = "Python"
    try:
        kv = {'wd': keyword}  # Baidu expects the keyword in the "wd" parameter
        r = requests.get("http://www.baidu.com/s", params=kv)
        print(r.request.url)
        r.raise_for_status()
        print(len(r.text))
    except requests.RequestException:
        print("Scrape failed")

360's keyword interface:

http://www.so.com/s?q=keyword

Code:

    import requests

    keyword = "Python"
    try:
        kv = {'q': keyword}  # 360 expects the keyword in the "q" parameter
        r = requests.get("http://www.so.com/s", params=kv)
        print(r.request.url)
        r.raise_for_status()
        print(r.text)
    except requests.RequestException:
        print("Scrape failed")
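To see how a keyword ends up in the query string for each of the two engines, here is a small sketch that uses only the standard library's `urllib.parse.urlencode` rather than `requests`. The `SEARCH_ENDPOINTS` table and the `build_search_url` helper are hypothetical names introduced for illustration; the URLs and parameter names (`wd`, `q`) are the ones given above.

```python
from urllib.parse import urlencode

# Hypothetical lookup table: engine name -> (base URL, keyword parameter).
SEARCH_ENDPOINTS = {
    "baidu": ("http://www.baidu.com/s", "wd"),
    "360": ("http://www.so.com/s", "q"),
}


def build_search_url(engine, keyword):
    """Return the search URL for the given engine and keyword."""
    base, param = SEARCH_ENDPOINTS[engine]
    # urlencode() escapes spaces and non-ASCII characters in the keyword.
    return base + "?" + urlencode({param: keyword})


print(build_search_url("baidu", "Python"))  # http://www.baidu.com/s?wd=Python
print(build_search_url("360", "Python"))    # http://www.so.com/s?q=Python
```

Keeping the parameter name next to the base URL means the same calling code can target either engine.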

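4. Downloading and saving web images

Format of a web image link:

http://www.example.com/picture.jpg

For example:

http://image.nationalgeographic.com.cn/2017/0211/20170211061910157.jpg

    import requests
    import os

    url = "http://image.nationalgeographic.com.cn/2017/0211/20170211061910157.jpg"
    root = "D://MyProject//Python学习//爬虫学习//"
    path = root + url.split('/')[-1]  # reuse the file name from the URL
    try:
        if not os.path.exists(root):  # check whether the directory exists
            os.makedirs(root)  # root is nested, so create all missing levels
        if not os.path.exists(path):  # check whether the file already exists
            r = requests.get(url)
            r.raise_for_status()
            with open(path, 'wb') as f:
                f.write(r.content)  # the with block closes the file automatically
            print("File saved successfully")
        else:
            print("File already exists")
    except (requests.RequestException, OSError):
        print("Scrape failed")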

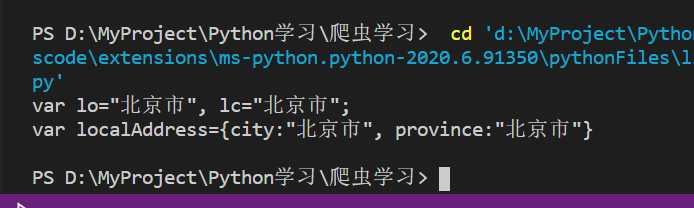
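The file-naming and "save only if missing" logic above can be tried on its own, without downloading anything. This is a minimal sketch using only the standard library; `local_path_for` and `save_if_missing` are hypothetical helper names, the bytes written are a placeholder rather than a real image, and a temporary directory stands in for the `root` folder.

```python
import os
import tempfile
from urllib.parse import urlsplit


def local_path_for(url, root):
    """Build a local path from the last component of the URL path."""
    # urlsplit().path drops any "?query" part, unlike url.split('/')[-1].
    filename = os.path.basename(urlsplit(url).path)
    return os.path.join(root, filename)


def save_if_missing(path, data):
    """Write data to path unless the file exists; return True if written."""
    directory = os.path.dirname(path)
    if directory:
        os.makedirs(directory, exist_ok=True)  # create nested folders as needed
    if os.path.exists(path):
        return False
    with open(path, "wb") as f:
        f.write(data)
    return True


url = "http://image.nationalgeographic.com.cn/2017/0211/20170211061910157.jpg"
with tempfile.TemporaryDirectory() as tmp:
    path = local_path_for(url, os.path.join(tmp, "images", "crawler"))
    first = save_if_missing(path, b"placeholder bytes")   # file is created
    second = save_if_missing(path, b"placeholder bytes")  # file already exists

print(os.path.basename(path), first, second)  # 20170211061910157.jpg True False
```

In a real download, the `data` argument would be `r.content` from the response.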

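5. Automatic lookup of an IP address's location

    import requests

    url = "http://ip.ws.126.net/ipquery?ip="
    try:
        r = requests.get(url + '202.204.80.112')  # append the IP address to the query URL
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(r.text[-500:])
    except requests.RequestException:
        print("Scrape failed")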
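Because the IP address is simply appended to the query URL, it can be worth checking that the text really is an IP address first. The sketch below does this with the standard library's `ipaddress` module; `build_ip_query_url` is a hypothetical helper, and restricting input to IPv4 is an assumption based on the example address, not something the lookup service is known to require.

```python
import ipaddress

QUERY_URL = "http://ip.ws.126.net/ipquery?ip="


def build_ip_query_url(ip_text):
    """Validate an IPv4 address and return the lookup URL for it."""
    # IPv4Address raises a ValueError subclass for malformed input.
    ip = ipaddress.IPv4Address(ip_text.strip())
    return QUERY_URL + str(ip)


print(build_ip_query_url("202.204.80.112"))

try:
    build_ip_query_url("999.1.1.1")
except ValueError:
    print("Not a valid IPv4 address")
```

The returned URL can then be passed to `requests.get` exactly as in the code above.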


Original article: https://www.cnblogs.com/HGNET/p/13245702.html