
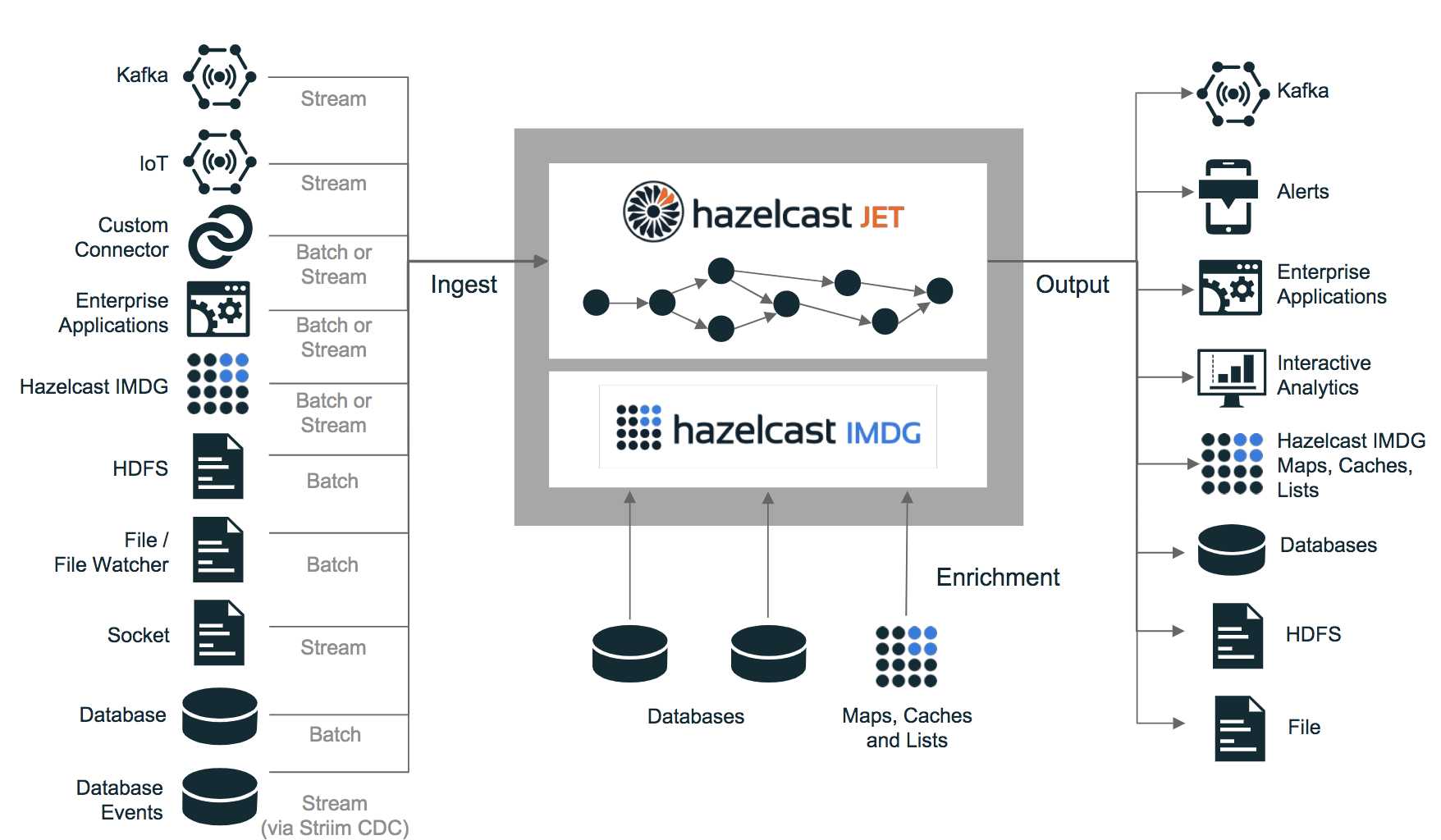

Last time I wrote briefly about using embedded mode. Below is a demo that uses server mode and runs with docker-compose.

    version: "3"
    services:
      app:
        image: dalongrong/alpine-oraclejdk8:8.131.11-full-arthas-tz
        volumes:
          - "./app:/app"
          - "./mybooks:/var/opt/mybooks"
        command: java -jar /app/hazelcast-jet-app.jar
      jet:
        image: hazelcast/hazelcast-jet
        environment:
          - "LOGGING_LEVEL=DEBUG"
          - "PROMETHEUS_PORT=8080"
        volumes:
          - "./mybooks:/var/opt/mybooks"
        ports:
          - "5701:5701"
          - "8080:8080"
      manage:
        image: hazelcast/hazelcast-jet-management-center
        environment:
          - "JET_MEMBER_ADDRESS=jet:5701"
        ports:
          - "8081:8081"

Application.java

    package com.dalong;

    import com.hazelcast.jet.Jet;
    import com.hazelcast.jet.JetInstance;
    import com.hazelcast.jet.config.JetClientConfig;
    import com.hazelcast.jet.config.JobConfig;

    public class Application {

        public static void main(String[] args) {
            String path = "/var/opt/mybooks";

            // batch mode
            BatchMode(path);

            // stream mode
            // StreamMode(path);
        }

        // Submits the streaming pipeline (MyPipe) through a Jet client.
        private static void StreamMode(String path) {
            JetClientConfig config = new JetClientConfig();
            // 127.0.0.1 assumes the client runs on the Docker host, where port 5701 is published
            config.getNetworkConfig().addAddress("127.0.0.1:5701");
            JetInstance jet = Jet.newJetClient(config);
            // JetInstance jet = Jet.bootstrappedInstance();
            MyPipe mypipe = new MyPipe();
            JobConfig jobConfig = new JobConfig();
            // ship the pipeline class to the cluster together with the job
            jobConfig.addClass(MyPipe.class);
            jet.newJob(mypipe.buildPipeline(path), jobConfig).join();
        }

        // Submits the batch pipeline (MyPipe2) through a Jet client.
        private static void BatchMode(String path) {
            JetClientConfig config = new JetClientConfig();
            // "jet" is the service name of the Jet member in the docker-compose file
            config.getNetworkConfig().addAddress("jet:5701");
            JetInstance jet = Jet.newJetClient(config);
            // JetInstance jet = Jet.bootstrappedInstance();
            MyPipe2 mypipe2 = new MyPipe2();
            JobConfig jobConfig = new JobConfig();
            // ship the pipeline class to the cluster together with the job
            jobConfig.addClass(MyPipe2.class);
            jet.newJob(mypipe2.buildPipeline(path), jobConfig).join();
        }
    }

MyPipe.java

    package com.dalong;

    import com.hazelcast.jet.Traversers;
    import com.hazelcast.jet.aggregate.AggregateOperations;
    import com.hazelcast.jet.pipeline.Pipeline;
    import com.hazelcast.jet.pipeline.Sinks;
    import com.hazelcast.jet.pipeline.Sources;
    import com.hazelcast.jet.pipeline.WindowDefinition;

    import java.io.Serializable;

    /**
     * @author dalong
     */
    public class MyPipe implements Serializable {

        // Streaming word count: watches the directory for appended lines
        // and counts words per tumbling window.
        Pipeline buildPipeline(String path) {
            Pipeline p = Pipeline.create();
            p.readFrom(Sources.fileWatcher(path))
                    .withIngestionTimestamps()
                    .setLocalParallelism(1)
                    // split each line on runs of non-word characters
                    .flatMap(line -> Traversers.traverseArray(line.toLowerCase().split("\\W+")))
                    .filter(word -> !word.isEmpty())
                    .groupingKey(word -> word)
                    .window(WindowDefinition.tumbling(4))
                    .aggregate(AggregateOperations.counting())
                    .writeTo(Sinks.logger());
            return p;
        }
    }
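The streaming pipeline groups words with `WindowDefinition.tumbling(4)`. As a rough mental model, a tumbling window cuts the time axis into fixed-size, non-overlapping buckets, and every event lands in exactly one of them. The sketch below is plain Java, not Jet code: `TumblingWindowSketch`, `windowStart` and `countPerWindow` are hypothetical names used only for illustration, and it assumes windows aligned at timestamp 0. It ignores watermarks and late events, which the real engine has to deal with.

```java
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration of tumbling-window bucketing; not Hazelcast Jet code.
class TumblingWindowSketch {

    // Start of the tumbling window that contains the given timestamp,
    // assuming windows are aligned at timestamp 0.
    static long windowStart(long timestamp, long windowSize) {
        return Math.floorDiv(timestamp, windowSize) * windowSize;
    }

    // Counts how many timestamps fall into each window, keyed by window start.
    static Map<Long, Long> countPerWindow(long[] timestamps, long windowSize) {
        Map<Long, Long> counts = new TreeMap<>();
        for (long ts : timestamps) {
            counts.merge(windowStart(ts, windowSize), 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        long[] timestamps = {0, 1, 3, 4, 7, 8};
        // Windows of size 4: [0,4), [4,8), [8,12)
        System.out.println(countPerWindow(timestamps, 4)); // prints {0=3, 4=2, 8=1}
    }
}
```

With a window size of 4, the timestamps 0, 1 and 3 share one window, 4 and 7 the next, and 8 opens a third.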

MyPipe2.java

    package com.dalong;

    import com.hazelcast.jet.Traversers;
    import com.hazelcast.jet.aggregate.AggregateOperations;
    import com.hazelcast.jet.pipeline.Pipeline;
    import com.hazelcast.jet.pipeline.Sinks;
    import com.hazelcast.jet.pipeline.Sources;

    import java.io.Serializable;

    /**
     * @author dalong
     */
    public class MyPipe2 implements Serializable {

        Pipeline buildPipeline(String path) {
            Pipeline p = Pipeline.create();
            p.readFrom(Sources.files(path))
                    .flatMap(line -> Traversers.traverseArray(line.toLowerCase().split("\\W+")))
                    .filter(word -> !word.isEmpty())
                    .groupingKey(word -> word)
                    .aggregate(AggregateOperations.counting())
                    .writeTo(Sinks.logger());
            return p;
        }
    }
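To make the batch pipeline easier to follow, here is a single-JVM sketch of the same word-count logic written with `java.util.stream`. It is an illustration only and does not use Jet; the class name `WordCountSketch` and the sample lines are made up. It also shows why the `filter(word -> !word.isEmpty())` step is there: `split("\\W+")` yields a leading empty string when a line starts with a non-word character.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Single-JVM word count mirroring the pipeline stages; not Hazelcast Jet code.
class WordCountSketch {

    static Map<String, Long> countWords(List<String> lines) {
        return lines.stream()
                // flatMap stage: split each line on runs of non-word characters
                .flatMap(line -> Arrays.stream(line.toLowerCase().split("\\W+")))
                // filter stage: drop the empty token produced by a leading delimiter
                .filter(word -> !word.isEmpty())
                // groupingKey + counting: one count per distinct word, sorted by word
                .collect(Collectors.groupingBy(word -> word, TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("Hello, world!", "...hello again");
        System.out.println(countWords(lines)); // prints {again=1, hello=2, world=1}
    }
}
```

The Jet version distributes the same steps across the cluster and writes each word/count entry to the member log through `Sinks.logger()` instead of returning a map.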

pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.dalong</groupId>
        <artifactId>myid</artifactId>
        <version>1.0-SNAPSHOT</version>
        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <encoding>UTF-8</encoding>
            <java.version>1.8</java.version>
            <maven.compiler.source>1.8</maven.compiler.source>
            <maven.compiler.target>1.8</maven.compiler.target>
        </properties>
        <dependencies>
            <dependency>
                <groupId>com.hazelcast.jet</groupId>
                <artifactId>hazelcast-jet</artifactId>
                <version>4.2</version>
            </dependency>
        </dependencies>
        <build>
            <!-- Maven Shade Plugin -->
            <finalName>hazelcast-jet-app</finalName>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-shade-plugin</artifactId>
                    <version>2.3</version>
                    <executions>
                        <!-- Run shade goal on package phase -->
                        <execution>
                            <phase>package</phase>
                            <goals>
                                <goal>shade</goal>
                            </goals>
                            <configuration>
                                <transformers>
                                    <!-- add Main-Class to manifest file -->
                                    <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                        <mainClass>com.dalong.Application</mainClass>
                                    </transformer>
                                </transformers>
                            </configuration>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>
    </project>

Note that when the job is submitted, the class that builds the pipeline has to be added to the JobConfig:

    JobConfig jobConfig = new JobConfig();
    jobConfig.addClass(MyPipe.class);
    jet.newJob(mypipe.buildPipeline(path), jobConfig).join();

Otherwise the job fails with an error like this:

    Exception in thread "main" com.hazelcast.nio.serialization.HazelcastSerializationException: Error deserializing vertex 'fused(flat-map, filter)': com.hazelcast.nio.serialization.HazelcastSerializationException: java.lang.ClassNotFoundException: com.dalong.Application. Add it using JobConfig or start all members with it on classpath
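One plausible reading of this `ClassNotFoundException`: the lambdas in the pipeline are serialized on the client and deserialized on the Jet member, and a serialized Java lambda records the class in which it was defined. Deserializing it requires that class to be loadable, which is why it has to be shipped with the job or placed on the member classpath. The sketch below is plain Java with no Jet involved; `LambdaCaptureSketch`, `SerializableFunction`, `sampleLambda` and `capturingClassOf` are hypothetical names. It uses the JDK's `java.lang.invoke.SerializedLambda` to show which class a serializable lambda refers to.

```java
import java.io.Serializable;
import java.lang.invoke.SerializedLambda;
import java.lang.reflect.Method;
import java.util.function.Function;

// Shows that a serializable lambda carries a reference to its defining class.
class LambdaCaptureSketch {

    // A functional interface that is also Serializable (illustrative only).
    interface SerializableFunction<T, R> extends Function<T, R>, Serializable {}

    // A lambda defined inside this class, similar to the ones in a pipeline class.
    static SerializableFunction<String, String> sampleLambda() {
        return line -> line.toLowerCase();
    }

    // Returns the name of the class in which the given serializable lambda was defined.
    static String capturingClassOf(Serializable lambda) {
        try {
            // Serializable lambdas get a synthetic writeReplace() that returns a SerializedLambda.
            Method writeReplace = lambda.getClass().getDeclaredMethod("writeReplace");
            writeReplace.setAccessible(true);
            SerializedLambda serialized = (SerializedLambda) writeReplace.invoke(lambda);
            return serialized.getCapturingClass().replace('/', '.');
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("not a serializable lambda", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(capturingClassOf(sampleLambda())); // prints LambdaCaptureSketch
    }
}
```

In the demo, the lambdas live in `MyPipe` and `MyPipe2`, so those are the classes passed to `jobConfig.addClass(...)`.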

Start the Jet member and the Management Center first, then the application:

    docker-compose up -d jet manage
    docker-compose up -d app

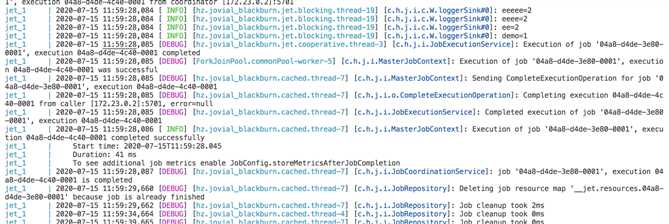

Result

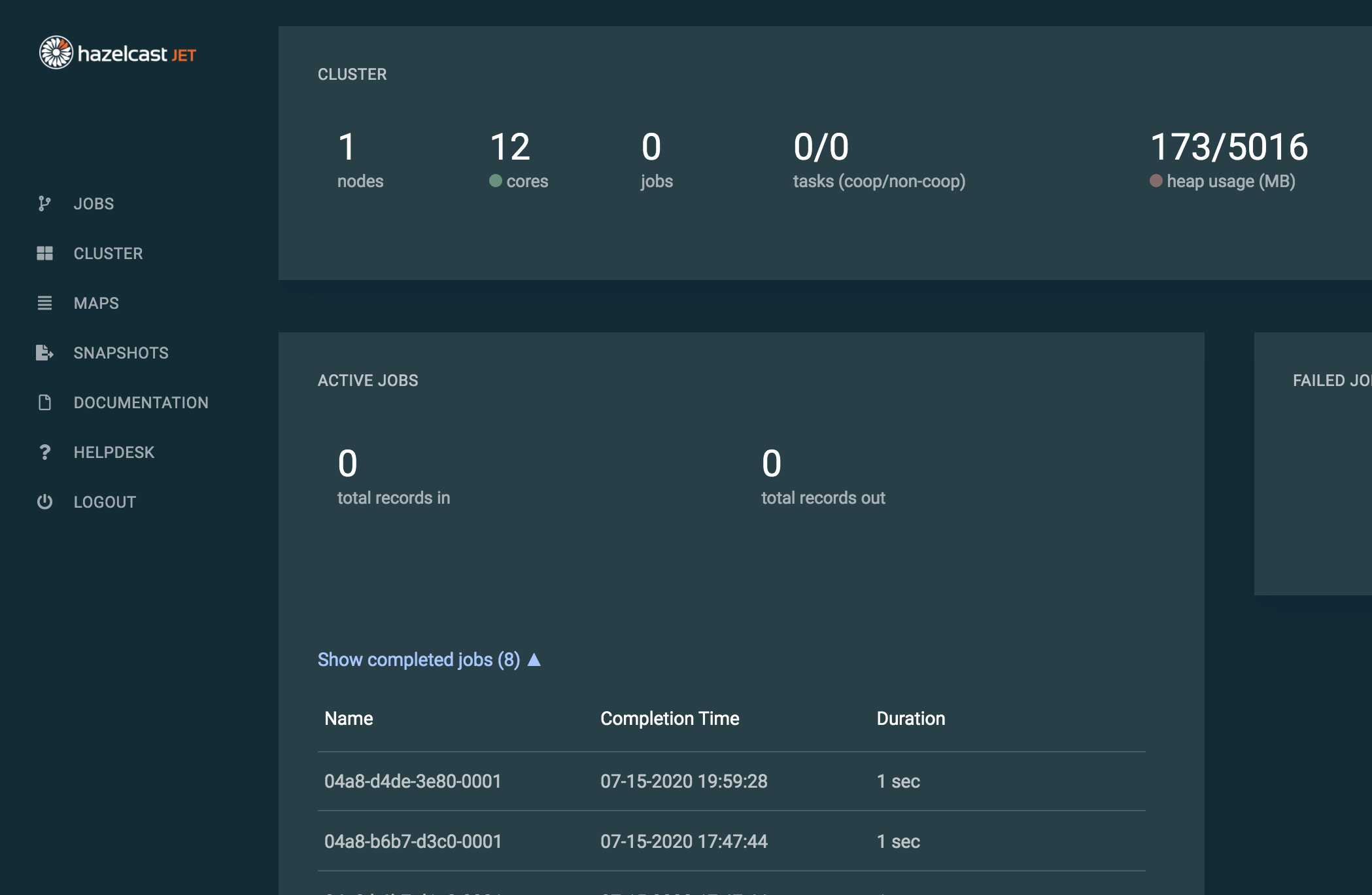

UI

https://github.com/rongfengliang/hazelcast-jet-docker-compose-learning

https://github.com/hazelcast/hazelcast-jet

https://jet-start.sh/


Original article: https://www.cnblogs.com/rongfengliang/p/13307698.html