
Embodiments of this invention relate to RDMA (remote direct memory access) data transfer in a virtual environment.

Traditional RDMA allows data to move directly from the memory of one computer into that of another without involving either one's CPU (central processing unit), and specifically, either one's operating system. This permits high-throughput, low-latency networking by eliminating the need to copy data between application memory and the data buffers in the operating system.

A virtual computing environment refers to a computer system in which a single physical machine may be observed as multiple virtual machines, and where a set of physical hardware resources can be used as multiple virtual resources. Each virtual machine can run its own operating system that may control the set of virtual hardware resources.

Typically, data transfer between two virtual machines passes through a virtual network device. However, virtualization of certain devices may introduce considerable overhead.

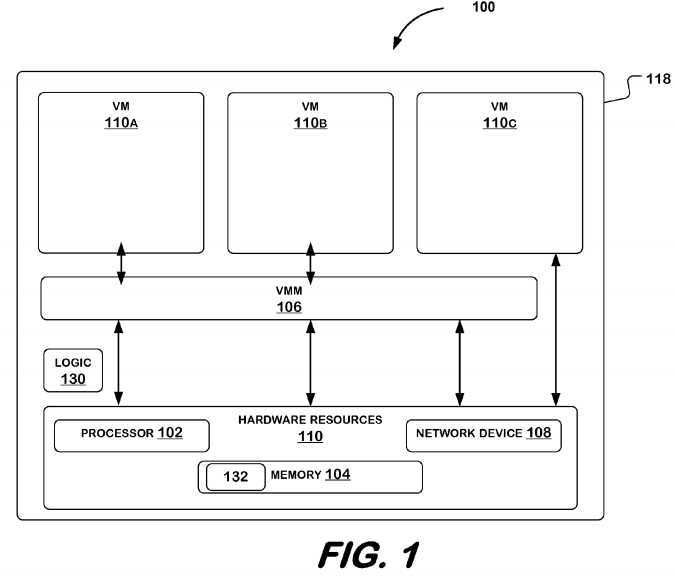

FIG. 1 illustrates a computer system 100 that supports a virtual environment. System 100 may comprise virtual machines 110A, 110B, 110C, virtual machine monitor (VMM) 106, hardware resources 110, and logic 130. Logic 130 may comprise hardware, software, or a combination of hardware and software (e.g., firmware). For example, logic 130 may comprise circuitry (i.e., one or more circuits) to perform operations described herein. For example, logic 130 may comprise one or more digital circuits, one or more analog circuits, one or more state machines, programmable logic, and/or one or more ASICs (Application-Specific Integrated Circuits). Logic 130 may be hardwired to perform the one or more operations. Alternatively or additionally, logic 130 may be embodied in firmware, in machine-executable instructions 132 stored in a memory, such as memory 104, to perform these operations, or in various other components of system 100. Logic 130 may be used to perform various functions by various components as described herein.

Hardware resources 110 refer to physical hardware components associated with system 100 including, but not limited to, a processor 102, such as a CPU (central processing unit), memory 104, and network device 108. Processor 102, host memory 104, and network device 108 may be comprised in a single circuit board, such as, for example, a system motherboard 118. Alternatively, network device 108 may be comprised in a circuit card that may be inserted into a circuit card slot.

Processor 102 may comprise processing circuitry to carry out computational tasks of system 100. For example, processor 102 may include a CPU such as, for example, the Intel® Pentium® family of processors, or Intel® Xeon® processor, both commercially available from Intel® Corporation. Of course, alternatively, processor 102 may comprise another type of processor, such as, for example, a microprocessor that is manufactured and/or commercially available from Intel® Corporation, or a source other than Intel® Corporation, without departing from embodiments of the invention.

Network device 108 as referred to herein relates to a device which may be coupled to a data transmission medium to transmit data to or receive data from other devices coupled to the data transmission medium. For example, network device 108 may be designed to transmit data to or receive data from devices coupled to a network such as a local area network. Such a network device may communicate with other devices according to any one of several data communication formats such as, for example, communication formats according to versions of IEEE Std. 802.3 (Ethernet), IEEE Std. 802.11, IEEE Std. 802.16, Universal Serial Bus, Firewire, asynchronous transfer mode (ATM), synchronous optical network (SONET) or synchronous digital hierarchy (SDH) standards.

Memory 104 may store machine-executable instructions 132 that are capable of being executed, and/or data capable of being accessed, operated upon, and/or manipulated by logic, such as logic 130, and/or processor, such as processor 102. The execution of program instructions 132 and/or the accessing, operation upon, and/or manipulation of this data by logic 130, for example, may result in system 100 and/or logic 130 carrying out some or all of the operations described herein. Memory 104 may, for example, comprise read only, mass storage, random access computer-accessible memory, and/or one or more other types of machine-accessible memories. This may include, for example, DRAM (dynamic random access memory) or SRAM (static random access memory), but embodiments of the invention are not so limited.

System 100 may comprise a plurality of virtual machines 110A, 110B, 110C. While only three are shown, system 100 may comprise more or fewer virtual machines than those illustrated. One or more virtual machines 110A, 110B, 110C may each include hardware resources 110. Hardware resources 110 may be virtualized, meaning that a single physical hardware resource 110 may be partitioned into multiple virtual hardware resources to enable system 100 to use the single physical hardware resource 110 in multiple virtual machines 110A, 110B, 110C. Virtualization may be implemented using VMM 106. In an embodiment, VMM 106 comprises software that imposes a virtualization layer in system 100 in which hardware resources 110 may be virtualized into virtual machines 110A, 110B, 110C.

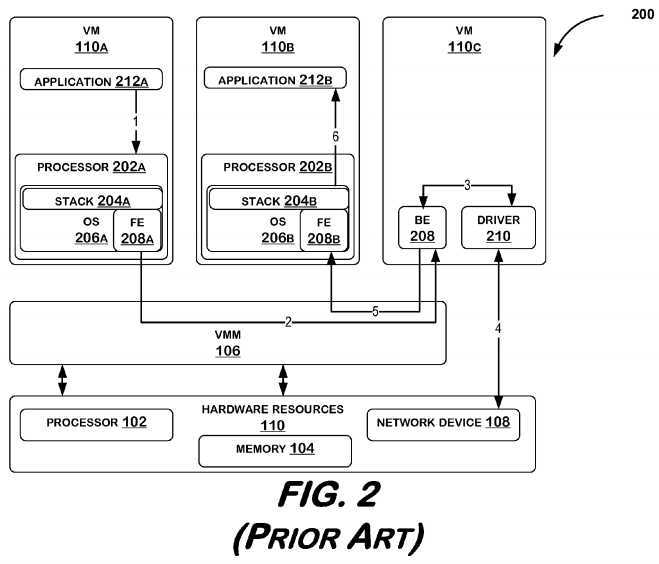

FIG. 2 illustrates a conventional virtual environment system 200. In this conventional system 200, data is typically transferred between, for example, virtual machine 110A and virtual machine 110B, by using a virtual network device, such as virtual machine 110C. Virtual machines 110A, 110B may each comprise application 212A, 212B, and virtual processor 202A, 202B. Processors 202A, 202B may each execute operating system 206A, 206B, where a network protocol stack 204A, 204B may be executed. Operating system 206A, 206B may also include a front end driver 208A, 208B to assist in the transfer of data. Virtual machine 110C may be used as a virtual network device to further assist in the transfer of data. Virtual machine 110C may include a back end driver 208 that interfaces with device driver 210, which in turn communicates with network device 108.

In conventional system 200, application 212A on virtual machine 110A may initiate data transfer by generating a message, and passing the message to network protocol stack 204A executed by operating system 206A of processor 202A (1). Once the message is passed through network stack 204A, front end driver 208A may transmit the message to back end driver 208 on virtual machine 110C (2). Back end driver 208 may communicate the message to actual network device driver 210 (3). Network device driver 210 may then communicate the message to network device 108 (4), where network device 108 may determine the destination address of the message. Upon determining that the message is to be forwarded to virtual machine 110B, network device 108 may pass the message back to device driver 210 (4), where device driver 210 may transmit it to back end driver 208 (3). Back end driver 208 may then transmit the message to front end driver 208B on virtual machine 110B (destination) (5), where the message is passed up through network protocol stack 204B, and finally to application 212B.
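To make the overhead of this path concrete, the following is a minimal sketch that simply lists the components the message visits in FIG. 2, in the order described above, and counts the handoffs. The list and the helper `count_handoffs` are illustrative only; the labels reuse the reference numerals from the text and are not identifiers from any real system.

```python
# Components visited by one message in the conventional system of FIG. 2,
# in the order given in the description. Labels are illustrative only.
CONVENTIONAL_PATH = [
    "application 212A",
    "network protocol stack 204A",
    "front end driver 208A",
    "back end driver 208",
    "device driver 210",
    "network device 108",
    "device driver 210",
    "back end driver 208",
    "front end driver 208B",
    "network protocol stack 204B",
    "application 212B",
]


def count_handoffs(path):
    """Return how many times the message passes from one component to the next."""
    return len(path) - 1


if __name__ == "__main__":
    print(f"handoffs on the conventional path: {count_handoffs(CONVENTIONAL_PATH)}")
```

Each handoff in such a chain is a point where the message may be copied or queued, which is the overhead the embodiments described next aim to avoid.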

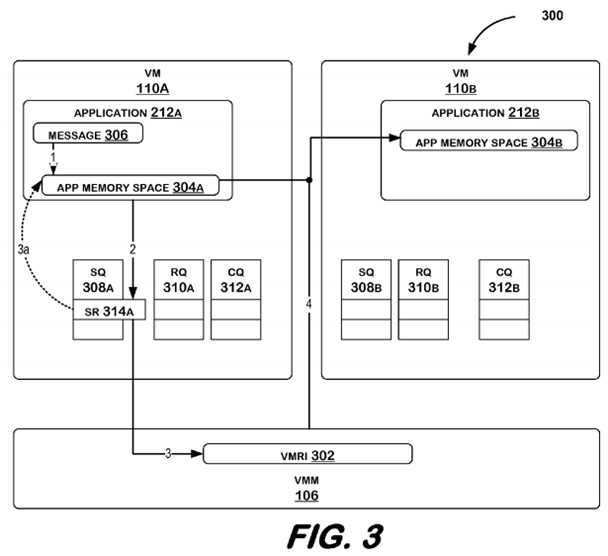

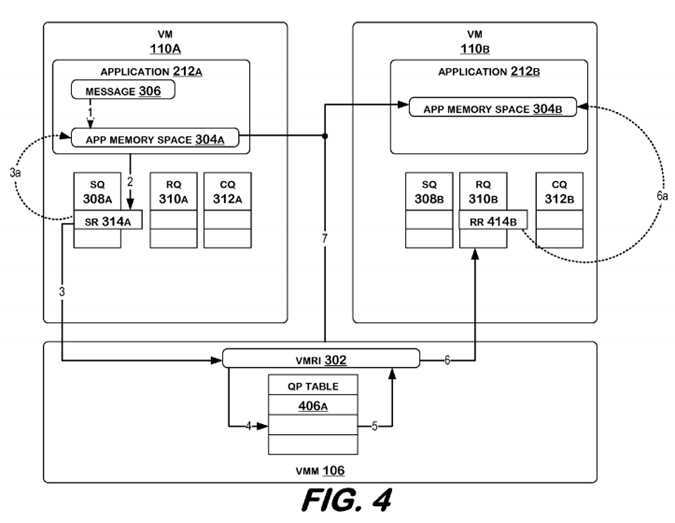

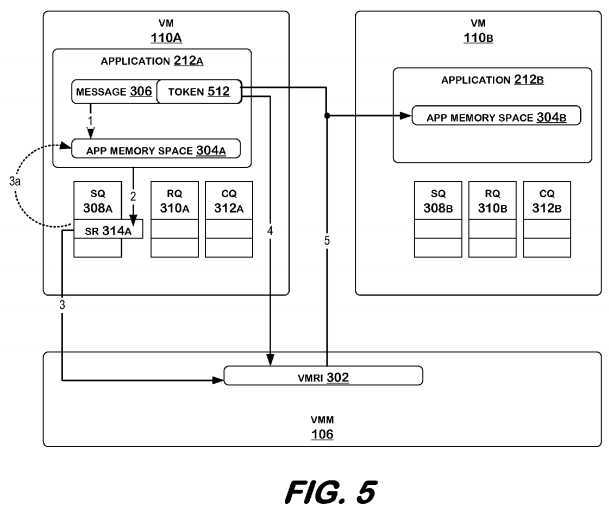

FIG. 3 illustrates a system in accordance with embodiments of the invention. FIG. 4 illustrates the system according to one embodiment, and FIG. 5 illustrates the system according to another embodiment.

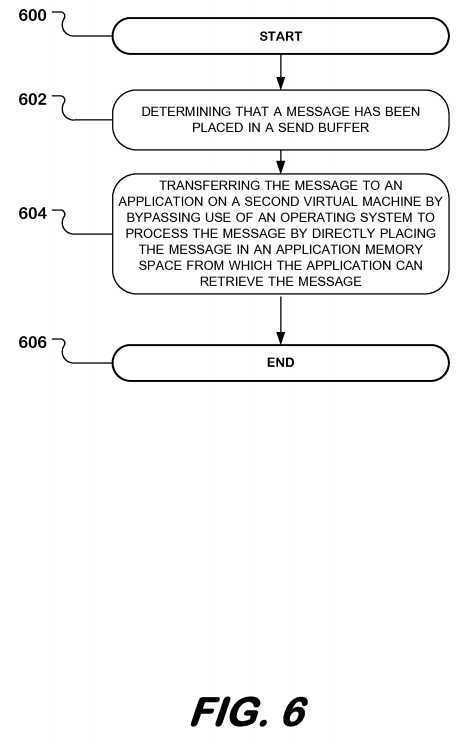

FIG. 6 is a flowchart illustrating a method according to an embodiment. The method begins at block 600 and continues to block 602, where the method may comprise determining that a message has been placed in a send buffer.

Referring back to FIG. 3, source application 212A may generate a message 306, and may store message 306 (1) in a send buffer of an application memory space 304A. A message may comprise data and control information to indicate, for example, what to do with the data. Application memory space 304A may be comprised in, for example, an SRAM memory, or a DRAM memory, such as memory 104. Application 212A may generate send request 314A, and post send request 314A to send queue 308A (2). Send request 314A may include information about message 306, such as an address at which message 306 is stored, and may also include information about the send request 314A itself, such as the request type (i.e., SEND), and the length of the request.
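Steps (1) and (2) can be sketched as follows. This is a minimal illustration, not the patented implementation: the application memory space is modeled as a `bytearray`, the send queue as a `deque`, and the names `SendRequest` and `store_and_post` are hypothetical. The `length` field here records the message length, which is an assumption about what the request carries.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class SendRequest:
    """Hypothetical layout of a send request; field names are assumptions."""
    buffer_address: int          # where the message is stored in application memory
    length: int                  # assumed here to be the message length in bytes
    request_type: str = "SEND"   # request type named in the text


def store_and_post(memory, send_queue, address, message):
    """Store the message in the send buffer (1), then post a send request (2)."""
    end = address + len(message)
    if address < 0 or end > len(memory):
        raise ValueError("send buffer lies outside the application memory space")
    memory[address:end] = message                      # step (1)
    request = SendRequest(buffer_address=address, length=len(message))
    send_queue.append(request)                         # step (2)
    return request


if __name__ == "__main__":
    app_memory_304a = bytearray(64)   # stand-in for application memory space 304A
    send_queue_308a = deque()         # stand-in for send queue 308A
    print(store_and_post(app_memory_304a, send_queue_308a, 8, b"hello"))
```

Note that the request carries only a reference to the message, so posting it does not copy the message itself.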

In an embodiment, VMRI (virtual machine RDMA interface) 302 may detect that a send request 314A has been posted to send queue 308A, indicating to VMRI 302 that a message 306 has been placed in a send buffer of application memory space 304A. VMRI 302 refers to an interface that may be called by operating systems 206A, 206B of virtual machines 110A, 110B. VMRI 302 may be implemented in any combination of software and hardware. In an embodiment, VMRI 302 is presented as a service of VMM 106. VMRI 302 may be based, at least in part, on RDMA semantics as defined by any of several RDMA-based protocols. An RDMA-based protocol may comprise, for example, Virtual Interface Architecture (VIA), Version 1.0, Dec. 16, 1997; InfiniBand™ Architecture (IBA), Volume 2, Release 1.2.1, October 2006; or Remote Direct Data Placement (RDDP), RFC 4296, December 2005. Other volumes and/or versions of these specifications, as well as other protocols, may be used without departing from embodiments of the invention.

When source application 212A wishes to send data to destination application 212B, source application 212A may perform one or more operations that result in operating system 206A calling VMRI 302. When VMRI 302 is called, it may set up queue pairs between the source application 212A and the destination application 212B, including send queues 308A, 308B, receive queues 310A, 310B, and completion queues 312A, 312B. The queue pairs are set up such that each send queue 308A, 308B has a corresponding receive queue 310A, 310B, and a corresponding completion queue 312A, 312B. For example, send queue 308A may correspond with receive queue 310B (and send queue 308B may correspond to receive queue 310A). These are described in more detail below.
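The queue pair setup described above might look like the following sketch. The names `Queues`, `set_up_queue_pairs`, and `receive_queue_for` are hypothetical, and keying the correspondence table by application name is an assumption made for brevity; the text only says that each send queue has a corresponding receive queue on the peer.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Queues:
    """Send, receive, and completion queues owned by one application."""
    send: deque = field(default_factory=deque)
    receive: deque = field(default_factory=deque)
    completion: deque = field(default_factory=deque)


def set_up_queue_pairs(source_name, destination_name):
    """Create queues for both applications and record which peer each one maps to."""
    endpoints = {source_name: Queues(), destination_name: Queues()}
    # The send queue of each application corresponds to the receive queue of its peer.
    queue_pair_table = {source_name: destination_name, destination_name: source_name}
    return endpoints, queue_pair_table


def receive_queue_for(sender_name, endpoints, queue_pair_table):
    """Return the receive queue that corresponds to the sender's send queue."""
    return endpoints[queue_pair_table[sender_name]].receive


if __name__ == "__main__":
    endpoints, table = set_up_queue_pairs("212A", "212B")
    assert receive_queue_for("212A", endpoints, table) is endpoints["212B"].receive
```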

At block 604, the method may comprise transferring the message to an application on a second virtual machine by bypassing use of an operating system to process the message by directly placing the message in an application memory space from which the application can retrieve the message.

Referring to FIG. 3, message 306 may be transferred from virtual machine 110A to virtual machine 110B by bypassing use of either operating system 206A, 206B (not shown in FIG. 3 since they are not used) by directly placing message 306 in application memory space 304B from which application 212B may retrieve message 306. Processing of a message by an operating system may include, but is not limited to, for example, passing the message through a network protocol stack, and copying the message to one or more intermediate (not source or destination) buffers. VMRI 302 may read send request 314A from send queue 308A (3), and retrieve message 306 from the send buffer in application memory space 304A as indicated by send request 314A (3a: send request 314A references the send buffer in application memory space 304A). VMRI 302 may then directly transfer message 306 to application memory space 304B of destination application 212B (4).

In an embodiment, as illustrated in FIG. 4, directly placing message 306 in an application memory space may comprise VMRI 302 determining a corresponding receive queue 310B from which a receive buffer in application memory space 304B may be determined, and copying message 306 from the send buffer to the receive buffer. VMRI 302 may do this by reading the send buffer address from send request 314A (3, 4), and using a queue pair table 406A to match send queue 308A to receive queue 310B. Queue pair table 406A may be initiated when VMRI 302 is called by, for example, operating system 206A. Corresponding receive queue 310B may be returned to VMRI 302 (5). VMRI 302 may then access receive queue 310B (6). Receive queue 310B may include receive request 414B, which includes a reference to the receive buffer address (6a). Receive request 414B may be placed in receive queue 310B by application 212B when, for example, it expects other messages to arrive from other computer systems on the network. After determining the receive buffer address, VMRI 302 may directly transfer message 306 to application memory space 304B (7) by transferring message 306 from the send buffer in application memory space 304A to the receive buffer in application memory space 304B. In an embodiment, VMRI 302 may update receive request 414B with the status of the transfer, and application 212B may check the status of receive request 414B to determine if data has arrived.
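The FIG. 4 flow can be sketched as below, with both application memory spaces modeled as `bytearray` objects so that the transfer is a single copy with no intermediate buffer. All names (`SendRequest`, `ReceiveRequest`, `transfer_via_queue_pair`), the `capacity` field, and the status strings are assumptions for illustration; the source does not define these structures.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class SendRequest:
    buffer_address: int   # send buffer address in the source memory space
    length: int           # assumed message length in bytes


@dataclass
class ReceiveRequest:
    buffer_address: int   # receive buffer address in the destination memory space
    capacity: int         # hypothetical field: size of the posted receive buffer
    status: str = "PENDING"
    received_length: int = 0


def transfer_via_queue_pair(send_queue_name, queues, queue_pair_table,
                            source_memory, destination_memory):
    """Copy one message from the send buffer straight into the receive buffer."""
    send_request = queues[send_queue_name].popleft()            # read send request
    receive_queue_name = queue_pair_table[send_queue_name]      # table lookup
    receive_request = queues[receive_queue_name].popleft()      # access receive queue
    if send_request.length > receive_request.capacity:
        receive_request.status = "ERROR"
        raise ValueError("message does not fit in the posted receive buffer")
    start = send_request.buffer_address
    message = source_memory[start:start + send_request.length]
    target = receive_request.buffer_address
    destination_memory[target:target + len(message)] = message  # direct placement
    receive_request.status = "COMPLETE"                         # status update
    receive_request.received_length = len(message)
    return receive_request


if __name__ == "__main__":
    memory_304a, memory_304b = bytearray(32), bytearray(32)
    memory_304a[4:9] = b"hello"
    queues = {"308A": deque([SendRequest(4, 5)]),
              "310B": deque([ReceiveRequest(16, 8)])}
    done = transfer_via_queue_pair("308A", queues, {"308A": "310B"},
                                   memory_304a, memory_304b)
    print(done.status, bytes(memory_304b[16:21]))
```

In this sketch an empty receive queue raises `IndexError`; how a real interface would handle a send with no posted receive request is not specified in the source.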

In another embodiment, as illustrated in FIG. 5, directly placing message 306 in an application memory space may comprise VMRI 302 generating from token 512 a receive buffer address in application memory space 304B, and copying message 306 from the send buffer in application memory space 304A to the receive buffer in application memory space 304B. In this embodiment, message 306 may include a token 512. Token 512 may uniquely identify a registered memory region on a specific computer, and may further be unique for all nodes on the network. Token 512 may be generated when application 212B registers a memory region on virtual machine 110B to allow memory operations (such as RDMA) to/from that memory region. Token 512 may then be passed to application 212A. In this embodiment, VMRI 302 may obtain message 306 with token 512 from application memory space 304A at the send buffer address, extract token 512, and may generate from the token a receive buffer address in application memory space 304B (4). Message 306 may then be copied from the send buffer in application memory space 304A to the receive buffer in application memory space 304B (5).
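A minimal sketch of the token-based variant follows. The wire layout (a 4-byte big-endian token followed by the data), the `RegionRegistry` class, and the choice to copy only the data that follows the token are all assumptions made for illustration; the source does not specify how the token is encoded or how the address is derived from it.

```python
import struct
from itertools import count

TOKEN_FORMAT = ">I"                          # assumed encoding: 4-byte big-endian
TOKEN_SIZE = struct.calcsize(TOKEN_FORMAT)


class RegionRegistry:
    """Hypothetical registry mapping tokens to registered memory regions."""

    def __init__(self):
        self._next_token = count(1)
        self._regions = {}

    def register(self, base_address, length):
        """Register a region and return the token that identifies it."""
        token = next(self._next_token)
        self._regions[token] = (base_address, length)
        return token

    def resolve(self, token):
        """Return (base_address, length) for a registered token."""
        return self._regions[token]


def build_message(token, data):
    """Prefix the data with the token, as the sending application might."""
    return struct.pack(TOKEN_FORMAT, token) + data


def transfer_with_token(registry, source_memory, send_address, message_length,
                        destination_memory):
    """Extract the token, derive the receive buffer address, and copy the data."""
    message = bytes(source_memory[send_address:send_address + message_length])
    (token,) = struct.unpack_from(TOKEN_FORMAT, message)
    data = message[TOKEN_SIZE:]
    base_address, region_length = registry.resolve(token)
    if len(data) > region_length:
        raise ValueError("data does not fit in the registered memory region")
    destination_memory[base_address:base_address + len(data)] = data
    return base_address


if __name__ == "__main__":
    registry = RegionRegistry()
    memory_304a, memory_304b = bytearray(32), bytearray(32)
    token = registry.register(base_address=8, length=16)   # done by application 212B
    message = build_message(token, b"payload")             # done by application 212A
    memory_304a[2:2 + len(message)] = message
    transfer_with_token(registry, memory_304a, 2, len(message), memory_304b)
    print(bytes(memory_304b[8:15]))
```

Compared with the queue pair lookup of FIG. 4, this variant needs no posted receive request, since the destination is identified by the token carried in the message.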

In an embodiment, completion queue 312A may be updated. In an embodiment, completion queue 312A may be updated when message 306 is actually transferred to application memory space 304B of destination application 212B. In another embodiment, completion queue 312A may be updated when message 306 is transferred from application memory space 304A of source application 212A.
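The two completion policies differ only in when the completion entry is posted relative to the copy, which the following sketch makes explicit by recording the order of events. The policy names, the dictionary used as a completion entry, and the function `transfer_with_completion` are hypothetical.

```python
from collections import deque

ON_DELIVERY = "on_delivery"         # complete once the message is in the destination
ON_SOURCE_READ = "on_source_read"   # complete once the message has left the source


def transfer_with_completion(source_buffer, destination_buffer,
                             completion_queue, policy):
    """Copy the message and post a completion entry at the point the policy names."""
    if policy not in (ON_DELIVERY, ON_SOURCE_READ):
        raise ValueError(f"unknown completion policy: {policy}")
    events = []
    message = bytes(source_buffer)
    events.append("read from source")
    if policy == ON_SOURCE_READ:
        completion_queue.append({"status": "COMPLETE", "length": len(message)})
        events.append("completion posted")
    destination_buffer[:len(message)] = message
    events.append("placed at destination")
    if policy == ON_DELIVERY:
        completion_queue.append({"status": "COMPLETE", "length": len(message)})
        events.append("completion posted")
    return events


if __name__ == "__main__":
    for policy in (ON_DELIVERY, ON_SOURCE_READ):
        completion_queue_312a = deque()
        print(policy, transfer_with_completion(b"hi", bytearray(8),
                                               completion_queue_312a, policy))
```

Posting on source read lets the sender reuse its buffer sooner, while posting on delivery tells the sender that the destination actually holds the data; the source presents both as alternative embodiments without preferring one.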

The method may end at block 606.

Conclusion

Therefore, in an embodiment, a method may comprise determining that a message has been placed in a send buffer; and transferring the message to an application on a second virtual machine by bypassing use of an operating system to process the message by directly placing the message in an application memory space from which the application can retrieve the message.

Embodiments of the invention utilize an RDMA-based protocol to transfer data without involving the operating system or host processor. Such direct copying of data from one application memory space to another application memory space eliminates the need for intermediate buffering, which may both introduce latency and limit bandwidth.

SRC=http://www.freepatentsonline.com/8707331.html


PatentTips – RDMA data transfer in a virtual environment


Original article: http://www.cnblogs.com/coryxie/p/3790144.html